Integrating Harness with Dynatrace enables automated verification of application performance across deployment pipelines, reducing manual verification time from 60 minutes to 15 minutes per deployment and enabling automatic rollback in case of performance regressions.

These days, it's not uncommon for customers to use Application Performance Monitoring (APM) software across their dev, QA and production environments.

A few weeks ago, a large financial services (FS) enterprise customer evaluated Harness for Continuous Delivery (CD) and said: "we need Dynatrace support asap."

So, a few weeks later and thanks to the power of APIs, here we are.

Why Harness + Dynatrace?

Harness helps customers master CD so they can deploy new applications and services into production with speed and confidence.

Dynatrace helps customers manage the performance of these new applications and services.

Customers typically have one or more deployment pipelines for each application or service. A typical pipeline has several stages that reflect the environments (e.g. dev, QA, staging, production) used to test and validate code before it is deployed to production.

Integrating Harness with Dynatrace now allows customers to automatically verify application performance across their deployment pipelines.

A Simple Deployment Pipeline Example

Let's imagine we have a Docker microservice called 'My Microservice', and our Continuous Integration (CI) tool, Jenkins, creates a new build (#101) that produces a new artifact version for us.

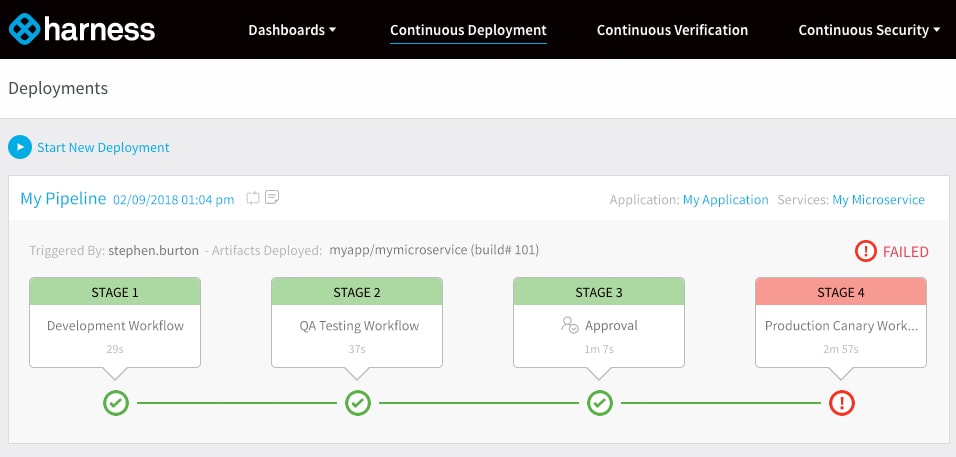

With Harness, we could pick up that new version and immediately trigger a 4-stage deployment pipeline like this:

Each pipeline stage deploys our new microservice to a different environment, runs a few tests, and verifies that everything is good before proceeding to the next stage.

You can see from the above screenshot that stages 1 through 3 (dev/QA) succeeded and turned green. That's good news: it means the artifact passed all tests and is ready for production and customers.

The bad news is that stage 4 (production) turned red, and our pipeline status is 'failed'.
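The stage-by-stage gating described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not how Harness implements pipelines: each hypothetical `Stage` collapses deploy, test and verify into a single callable that returns `True` on success, and the pipeline stops at the first stage that fails.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    # Hypothetical: deploy + test + verify collapsed into one callable; True means healthy
    run: Callable[[], bool]


def run_pipeline(stages: List[Stage]) -> Tuple[str, List[str]]:
    """Run stages in order and stop at the first failure."""
    passed: List[str] = []
    for stage in stages:
        if not stage.run():
            # Later stages never run once a stage fails
            return "failed", passed
        passed.append(stage.name)
    return "succeeded", passed


# Mirror the example: three dev/QA stages pass, production fails
stages = [
    Stage("dev", lambda: True),
    Stage("qa-1", lambda: True),
    Stage("qa-2", lambda: True),
    Stage("production", lambda: False),
]
status, passed = run_pipeline(stages)
print(status, passed)  # failed ['dev', 'qa-1', 'qa-2']
```

The stage names are placeholders; the point is only that a red stage halts promotion, which is why our artifact never reached customers.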

Automating Deployment Health Checks With Harness

At Harness, we take CD one step further with something called Continuous Verification.

One of our early customers, Build.com, used to verify production deployments by having 5-6 team leads manually analyze monitoring data and log files. Each team lead spent 60 minutes on this, three times a week. With six leads, that's 1,080 minutes, or 18 hours, of team lead time spent on verification every week. With Harness, Build.com reduced verification time to just 15 minutes and enabled automatic rollback in production.
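The weekly total in the Build.com example is a straight multiplication. The small helper below is purely illustrative; it shows that the 1,080-minute figure corresponds to the upper end of the 5-6 team lead range, while five leads would come to 900 minutes.

```python
def weekly_verification_minutes(team_leads: int, minutes_each: int, runs_per_week: int) -> int:
    """Total team-lead minutes spent on manual verification per week."""
    return team_leads * minutes_each * runs_per_week


upper = weekly_verification_minutes(6, 60, 3)  # six team leads
lower = weekly_verification_minutes(5, 60, 3)  # five team leads
print(upper, upper / 60)  # 1080 18.0
print(lower, lower / 60)  # 900 15.0
```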

With Dynatrace, Harness can deploy artifacts and verify their performance in every environment. As soon as a new artifact is deployed, Harness automatically connects to Dynatrace and starts analyzing the application/service performance data to understand the real business impact of each deployment.

Harness applies unsupervised machine learning (Hidden Markov Models and Symbolic Aggregate approXimation, or SAX) to determine whether performance deviated for key business transactions, and flags any performance regressions accordingly.
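To give a feel for the symbolic half of that approach, here is a minimal sketch of SAX (Symbolic Aggregate approXimation) using only Python's standard library. It is a textbook version of the technique, not Harness's model, and it leaves out the Hidden Markov Model part entirely. The series is z-normalized, averaged into equal-width segments (Piecewise Aggregate Approximation), and each segment mean is mapped to a letter using breakpoints that split the standard normal distribution into equally likely regions.

```python
from statistics import NormalDist, mean, pstdev
from typing import List

ALPHABET = "abcdefghijklmnopqrstuvwxyz"


def znormalize(series: List[float]) -> List[float]:
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu, sigma = mean(series), pstdev(series)
    if sigma == 0:
        return [0.0] * len(series)  # a flat series carries no shape
    return [(x - mu) / sigma for x in series]


def paa(series: List[float], segments: int) -> List[float]:
    """Piecewise Aggregate Approximation: mean of each equal-width chunk."""
    if len(series) % segments != 0:
        raise ValueError("this sketch assumes len(series) is a multiple of segments")
    width = len(series) // segments
    return [mean(series[i * width:(i + 1) * width]) for i in range(segments)]


def sax(series: List[float], segments: int, alphabet_size: int) -> str:
    """Convert a numeric series into a SAX word of length `segments`."""
    if not 2 <= alphabet_size <= len(ALPHABET):
        raise ValueError("alphabet_size must be between 2 and 26")
    # Breakpoints give equiprobable regions under N(0, 1)
    breakpoints = [NormalDist().inv_cdf(i / alphabet_size) for i in range(1, alphabet_size)]
    word = []
    for value in paa(znormalize(series), segments):
        index = sum(value > b for b in breakpoints)  # how many breakpoints lie below value
        word.append(ALPHABET[index])
    return "".join(word)


# Simulated response times (ms): four samples before a deployment, four after
response_times = [840, 850, 830, 845, 1320, 1330, 1340, 1335]
print(sax(response_times, segments=4, alphabet_size=3))  # aacc
```

The jump from low symbols ("aa") to high ones ("cc") is the kind of shape change a symbolic representation makes easy to compare across time windows; the sample values are made up for illustration.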

Let's take a look at what this verification looks like with Dynatrace.

Continuous Verification With Dynatrace

The deployment workflow below corresponds to the failed pipeline above, specifically stage 4 in production.

You can see that phase 1 of the canary deployment succeeded but phase 2 failed. The container upgrade itself succeeded, as did the Jenkins and ELK tests and verifications. However, the Dynatrace verification failed, which means new performance regressions were identified. The resulting action, in this case, was an automatic rollback (this is the safety net that Harness provides).
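The canary behaviour above (run each phase's steps in order and roll back as soon as any step fails) can be modelled with a small sketch. The step names mirror this example, but `run_canary` and the `rollback` callback are hypothetical and do not reflect how Harness executes workflows internally.

```python
from typing import Callable, Dict, List

Step = Callable[[], bool]  # returns True when the step succeeds


def run_canary(phases: List[Dict[str, Step]], rollback: Callable[[], None]) -> str:
    """Run each phase's steps in order; roll back on the first failing step."""
    for number, phase in enumerate(phases, start=1):
        for name, step in phase.items():  # dicts keep insertion order
            if not step():
                rollback()
                return f"phase {number} failed at {name}; rolled back"
    return "succeeded"


def passes() -> bool:
    return True


def regression_found() -> bool:
    return False  # simulate the failed Dynatrace verification


events: List[str] = []
phases = [
    {"upgrade containers": passes, "Jenkins tests": passes,
     "ELK verification": passes, "Dynatrace verification": passes},
    {"upgrade containers": passes, "Jenkins tests": passes,
     "ELK verification": passes, "Dynatrace verification": regression_found},
]
result = run_canary(phases, rollback=lambda: events.append("rollback"))
print(result)  # phase 2 failed at Dynatrace verification; rolled back
```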

Clicking the failed (red) Dynatrace step shows us why the verification failed:

We can see that one key business transaction, "requestLogin", has a response time regression after our new microservice version was deployed. Hovering over the red dot gives more detail: response time increased 58%, from 840 ms to 1,330 ms, post-deployment:
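The 58% figure is the relative change between the pre- and post-deployment response times. The sketch below reproduces it and adds a simple threshold check; the 20% `threshold_pct` is a hypothetical tolerance chosen for illustration, not a Harness default, and Harness's own analysis is the machine-learning approach described earlier rather than a fixed threshold.

```python
def percent_change(before_ms: float, after_ms: float) -> float:
    """Relative change from before_ms to after_ms, in percent."""
    return (after_ms - before_ms) / before_ms * 100


def is_regression(before_ms: float, after_ms: float, threshold_pct: float = 20.0) -> bool:
    # threshold_pct is a hypothetical tolerance for this sketch only
    return percent_change(before_ms, after_ms) > threshold_pct


change = percent_change(840, 1330)
print(round(change, 1))            # 58.3
print(is_regression(840, 1330))    # True
```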

The default time period for this verification is 15 minutes, but it can be customized. Some deployment pipelines and workflows take hours to complete because not everything is automated (manual checks, approvals, etc.).

Configuring Dynatrace Continuous Verification

Our integration was pretty simple to build using the standard Dynatrace API.

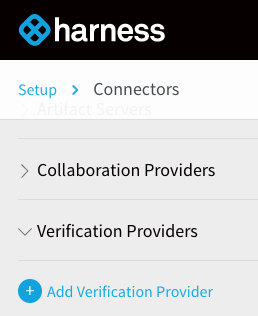

First, go to Setup > Connectors > Add New Verification Provider:

Then enter your Dynatrace URL and API Token details:

You can generate your API token from the Dynatrace settings menu:
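A connector like this needs only the two values entered above. As a minimal sketch using Python's standard library, an authenticated Dynatrace API request carries the token in an `Authorization: Api-Token <token>` header. The environment URL, token and endpoint path below are placeholders, not values from this post; check the Dynatrace API reference for the endpoint you actually need.

```python
from urllib.request import Request


def build_dynatrace_request(base_url: str, api_token: str, path: str) -> Request:
    """Build (but do not send) an authenticated GET request to a Dynatrace API endpoint."""
    url = base_url.rstrip("/") + "/" + path.lstrip("/")
    return Request(url, headers={"Authorization": f"Api-Token {api_token}"}, method="GET")


# Placeholder environment URL, token and path (assumptions for illustration)
req = build_dynatrace_request(
    "https://example.live.dynatrace.com/",
    "YOUR_API_TOKEN",
    "/api/v1/timeseries",
)
print(req.full_url)  # https://example.live.dynatrace.com/api/v1/timeseries
```

To actually send the request, you would pass it to `urllib.request.urlopen`; the sketch stops short of that so it runs without network access or a real token.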

Adding Dynatrace Verification To Deployment Workflows

First, add a new workflow in Harness or edit an existing one. Then click Add Verification and select Dynatrace from the menu.

Next, select your Dynatrace connector instance and add each service method (key business transaction) you want to verify, along with a verification time period (the default is 15 minutes).
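The settings in this step boil down to three fields. The dataclass below is a hypothetical model of them, not Harness's configuration format: a connector name (here the placeholder `dynatrace-prod`), the service methods to watch (`requestLogin` is taken from the example above), and a duration that defaults to 15 minutes and determines the analysis window after a deployment.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class DynatraceVerificationStep:
    connector: str                                              # hypothetical connector name
    service_methods: List[str] = field(default_factory=list)   # key business transactions
    duration_minutes: int = 15                                  # mirrors the 15-minute default

    def analysis_window(self, deployed_at: datetime) -> Tuple[datetime, datetime]:
        """Start and end of the period analyzed after a deployment."""
        return deployed_at, deployed_at + timedelta(minutes=self.duration_minutes)


step = DynatraceVerificationStep(connector="dynatrace-prod", service_methods=["requestLogin"])
start, end = step.analysis_window(datetime(2024, 1, 1, 12, 0))
print(end - start)  # 0:15:00
```

Raising `duration_minutes` is the code equivalent of customizing the period for pipelines with long manual checks or approvals.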

Note: We're working with the Dynatrace team to update their API so you can simply select an application or service name, and Harness will verify all of its associated key business transactions by default.

Click submit and your deployment workflow will now automatically verify application performance using Dynatrace.

More to Come

As you can probably imagine, there's a lot more we can do with Dynatrace. Here are a few things to expect in the future:

- Harness deployment markers for Dynatrace users (shifting right)

- Harness drill-down in context to Dynatrace business transactions (shifting right)

- Support for unstructured log event data from Dynatrace (similar to our Splunk, ELK and Sumo Logic support)

Special thanks to our customers, engineering team and Andreas Grabner from Dynatrace who helped create this support.

Cheers!

Steve.