Harness Blog

Featured Blogs

Today, Harness is announcing the General Availability of Artifact Registry, a milestone that marks more than a new product release. It represents a deliberate shift in how artifact management should work in secure software delivery.

For years, teams have accepted a strange reality: you build in one system, deploy in another, and manage artifacts somewhere else entirely. CI/CD pipelines run in one place, artifacts live in a third-party registry, and security scans happen downstream. When developers need to publish, pull, or debug an artifact, they leave their pipelines, log into another tool, and return to finish their work.

It works, but it’s fragmented, expensive, and increasingly difficult to govern and secure.

At Harness, we believe artifact management belongs inside the platform where software is built and delivered. That belief led to Harness Artifact Registry.

A Startup Inside Harness

Artifact Registry started as a small, high-ownership bet inside Harness: a dedicated team with a clear thesis that artifact management shouldn’t be a separate system developers have to leave their pipelines to use. We treated it like a seed startup inside the company, moving fast with direct customer feedback and a single-threaded leader driving the vision. The message from enterprise teams was consistent: they didn’t want to stitch together separate tools for artifact storage, open source dependency security, and vulnerability scanning.

So we built it that way.

In just over a year, Artifact Registry moved from concept to core product. What started with a single design partner expanded to double-digit enterprise customers pre-GA, the kind of pull-through adoption that signals we've identified a critical gap in the DevOps toolchain.

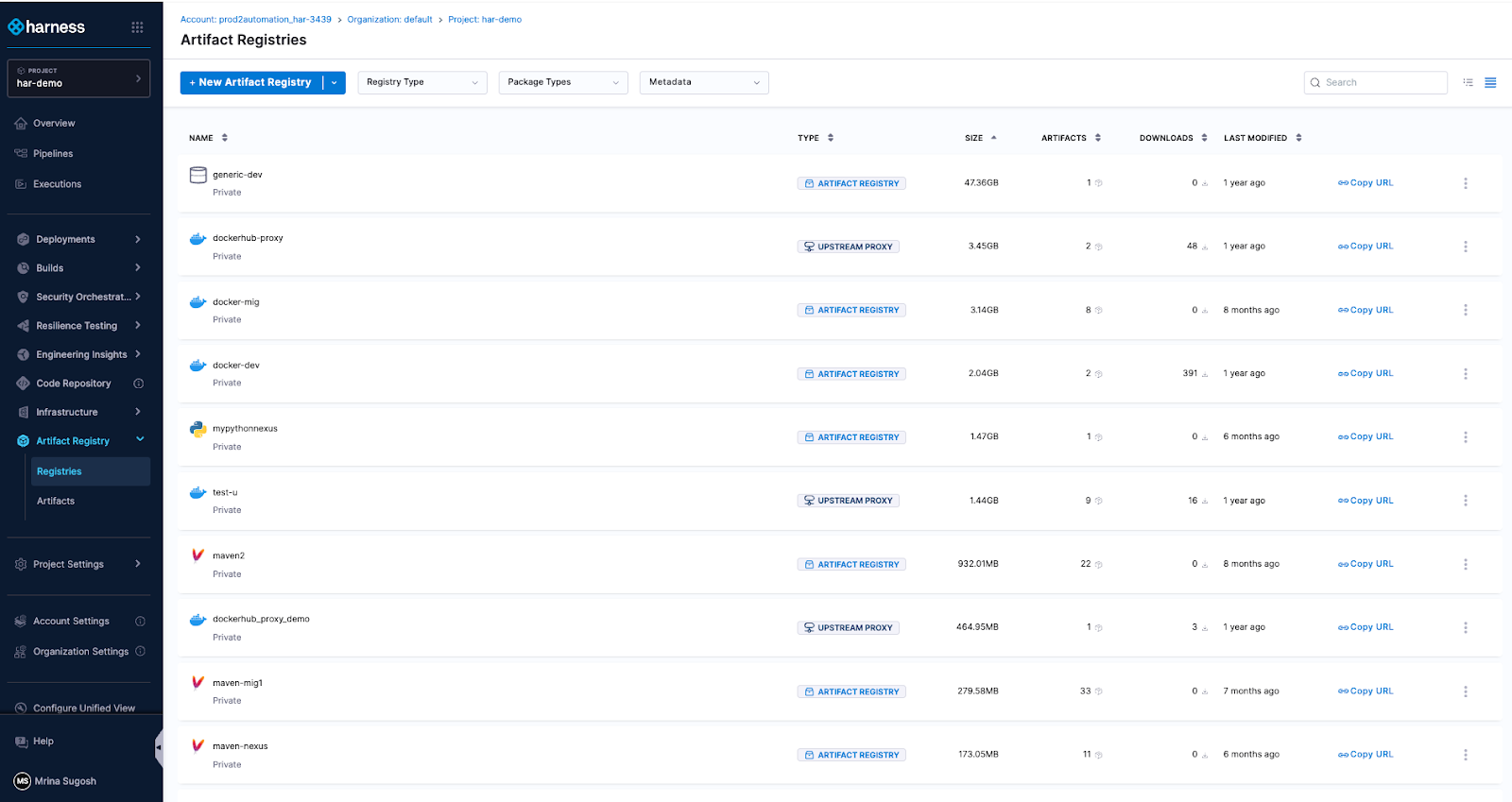

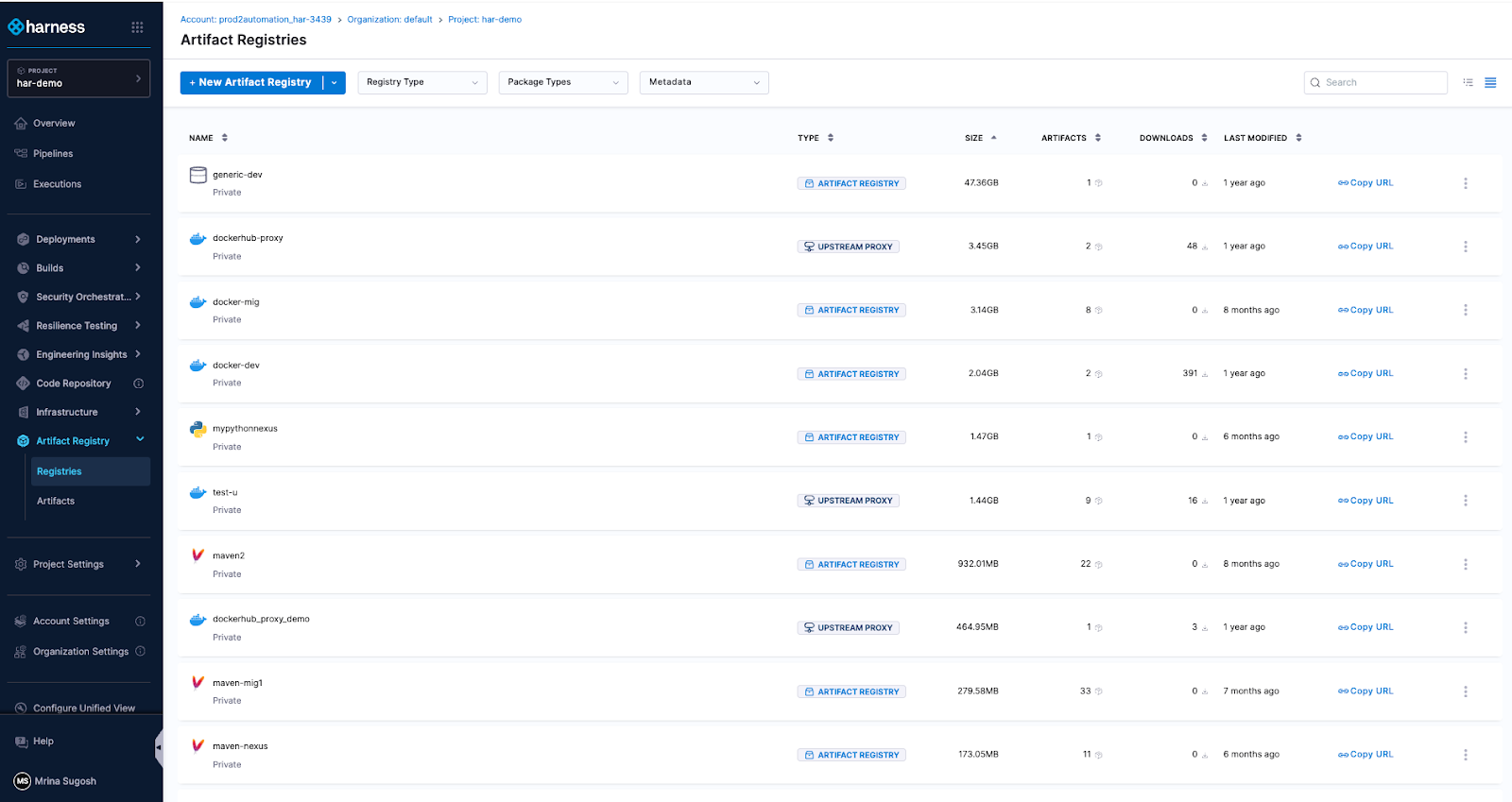

Today, Artifact Registry supports a broad range of container formats, package ecosystems, and AI artifacts, including Docker, Helm (OCI), Python, npm, Go, NuGet, Dart, Conda, and more, with additional support on the way. Enterprise teams are standardizing on it across CI pipelines, reducing registry sprawl, and eliminating the friction of managing diverse artifacts outside their delivery workflows.

One early enterprise customer, Drax Group, consolidated multiple container and package types into Harness Artifact Registry and achieved 100 percent adoption across teams after standardizing on the platform.

As their Head of Software Engineering put it:

"Harness is helping us achieve a single source of truth for all artifact types containerized and non-containerized alike making sure every piece of software is verified before it reaches production." - Jasper van Rijn

Why This Matters: The Registry as a Control Point

In modern DevSecOps environments, artifacts sit at the center of delivery. Builds generate them, deployments promote them, rollbacks depend on them, and governance decisions attach to them. Yet registries have traditionally operated as external storage systems, disconnected from CI/CD orchestration and policy enforcement.

That separation no longer holds up against today’s threat landscape.

Software supply chain attacks are more frequent and more sophisticated. The SolarWinds breach showed how malicious code embedded in trusted update binaries can infiltrate thousands of organizations. More recently, the Shai-Hulud 2.0 campaign compromised hundreds of npm packages and spread automatically across tens of thousands of downstream repositories.

These incidents reveal an important business reality: risk often enters early in the software lifecycle, embedded in third-party components and artifacts long before a product reaches customers. When artifact storage, open source governance, and security scanning are managed in separate systems, oversight becomes fragmented. Controls are applied after the fact, visibility is incomplete, and teams operate in silos. The result is slower response times, higher operational costs, and increased exposure.

We saw an opportunity to simplify and strengthen this model.

By embedding artifact management directly into the Harness platform, the registry becomes a built-in control point within the delivery lifecycle. RBAC, audit logging, replication, quotas, scanning, and policy enforcement operate inside the same platform where pipelines run. Instead of stitching together siloed systems, teams manage artifacts alongside builds, deployments, and security workflows. The outcome is streamlined operations, clearer accountability, and proactive risk management applied at the earliest possible stage rather than after issues surface.

Introducing Dependency Firewall: Blocking Risk at Ingest

Security is one of the clearest examples of why registry-native governance matters.

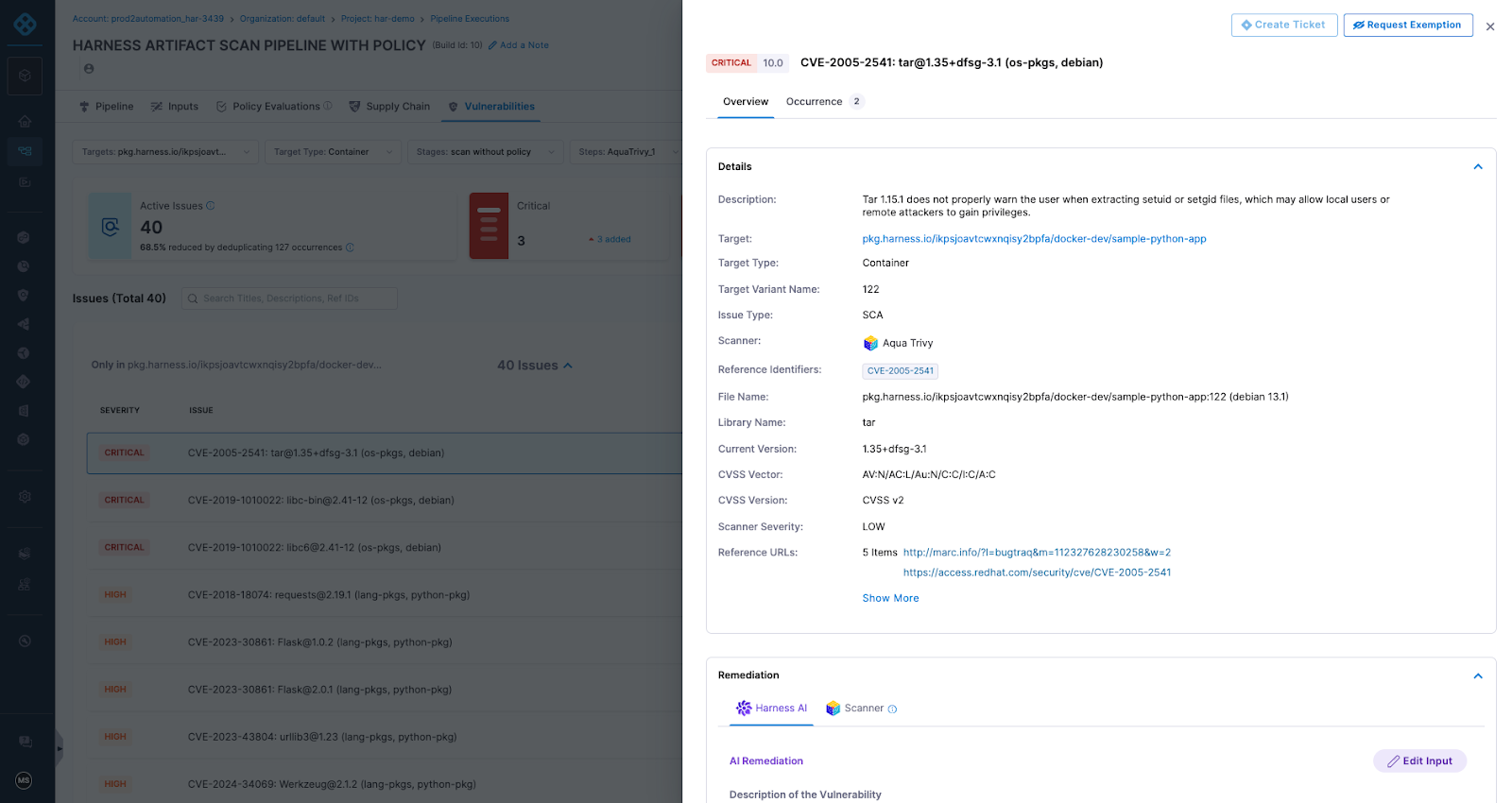

Artifact Registry delivers this through Dependency Firewall, a registry-level enforcement control applied at dependency ingest. Rather than relying on downstream CI scans after a package has already entered a build, Dependency Firewall evaluates dependency requests in real time as artifacts enter the registry. Policies can automatically block components with known CVEs, license violations, excessive severity thresholds, or untrusted upstream sources before they are cached or consumed by pipelines.
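To make the idea of ingest-time enforcement concrete, here is a minimal sketch of how a policy check like this could be modeled. It is not the Dependency Firewall implementation or its API: the `DependencyRequest` and `FirewallPolicy` types, the severity ranking, and the default policy values are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical severity ordering used only for this sketch.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class DependencyRequest:
    name: str
    version: str
    upstream: str                      # where the package would be fetched from
    license: str
    cve_severities: list[str] = field(default_factory=list)


@dataclass
class FirewallPolicy:
    # All defaults below are illustrative assumptions, not product defaults.
    max_severity: str = "medium"       # block anything above this level
    blocked_licenses: frozenset[str] = frozenset({"AGPL-3.0"})
    trusted_upstreams: frozenset[str] = frozenset({"https://registry.npmjs.org"})


def evaluate(request: DependencyRequest, policy: FirewallPolicy) -> list[str]:
    """Return the list of policy violations; an empty list means 'allow'."""
    violations = []
    if request.upstream not in policy.trusted_upstreams:
        violations.append(f"untrusted upstream: {request.upstream}")
    if request.license in policy.blocked_licenses:
        violations.append(f"license not allowed: {request.license}")
    limit = SEVERITY_RANK[policy.max_severity]
    worst = max((SEVERITY_RANK[s] for s in request.cve_severities), default=0)
    if worst > limit:
        violations.append("CVE severity above threshold")
    return violations
```

The point of the sketch is the ordering: the check runs when the dependency is requested, so a non-empty result means the package is never cached or handed to a pipeline.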

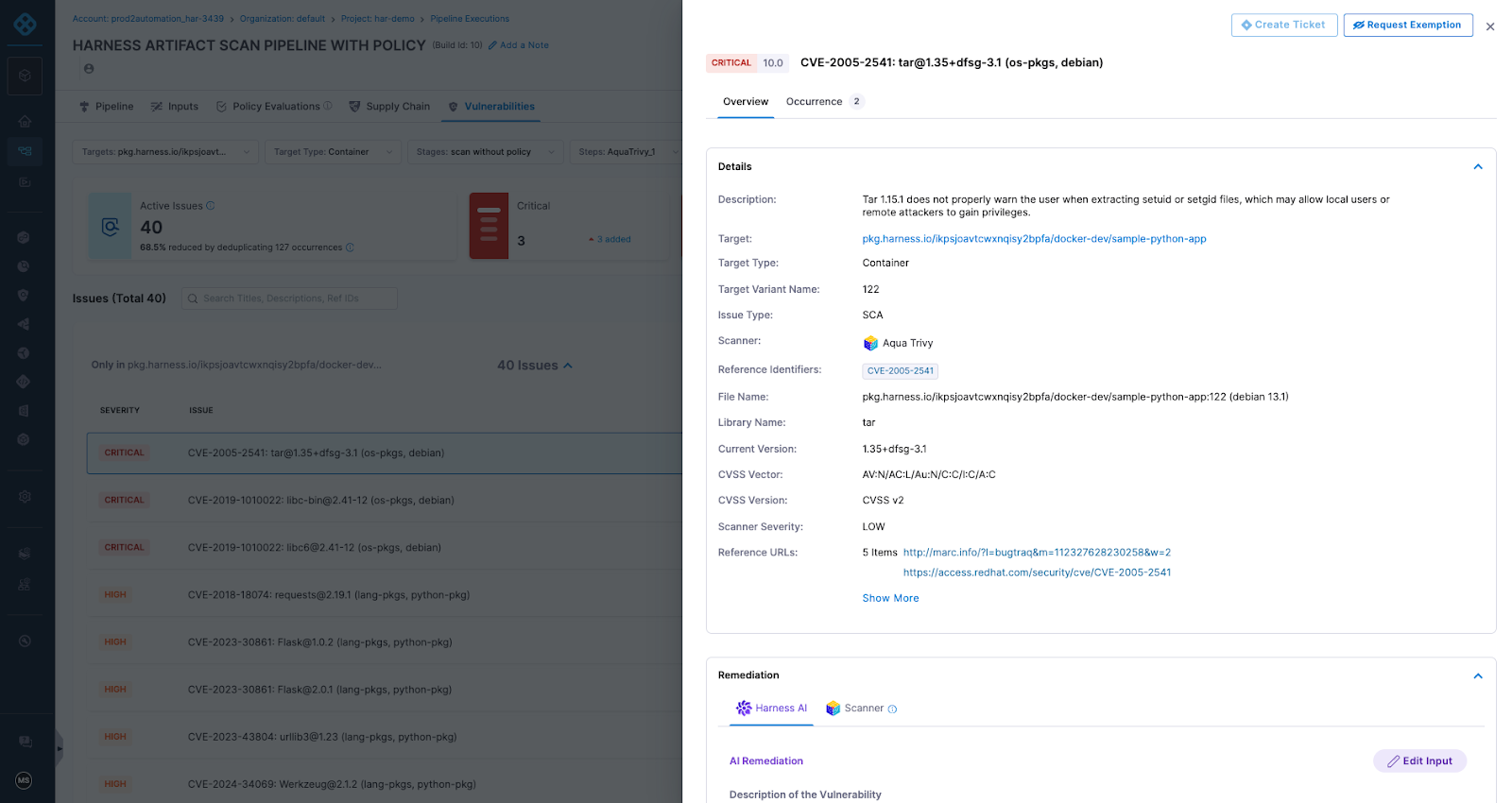

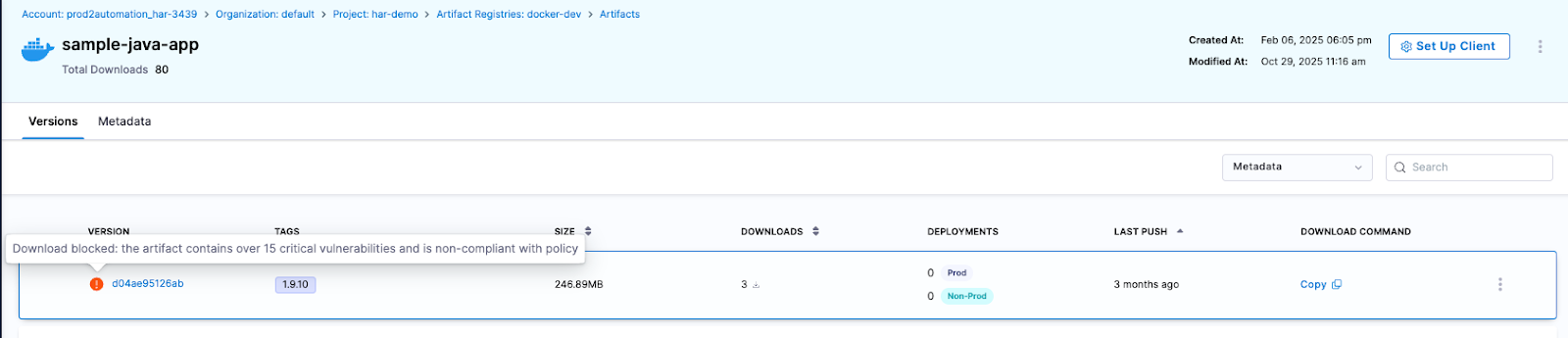

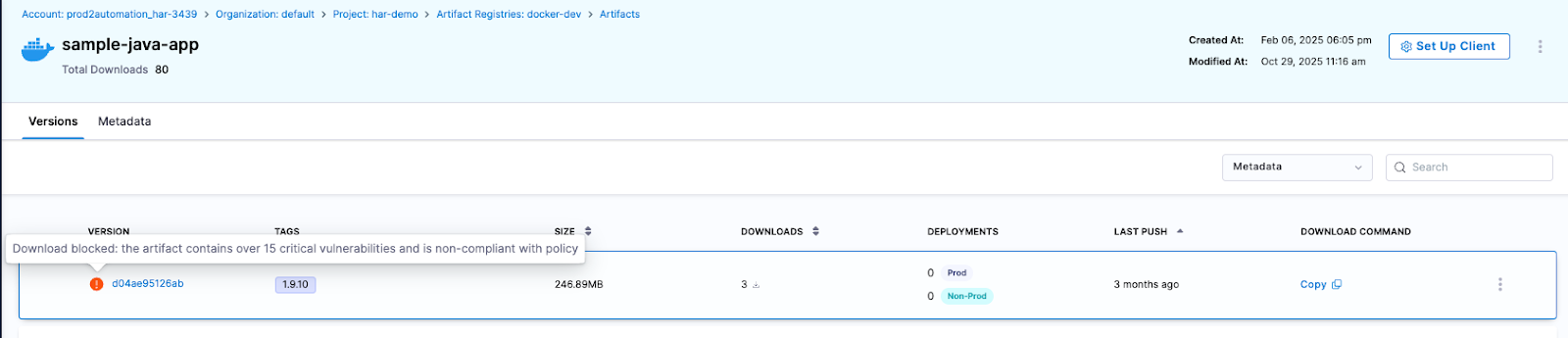

Artifact quarantine extends this model by automatically isolating artifacts that fail vulnerability or compliance checks. If an artifact does not meet defined policy requirements, it cannot be downloaded, promoted, or deployed until the issue is addressed. All quarantine and release actions are governed by role-based access controls and fully auditable, ensuring transparency and accountability. Built-in scanning powered by Aqua Trivy, combined with integrations across more than 40 security tools in Harness, feeds results directly into policy evaluation. This allows organizations to automate release or quarantine decisions in real time, reducing manual intervention while strengthening control at the artifact boundary.
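The quarantine behavior described above can be pictured as a small state machine. The sketch below is an assumption-laden illustration, not the product's data model: the `Registry` and `Artifact` classes, the `quarantine-admin` role name, and the audit log shape are all made up for the example.

```python
from dataclasses import dataclass, field

# Actions that a quarantined artifact must not be allowed to perform.
GATED_ACTIONS = {"download", "promote", "deploy"}
# Hypothetical role name; real role names depend on your RBAC setup.
RELEASE_ROLE = "quarantine-admin"


@dataclass
class Artifact:
    name: str
    quarantined: bool = False


@dataclass
class Registry:
    # Each entry is (actor, action, artifact name, allowed).
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def apply_scan_result(self, artifact: Artifact, passed: bool) -> None:
        """Quarantine the artifact automatically when a scan or policy check fails."""
        if not passed:
            artifact.quarantined = True
        self.audit_log.append(("scanner", "scan", artifact.name, passed))

    def request(self, actor: str, action: str, artifact: Artifact) -> bool:
        """Allow the action unless the artifact is quarantined and the action is gated."""
        allowed = not (artifact.quarantined and action in GATED_ACTIONS)
        self.audit_log.append((actor, action, artifact.name, allowed))
        return allowed

    def release(self, actor: str, roles: set[str], artifact: Artifact) -> bool:
        """Lift quarantine only for actors holding the release role."""
        allowed = RELEASE_ROLE in roles
        if allowed:
            artifact.quarantined = False
        self.audit_log.append((actor, "release", artifact.name, allowed))
        return allowed
```

Every decision, including denied ones, lands in the audit log, which mirrors the "governed by role-based access controls and fully auditable" requirement.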

The result is a registry that functions as an active supply chain control point, enforcing governance at the artifact boundary and reducing risk before it propagates downstream.

The Future of Artifact Management Is Here

General Availability signals that Artifact Registry is now a core pillar of the Harness platform. Over the past year, we’ve hardened performance, expanded artifact format support, scaled multi-region replication, and refined enterprise-grade controls. Customers are running high-throughput CI pipelines against it in production environments, and internal Harness teams rely on it daily.

We’re continuing to invest in:

- Expanded package ecosystem support

- Advanced lifecycle management, immutability, and auditing

- Deeper integration with Harness Security and the Internal Developer Portal

- AI-powered agents for OSS governance, lifecycle automation, and artifact intelligence

Modern software delivery demands clear control over how software is built, secured, and distributed. As supply chain threats increase and delivery velocity accelerates, organizations need earlier visibility and enforcement without introducing new friction or operational complexity.

We invite you to sign up for a demo and see firsthand how Harness Artifact Registry delivers high-performance artifact distribution with built-in security and governance at scale.

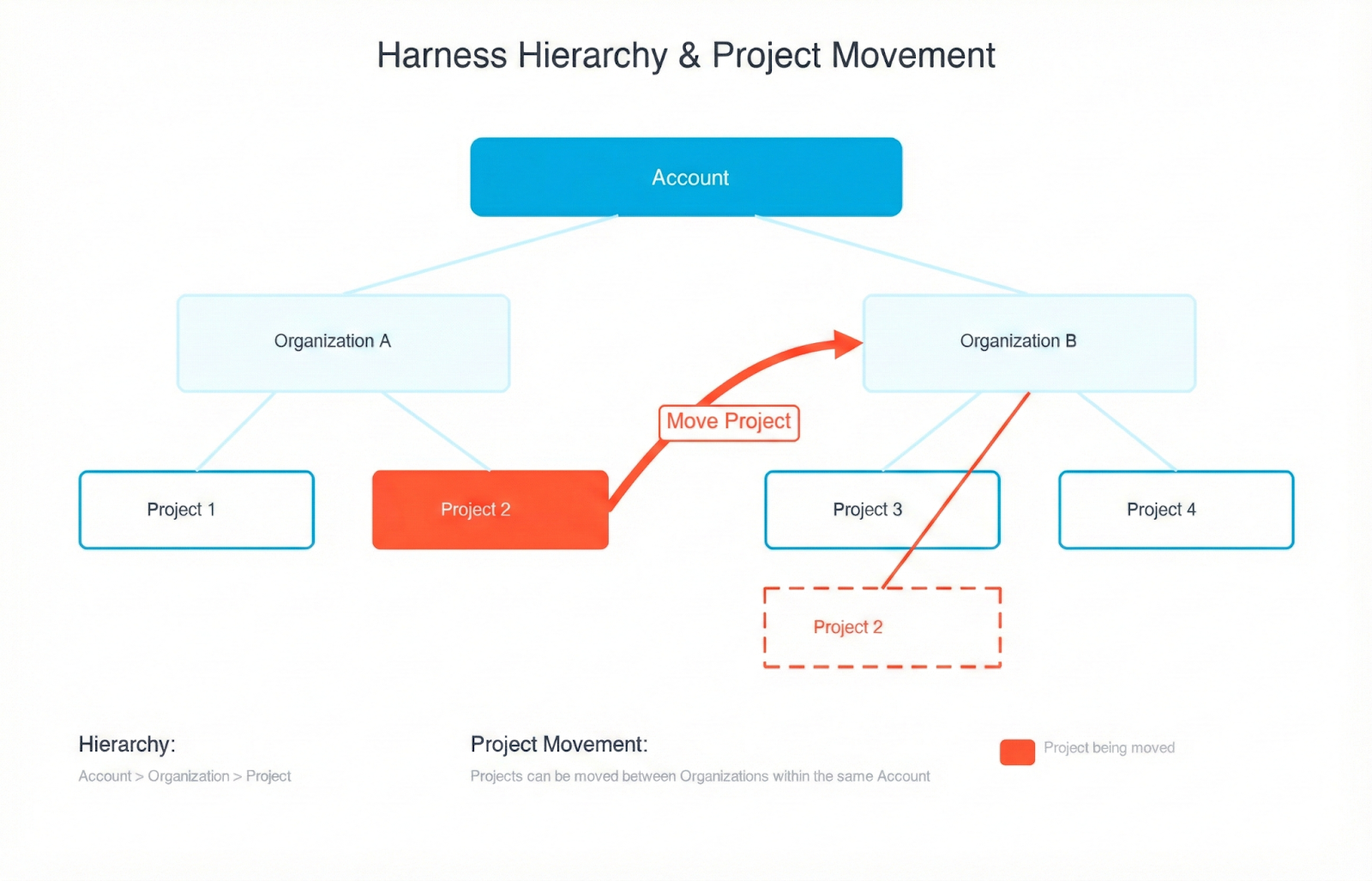

TLDR: We have rolled out Project Movement: the ability to transfer entire Harness projects between Organizations with a few clicks. It's been our most-requested Platform feature for a reason. Your pipelines, configurations, and the rest come along for the ride.

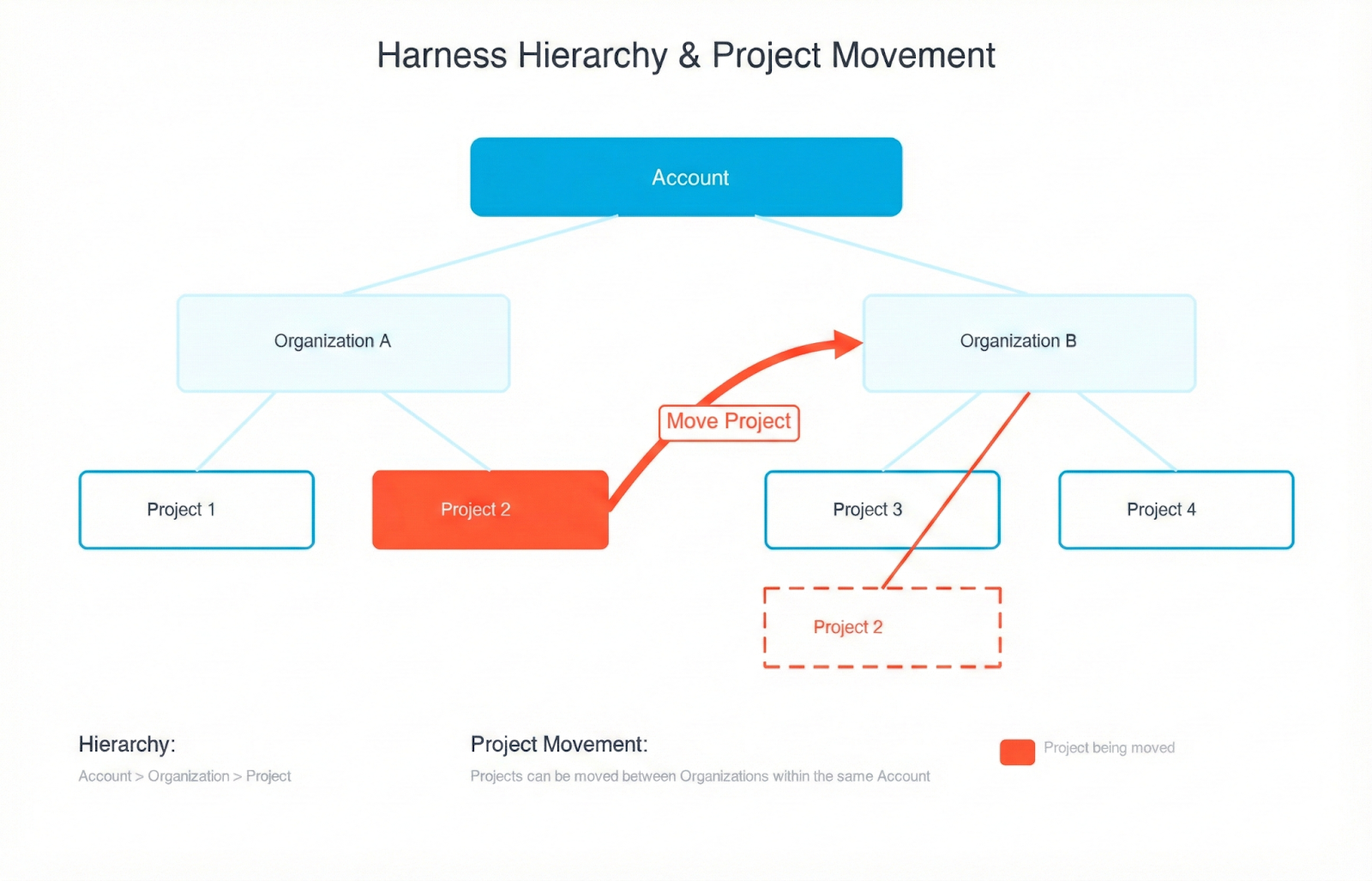

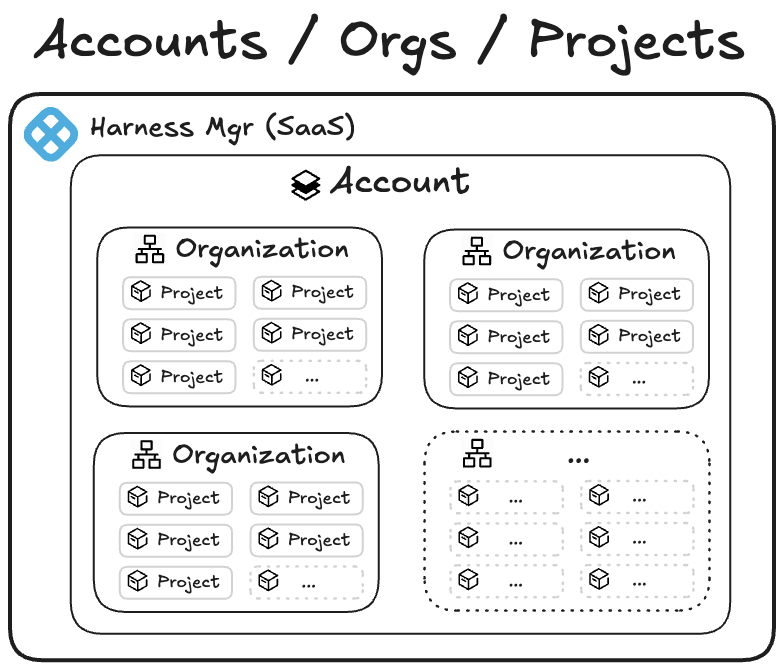

What are Projects and Organizations?

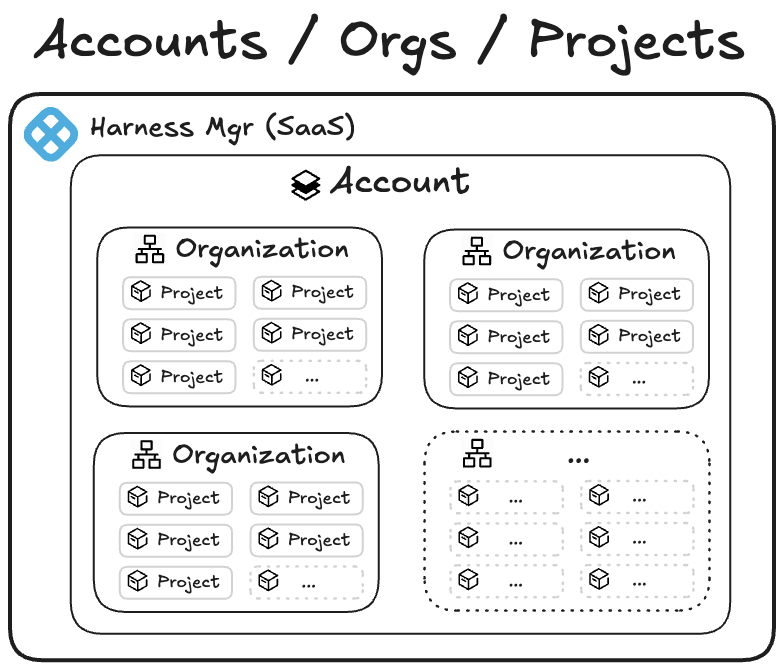

In Harness, an Account is the highest-scoped entity. It contains organizations and projects. An organization is the space that represents your business unit or team and helps you manage users, access, and shared settings in one place. Within an organization, a project is where your teams do their day-to-day work, such as building pipelines, managing services, and tracking deployments. Projects keep related resources grouped together, making it easier to collaborate, control permissions, and scale across teams.

Historically, the main benefit of keeping organizations and projects separate was strong isolation and predictability. Because projects could not move between organizations, each organization served as a rigid boundary for security, RBAC, governance, billing, and integrations. Customers could trust that once a project was created within an org, all its permissions, secrets, connectors, audit history, and compliance settings would remain stable and wouldn’t be accidentally inherited or lost during a move. This reduced the risk of misconfiguration, privilege escalation, broken pipelines, or compliance violations, especially for large enterprises with multiple business units or regulated environments.

However, imagine this scenario: last quarter, your company reorganized around customer segments. This quarter, two teams merged. Next quarter, who knows—but your software delivery shouldn't grind to a halt every time someone redraws the org chart.

We've heard this story from dozens of customers: the experimental project that became critical, the team consolidation that changed ownership, the restructure that reshuffled which team owns what. And until now, moving a Harness project from one Organization to another meant one thing: start from scratch.

Not anymore.

That’s why we have rolled out Project Movement: the ability to transfer entire Harness projects between Organizations with a few clicks. It's been our most-requested Platform feature for a reason. Your pipelines, configurations, and the rest come along for the ride.

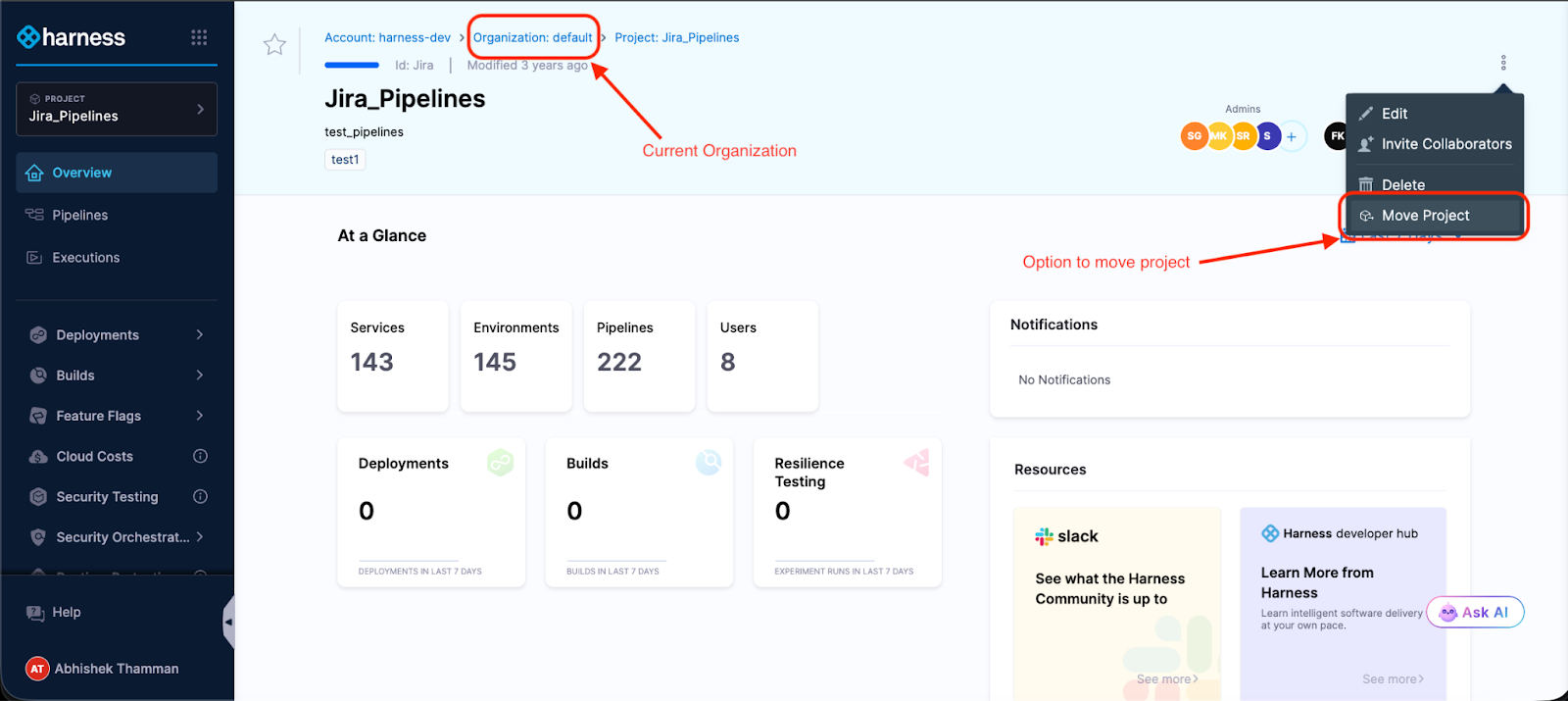

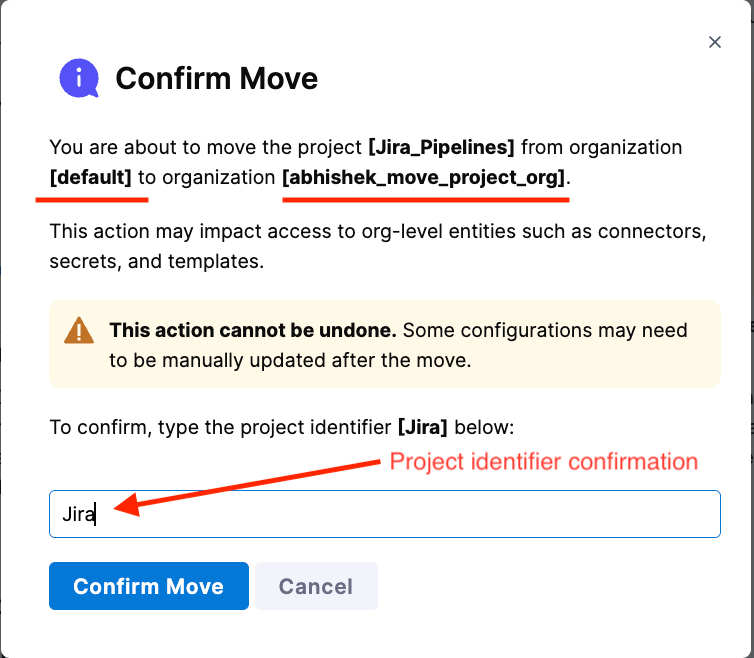

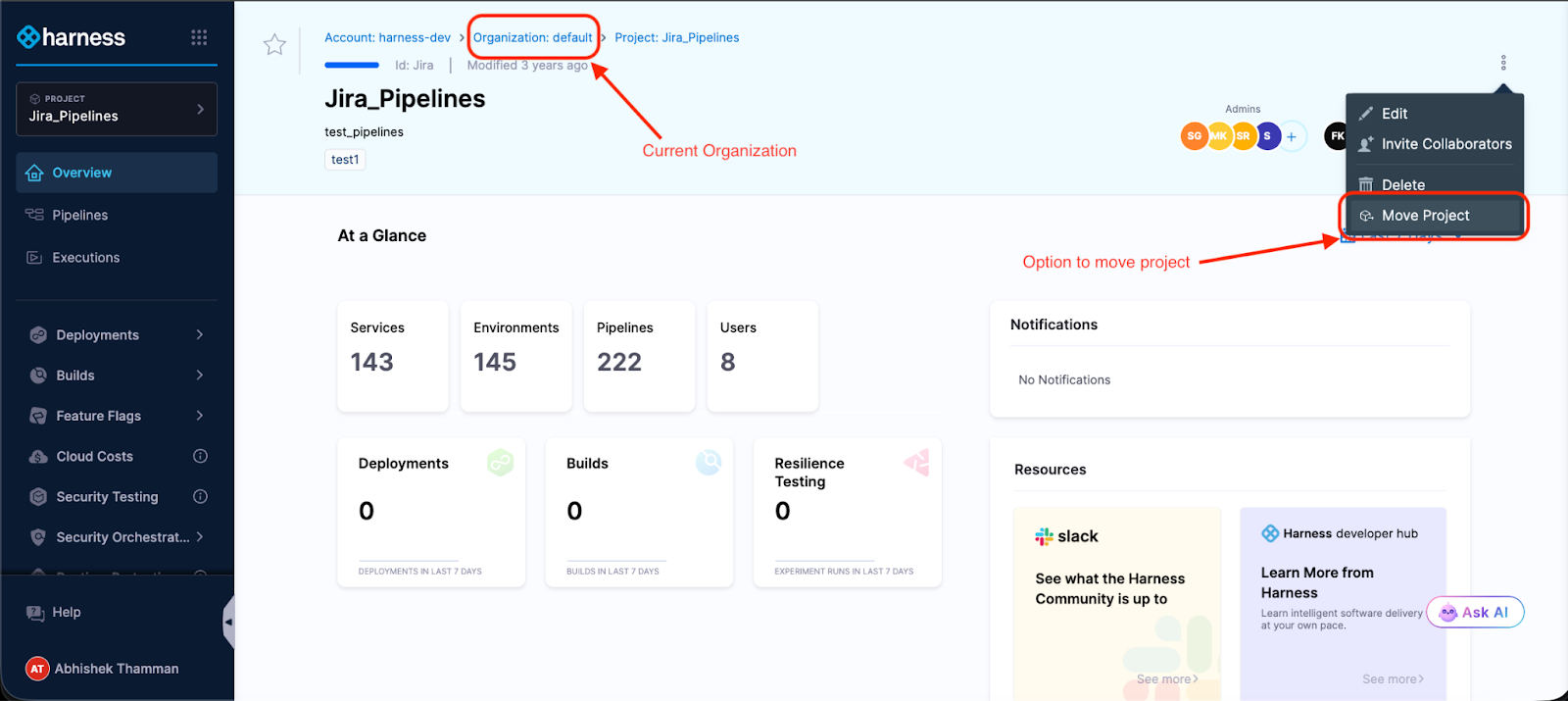

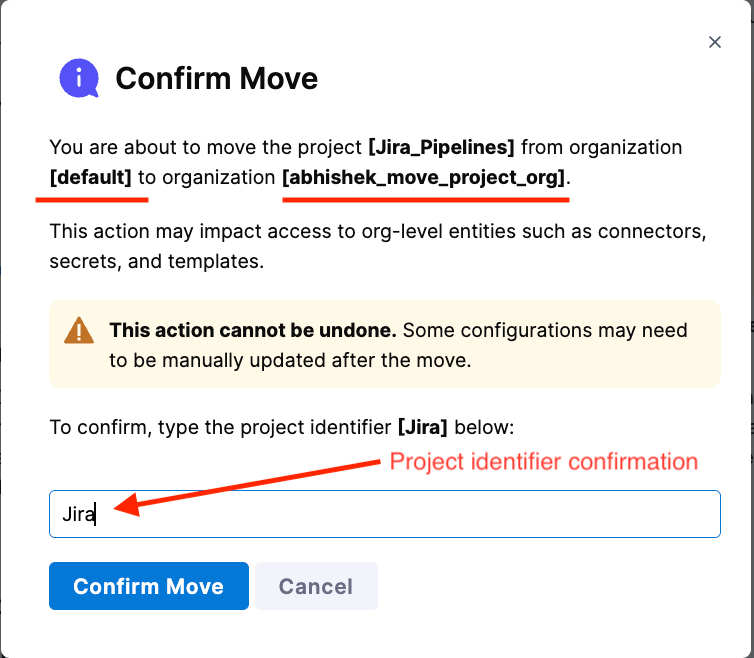

What Moving a Project Actually Feels Like

You're looking at 47 pipelines, 200+ deployment executions, a dozen services, and countless hours of configuration work. The company's org chart says this project now belongs to a different team. Deep breath.

Click the menu. Select "Move Project." Pick your destination Organization.

The modal shows you what might break—Organization-level connectors, secrets, and templates that the project references. Not an exhaustive list, but enough to know what you're getting into.

Type the project identifier to confirm.

Done.

Your project is now in its new Organization. Pipelines intact. Execution history preserved. Templates, secrets, connectors—all right where you left them. The access control migration happens in the background while you grab coffee.

What used to take days of YAML wrangling and "did we remember to migrate X?" conversations now takes minutes.

To summarize, moving a Harness project between organizations takes five steps:

1. Open the project menu and select “Move Project.”

2. Choose the destination organization.

3. Review impacted organization-level resources.

4. Confirm by typing the project identifier.

5. Monitor access control migration while pipelines remain intact.

What Moves with Projects

Here's what transfers automatically when you move a project:

- Platform - Your pipelines with their full execution history, all triggers and input sets, services and environments, project-scoped connectors, secrets, templates, delegates, and webhooks.

- Continuous Delivery (CD) - All your deployment workflows, service definitions, and infrastructure configurations. If you built it for continuous delivery, it moves.

- Continuous Integration (CI) - Build configurations, test intelligence settings, and the whole CI setup.

- Internal Developer Portal (IDP) - Service catalog entries and scorecards.

- Security Test Orchestration (STO) - Scan configurations and security testing workflows.

- Code Repository - Repository settings, configurations, and more.

- Database DevOps - Database schema management configurations and more.

Access control follows along too: role bindings, service accounts, user groups, and resource groups. This happens asynchronously, so the move doesn't block, but you can track progress in real-time.

The project becomes immediately usable in its new Organization. No downtime, no placeholder period, no "check back tomorrow."

What Doesn’t Move with Projects?

Let's talk about what happens to Organization-level resources and where you'll spend some time post-move.

Organization-scoped resources don't move—and that makes sense when you think about it. That GitHub connector at the Organization level? It's shared across multiple projects. We can't just yank it to the new Organization. So after moving, you'll update references that pointed to:

- Organization-level connectors (GitHub, Docker Hub, cloud providers)

- Organization-level secrets (API keys, credentials)

- Organization-level templates (shared pipeline components)

- User groups inherited from the source Organization

The update itself is mechanical: in each affected pipeline or configuration, click the resource field, select a replacement from the new Organization or create a new one, and save. Rinse and repeat. The pre-move and post-move guide walks through the process.
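If you export pipeline YAML before a move, a quick scan can give you a checklist of references to revisit. The sketch below assumes Organization-scoped references appear as values prefixed with `org.` (for example `connectorRef: org.github_main`); that prefix, the field names, and the sample YAML are assumptions to verify against your own pipelines, and the regex only handles simple one-line `key: value` pairs.

```python
import re

# Assumption: an org-scoped reference looks like "<key>: org.<identifier>".
ORG_REF = re.compile(
    r"^\s*(?:-\s*)?(\w+):\s*[\"']?org\.([A-Za-z_]\w*)[\"']?\s*$"
)


def find_org_references(yaml_text: str) -> list[tuple[int, str, str]]:
    """Return (line number, key, identifier) for each org-scoped reference."""
    hits = []
    for line_no, line in enumerate(yaml_text.splitlines(), start=1):
        match = ORG_REF.match(line)
        if match:
            hits.append((line_no, match.group(1), match.group(2)))
    return hits


# Hypothetical pipeline fragment used only to exercise the scanner.
SAMPLE = """\
pipeline:
  name: checkout
  stages:
    - stage:
        spec:
          connectorRef: org.github_main
          tokenRef: org.deploy_token
          imageConnectorRef: project_docker
"""

for line_no, key, identifier in find_org_references(SAMPLE):
    print(f"line {line_no}: {key} -> org.{identifier}")
```

On the sample, the scan reports the two `org.` references and skips the project-scoped one, which is the list you would work through in the destination Organization.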

A few CD features aren't supported yet but are on the roadmap: GitOps entities and Continuous Verification don't move with the project. If your pipelines use these, you'll need to manually reconfigure them in the new Organization after the move. The documentation has specific guidance on supported modules and entities.

Security Boundaries Stay Intact

The Harness hierarchical model, Account > Organization > Project, exists for strong isolation and predictable security boundaries. Moving projects doesn't compromise that architecture. Here's why: Organization-level resources stay put. Your GitHub connectors, cloud credentials, and secrets remain scoped to their Organizations. When a project moves, it doesn't drag sensitive org-wide resources along; it references new ones in the destination. This means your security boundaries stay intact, RBAC policies remain predictable, and teams can't accidentally leak credentials across organizational boundaries. The project moves. The isolation doesn't.

An Example of Moving Projects

A platform engineering team had a familiar problem: three different product teams each had their own Harness Organization with isolated projects. Made sense when the teams were autonomous. But as the products matured and started sharing infrastructure, the separation became friction.

The platform team wanted to consolidate everything under a single "Platform Services" Organization for consistent governance and easier management. Before project movement, that meant weeks of work—export configurations, recreate pipelines, remap every connector and secret, test everything, hope nothing broke.

With project movement, they knocked it out in an afternoon. Move the projects. Update references to Organization-level resources. Standardize secrets across the consolidated projects. Test a few deployments. Done.

The product teams kept shipping. The platform team got its unified structure. Nobody lost weeks to migration work.

Try It (With Smart Guardrails)

Moving a project requires two permissions: Move on the source project and Create Project in the destination Organization. Both sides of the transfer need to agree—you can't accidentally move critical projects out of an Organization or surprise a team with unwanted projects.

When you trigger a move, you'll type the project identifier to confirm.

A banner sticks around for 7 days post-move, reminding you to check for broken references. Use that week to methodically verify everything, especially if you're moving a production project.

Our recommendation: Try it with a non-production project first. Get a feel for what moves smoothly and what needs attention. Then tackle the production stuff with confidence.

Why This Took Time (A Peek Behind the Scenes)

On the surface, moving a project sounds simple: just change where it lives, and you’re done. But in reality, a Harness project is a deeply connected system.

Your pipelines, execution history, connectors, secrets, and audit logs are all tied together behind the scenes. Historically, Harness identified these components using their specific "address" in the hierarchy. That meant if a project moved, every connected entity would need its address updated across multiple services at the same time. Doing that safely without breaking history or runtime behavior was incredibly risky.

To solve this, we re-architected the foundation.

We stopped tying components to their location and introduced stable internal identifiers. Now, every entity has a unique ID that travels with it, regardless of where it lives. When you move a project, we simply update its parent relationship. The thousands of connected components inside don’t even realize they’ve moved.
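A toy model helps show why stable identifiers make the move cheap. The sketch below is not Harness's internal schema; the `Node` and `Hierarchy` classes and the example names are hypothetical. Each entity keeps a unique ID and a pointer to its parent, and the hierarchical "address" is derived on demand instead of being stored on every entity.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    uid: str                     # stable identifier that never changes
    name: str
    parent_uid: Optional[str]    # the only field a move needs to touch


class Hierarchy:
    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}

    def add(self, name: str, parent_uid: Optional[str] = None) -> str:
        uid = uuid.uuid4().hex
        self.nodes[uid] = Node(uid, name, parent_uid)
        return uid

    def path(self, uid: str) -> str:
        """Derive the hierarchical address by walking parent pointers."""
        parts = []
        current: Optional[str] = uid
        while current is not None:
            node = self.nodes[current]
            parts.append(node.name)
            current = node.parent_uid
        return "/".join(reversed(parts))

    def move(self, uid: str, new_parent_uid: str) -> None:
        """Re-parent one node; descendants are untouched."""
        self.nodes[uid].parent_uid = new_parent_uid


tree = Hierarchy()
account = tree.add("acme")
org_a = tree.add("org-a", account)
org_b = tree.add("org-b", account)
project = tree.add("payments", org_a)
pipeline = tree.add("deploy", project)

tree.move(project, org_b)
print(tree.path(pipeline))
```

The move writes a single field on the project node. The pipeline's ID and its own parent pointer are unchanged, yet its derived path now runs through `org-b`.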

This architectural shift is what allows us to preserve your execution history and audit trails while keeping project moves fast and reliable.

What's Coming

This is version one. The foundations are solid: projects move, access control migrates, pipelines keep running. But we're not done.

We're listening. If you use this feature and hit rough edges, we want to hear about it.

The Bottom Line

Organizational change is inevitable. The weeks of cleanup work afterward don't have to be.

Project Movement means your Harness setup can adapt as fast as your org chart does. When teams move, when projects change ownership, when you consolidate for efficiency, your software delivery follows without the migration overhead.

No more lost history. No more recreated pipelines. No more week-long "let's just rebuild everything in the new Organization" projects.

Ready to try it? Check out the step-by-step guide or jump into your Harness account and look for "Move Project" in the project menu.

At Harness, our story has always been about change — helping teams ship faster, deploy safer, and control the blast radius of every modification to production. Deployments, feature flags, pipelines, and governance are all expressions of how organizations evolve their software.

Today, the pace of change is accelerating. As AI-assisted development becomes the norm, more code reaches production faster, often without a clear link to the engineer who wrote it. Now, Day 2 isn’t just supporting the unknown – it’s supporting software shaped by changes that may not have a clear human owner.

And as every SRE and on-call engineer knows, even rigorous change hygiene doesn’t prevent incidents because real-world systems don’t fail neatly. They fail under load, at the edges, in the unpredictable ways software meets traffic patterns, caches, databases, user behavior, and everything in between.

When that happens, teams fall back on what they’ve always relied on: human conversation and a deep understanding of what changed.

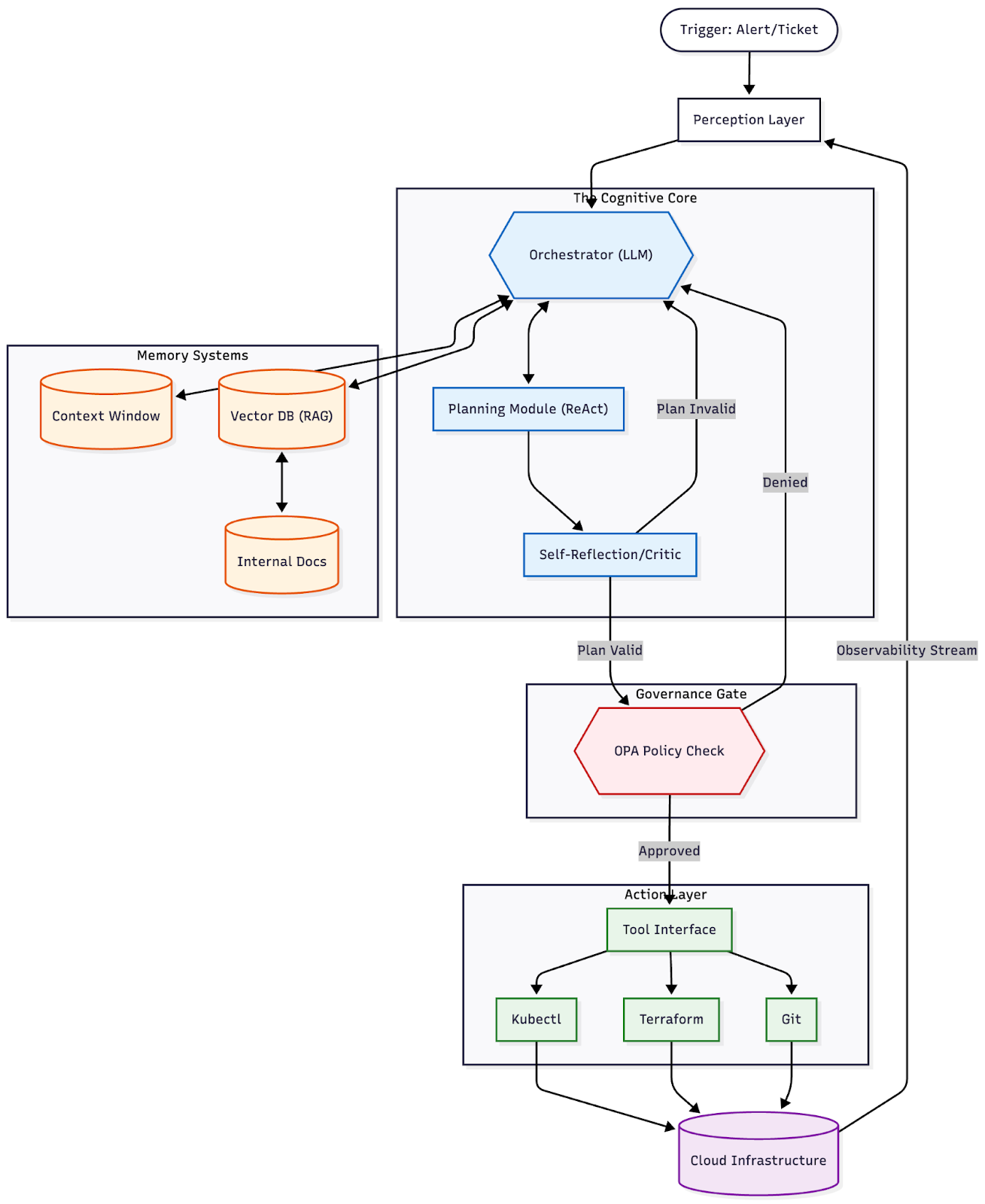

That’s why today we’re excited to introduce the Harness Human-Aware Change Agent — the first AI system designed to treat human insight as operational data and use it to drive automated, change-centric investigation during incidents.

Not transcription plus RCA. One unified intelligence engine grounded in how incidents actually unfold.

📞 A Quick Look at Harness AI SRE

The Human-Aware Change Agent is part of Harness AI SRE — a unified incident response system built to help teams resolve incidents faster without scaling headcount. AI SRE brings together the critical parts of response: capturing context, coordinating action, and operationalizing investigation.

At the center is the AI Scribe, because the earliest and most important clues in an incident often surface in conversation before they appear in dashboards. Scribe listens across an organization’s tools with awareness of the incident itself – filtering out unrelated chatter and capturing only the decisions, actions, and timestamps that matter. The challenge isn’t producing a transcript; it’s isolating the human signals responders actually use.

Those signals feed directly into the Human-Aware Change Agent, which drives change-centric investigation during incidents.

And once that context exists, AI SRE helps teams act on it: Automation Runbooks standardize first response and remediation, while On-Call and Escalations ensure incidents reach the right owner immediately.

AI SRE also fits into the tools teams already run — with native integrations and flexible webhooks that connect observability, alerting, ticketing, and chat across systems like Datadog, PagerDuty, Jira, ServiceNow, Slack, and Teams.

🌐 Why We Built a Human-Aware Change Agent

Most AI approaches to SRE assume incidents can be solved entirely through machine signals — logs, metrics, traces, dashboards, anomaly detectors. But if you’ve ever been on an incident bridge, you know that’s not how reality works.

Some of the most important clues come from humans:

- “The customer said the checkout button froze right after they updated their cart.”

- “Service X felt slow an hour before this started.”

- “Didn’t we flip a flag for the recommender earlier today?”

- “This only happens in the US-East cluster.”

These early observations shape the investigation long before anyone pulls up a dashboard.

Yet most AI tools never hear any of that.

The Harness Human-Aware Change Agent changes this. It listens to the same conversations your engineers are having — in Slack, Teams, Zoom bridges — and transforms the human story of the incident into actionable intelligence that guides automated change investigation.

It is the first AI system that understands both what your team is saying and what your systems have changed — and connects them in real time.

🔍 How the Human-Aware Change Agent Works

1. It listens and understands human context.

Using AI Scribe as its conversational interface, the agent captures operational signals from a team’s natural dialogue – impacted services, dependencies, customer-reported symptoms, emerging theories or contradictions, and key sequence-of-events clues (“right before…”).

The value is in recognizing human-discovered clues, and converting them into signals that guide next steps.

2. It investigates changes based on those clues.

The agent then uses these human signals to direct investigation across your full change graph including deployments, feature flags or config changes, infrastructure updates, and ITSM change records – triangulating what engineers are seeing with what is actually changing in your production environment.

3. It surfaces evidence-backed hypotheses.

Instead of throwing guesses at the team, it produces clear, explainable insights:

“A deployment to checkout-service completed 12 minutes before the incident began. That deploy introduced a new retry configuration for the payment adapter. Immediately afterward, request latency started climbing and downstream timeouts increased.”

Each hypothesis comes with supporting data and reasoning, allowing teams to quickly validate or discard theories.
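One simple way to picture the "what changed?" step is a time-window filter over change events, weighted by what responders have mentioned. The sketch below is only an illustration of that idea, not how the agent works internally: the `ChangeEvent` shape, the two-hour window, and the ranking rule are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ChangeEvent:
    service: str
    kind: str              # e.g. "deployment", "feature_flag", "config"
    finished_at: datetime


def rank_suspects(
    changes: list[ChangeEvent],
    incident_start: datetime,
    mentioned_services: set[str],
    window: timedelta = timedelta(hours=2),   # assumed lookback window
) -> list[ChangeEvent]:
    """Keep changes that finished shortly before the incident, then rank them.

    Services that humans mentioned sort first; within each group the most
    recent change comes first.
    """
    candidates = [
        c for c in changes
        if timedelta(0) <= incident_start - c.finished_at <= window
    ]
    return sorted(
        candidates,
        key=lambda c: (
            c.service not in mentioned_services,
            incident_start - c.finished_at,
        ),
    )
```

In the checkout example above, a responder mentioning `checkout-service` would push its deployment to the top of the list even if an unrelated flag flip happened more recently.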

4. It helps teams act faster and safer.

By uniting human observations with machine-driven change intelligence, the agent dramatically shortens the path from:

What are we seeing? → What changed? → What should we do?

Teams quickly gain clarity on where to focus, what’s most suspicious, and which rollback or mitigation actions exist and are safest.

🌅 A New Era of Incident Response

With this release, Harness is redefining what AI for incident management looks like.

Not a detached assistant. Not a dashboard summarizer. But a teammate that understands what responders are saying, investigates what systems have changed, connects the dots, and helps teams get to truth faster.

Because the future of incident response isn’t AI working alone. It’s AI working alongside engineers — understanding humans and systems in equal measure.

Book a demo of Harness AI SRE to see how human insight and change intelligence come together during real incidents.

Blog Library

Cloud Cost Optimization: Why Your Approach Is Broken

If cloud cost optimization feels like a never-ending game of whack-a-mole—new recommendations every 30 days, the same debates with engineering, another set of dashboards no one trusts—you’re not alone.

But what if your cloud cost optimization strategy is the reason your AWS bill keeps climbing?

Not the lack of one.

Not poor execution.

The strategy itself.

We've seen this pattern dozens of times: teams implement tagging standards, build dashboards, schedule monthly FinOps reviews, and still watch costs spiral. The infrastructure is tagged. The metrics exist. The meetings happen. Yet every quarter, the CFO asks the same uncomfortable question:

“Why are we spending this much?”

The problem usually isn’t the idea of optimization. It’s the approach: too reactive, too late in the lifecycle, and too disconnected from how software is actually built and shipped.

And in high-velocity engineering environments, that gap between deployment and optimization review is exactly where runaway spend lives.

Why Traditional Cloud Cost Optimization Strategies Fail at Scale

Most organizations adopt a cloud cost management approach that sounds reasonable:

Deploy infrastructure → monitor spend → identify anomalies → remediate issues → repeat.

This is the classic “observe and optimize” model, borrowed from decades of on-premises capacity planning.

It breaks in the cloud.

In traditional datacenters, provisioning took weeks. Infrastructure decisions went through multiple approval layers. The natural friction slowed spend.

In cloud environments, engineers can provision thousands of dollars of compute in minutes. The speed that makes cloud infrastructure powerful also makes reactive cost optimization dangerously slow.

The Monthly Treadmill Problem

A huge reason teams feel like they’re starting over every month is that the default workflow looks like this:

1. Spend happens

2. A report shows waste

3. FinOps sends recommendations

4. Engineering says “not now”

5. Repeat next month

Even if your team is doing all the “right” things—rightsizing, commitments, idle cleanup, non-prod shutdown—you’re still reacting to what already happened.

And if your cloud spending optimization depends on sporadic human follow-through, you’ll keep reliving the same cycle.

The Reporting Trap

The most common failure mode we encounter is what we call “the reporting trap.”

Organizations invest heavily in cost visibility dashboards, allocation reports, and trend analysis, then wonder why costs don't improve.

The reports show what happened.

They rarely prevent what’s about to happen.

Consider a typical scenario: an engineering team deploys a new microservice on Friday. It includes an RDS instance sized for anticipated peak load, plus a few EC2 instances running 24/7 for background processing.

The deployment succeeds. The service works.

Two weeks later, someone notices the RDS instance costs $3,000/month and runs at 12% utilization. By the time this surfaces in a cost review, you’ve burned $6,000.
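A quick back-of-the-envelope check makes the timeline explicit. At $3,000/month, the instance has accrued roughly $1,400 when someone first notices it at two weeks, so the $6,000 figure corresponds to about two months of runtime, presumably the time it takes for the finding to reach a review and get acted on. The sketch below uses the numbers from the scenario; the 30-day month and the "idle share" rule (cost times one minus utilization) are simplifying assumptions.

```python
MONTHLY_COST = 3_000.0   # from the scenario above
UTILIZATION = 0.12       # from the scenario above
DAYS_PER_MONTH = 30      # simplifying assumption


def accrued_cost(days_running: float) -> float:
    """Spend accumulated after a given number of days."""
    return MONTHLY_COST * days_running / DAYS_PER_MONTH


def idle_share(cost: float) -> float:
    """Rough portion of spend that paid for unused capacity."""
    return cost * (1 - UTILIZATION)


def days_to_reach(total: float) -> float:
    """How long the instance must run to accumulate a given spend."""
    return total / MONTHLY_COST * DAYS_PER_MONTH


print(accrued_cost(14))        # spend when the issue is first noticed
print(days_to_reach(6_000))    # runtime implied by the $6,000 figure
print(idle_share(6_000))       # rough idle portion of that spend
```

Under these assumptions, close to 90% of the $6,000 went to capacity nobody used, which is the gap that detection after the fact cannot recover.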

Reporting-based infrastructure cost optimization identifies problems. It doesn’t prevent them.

And in CI/CD environments shipping multiple times per day, prevention matters far more than detection.

The Allocation Illusion

Another common broken strategy: obsess over cost allocation and chargeback models.

Get the tagging right.

Assign every dollar to a team.

Generate showback reports.

Declare victory.

Allocation solves an accounting problem. It doesn’t solve an engineering problem.

Knowing which team caused overspend doesn’t stop the next deployment from repeating the same mistake. It creates visibility into financial responsibility without creating controls that prevent waste.

Effective cloud cost governance requires both allocation and guardrails. You need to know who’s spending, but you also need mechanisms that stop obviously wasteful configurations from ever reaching production.

FinOps Best Practices: Finance + DevOps (And That’s the Point)

A lot of cloud cost optimization strategies fail because they treat FinOps as:

- a tool

- a tagging project

- a finance initiative

- a savings sprint

- “finance trying to cut engineering’s budget”

But mature FinOps best practices are built around collaboration:

Finance, engineering, infrastructure/platform teams, and business owners operating from the same data and goals—even if their priorities differ.

- Finance wants predictability and accountability

- Engineering wants velocity and reliability

- Platform teams want consistency and governance

- Business owners want clear unit economics and value delivery

When those groups operate in silos, cloud bills become a mystery, optimization becomes political, and waste becomes “the cost of doing business.”

A mature cloud cost optimization strategy flips that. It makes spend a shared responsibility—with shared context.

What Cloud Cost Optimization Should Actually Look Like

A working cost optimization framework starts from a different premise:

Cost decisions should happen at the same place and time as infrastructure decisions.

Not in a dashboard two weeks later.

Not in a quarterly business review.

In the pull request.

In the Terraform plan.

In the CI/CD pipeline before deployment.

Shift Cost Controls Left (Shift-Left FinOps)

The biggest step-change happens when you stop treating cloud cost reduction as an operational clean-up task and start treating cost governance as a software delivery design constraint.

That’s what “shift left” means in a cost context: bringing optimization upstream into the provisioning and deployment workflow before overspend becomes production reality.

Because engineers don’t overprovision out of malice. They do it because their job is reliability:

- “Let’s size for the spike.”

- “Let’s pick the robust instance.”

- “Let’s over-allocate just in case.”

And then utilization never reaches what was provisioned.

Shift-left changes the default by putting guardrails and approved patterns into the path engineers already use to ship software—so cost control doesn’t require constant cost review meetings.
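As a rough sketch of what a guardrail in the delivery path could look like, the check below validates a list of planned resources against an approved-instance list and a monthly budget before anything is deployed. It does not parse a real Terraform plan or call any Harness API: the `PlannedResource` shape, the approved types, the budget, and the cost estimates are all hypothetical inputs you would supply from your own tooling.

```python
from dataclasses import dataclass

# Hypothetical guardrail values for illustration only.
APPROVED_INSTANCE_TYPES = {"t3.medium", "t3.large", "db.t3.medium"}
MONTHLY_BUDGET_USD = 2_000.0


@dataclass
class PlannedResource:
    address: str                  # e.g. "aws_db_instance.orders"
    instance_type: str
    estimated_monthly_usd: float  # assumed to come from a cost estimator


def check_plan(
    resources: list[PlannedResource],
    budget: float = MONTHLY_BUDGET_USD,
) -> list[str]:
    """Return guardrail violations; an empty list means the plan may proceed."""
    problems = []
    for resource in resources:
        if resource.instance_type not in APPROVED_INSTANCE_TYPES:
            problems.append(
                f"{resource.address}: {resource.instance_type} "
                "is not an approved instance type"
            )
    total = sum(r.estimated_monthly_usd for r in resources)
    if total > budget:
        problems.append(
            f"estimated ${total:,.0f}/month exceeds budget of ${budget:,.0f}/month"
        )
    return problems
```

In a pipeline step, a non-empty result would fail the build or route the change to an approval, so the conversation happens in the pull request instead of in next month's cost review.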

Think Roads, Not Speeding Tickets

A useful mental model is roads and cars:

Applications are the cars.

Infrastructure is the road system.

Roads set the rules—speed limits, exits, lanes—not the cars.

When your platform and provisioning workflows define the safe, optimized options, you reduce chaos and make the right choice the easy choice.

That’s what scalable cloud cost governance actually looks like.

“Zero Drift”: Don’t Just Set Guardrails—Keep Them

Once you shift left, the next question is:

How do you prevent teams from gradually drifting away from the intended standard?

That’s where the concept of zero drift comes in.

Zero drift is the idea that the desired state (cost-aware, governed, optimized) is continuously enforced through automation—so you aren’t babysitting optimization forever.

Humans shouldn’t be the control plane.

In practice, zero drift means:

- provisioning is standardized and policy-driven

- instance/cluster choices are constrained to approved configurations

- optimization actions (like rightsizing) can be automated with confidence

- anomalies are monitored, investigated, and resolved without breaking the system

Instead of monthly restarts, you get continuous alignment.

This is the difference between a cloud cost management approach that scales and one that collapses under velocity.
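Zero drift boils down to a reconcile loop: compare what is running against what was approved, and close the gap automatically. The sketch below shows that loop over plain dictionaries; the resource names and settings are invented, and a real system would apply changes through its provisioning tooling instead of mutating a dict.

```python
def find_drift(
    desired: dict[str, dict], actual: dict[str, dict]
) -> dict[str, dict]:
    """Map each drifted resource to {setting: (desired value, actual value)}."""
    drift = {}
    for name, want in desired.items():
        have = actual.get(name, {})
        changed = {
            key: (value, have.get(key))
            for key, value in want.items()
            if have.get(key) != value
        }
        if changed:
            drift[name] = changed
    return drift


def reconcile(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, dict]:
    """Bring drifted settings back to their desired values."""
    for name, changed in find_drift(desired, actual).items():
        fixes = {key: want for key, (want, _have) in changed.items()}
        actual.setdefault(name, {}).update(fixes)
    return actual


# Hypothetical desired and observed state.
desired_state = {"orders-db": {"instance_type": "db.t3.medium", "auto_stop": True}}
actual_state = {"orders-db": {"instance_type": "db.r6g.4xlarge", "auto_stop": True}}

print(find_drift(desired_state, actual_state))
```

Running the comparison on a schedule, and reconciling or alerting on any non-empty result, is what takes humans out of the control plane.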

Tagging: Necessary, Painful… and Still a Common Failure Mode

Let’s address the elephant in the room: visibility.

If you can’t reliably answer “who is spending what, and why?” you can’t run FinOps at scale.

And yet, even in large organizations, tagging quality is frequently the weak link. Many companies can’t attribute the vast majority of spend with high confidence.

That’s not just an administrative issue—it’s a blocker for automation.

You can’t automate decisions against spend you can’t confidently attribute.

The takeaway is simple:

Treat attribution as foundational, but don’t stop there. Mature FinOps doesn’t end at “better tags.” It moves toward system-enforced governance and workload-level controls that reduce dependence on perfect tagging for every single decision.
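One way to make "can we attribute this spend?" measurable is to track the share of cost that carries every required tag. The sketch below assumes a simple list of billing line items. The tag keys in `REQUIRED_TAGS` and the sample data are hypothetical, not a real billing export format.

```python
# Hypothetical tag keys an organization might require on every resource.
REQUIRED_TAGS = ("team", "service", "environment")


def attribution_coverage(line_items):
    """Fraction of total spend whose line items carry every required tag."""
    total = sum(item["cost"] for item in line_items)
    if total == 0:
        return 0.0
    attributed = sum(
        item["cost"]
        for item in line_items
        if all(item.get("tags", {}).get(key) for key in REQUIRED_TAGS)
    )
    return attributed / total


# Illustrative data: only the first item is fully tagged.
line_items = [
    {"cost": 600.0, "tags": {"team": "payments", "service": "api", "environment": "prod"}},
    {"cost": 300.0, "tags": {"team": "search", "environment": "prod"}},
    {"cost": 100.0, "tags": {}},
]
coverage = attribution_coverage(line_items)
print(f"attributed spend: {coverage:.0%}")
```

A number like this gives teams a concrete target for attribution quality before they automate decisions on top of it.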

The Pivot: From Savings to Unit Economics (and Business Value)

Most teams eventually hit diminishing returns on classic savings levers:

- Reserved instances / savings plans

- basic rightsizing

- cleaning up idle resources

- non-prod stopping

- commitment discounts

At some point, you’ve harvested the low-hanging fruit.

The next question becomes:

How do we define—and improve—the value of every cloud dollar going forward?

That’s where unit economics comes in.

Instead of asking “How much did we save?” you ask:

- What does it cost per customer?

- Per transaction?

- Per workload?

- Per feature, environment, or product line?

This reframes cloud cost reduction strategy work from “cost cutting” to “value engineering.”

And it’s one of the clearest signals that your cloud cost optimization strategy has matured.
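A small worked example shows why the reframing matters. In the sketch below, the figures are made up for illustration: total spend rises month over month, yet cost per transaction falls, which a raw-spend view would report as a regression.

```python
def unit_cost(total_cost, units):
    """Cost per unit of business output (customer, transaction, workload...)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_cost / units


# Illustrative numbers only, not real customer data.
months = [
    {"month": "Jan", "spend": 100_000, "transactions": 4_000_000},
    {"month": "Feb", "spend": 120_000, "transactions": 6_000_000},
]
for m in months:
    m["cost_per_transaction"] = unit_cost(m["spend"], m["transactions"])
    print(f'{m["month"]}: spend ${m["spend"]:,} -> ${m["cost_per_transaction"]:.4f} per transaction')
```

Here spend grows by 20 percent while cost per transaction drops from $0.025 to $0.02, so the business is getting more value from each cloud dollar.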

How Harness Cloud Cost Management Approaches This Problem

Harness Cloud Cost Management is built around the premise that cost optimization happens in the engineering workflow, not after it.

Instead of treating cost management as a separate finance function, it integrates cost visibility and governance directly into CI/CD pipelines, infrastructure provisioning workflows, and day-to-day development processes.

Cost Visibility Across Your Entire Cloud Estate

Harness provides unified cost visibility across AWS, Azure, GCP, and Kubernetes clusters, with automatic allocation by team, service, environment, and business unit.

You get real-time dashboards showing exactly where spend is happening, down to individual workloads and namespaces.

Cost anomaly detection highlights unexpected changes automatically, with alerts routed directly to responsible engineering teams.

This supports both showback and chargeback models—without creating manual reporting overhead. Teams see their spend in real time, not weeks after the invoice closes.
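To give a feel for what anomaly detection on spend involves, here is a deliberately simple sketch that flags a day whose cost is far above the recent average. It is an assumption-laden illustration, not how Harness detects anomalies: the z-score approach, the `threshold` default, and the sample history are all hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's spend if it sits more than `threshold` standard
    deviations above the mean of the historical daily spend."""
    if len(history) < 2:
        return False  # not enough data to estimate variation
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu
    return (today - mu) / sigma > threshold


# Illustrative daily spend for one workload over the past week.
history = [100, 102, 98, 101, 99, 100, 103]
print(is_anomalous(history, 140))  # large jump
print(is_anomalous(history, 102))  # normal variation
```

Production systems typically account for seasonality and trends as well, but the principle is the same: compare current spend with an expected range and alert the owning team when it falls outside.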

In-Workflow Cost Governance

Where Harness differs from traditional tools is how governance works.

Cost policies are enforced directly in CI/CD pipelines and infrastructure-as-code workflows.

Before a Terraform plan applies, Harness evaluates estimated costs against defined budgets and thresholds. If a deployment would exceed limits, the pipeline fails with clear feedback on what needs to change.

This creates a natural feedback loop where engineers see cost impacts immediately—while they still have full context on the infrastructure decisions being made.

It prevents expensive mistakes from reaching production rather than identifying them later through reporting.
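The gating logic described above can be sketched in a few lines. This is a generic illustration of a pre-apply budget check, not the Harness policy engine or its API: the `cost_gate` function, the `GateResult` type, and the dollar figures are hypothetical, and the estimated cost delta is assumed to come from whatever cost-estimation step runs against the plan.

```python
from dataclasses import dataclass


@dataclass
class GateResult:
    passed: bool
    message: str


def cost_gate(current_monthly, estimated_delta, monthly_budget):
    """Compare projected monthly cost after a change against a budget."""
    projected = current_monthly + estimated_delta
    if projected > monthly_budget:
        over = projected - monthly_budget
        return GateResult(
            False,
            f"Projected ${projected:,.2f}/mo exceeds budget "
            f"${monthly_budget:,.2f} by ${over:,.2f}",
        )
    return GateResult(
        True,
        f"Projected ${projected:,.2f}/mo is within budget ${monthly_budget:,.2f}",
    )


# Illustrative values: a change that would push spend over budget.
result = cost_gate(current_monthly=9_000, estimated_delta=1_500, monthly_budget=10_000)
print("PASS" if result.passed else "FAIL", "-", result.message)
```

In a pipeline, a failing result would stop the apply step and show the message to the engineer who proposed the change, while the context is still fresh.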

Harness also supports automated optimization recommendations, including:

- rightsizing suggestions

- idle resource cleanup

- non-prod stopping automation

- commitment-based discount opportunities

Teams can implement these recommendations directly through the same pipelines they use for regular infrastructure changes.

Built for Engineering-Led Cost Optimization

Harness treats cloud cost management as an engineering problem, not a finance problem.

The platform integrates with existing tools (GitHub, GitLab, Jira, Slack) and workflows (Terraform, CloudFormation, Kubernetes) rather than requiring separate processes.

Engineers interact with cost data in the same interfaces they already use for infrastructure management.

Policy enforcement is flexible but opinionated:

Default guardrails prevent common waste patterns (idle resources, oversized instances, untagged infrastructure) while allowing teams to define exceptions for legitimate use cases.

The goal is to make cost-efficient choices the path of least resistance—not to create approval bottlenecks.

For organizations managing cost at scale, Harness supports advanced workflows like:

- environment-based budgets (dev/staging/production)

- cost allocation hierarchies (business units, products, teams)

- integration with business metrics for cost-per-transaction analysis

Fixing Your Cloud Cost Optimization Approach

If your current cloud cost optimization strategy feels broken, you’re probably optimizing the wrong thing.

Cost visibility and allocation are necessary, but they’re not sufficient.

Real cost control happens when engineers see cost impacts before deployment, not when finance reviews invoices after.

A working cost optimization framework:

- embeds cost awareness directly into CI/CD and IaC workflows

- combines proactive guardrails with real-time visibility

- uses automation to prevent drift

- measures success by cost efficiency and unit economics—not just raw spend reduction

Reactive cloud spending optimization scales poorly in high-velocity engineering environments.

Proactive cloud cost governance scales effortlessly.

Ready to shift cost controls left? Start with Harness Cloud Cost Management and see what engineering-native cost optimization looks like in practice.

Want To Go Deeper?

Watch our webinar, “Cloud Cost Optimization Isn't Broken: The Approach Is,” to learn more.

Learn more about how Harness Cloud Cost Management works or explore the CCM documentation.

Engineering Metrics Success: Communicate Speed, Quality, and Business Outcomes

Engineering organizations are waking up to something that used to be optional: measurement.

Not vanity dashboards. Not a quarterly “engineering metrics review” that no one prepares for. Real measurement that connects delivery speed, quality, and reliability to business outcomes and decision-making.

That shift is a good sign. It means engineering leaders are taking the craft seriously.

But there are two patterns I keep seeing across the industry that turn this good intention into a slow-motion failure. Both patterns look reasonable on paper. Both patterns are expensive. And both patterns lead to the same outcome: a metrics tool becomes shelfware, trust erodes, and leaders walk away thinking, “Metrics do not work here.”

Engineering metrics do work. But only when leaders use them the right way, for the right purpose, with the right operating rhythm.

Here are the two patterns, and how to address them.

Pattern #1: “We bought the tool, gave it to leaders, and expected behavior to change”

This is the silent killer.

An engineering executive buys a measurement platform and rolls it out to directors and managers with a message like: “Now you’ll have visibility. Use this to improve.”

Then the executive who sponsored the initiative rarely uses the tool themselves.

No consistent review cadence. No decisions being made with the data. No visible examples of metrics guiding priorities. No executive-level questions that force a new standard of clarity.

What happens next is predictable.

Managers and directors conclude that engineering metrics are optional. They might log in at first. They might explore the dashboards. But soon the tool becomes “another thing” competing with real work. And because leadership is not driving the behavior, the culture defaults to the old way: opinions, anecdotes, and local optimization.

If leaders are not driving direction with data, why would managers choose to?

This is not a tooling problem. It is a leadership ownership problem.

What to do instead: make metrics executive-owned, not manager-assigned

If measurement is important, the most senior leaders must model it.

That does not mean micromanaging teams through numbers. It means creating a clear expectation that engineering metrics are part of how the organization thinks, communicates, and makes decisions.

Here is what executive ownership looks like in practice:

- The executive sponsor uses the tool publicly. In staff meetings, in reviews, in planning, in post-incident discussions.

- Metrics show up in decision moments. Prioritization, investment tradeoffs, risk calls, capacity conversations.

- Leaders ask better questions because they have data. Not “Why are you slow?” but “What is slowing you down, and what would move it?”

- A consistent cadence exists. Not random dashboard reviews. A repeatable operating rhythm.

When executives do this, managers follow. Not because they are told to, but because the organization has made measurement real.

Pattern #2: “Buying a measurement tool will fix our engineering problems”

This is the other trap, and it is even more common.

There is a false belief that if an organization has DORA metrics, improvements in throughput and quality will automatically follow. Like measurement itself is the intervention.

But measurement does not create performance. It reveals performance.

A tool can tell you:

- how long changes take to reach production

- how often you deploy

- how frequently you experience failure

- how quickly you recover

Those are powerful signals. But they do not change anything on their own.

If the system that produces those numbers stays the same, the numbers stay the same.

This is why organizations buy tools, instrument everything, and still feel stuck. They measured the pain, but never built the discipline to diagnose and treat the cause.
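For readers who want to see what those four signals look like as numbers, here is a minimal sketch that derives them from a list of deployment records. The `Deployment` record shape and the sample data are hypothetical. Real definitions (what counts as a deployment, a failure, or a recovery) vary by organization.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta):
    return delta.total_seconds() / 3600


def dora_summary(deployments, window_days):
    """Summarize lead time, deploy frequency, failure rate, and recovery time."""
    if not deployments or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    lead_times = [hours(d.deployed_at - d.committed_at) for d in deployments]
    failures = [d for d in deployments if d.failed]
    restores = [hours(d.restored_at - d.deployed_at) for d in failures if d.restored_at]
    return {
        "median_lead_time_hours": median(lead_times),
        "deploys_per_day": len(deployments) / window_days,
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore_hours": median(restores) if restores else None,
    }


# Illustrative week of deployments.
deployments = [
    Deployment(datetime(2026, 1, 1, 9), datetime(2026, 1, 1, 13)),
    Deployment(datetime(2026, 1, 2, 9), datetime(2026, 1, 2, 15),
               failed=True, restored_at=datetime(2026, 1, 2, 16)),
    Deployment(datetime(2026, 1, 3, 10), datetime(2026, 1, 3, 12)),
    Deployment(datetime(2026, 1, 5, 8), datetime(2026, 1, 5, 16)),
]
summary = dora_summary(deployments, window_days=7)
print(summary)
```

The calculation is the easy part. As the section argues, the numbers only move when the system that produces them changes.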

What to do instead: treat engineering metrics as instrumentation, not transformation

If you want metrics to lead to improvement, you need two things:

- Clear definitions and shared understanding

- A metrics practice that turns numbers into decisions and experiments

Without definitions, metrics turn into arguments. Everyone interprets the same number differently, then stops trusting the system.

Without a practice, metrics turn into observation. You notice, you nod, then you go back to work.

The purpose of measurement is not to create pressure. It is to create clarity. Clarity about where the system is constrained, what tradeoffs you are making, and whether your interventions actually helped.

The real goal: measure change, not teams

Here is the shift that unlocks everything:

The goal is not to measure engineers.

The goal is to measure the system.

More specifically, the goal is to prove whether a change you made actually improved outcomes.

A change could be:

- a tooling change

- a process change

- a policy change

- a staffing or org change

- a reliability investment

- a platform improvement

- a CI/CD modernization effort

If you cannot measure movement after you make a change, you are operating on opinions and hope.

If you can measure movement, you can run engineering like a disciplined improvement engine.

This is where DORA metrics become extremely valuable, when they are used as confirmation and learning, not as a scoreboard.

Engineering metrics should confirm reality, not replace judgment

The best leaders I have worked with do not hand leadership over to dashboards. They use metrics as confirmation of what they already sense, and as a way to test assumptions.

- “We believe code reviews are a bottleneck. Do we see it in cycle time breakdowns?”

- “We believe flaky tests are slowing delivery. Do we see increased rework or longer lead time?”

- “We believe incident recovery is too manual. Do we see MTTR improve after automation?”

- “We believe our deployment process is too risky. Does change failure rate drop after we change release strategy?”

That is the role of measurement. It turns gut feel into validated understanding, then turns interventions into provable outcomes.

A practical operating model that works

If you want measurement to drive real improvement, here is a straightforward structure that scales.

1) Define what “good” means in your context

Use DORA as a baseline, but make definitions explicit:

- What counts as a deployment?

- What counts as a production failure?

- How do you define lead time?

- How do you define recovery?

This prevents endless debates and keeps the organization aligned.

2) Establish a simple cadence

You do not need a heavy process. You need consistency.

A strong starting point:

- Weekly: team-level review of flow and reliability signals, focused on removing friction

- Monthly: leadership review focused on trend movement, constraints, and investments

- Quarterly: strategic review to decide where to focus improvement efforts next

3) Pair every metric with a lever

A metric without a lever becomes a complaint.

Examples:

- If lead time is high, what levers do you pull?

- reduce batch size, improve trunk-based practices, improve test speed, remove manual approvals

- If change failure rate is high, what levers do you pull?

- improve testing strategy, release safety patterns, observability, rollback mechanisms

- If MTTR is high, what levers do you pull?

- better alerting, runbooks, ownership clarity, automated remediation, incident practices

4) Run experiments and measure outcomes

This is the part most organizations skip.

Pick one change. Implement it. Measure before and after. Learn. Repeat.

Improvement becomes a system, not a motivational speech.
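A before-and-after comparison can be as simple as the sketch below, which compares the median of a metric across two periods. The lead-time samples are invented for illustration, and a median comparison is only a first look, not a statistical test.

```python
from statistics import median


def measure_change(before, after):
    """Compare the median of a metric before and after an intervention."""
    b, a = median(before), median(after)
    return {"before": b, "after": a, "relative_change": (a - b) / b}


# Illustrative lead times in hours, before and after removing a manual approval.
lead_time_before = [30, 42, 36, 48, 40]
lead_time_after = [28, 30, 34, 26, 32]

outcome = measure_change(lead_time_before, lead_time_after)
print(f'median lead time: {outcome["before"]}h -> {outcome["after"]}h '
      f'({outcome["relative_change"]:+.0%})')
```

Keeping the comparison tied to one change at a time makes it much easier to attribute the movement to the intervention rather than to noise.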

5) Make leaders the model

This brings us back to Pattern #1.

If executives use the tool and drive decisions with it, measurement becomes real. If they do not, the tool becomes optional, and optional always loses.

Where the best organizations land

The organizations that do this well eventually stop talking about “metrics adoption.” They talk about “how we run the business.”

Measurement becomes part of how engineering communicates with leadership, how priorities get set, how teams remove friction, and how investment decisions are made.

And the biggest shift is this: They stop expecting a measurement tool to fix problems. They use measurement to prove that the problems are being fixed.

That is the point. Not dashboards, not reporting, not performance theater: Clarity, decisions, experiments, and outcomes.

In the end, measurement is not the transformation. It is the instrument panel that tells you whether your transformation is working.

From Chaos Engineering to Resilience Testing: Why We’re Expanding How Teams Validate Reliability

At Harness, we’re committed to helping teams build and deliver software that doesn’t just work – it thrives under pressure, scales reliably, and recovers swiftly from the unexpected. Today, we’re taking the next step in that mission by evolving our Chaos Engineering module into Resilience Testing.

This evolution reflects how reliability is tested in practice today. While Chaos Engineering has long been a powerful way to proactively identify weaknesses through controlled fault injection, many teams – SREs, platform engineers, performance specialists, and DevOps leaders – are already validating resilience across the same workflows:

- How systems behave when dependencies fail

- How services perform under sustained load

- How infrastructure and applications recover during real outages

Resilience Testing brings these efforts together into a single, continuous approach.

Built On Open Source and Real Systems

My work in Chaos Engineering started with a simple goal: make resilience testing practical for real-world systems. Before that, I spent years building foundational cloud-native infrastructure at places like CloudByte and MayaData, and I kept coming back to the same lesson: you learn fastest when you build in the open and stay close to production users.

Before joining Harness, my team and I created LitmusChaos to help teams running Kubernetes understand how their systems actually behave under failure. What began as an open source project grew into one of the most widely adopted chaos engineering projects in the CNCF, used by organizations testing real production environments.

When Harness acquired Chaos Native in 2022, it was clear we shared the same belief: chaos engineering shouldn’t be a standalone activity. It belongs inside the software delivery lifecycle. We then donated LitmusChaos to the CNCF, and Harness continues to actively maintain and contribute to the project today.

That combination of open source leadership and enterprise integration has directly shaped how chaos engineering evolved inside Harness.

How Chaos Engineering Expanded in Practice

Over the past four years, teams using Chaos Engineering pushed beyond isolated experiments toward broader resilience workflows.

What mattered most wasn’t injecting failures – it was understanding what to test, when to test, and how to learn continuously. That led to deeper capabilities around service and dependency discovery, targeted risk testing, monitoring-driven validation, automated gamedays, and AI-assisted recommendations.

As software delivery has become more automated and increasingly AI-assisted, these same principles naturally extended beyond chaos engineering alone.

Introducing Resilience Testing

Today, we’re launching Resilience Testing, with new Load Testing and Disaster Recovery Testing capabilities built on top of our Chaos Engineering foundation.

Resilience Testing brings together three core areas:

- Chaos Engineering to validate failure handling and recovery

- Load Testing to understand behavior under scale and stress

- Disaster Recovery Testing to prove readiness for real outages

These capabilities are unified through automation and AI-driven insights, helping teams prioritize risk, improve coverage, and continuously validate resilience as systems evolve.

Chaos Engineering gave us a strong foundation, and Resilience Testing is the broader practice teams have been building toward as systems and workflows evolve.

A Milestone Shaped By Community

This evolution follows years of collaboration with the broader resilience engineering community, including Chaos Carnival, now in its sixth year, which brings together thousands of engineers sharing real lessons from production systems.

As systems grow more dynamic and AI-driven, resilience testing must move beyond periodic checks toward continuous, intelligent validation. Resilience Testing is designed for that reality, and it reflects what we’ve learned building, operating, and scaling real systems over time.

Ready to expand beyond chaos experiments? Talk to your Harness representative to enable the new capabilities, or book a demo with our team to explore the right rollout for your environment.

Harness AI February 2026 Updates: Securing & Making the SDLC Reliable and Shipping Faster with Agents

February is all about making AI in software delivery secure and easier to operate at scale. This month’s updates span enterprise-grade application security, API security via MCP, SRE automation, and a major upgrade to the DevOps Agent.

Bring API Security Intelligence Directly into Your AI Workflows

Harness’s WAAP Public MCP Server is now Generally Available, letting teams query API security data in natural language from popular AI environments such as VS Code, Cursor, and Claude Desktop. Teams can pull in insights across API discovery, inventory, risk, vulnerabilities, remediation, and runtime protection, and then blend that data with internal sources inside custom AI workflows.

This brings API security context directly into daily developer and security workflows, rather than locking it behind dashboards. By putting WAAP data behind an MCP server, organizations can enable richer, real-time AI-driven security analysis and make API telemetry usable in the same AI agents and copilots developers already rely on.

Looking ahead, WAAP tools will also be integrated with Harness AI, enabling joint customers to access capabilities through the Harness MCP server. The goal is a unified MCP experience where security and delivery agents can reason over the same API security data without brittle, one-off integrations.

Shift-Left Security That Actually Prioritizes What Matters

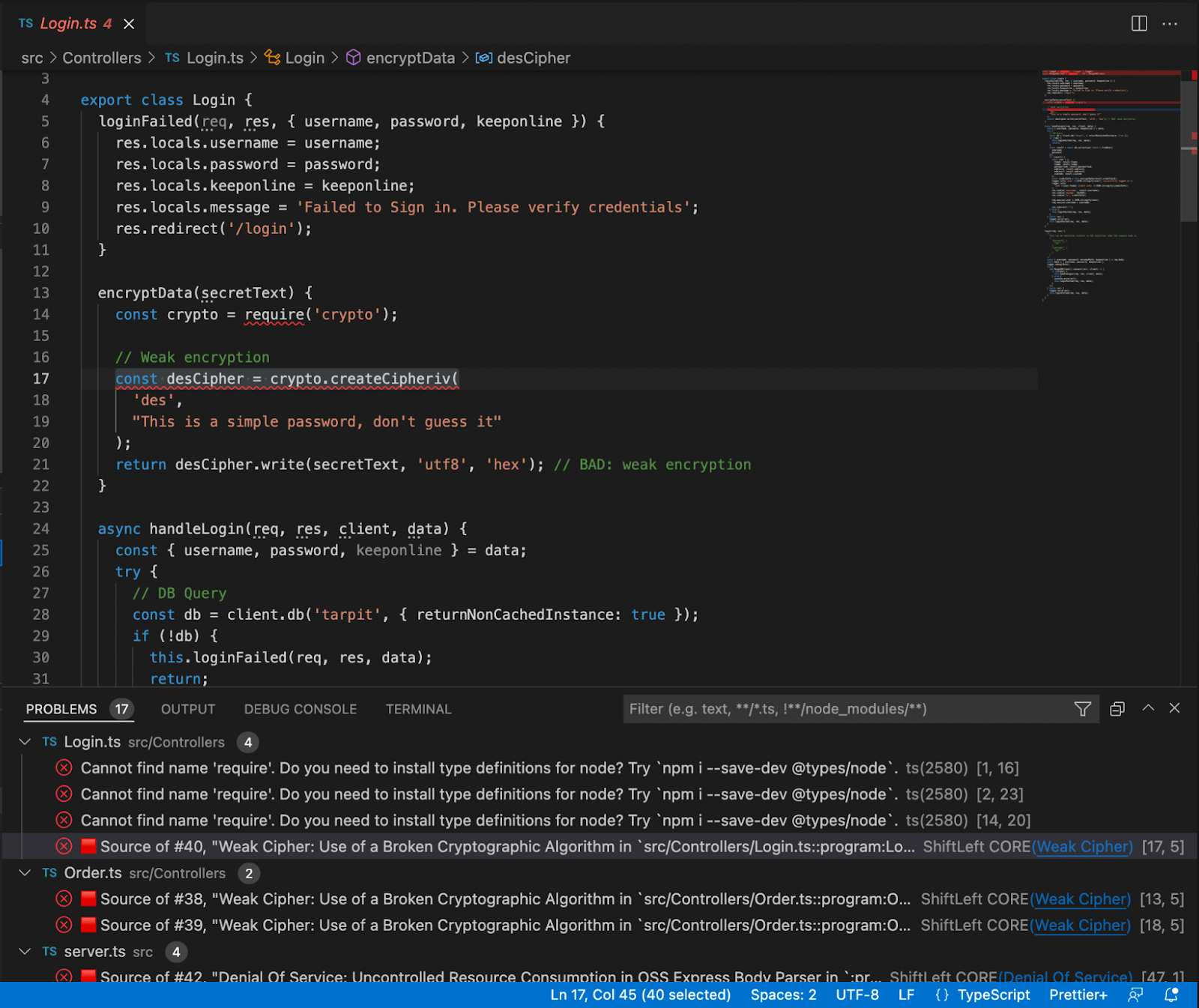

Harness SAST and SCA are now Generally Available as native security scanners within Security Testing Orchestration (STO), delivering AI-powered static analysis and software composition analysis right where AI agents and coding copilots generate code—in your pipelines. In the era of agentic coding, where AI autonomously writes, iterates, and deploys code at unprecedented speed, SAST scans repositories for security issues, hard-coded secrets, and vulnerable open-source libraries, while SCA analyzes container images for vulnerable OS packages and libraries, all with static reachability-based prioritization to cut through AI-amplified noise.

Onboarding is intentionally minimal: Harness automatically detects repositories and manages scanner hosting and licensing, including a 45-day free trial for existing STO customers. Within pipelines, SAST and SCA are available as native steps with auto repo detection, generate SBOMs for application and container dependencies, and surface results centrally for security and dev teams.

What sets this release apart is reachability-based prioritization and AI-assisted remediation, perfectly tuned for AI-driven workflows. Vulnerabilities are ranked based on whether they’re actually reachable from application code, helping teams focus on truly exploitable issues instead of noisy findings from rapid AI code gen, and AI-generated fixes can automatically open pull requests to accelerate remediation.
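The ranking idea can be illustrated with a short sketch: findings that are reachable from application code sort ahead of those that are not, with severity as the tiebreaker. This is a simplified assumption about how such prioritization could work, not the actual Harness algorithm. The finding IDs, the `reachable` flag, and the severity scale are hypothetical.

```python
# Lower rank sorts first.
SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}


def prioritize(findings):
    """Order findings so reachable ones come first, then by severity."""
    return sorted(
        findings,
        key=lambda f: (not f["reachable"], SEVERITY_ORDER[f["severity"]]),
    )


# Illustrative findings: the critical one is in code that is never called.
findings = [
    {"id": "FINDING-A", "severity": "critical", "reachable": False},
    {"id": "FINDING-B", "severity": "high", "reachable": True},
    {"id": "FINDING-C", "severity": "medium", "reachable": True},
]
for f in prioritize(findings):
    print(f["id"], f["severity"], "reachable" if f["reachable"] else "unreachable")
```

Note that the unreachable critical finding is deprioritized rather than discarded, so it still appears in the backlog.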

Incident Runbooks That Understand Your Jira Schema

In AI SRE, the Jira integration for runbooks has been rebuilt to support dynamic fields for both Create Jira Ticket and Update Jira Issue actions. When builders select a project and issue type, the runbook step now automatically loads the exact fields required by that Jira workflow, including custom fields, labels, and multi-select values.

This eliminates guesswork around field names and internal Jira schema details, and greatly reduces broken automations caused by missing or misconfigured required fields. For more advanced runbooks where the issue type is determined at runtime, a key-value mode lets builders set any Jira field directly while still benefiting from built-in validation that catches broken URLs and missing required fields before execution.

You can use the new Jira experience today by adding the updated Create or Update Jira actions to any AI SRE runbook. It’s particularly useful for complex incident workflows where different incident types must map cleanly to different Jira projects and issue types without manual rework.

A Smarter, Faster DevOps Agent for Enterprise-Scale Pipelines

The DevOps Agent has received a major upgrade and is now powered by an Opus 4.5 foundation model. In our internal testing, the new model improved speed, context retention, and overall pipeline generation accuracy, particularly for large, highly templated enterprise pipelines.

Teams will see faster response times, higher-quality YAML generation, and better handling of longer, more complex pipelines. This upgrade has been validated against complex pipelines. Enhanced template awareness also means the agent is better at reusing existing templates and making high-fidelity updates to existing pipelines, reducing the amount of manual cleanup after AI-generated changes.

The upgraded DevOps Agent is rolling out to our customers soon and will be available directly in the AI Chat experience, with no configuration changes required. This is especially impactful for large enterprises running deeply nested template hierarchies, where context management and accuracy are critical for safe automation.

Escaping the AI Velocity Paradox

These February updates directly tackle the AI Velocity Paradox: where AI coding tools accelerate code generation but create downstream bottlenecks in testing, security, deployment, and observability that erase those gains. By providing reachability-aware SAST/SCA to secure agentic code without slowing pipelines, MCP-powered API security for contextual risk analysis, smarter SRE runbooks for resilient operations, and an upgraded DevOps Agent for complex pipeline automation, Harness extends AI intelligence across the full software delivery lifecycle. The result? Teams ship faster, safer software without the fragility of fragmented tools or unproven hype, turning AI potential into measurable business velocity.

Reimagining Artifact Management for DevSecOps: Harness Artifact Registry GA

Today, Harness is announcing the General Availability of Artifact Registry, a milestone that marks more than a new product release. It represents a deliberate shift in how artifact management should work in secure software delivery.

For years, teams have accepted a strange reality: you build in one system, deploy in another, and manage artifacts somewhere else entirely. CI/CD pipelines run in one place, artifacts live in a third-party registry, and security scans happen downstream. When developers need to publish, pull, or debug an artifact, they leave their pipelines, log into another tool, and return to finish their work.

It works, but it’s fragmented, expensive, and increasingly difficult to govern and secure.

At Harness, we believe artifact management belongs inside the platform where software is built and delivered. That belief led to Harness Artifact Registry.

A Startup Inside Harness

Artifact Registry started as a small, high-ownership bet inside Harness: a dedicated team with a clear thesis that artifact management shouldn’t be a separate system developers have to leave their pipelines to use. We treated it like a seed startup inside the company, moving fast with direct customer feedback and a single-threaded leader driving the vision. The message from enterprise teams was consistent: they didn’t want to stitch together separate tools for artifact storage, open source dependency security, and vulnerability scanning.

So we built it that way.

In just over a year, Artifact Registry moved from concept to core product. What started with a single design partner expanded to double-digit enterprise customers pre-GA – the kind of pull-through adoption that signals we've identified a critical gap in the DevOps toolchain.

Today, Artifact Registry supports a broad range of container formats, package ecosystems, and AI artifacts, including Docker, Helm (OCI), Python, npm, Go, NuGet, Dart, Conda, and more, with additional support on the way. Enterprise teams are standardizing on it across CI pipelines, reducing registry sprawl, and eliminating the friction of managing diverse artifacts outside their delivery workflows.

One early enterprise customer, Drax Group, consolidated multiple container and package types into Harness Artifact Registry and achieved 100 percent adoption across teams after standardizing on the platform.

As their Head of Software Engineering put it:

"Harness is helping us achieve a single source of truth for all artifact types containerized and non-containerized alike making sure every piece of software is verified before it reaches production." - Jasper van Rijn

Why This Matters: The Registry as a Control Point

In modern DevSecOps environments, artifacts sit at the center of delivery. Builds generate them, deployments promote them, rollbacks depend on them, and governance decisions attach to them. Yet registries have traditionally operated as external storage systems, disconnected from CI/CD orchestration and policy enforcement.

That separation no longer holds up against today’s threat landscape.

Software supply chain attacks are more frequent and more sophisticated. The SolarWinds breach showed how malicious code embedded in trusted update binaries can infiltrate thousands of organizations. More recently, the Shai-Hulud 2.0 campaign compromised hundreds of npm packages and spread automatically across tens of thousands of downstream repositories.

These incidents reveal an important business reality: risk often enters early in the software lifecycle, embedded in third-party components and artifacts long before a product reaches customers. When artifact storage, open source governance, and security scanning are managed in separate systems, oversight becomes fragmented. Controls are applied after the fact, visibility is incomplete, and teams operate in silos. The result is slower response times, higher operational costs, and increased exposure.

We saw an opportunity to simplify and strengthen this model.

By embedding artifact management directly into the Harness platform, the registry becomes a built-in control point within the delivery lifecycle. RBAC, audit logging, replication, quotas, scanning, and policy enforcement operate inside the same platform where pipelines run. Instead of stitching together siloed systems, teams manage artifacts alongside builds, deployments, and security workflows. The outcome is streamlined operations, clearer accountability, and proactive risk management applied at the earliest possible stage rather than after issues surface.

Introducing Dependency Firewall: Blocking Risk at Ingest

Security is one of the clearest examples of why registry-native governance matters.

Artifact Registry delivers this through Dependency Firewall, a registry-level enforcement control applied at dependency ingest. Rather than relying on downstream CI scans after a package has already entered a build, Dependency Firewall evaluates dependency requests in real time as artifacts enter the registry. Policies can automatically block components with known CVEs, license violations, excessive severity thresholds, or untrusted upstream sources before they are cached or consumed by pipelines.

Artifact quarantine extends this model by automatically isolating artifacts that fail vulnerability or compliance checks. If an artifact does not meet defined policy requirements, it cannot be downloaded, promoted, or deployed until the issue is addressed. All quarantine and release actions are governed by role-based access controls and fully auditable, ensuring transparency and accountability. Built-in scanning powered by Aqua Trivy, combined with integrations across more than 40 security tools in Harness, feeds results directly into policy evaluation. This allows organizations to automate release or quarantine decisions in real time, reducing manual intervention while strengthening control at the artifact boundary.

The result is a registry that functions as an active supply chain control point, enforcing governance at the artifact boundary and reducing risk before it propagates downstream.
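To make ingest-time enforcement concrete, the sketch below evaluates a dependency request against a small policy before the package would be cached. It is a conceptual illustration only and does not reflect the Dependency Firewall policy language or API: the `Policy` and `PackageRequest` types, the field names, and the example package names and values are all hypothetical.

```python
from dataclasses import dataclass

SEVERITY_RANK = {"none": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class Policy:
    max_severity: str = "medium"  # highest CVE severity allowed through
    blocked_licenses: frozenset = frozenset({"AGPL-3.0"})
    trusted_upstreams: frozenset = frozenset({"registry.npmjs.org", "pypi.org"})


@dataclass(frozen=True)
class PackageRequest:
    name: str
    version: str
    upstream: str
    license: str
    highest_cve_severity: str = "none"


def evaluate(request, policy):
    """Return (allowed, reasons) for a dependency request at ingest."""
    reasons = []
    if request.upstream not in policy.trusted_upstreams:
        reasons.append(f"untrusted upstream: {request.upstream}")
    if request.license in policy.blocked_licenses:
        reasons.append(f"blocked license: {request.license}")
    if SEVERITY_RANK[request.highest_cve_severity] > SEVERITY_RANK[policy.max_severity]:
        reasons.append(f"CVE severity {request.highest_cve_severity} exceeds {policy.max_severity}")
    return (not reasons, reasons)


policy = Policy()
clean = PackageRequest("example-lib", "1.2.0", "pypi.org", "MIT")
risky = PackageRequest("example-tool", "0.9.1", "mirror.example.com", "MIT", "critical")

print(evaluate(clean, policy))
print(evaluate(risky, policy))
```

The key design point is where the check runs: because the decision happens before the artifact is cached, a blocked package never becomes available to downstream pipelines.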

The Future of Artifact Management Is Here

General Availability signals that Artifact Registry is now a core pillar of the Harness platform. Over the past year, we’ve hardened performance, expanded artifact format support, scaled multi-region replication, and refined enterprise-grade controls. Customers are running high-throughput CI pipelines against it in production environments, and internal Harness teams rely on it daily.

We’re continuing to invest in:

- Expanded package ecosystem support

- Advanced lifecycle management, immutability, and auditing

- Deeper integration with Harness Security and the Internal Developer Portal

- AI-powered agents for OSS governance, lifecycle automation, and artifact intelligence

Modern software delivery demands clear control over how software is built, secured, and distributed. As supply chain threats increase and delivery velocity accelerates, organizations need earlier visibility and enforcement without introducing new friction or operational complexity.

We invite you to sign up for a demo and see firsthand how Harness Artifact Registry delivers high-performance artifact distribution with built-in security and governance at scale.

From Chef to Chief Architect: Navigating the Intersection of AI and Data Security


Managing a global army of 450+ developers, Devan Shah has seen the "DevOps to DevSecOps to AI" evolution firsthand. Here are the core AI data security insights from his conversation on ShipTalk.

1. The Bedrock: Scaling to 450+ Developers

IBM’s internal "OnePipeline" isn't just a convenience; it’s a necessity. Built on Tekton (CI) and Argo CD, the platform has become the standard for both SaaS and on-prem deliveries.

- The "Speed vs. Value" Trade-off: During the migration from Travis CI to Tekton, build times initially jumped from 10 minutes to 30. However, the team realized this wasn't inefficiency—it was automated maturity. The extra time accounted for mandatory static, dynamic, and open source scanning.

- The Result: Audits for SOX, NIST, and ISO compliance changed from "scrambling for logs" to "pulling automated reports."

This transition highlights a growing industry trend: as code generation accelerates, the bottleneck shifts to delivery and security. This phenomenon, often called the AI Velocity Paradox, suggests that without downstream automation, upstream speed gains are often neutralized by manual security gates.

2. AI in the SDLC: "Bob" and the Rules of Engagement

IBM uses an internal AI coding agent called "Bob." But how do you ensure AI-generated code doesn't become technical debt?

"It’s not just about the code working; it’s about it being maintainable. If you don't provide context, the AI will build its own functions for JWT validation or encryption instead of using your existing, secure SDKs." — Devan Shah

To combat this, the team implements:

- Contextual Rules Files: Hard-coded guidelines that tell the AI which helper packages and security protocols to use.

- AI Code Reviews: Using LLMs to review PRs with specific guardrails, ensuring that "vibe coding" (quick POCs) is elevated to production-ready standards.

Quantifying the success of these initiatives is the next frontier. For organizations looking to move beyond "vibes" and toward hard data, the ebook Measuring the Impact of AI Development Tools offers a framework for tracking how these assistants actually affect cycle time and code quality.

3. AI Data Security: "Crown Jewels In, Crown Jewels Out"

We’ve all heard of "Garbage In, Garbage Out," but in the AI era, Devan warns of "Crown Jewels In, Crown Jewels Out." If you feed sensitive data or hard-coded secrets into an LLM training set, that model becomes a potential leak for attackers using sophisticated prompt injection.

The Rise of DSPM

Data Security Posture Management (DSPM) has emerged as a critical layer. It solves three major problems:

- Shadow Data Discovery: Finding where PII lives in unstructured formats (PDFs, logs, S3 buckets).

- Posture Verification: Identifying unencrypted buckets or public-facing databases.

- Data Flow Mapping: Detecting GDPR violations.

For a deeper technical look at how infrastructure must be architected to protect customer data in this environment, the Harness Data Security Whitepaper provides an excellent breakdown of security and privacy-by-design principles.

4. Architecting for the "Agentic" Future

We are moving beyond simple chatbots to Agentic Workflows—where AI agents talk to other agents and API endpoints.

- The Risk: An agent with "Full Admin" permissions could accidentally delete an entire cloud account if it misinterprets a prompt.

- The Solution: Architecting for Just-In-Time (JIT) token provisioning and Least Privilege access. Agents should only have the permissions they need for the exact second they are performing a task.
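As a rough illustration of the JIT and least-privilege idea above, the sketch below issues a short-lived token limited to named scopes and checks it before each action. `ScopedToken`, `issue_token`, `is_allowed`, and the scope strings are hypothetical; a real system would delegate this to its identity provider.

```python
import secrets
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class ScopedToken:
    value: str
    scopes: frozenset
    expires_at: float  # seconds on the monotonic clock


def issue_token(scopes, ttl_seconds=60.0, now=None):
    """Mint a token that carries only the requested scopes and a short lifetime."""
    now = time.monotonic() if now is None else now
    return ScopedToken(secrets.token_urlsafe(32), frozenset(scopes), now + ttl_seconds)


def is_allowed(token, action, now=None):
    """Allow an action only while the token is unexpired and the scope was granted."""
    now = time.monotonic() if now is None else now
    return now < token.expires_at and action in token.scopes


token = issue_token({"artifact:read"}, ttl_seconds=30)
print(is_allowed(token, "artifact:read"), is_allowed(token, "account:delete"))
```

Because the agent never holds a broad or long-lived credential, a misread prompt can do at most what the current task's scopes permit.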

5. The "No Jail" Architectural Principle

When asked how to balance speed with security, Devan's philosophy is simple: Identify the "Bare Minimum 15." You don't need a list of 300 compliance checks to start, but you do need 10 to 15 non-negotiables:

- Automated CI/CD security gates: Ensuring every piece of code—AI or human—passes the same rigorous checks.

- An inventory of every AI model used: To prevent "Shadow AI."

- Pre-approved weight and training data reviews: For off-the-shelf models.

Final Thoughts

Whether you are a startup or a global giant like IBM, the goal of software delivery remains the same: Ship fast, but stay out of legal trouble. By integrating AI data security guardrails and robust data protection directly into the pipeline, security stops being a "speed bump" and starts being a foundational feature.

Want to dive deeper? Connect with Devan Shah on LinkedIn to follow his latest work, and subscribe to the ShipTalk podcast for more insights on using AI for everything after code.

Open Source Liquibase MongoDB Native Executor by Harness

Harness Database DevOps is introducing an open source native MongoDB executor for Liquibase Community Edition. The goal is simple: make MongoDB workflows easier, fully open, and accessible for teams already relying on Liquibase without forcing them into paid add-ons.

This launch focuses on removing friction for open source users, improving MongoDB success rates, and contributing meaningful functionality back to the community.

Why Does Liquibase MongoDB Support Matter for Open Source Users?

Teams using MongoDB often already maintain scripts, migrations, and operational workflows. However, running them reliably through Liquibase Community Edition has historically required workarounds, limited integrations, or commercial extensions.

This native executor changes that. It allows teams to:

- Run existing MongoDB scripts directly through Liquibase Community Edition.

- Avoid rewriting database workflows just to fit tooling limitations.

- Keep migrations versioned and automated alongside application CI/CD.

- Stay within a fully open source ecosystem.

This is important because MongoDB adoption continues to grow across developer platforms, fintech, eCommerce, and internal tooling. Teams want consistency: application code, infrastructure, and databases should all move through the same automation pipelines. The executor helps bring MongoDB into that standardized DevOps model.

It also reflects a broader philosophy: core database capabilities should not sit behind paywalls when the community depends on them. By open-sourcing the executor, Harness is enabling developers to move faster while keeping the ecosystem transparent and collaborative.

Liquibase MongoDB Native Executor: What It Enables In Community Edition

With the native MongoDB executor:

- Liquibase Community can execute MongoDB scripts natively

- Teams can reuse existing operational scripts

- Database changes become traceable and repeatable

- Migration workflows align with CI/CD practices

This improves the success rate for MongoDB users adopting Liquibase because the workflow becomes familiar rather than forced. Instead of adapting MongoDB to fit the tool, the tool now works with MongoDB.

How To Install The Liquibase MongoDB Extension (Step-By-Step)

1. Getting started is straightforward. The Liquibase MongoDB extension is hosted on the HAR registry and can be downloaded with the following command:

curl -L \
"https://us-maven.pkg.dev/gar-prod-setup/harness-maven-public/io/harness/liquibase-mongodb-dbops-extension/1.1.0-4.24.0/liquibase-mongodb-dbops-extension-1.1.0-4.24.0.jar" \
-o liquibase-mongodb-dbops-extension-1.1.0-4.24.0.jar

2. Add the extension to Liquibase: Place the downloaded JAR file into the Liquibase library directory, for example "LIQUIBASE_HOME/lib/".

3. Configure Liquibase: Update the Liquibase configuration to point to the MongoDB connection and changelog files.

4. Run migrations: Use the "liquibase update" command and Liquibase Community will now execute MongoDB scripts using the native executor.

Generating MongoDB Changelogs From A Running Database

Migration adoption often stalls when teams lack a clean way to generate changelogs from an existing database. To address this, Harness is also sharing a Python utility that mirrors the behavior of "generate-changelog" for MongoDB environments.

The script:

- Connects to a live MongoDB instance

- Reads configuration and structure

- Produces a Liquibase-compatible changelog

- Helps teams transition from unmanaged MongoDB to versioned workflows

This reduces onboarding friction significantly. Instead of starting from scratch, teams can bootstrap changelogs directly from production-like environments. It bridges the gap between legacy MongoDB setups and modern database DevOps practices.
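The Harness utility itself is not shown in this post, so the following is only a sketch of the general idea: take a snapshot of collections and indexes and emit a Liquibase-style JSON changelog. The `build_changelog` function and the snapshot shape are hypothetical, and the `createCollection` and `createIndex` change names are an assumption borrowed from the community MongoDB extension; the real utility's output may differ. The snapshot is passed in as plain data here, where a real script would read it from a live instance through a MongoDB driver.

```python
import json


def build_changelog(collections, author="generated"):
    """Build a Liquibase-style JSON changelog from a snapshot of collections.

    `collections` maps a collection name to a list of index key dicts,
    for example {"users": [{"email": 1}]} (hypothetical snapshot shape).
    """
    change_sets = []
    for number, (name, indexes) in enumerate(sorted(collections.items()), start=1):
        # Assumed change names; verify against the extension you actually use.
        changes = [{"createCollection": {"collectionName": name}}]
        for keys in indexes:
            changes.append(
                {"createIndex": {"collectionName": name, "keys": json.dumps(keys)}}
            )
        change_sets.append(
            {"changeSet": {"id": f"baseline-{number}", "author": author, "changes": changes}}
        )
    return {"databaseChangeLog": change_sets}


snapshot = {"users": [{"email": 1}], "orders": []}
print(json.dumps(build_changelog(snapshot), indent=2))
```

Sorting collections by name keeps changeset ids stable between runs, which matters once the generated file is committed to version control.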

Why Is Harness Contributing This To Open Source?

The intent is not just to release a tool. The intent is to strengthen the open ecosystem.

Harness believes:

- Foundational database capabilities should remain accessible.

- Community users deserve production-ready tooling.

- Open contributions drive innovation faster than closed ecosystems.

By contributing a native MongoDB executor:

- Liquibase Community users gain real functionality.

- MongoDB adoption inside DevOps workflows becomes easier.

- The ecosystem remains open and collaborative.

- MongoDB users adopting Liquibase Community see a higher success rate.

This effort also reinforces Harness as an active open source contributor focused on solving real developer problems rather than monetizing basic functionality.

The Most Complete Open Source Liquibase MongoDB Integration Available Today

The native executor, combined with changelog generation support, provides:

- Script execution

- Migration automation

- Changelog creation from running environments

- CI/CD alignment

Together, these create one of the most functional open source MongoDB integrations available for Liquibase Community users. The objective is clear: make it the default path developers discover when searching for Liquibase MongoDB workflows.

Start Using and Contributing to Liquibase MongoDB Today

Discover the open-source MongoDB native executor. Teams can adopt it in their workflows, extend its capabilities, and contribute enhancements back to the project. Progress in database DevOps accelerates when the community collaborates and builds in the open.

Top Continuous Integration Metrics Every Platform Engineering Leader Should Track

Your developers complain about 20-minute builds while your cloud bill spirals out of control. Pipeline sprawl across teams creates security gaps you can't even see. These aren't separate problems. They're symptoms of a lack of actionable data on what actually drives velocity and cost.

The right CI metrics transform reactive firefighting into proactive optimization. With analytics data from Harness CI, platform engineering leaders can cut build times, control spend, and maintain governance without slowing teams down.

Why Do CI Metrics Matter for Platform Engineering Leaders?

Platform teams that track the right CI metrics can quantify exactly how much developer time they're saving, control cloud spending, and maintain security standards while preserving development velocity. The value of tracking CI/CD metrics lies in connecting pipeline performance directly to measurable business outcomes.

Reclaim Hours Through Speed Metrics

Build time, queue time, and failure rates directly translate to developer hours saved or lost. Research shows that 78% of developers feel more productive with CI, and most want builds under 10 minutes. Tracking median build duration and 95th percentile outliers can reveal your productivity bottlenecks.

Harness CI delivers builds up to 8X faster than traditional tools, turning this insight into action.
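Median and 95th percentile build duration can be computed from raw build times with a few lines of code. The sketch below uses the nearest-rank percentile method and made-up sample durations; the `summarize` and `percentile` helpers are illustrative names, not part of any Harness API.

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(durations_min):
    """Summarize build durations (in minutes) by median and 95th percentile."""
    return {
        "median": statistics.median(durations_min),
        "p95": percentile(durations_min, 95),
    }


# Illustrative sample: nine ordinary builds and one slow outlier.
sample = [4, 5, 5, 6, 6, 7, 7, 8, 9, 31]
print(summarize(sample))
```

In this sample the median stays under 10 minutes while the 95th percentile exposes the 31-minute outlier, which is why tracking both is more informative than an average.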

Turn Compute Minutes Into Budget Predictability

Cost per build and compute minutes by pipeline eliminate the guesswork from cloud spending. AWS CodePipeline charges $0.002 per action-execution-minute, making monthly costs straightforward to calculate from your pipeline metrics.

Measuring across teams helps you spot expensive pipelines, optimize resource usage, and justify infrastructure investments with concrete ROI.
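As a worked example of the arithmetic, the sketch below multiplies runs, action-execution minutes per run, and the $0.002 rate quoted above, then ranks pipelines by monthly cost. The pipeline names and volumes are invented for illustration, and real billing may include other charges.

```python
RATE_PER_ACTION_MINUTE = 0.002  # USD, the rate quoted in the text above


def monthly_cost(runs_per_month, action_minutes_per_run, rate=RATE_PER_ACTION_MINUTE):
    """Estimated monthly spend for one pipeline."""
    return runs_per_month * action_minutes_per_run * rate


def rank_by_cost(pipelines):
    """Return (name, cost) pairs sorted from most to least expensive."""
    costs = {name: monthly_cost(runs, minutes) for name, (runs, minutes) in pipelines.items()}
    return sorted(costs.items(), key=lambda item: item[1], reverse=True)


# Hypothetical pipelines: name -> (runs per month, action minutes per run).
pipelines = {"checkout-service": (3000, 25), "docs-site": (400, 5)}
for name, cost in rank_by_cost(pipelines):
    print(f"{name}: ${cost:.2f}")
```

Here 3,000 runs at 25 action minutes each come to roughly $150 per month, compared with about $4 for the smaller pipeline, which makes the optimization target obvious.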

Measure Artifact Integrity at Scale

SBOM completeness, artifact integrity, and policy pass rates ensure your software supply chain meets security standards without creating development bottlenecks. NIST and related EO 14028 guidance emphasize machine-readable SBOMs and automated hash verification for all artifacts.

However, measurement consistency remains challenging. A recent systematic review found that SBOM tooling variance creates significant detection gaps, with tools reporting between 43,553 and 309,022 vulnerabilities across the same 1,151 SBOMs.

Standardized metrics help you monitor SBOM generation rates and policy enforcement without manual oversight.
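Automated hash verification and a policy pass rate can both be expressed in a few lines. This is a minimal sketch using SHA-256 from the standard library; the function names and the sample payload are hypothetical, and a production check would read the expected digest from a trusted source such as a signed manifest.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the artifact bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_sha256: str) -> bool:
    """Check the artifact against its recorded digest using a constant-time comparison."""
    return hmac.compare_digest(sha256_hex(data), expected_sha256.lower())


def policy_pass_rate(results):
    """Fraction of checks that passed; 0.0 when there are no results."""
    return sum(results) / len(results) if results else 0.0


payload = b"example artifact bytes"
recorded = sha256_hex(payload)
checks = [verify_artifact(payload, recorded), verify_artifact(payload + b"!", recorded)]
print(checks, policy_pass_rate(checks))
```

Tracking the pass rate over time turns individual verification results into a trend that can be monitored without manual review.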

10 CI/CD Metrics That Move the Needle

Not all metrics deserve your attention. Platform engineering leaders managing 200+ developers need measurements that reveal where time, money, and reliability break down, and where to fix them first.

- Performance metrics show where developers wait instead of coding. High-performing organizations achieve up to 440 times faster lead times and deploy 46 times more frequently by tracking the right speed indicators.