Featured Blogs

The AI Visibility Problem: When Speed Outruns Security

If 2024 was the year AI started quietly showing up in our workflows, 2025 was the year it kicked the door down.

AI-generated code and AI-powered workflows have become part of nearly every software team’s daily rhythm. Developers are moving faster than ever, automation is woven into every step, and new assistants seem to appear in the pipeline every week.

I’ve spent most of my career on both sides of the equation — first in security, then leading engineering teams — and I’ve seen plenty of “next big things” come and go. But this shift feels different. Developers are generating twice the code in half the time. It’s a massive leap forward — and a wake-up call for how we think about security.

The Question Everyone’s Asking

The question I hear most often is, “Has AI made coding less secure?”

Honestly, not really. The code itself isn’t necessarily worse — in fact, a lot of it’s surprisingly good. The real issue isn’t the quality of the code. It’s the sheer volume of it. More code means more surface area: more endpoints, more integrations, more places for something to go wrong.

Harness recently surveyed 500 security practitioners and decision makers across the United States, UK, Germany, and France who are responsible for securing AI-native applications, to understand security practices around the world. In our latest report, The State of AI-Native Application Security 2025, 82% of security practitioners said AI-native applications are the new frontier for cybercriminals, and 63% believe these apps are more vulnerable than traditional ones.

It’s like a farmer suddenly planting five times more crops. The soil hasn’t changed, but now there’s five times more to water, tend, and protect from bugs. The same applies to software. Five times more code doesn’t just mean five times more innovation — it means five times more vulnerabilities to manage.

And the tools we’ve relied on for years weren’t built for this. Traditional security systems were designed for static codebases that changed every few months, not adaptive, learning models that evolve daily. They simply can’t keep pace.

And this is where visibility collapses.

The AI Visibility Problem

In our research, 63% of security practitioners said they have no visibility into where large language models are being used across their organizations. That’s the real crisis — not bad actors or broken tools, but the lack of understanding about what’s actually running and where AI is operating.

When a developer spins up a new AI assistant on their laptop or an analyst scripts a quick workflow in an unapproved tool, it’s not because they want to create risk. It’s because they want to move faster. The intent is good, but the oversight just isn’t there yet.

The problem is that our governance and visibility models haven’t caught up. Traditional security tools were built for systems we could fully map and predict. You can’t monitor a generative model the same way you monitor a server — it behaves differently, evolves differently, and requires a different kind of visibility.

Security Has to Move Closer to Engineering

Security has to live where engineering lives — inside the pipeline, not outside it.

That’s why we’re focused on everything after code: using AI to continuously test, validate, and secure applications after the code is written. Because asking humans to manually keep up with AI speed is a losing game.

If security stays at a checkpoint after development, we’ll always be behind. The future is continuous — continuous delivery, continuous validation, continuous visibility.

Developers Don’t Need to Slow Down — They Need Guardrails

In the same report, 74% of security leaders said developers view security as a barrier to innovation. I get it — security has a reputation for saying “no.” But the future of software delivery depends on us saying “yes, and safely.”

Developers shouldn’t have to slow down. They need guardrails that let them move quickly without losing control. That means automation that quietly scans for secrets, flags risky dependencies, and tests AI-generated code in real time — all without interrupting the creative flow.
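To make the "quietly scans for secrets" guardrail concrete, here is a minimal sketch of a pre-merge check. It assumes the check runs over the text of a diff or file. The patterns and the `scan_for_secrets` name are illustrative assumptions and are not a Harness feature. Production scanners use much larger, tuned rule sets and often add entropy checks.

```python
import re

# Illustrative patterns only (assumption); real scanners ship far larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "generic_api_key": re.compile(
        r"(?i)\b(api[_-]?key|secret|token)\s*[:=]\s*['\"][A-Za-z0-9_\-]{16,}['\"]"
    ),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a secret pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

A pipeline step could fail the build whenever the returned list is non-empty. The developer only hears about the check when it finds something.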

AI isn’t replacing developers; it’s amplifying them. The teams that learn to work with it effectively will outpace everyone else.

Seeing What Matters

We’re generating more innovation than ever before, but if we can’t see where AI is working or what it’s touching, we’re flying blind.

Visibility is the foundation:

- Map where AI exists across your workflows, models, and pipelines.

- Automate validation so issues are caught continuously, not just at release time.

- Embed governance early, not as an afterthought.

- Align security and development around shared goals and shared ownership.
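A team could start on the first item, mapping where AI exists, by inventorying declared LLM SDK dependencies across its repositories. The sketch below assumes the manifest files have already been collected into a path-to-content mapping. The package watch-list and function names are hypothetical examples. The scan only sees declared dependencies, so it will miss browser-based assistants and raw HTTP calls to model APIs.

```python
import json
import re

# Hypothetical watch-list; extend it with the SDKs your organization actually uses.
AI_PACKAGES = {"openai", "anthropic", "langchain", "transformers", "@anthropic-ai/sdk"}


def ai_deps_in_requirements(text: str) -> set[str]:
    found = set()
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        # Strip version specifiers and extras: "openai>=1.0" -> "openai", "langchain[all]" -> "langchain"
        name = re.split(r"[<>=!~\[; ]", line, maxsplit=1)[0].lower()
        if name in AI_PACKAGES:
            found.add(name)
    return found


def ai_deps_in_package_json(text: str) -> set[str]:
    data = json.loads(text)
    names = set(data.get("dependencies", {})) | set(data.get("devDependencies", {}))
    return names & AI_PACKAGES


def inventory(manifests: dict[str, str]) -> dict[str, set[str]]:
    """Map each manifest path to the AI SDKs it declares, skipping paths with none."""
    report = {}
    for path, content in manifests.items():
        if path.endswith("requirements.txt"):
            hits = ai_deps_in_requirements(content)
        elif path.endswith("package.json"):
            hits = ai_deps_in_package_json(content)
        else:
            continue
        if hits:
            report[path] = hits
    return report
```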

AI isn’t creating chaos — it’s revealing the chaos that was already there. And that’s an opportunity. Once you can see it, you can fix it.

You can read the full State of AI-Native Application Security 2025 report here.

Recent Blogs

Harness x AWS re:Invent 2025

Enter the AI Survival Arena 🦑🟩

Dec 1 to 5 · Booth 731 · The Venetian · Las Vegas

(Harness is an AWS Partner)

AI dominates re:Invent 2025, and engineering leaders everywhere are asking the same question:

Which AI will actually help teams ship better software on AWS with less friction?

This year Harness invites you to step into a Squid Game-inspired AI Survival Arena. Pick your role, take on challenges, earn rewards, and leave Las Vegas with real AI-powered delivery strategies. Captain Canary will be on site in a special 456 uniform to welcome players into the game.

Add Booth 731 to your conference planner and find all event details here.

🔺 Choose Your Role

Select the role that defines your strategy inside the arena:

- The Builder seeking faster dev loops and fewer blockers

- The Platform Architect creating golden paths for teams

- The Cost Strategist eliminating AWS waste

- The Security Guardian protecting code, pipelines, and AI flows

Your journey begins at Booth 731.

🟥 Main Challenge: Beat the AI Tool Sprawl Game Master

In The State of AI in Software Engineering, teams report using 8 to 10 different AI tools across dev, test, security, and ops. Tool overload slows delivery, increases friction, and creates unnecessary complexity.

Your objective: Discover how one unified, AI-powered delivery platform on AWS can simplify CI, CD, cost, and security at once.

Download The State of AI in Software Engineering before re:Invent.

🏟 Booth 731: The AI Survival Arena

Inside the arena, you can unlock:

- Live AI demos across CI, CD, cost, and security

- The Ask an Agent Challenge, where you present a delivery bottleneck and see how AI solves it

- A chance to Spin to Win on our slot machine

- One-to-one strategy conversations with product and engineering leaders

This is where the competition begins.

🎁 Bonus Loot: Swag Drops

Get ready for epic AI GAME swag, surprise giveaways, and booth-exclusive merch. We’re talking a mix of playful items, premium collectibles, and fan favorites designed to make your re:Invent run a lot more fun. Swing by to see what you can win.

🟩 Side Quests and Achievements

Complete as many as you can:

☐ Ask where AI can remove a step in your delivery flow

☐ Pick your role and request a Day 1 Experiment to try at home

☐ Bring your cloud bill and learn where AI optimization can have immediate impact

☐ Share your top engineering metric and see how AI can improve it

Bonus: Share your most challenging pipeline story and ask how AI can help resolve it.

🌃 Night Raid: After Hours with Harness

Dec 2 · 8:45 PM to 11:45 PM · Flight Club, The Venetian

Darts, drinks, and DevOps. This is where teams talk honestly about AI, velocity, AWS, risk, and reality.

Register for the After Hours event.

🥂 Leadership Missions

For directors, VPs, and execs looking for high-signal conversations.

Executive Resiliency Roundtable

Dec 2 · 5:30 PM to 8:30 PM · Mastro’s Ocean Prime

VIP Networking Dinner

Dec 3 · 6:30 PM to 8:30 PM · STK Steakhouse [Invite only].

Enjoy a curated culinary experience and meaningful conversation with Harness executives and industry leaders in an evening designed to connect, celebrate, and look ahead.

This dinner is at capacity. To join the waitlist, email jessica.jackson@harness.io

The Future of AppSec Luncheon

Dec 3 · 11:30 AM to 1:30 PM · Sadelle’s Cafe

Connect with technology and security leaders to explore modern AppSec challenges and how top organizations are securing apps and APIs without slowing innovation. Gain actionable insights through open conversation in an intimate, curated executive setting.

Register for the AppSec Luncheon.

🎤 Hear from Harness at re:Invent

Engineering the Future of Hospitality: Marriott’s Global Digital Transformation

Thursday, December 4 | 1:00 PM | Room: MGM Grand 116

Join leaders from Marriott International and Harness for a deep dive into how Marriott modernized their global delivery ecosystem, built a resilient cloud-native foundation, and prepared their engineering org for an AI-enabled future.

Speakers include:

- Jyoti Bansal, CEO, Harness

- Nick Durkin, Field CTO, Harness

- Adnan Haq, VP, DevSecOps & Infrastructure, Marriott International

- Sean Corkum, Sr. Director, DevSecOps & Automation, Marriott International

Add this session to your agenda [DVT104-S].

🤝 Co-op Mode: Harness + AWS

Harness is an AWS Partner with a delivery platform purpose-built for AWS environments. Many teams also choose to run Harness through AWS Marketplace for a native buying experience.

🏁 Final Mission: Your Path to Victory

Before re:Invent

- Download the AI report

- Pick your character

- Bring one challenge you want solved

During re:Invent

- Visit Booth 731

- Hit at least one leadership event or the Marriott session

- Try the Spin to Win machine

After re:Invent

- Run one small experiment inspired by your week

- Meet with Harness for an AI delivery blueprint

See you in Las Vegas.

Come ready to play, learn, build, and win. Step into the arena with confidence because Harness will bring the AWS expertise, the AI innovation, and the platform your team needs to advance.

The games begin at Booth 731. Are you ready to make it to the final round?

Check out the events: After Hours with Harness at AWS re:Invent! and re:Invent re:Cap w/ Harness Raffle.

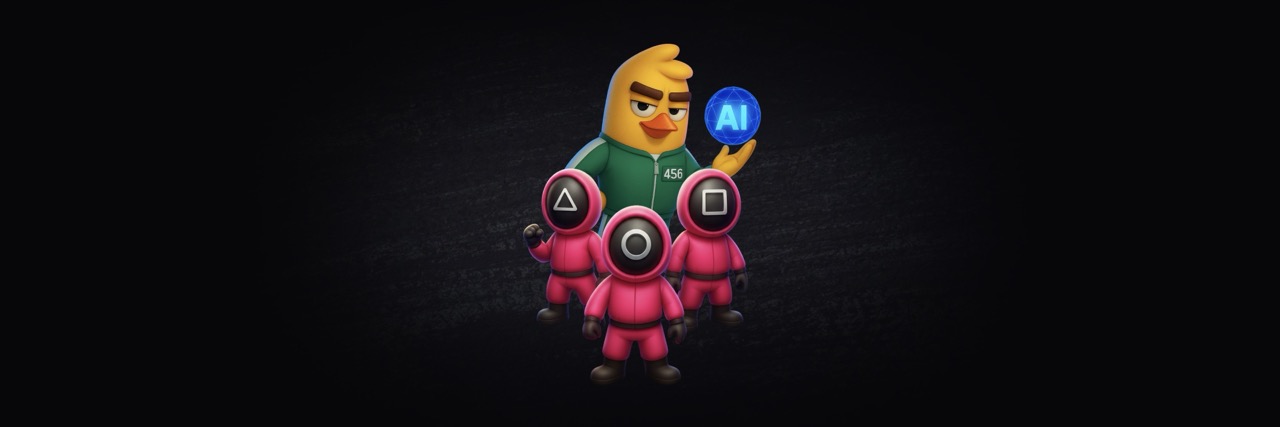

Intent-Driven Assertions are Redefining How We Test Software

Picture this: your QA team just rolled out a comprehensive new test suite that is polished, precise, and built to catch every bug. Yet soon after, half the tests fail. The code isn't broken; the design team simply shifted a button. And even when the tests pass, users still find issues in production. A familiar story?

End-to-end testing was meant to bridge that gap. It's how teams verify that complete user workflows actually work the way users expect them to. It tests from the user's perspective: can they log in, complete a transaction, see their data?

The Real Problem Isn't Maintenance. It's Misplaced Focus.

Maintaining traditional UI tests often feels endless. Hard-coded selectors break with every UI tweak, which happens nearly every sprint. A clean, well-structured test suite quickly turns into a maintenance marathon. Then come the flaky tests: scripts that fail because a button isn’t visible yet or an overlay momentarily blocks it. The application might work perfectly, yet the test still fails, creating unpredictable false alarms and eroding trust in test results.

The real issue lies in what’s being validated. Conventional assertions often focus on technical details, such as whether a div.class-name-xy element exists or a CSS selector returns a value, rather than on whether the user experience actually works.

The problem with this approach is that it tests how something is implemented, not whether it works for the user. As a result, a test might pass even when the actual experience is broken, giving teams a false sense of confidence and wasting valuable debugging time.

Some common solutions attempt to bridge that gap. Teams experiment with smarter locators, dynamic waits, self-healing scripts, or visual validation tools to reduce flakiness. Others lean on behavior-driven frameworks such as Cucumber, SpecFlow, or Gauge to describe tests in plain, human-readable language. These approaches make progress, but they still rely on predefined selectors and rigid code structures that don’t always adapt when the UI or business logic changes.
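The "dynamic waits" mentioned above usually mean polling for a condition instead of sleeping for a fixed time. Below is a minimal, framework-agnostic sketch. `wait_until` is a hypothetical helper. Tools such as Playwright already build auto-waiting into their actions.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)  # back off briefly before re-checking
```

A dynamic wait like this removes timing-related flakiness. The condition still has to locate the element somehow, though, so the test keeps the selector dependence the paragraph describes.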

What’s really needed is a shift in perspective, one that focuses on intent rather than implementation. Testing should understand what you’re trying to validate, not just how the test is written.

That’s exactly where Harness builds on these foundations. By combining AI understanding with intent-driven, natural-language assertions, it goes beyond behavior-driven testing and turns human intent directly into executable validation.

What Are Intent-Driven Natural Language Assertions?

Harness AI Test Automation reimagines testing from the ground up. Instead of writing brittle scripts tied to UI selectors, it allows testers to describe what they actually want to verify, in plain, human language.

Think of it as moving from technical validation to intent validation. Rather than writing code to confirm whether a button exists, you can simply ask:

- “Did the login succeed?”

- “Is the latest transaction a deposit?”

Behind the scenes, Harness AI interprets these statements dynamically, understanding both the context and the intent of the test. It evaluates the live state of the application to ensure assertions reflect real business logic, not just surface-level UI details.

This shift is more than a technical improvement; it’s a cultural one. It democratizes testing, empowering anyone on the team, from developers to product managers, to contribute meaningful, resilient checks. The result is faster test creation, easier maintenance, and validations that truly align with what users care about: a working, seamless experience.

Harness describes this as "Intent-based Testing", where tests express what matters rather than how to check it, enabling developers and QA teams to focus on outcomes, not implementation details.

How Harness AI Test Automation Solves Traditional Testing Issues

Traditional automation for end-to-end and UI testing often breaks when UIs change, leading to high maintenance overhead and flaky results. Playwright, Selenium, or Cypress scripts frequently fail because they depend on exact element paths or hard-coded data, which makes CI/CD pipelines brittle.

Industry statistics reveal that 70-80% of organizations still rely heavily on manual testing methods, creating significant bottlenecks in otherwise automated DevOps toolchains. Source

Harness AI Test Automation addresses these issues by leveraging AI-powered assertions that dynamically adapt to the live page or API context. Benefits include:

- Reduced flakiness: Tests automatically handle UI changes without manual intervention

- Lower maintenance costs: AI-generated selectors eliminate constant rewriting of selectors or brittle logic

- Focus on business logic: Teams concentrate on verifying user-centric outcomes rather than technical details

- Faster, no-code test creation: Organizations report up to 10x faster test creation, cutting test creation time by as much as 90%

Organizations using AI Test Automation see up to 70% less maintenance effort and significant improvements in release velocity.

How Harness AI Test Understands and Validates Your Intent

Harness uses large language models (LLMs) optimized for testing contexts. The AI:

- Understands Your Intent: The AI parses your natural language assertion to grasp what you're trying to verify, for example, “Did the login succeed?” or “Is the button visible after submission?”

- Analyzes Real Application Context: It evaluates the live state of your application by analyzing the HTML DOM and the rendered viewport. This provides the AI with a comprehensive understanding of the app's current behavior, structure, and visual presentation.

- Maintains Context History: It keeps a record of previous steps and results, so the AI can use historical context when validating new assertions.

- Learns from Past Runs: Outputs from prior test executions are stored and referenced, allowing future assertions to become more accurate and context-aware over time.

- Provides Detailed Reasoning: Instead of just marking a test as “pass” or “fail,” the AI explains why, offering insights backed by both visual and structural evidence.

Together, these layers of intelligence make Harness AI Assertions not just smarter but contextually aware, giving you a more human-like and reliable testing experience every time you run your pipeline.

This context-aware approach identifies subtle bugs that are often missed by traditional tests and reduces the risks associated with AI “hallucinations.” Hybrid verification techniques cross-check outputs against real-time data, ensuring reliability.

For example, when testing a dynamic transaction table, an assertion like “Verify the latest transaction is a deposit over $500” keeps working even if the table order changes or new rows are added. Harness adapts automatically without requiring code changes.

Harness Blog on AI Test Automation.
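To show what that intent means in concrete terms, here is a hand-written equivalent that identifies "latest" by timestamp rather than by row position. This is only an illustration of the semantics. The source says Harness evaluates such assertions with AI against the live page, not with code like this. The `Transaction` type and function name are hypothetical, and the sketch assumes the table has already been parsed into rows.

```python
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal


@dataclass
class Transaction:
    timestamp: datetime
    kind: str  # e.g. "deposit" or "withdrawal"
    amount: Decimal


def latest_is_deposit_over(rows: list[Transaction], threshold: Decimal) -> bool:
    """True if the most recent transaction (by timestamp, not table position) is a deposit above threshold."""
    if not rows:
        return False
    latest = max(rows, key=lambda t: t.timestamp)
    return latest.kind == "deposit" and latest.amount > threshold
```

Reordering the rows does not change the result, because the check never relies on the first or last row of the table.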

Crucially, we don't ask the AI to generate code once (although for some math questions it might) and then never consult it again; instead, we ask the AI the question, along with the current context of the webpage, every time you run the test.

Successful or not, the assertion also returns its reasoning, explaining why it passed or failed.
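The general "ask the model on every run" pattern can be sketched as follows. This is a minimal sketch under stated assumptions and is not Harness's implementation. `call_model` stands in for any LLM client, and the prompt layout and PASS/FAIL reply convention are invented for illustration. The sketch includes the DOM snapshot and step history, but omits the rendered-viewport analysis and past-run memory listed earlier.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Verdict:
    passed: bool
    reasoning: str


@dataclass
class AssertionContext:
    dom_snapshot: str                                 # serialized HTML of the current page
    history: list[str] = field(default_factory=list)  # summaries of earlier steps in this run


def build_prompt(assertion: str, ctx: AssertionContext) -> str:
    steps = "\n".join(f"- {s}" for s in ctx.history) or "- (none)"
    return (
        "You are verifying a UI test assertion.\n"
        f"Assertion: {assertion}\n"
        f"Previous steps:\n{steps}\n"
        f"Current page HTML:\n{ctx.dom_snapshot}\n"
        "Answer PASS or FAIL on the first line, then explain why."
    )


def evaluate(assertion: str, ctx: AssertionContext, call_model: Callable[[str], str]) -> Verdict:
    # The model is consulted on every run with fresh page context; no generated code is cached.
    reply = call_model(build_prompt(assertion, ctx))
    first, _, rest = reply.partition("\n")
    return Verdict(passed=first.strip().upper() == "PASS", reasoning=rest.strip())
```

Returning the reasoning alongside the verdict means a failure explains itself instead of only reporting a mismatch.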

How Teams Use Harness AI Assertions

Organizations across fintech, SaaS, and e-commerce are using Harness AI to simplify complex testing scenarios:

- Financial services: Validating transaction tables and workflows with natural language assertions.

- SaaS platforms: Checking onboarding flows and dynamic permission rules.

- E-commerce: Confirming discount logic and inventory updates dynamically.

- Healthcare: Cutting test creation from days to minutes.

Even less-technical users can author and maintain robust tests. Auto-suggested assertions and natural language prompts accelerate collaboration across QA, developers, and product teams.

You can also perform assertions based on parameters.
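The post does not show Harness's parameter syntax. As a generic illustration of the idea, the sketch below uses Python's standard `string.Template` to expand one assertion template over several data rows. The `${...}` placeholder syntax belongs to Python and may differ from Harness's. The template text and `expand` helper are hypothetical.

```python
from string import Template

# Hypothetical assertion template; ${account} and ${kind} are filled per data row.
ASSERTION = Template("Is the latest ${account} transaction a ${kind}?")


def expand(template: Template, rows: list[dict[str, str]]) -> list[str]:
    # substitute() raises KeyError when a placeholder has no value, which surfaces typos early.
    return [template.substitute(row) for row in rows]
```

Each expanded string could then be evaluated as its own natural-language assertion.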

An early adopter reported that after integrating Harness AI Assertions, release verification time dropped by more than 50%, freeing QA teams to focus on higher-value work. DevOpsDigest coverage

Transforming QA with Harness AI: Faster, Smarter, Reliable

Harness AI Test Automation empowers teams to move faster with confidence. Key benefits include:

- Faster test creation: Write robust assertions in minutes rather than hours.

- Reduced test maintenance: Fewer broken scripts and less manual debugging.

- Improved collaboration: Align developers, testers, and product managers around shared intent.

- Future-ready QA: Supports modern DevOps practices and continuous delivery pipelines.

Harness AI Test Automation turns traditional QA challenges into opportunities for smarter, more reliable automation, enabling organizations to release software faster while maintaining high quality.

Harness AI is to test what intelligent assistants are to coding: it allows humans to focus on strategy, intent, and value, while the AI handles repetitive validation (Harness AI Test Automation).

Harness AI Test Automation represents a paradigm shift in testing. By combining intent-driven natural language assertions, AI-powered context awareness, and self-adapting validation, it empowers teams to deliver reliable software faster and with less friction.

If you want to simplify test maintenance while improving test reliability, contact us to learn more about how intent-driven, natural-language assertions can transform your testing experience.

AI Agents vs Real-World Web Tasks: Harness Leads the Way in Enterprise Test Automation

Written by Deba Chatterjee, Gurashish Brar, Shubham Agarwal, and Surya Vemuri

Can an AI agent test your enterprise banking workflow without human help? We found out. AI-powered test automation will be the de facto method for engineering teams to validate applications. Following our previous work exploring AI operations on the web and test automation capabilities, we expand our evaluation to include agents from the leading model providers to execute web tasks. In this latest benchmark, we evaluate how well top AI agents, including OpenAI Operator and Anthropic Computer Use, perform real-world enterprise scenarios. From banking applications to audit trail log navigation, we tested 22 tasks inspired by our customers and users.

Building on Previous Research

Our journey began with introducing a framework to benchmark AI-powered web automation solutions. We followed up with a direct comparison between our AI Test Automation and browser-use. This latest evaluation extends our research by incorporating additional enterprise-focused tasks inspired by the demands of today’s B2B applications.

The B2B Challenge

Business applications present unique challenges for agents performing tasks through web browser interactions. They feature complex workflows, specialized interfaces, and strict security requirements. Testing these applications demands precision, adaptability, and repeatability — the ability to navigate intricate UIs while maintaining consistent results across test runs.

To properly evaluate each agent, we expanded our original test suite with three additional tasks:

- A banking application workflow requiring precise transaction handling, i.e., deposit of funds into a checking account

- Navigation of a business application to view audit logs filtered by date

- Interacting with a messaging application and validating the conversation in the history

These additions brought the total test suite to 22 distinct tasks varying in complexity and domain specificity.

Comprehensive Evaluation Results

User tasks and Agent results

The four solutions performed very differently, especially on complex tasks. Our AI Test Automation led with an 86% success rate, followed by browser-use at 64%, while OpenAI Operator and Anthropic Computer Use achieved 45% and 41% success rates, respectively.

Performance varies most on tasks that involve complex artifacts such as calendars, information-rich tables, and chat interfaces.
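The post does not say how the success rates were computed. Assuming each of the 22 tasks was scored once as pass or fail, each reported percentage corresponds to exactly one whole number of completed tasks. This arithmetic check makes that explicit. If tasks were run several times and the results averaged, this mapping would not hold.

```python
TOTAL_TASKS = 22
REPORTED_RATES = {  # percentages as stated in the post
    "Harness AI Test Automation": 86,
    "browser-use": 64,
    "OpenAI Operator": 45,
    "Anthropic Computer Use": 41,
}


def counts_matching(pct: int, total: int = TOTAL_TASKS) -> list[int]:
    """Integer task counts k whose rate k/total rounds to pct percent."""
    return [k for k in range(total + 1) if round(100 * k / total) == pct]


implied = {name: counts_matching(pct) for name, pct in REPORTED_RATES.items()}
```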

Additional Web Automation Tasks

As in previous research, each agent executed its tasks in popular browsers, i.e., Firefox and Chrome. Although OpenAI Operator required some user interaction, no additional manual help or intervention was provided outside the evaluation task.

Banking Application Navigation

The first additional task involves banking. The instructions include logging into a demo banking application, depositing $350 into a checking account, and verifying the transaction. Each solution must navigate the site without prior knowledge of the interface.

Our AI Test Automation completed the workflow, correctly selecting the family checking account and verifying that the $350 deposit appeared in the transaction history. Browser-use struggled with account selection and failed to complete the deposit action. Both Anthropic Computer Use and OpenAI Operator encountered login issues. Neither solution progressed past the initial authentication step.

Audit Trail Navigation

Finding audit trail records in a table full of data is a common enterprise requirement. We challenged each solution to navigate Harness’s Audit Trail interface to locate two-day-old entries. The AI Test Automation solution navigated to the Audit Logs and paged through the table to identify two-day-old entries. Browser-use reached the audit log UI but failed to paginate to the requested records. Anthropic Computer Use did not scroll far enough to find the Audit Trail tile; its default browser resolution is a limiting factor. The OpenAI Operator found the two-day-old audit logs.

This task demonstrates that handling information-rich tables remains challenging for browser automation tools.
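The paging behavior this task requires can be sketched in a few lines. The sketch makes three assumptions: `fetch_page` is a hypothetical callable that returns the rows on a given page, each row carries a `date`, and the table lists the newest entries first. It shows the logic an agent has to carry out. It is not how any of the evaluated agents works internally.

```python
from datetime import date, timedelta
from typing import Callable


def entries_from_days_ago(fetch_page: Callable[[int], list[dict]], days: int, today: date) -> list[dict]:
    """Page through a newest-first table and collect rows dated exactly `days` before `today`."""
    target = today - timedelta(days=days)
    matches, page = [], 0
    while True:
        rows = fetch_page(page)  # hypothetical: returns [] once past the last page
        if not rows:
            return matches
        matches.extend(r for r in rows if r["date"] == target)
        # Assumes newest-first ordering: once a page ends before the target day, stop paging.
        if rows[-1]["date"] < target:
            return matches
        page += 1
```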

Messaging Application Interaction

The third additional task involves a messaging application. The intent is to initiate a conversation with a bot and verify the conversation in a history table. This task incorporates browser interaction and verification logic.

The AI Test Automation solution completed the chat interaction and correctly verified the conversation’s presence in the history. Browser-use also completed this task. Anthropic Computer Use, on the other hand, was unable to start a conversation. OpenAI Operator initiated the conversation but never sent a message, so no new conversation appeared in the history.

This task reveals varying levels of sophistication in executing multi-step workflows with validation.

What Makes Solutions Perform Differently?

Several factors contribute to the performance differences observed:

Specialized Architecture: Harness AI Test Automation leverages multiple agents designed for software testing use cases. Each agent has varying levels of responsibility, from planning to handling special components like calendars and data-intensive tables.
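To illustrate the general idea of routing planned steps to specialized agents, here is a toy dispatcher. Everything in it is hypothetical: the step format, the handler names, and the string outputs. It shows the routing pattern only and does not describe Harness's actual agents.

```python
from typing import Callable

# Hypothetical step model: each planned step names the kind of UI component it targets.
Handler = Callable[[dict], str]


def handle_calendar(step: dict) -> str:
    return f"calendar agent: pick date {step['value']}"


def handle_table(step: dict) -> str:
    return f"table agent: find row matching {step['value']!r}"


def handle_generic(step: dict) -> str:
    return f"generic agent: {step['action']} {step.get('value', '')}".rstrip()


SPECIALISTS: dict[str, Handler] = {"calendar": handle_calendar, "table": handle_table}


def dispatch(plan: list[dict]) -> list[str]:
    # A planner would produce `plan`; each step goes to a specialist if one exists for its component.
    return [SPECIALISTS.get(step.get("component", ""), handle_generic)(step) for step in plan]
```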

Enterprise Focus: Harness AI Test Automation is designed with enterprise use cases in mind, which come with specific requirements. A sample of these requirements includes:

- security

- repeatability for CI/CD integration

- precision

- ability to interact with an API

- uncommon interfaces that are not generally accessible via web crawling, hence not available for training

Task Complexity: Browser-use, Anthropic Computer Use, and OpenAI Operator handle many tasks, but as complexity increases, the performance gap widens significantly.

Why Harness Outperforms

- Custom agents for calendars, rich tables

- API-driven validation where UI alone is insufficient

- Secure handling of login and secrets

Conclusion

Our evaluation demonstrates that while all four solutions handle basic web tasks, the performance diverges when faced with more complex tasks and web UI elements. In such a fast-moving environment, we will continue to evolve our solution to execute more use cases. We will stay committed to tracking performance across emerging solutions and sharing insights with the developer community.

At Harness, we continue to enhance our AI Test Automation solution to address the unique challenges of enterprise testing and to help development teams deliver high-quality software confidently. Promising enhancements to the product include self-diagnosis and tighter CI/CD integrations. Intent-based software testing is easier to write, more adaptable to updates, and easier to maintain than classic solutions. After all, we’re obsessed with empowering developers to do what they love: ship great software.

DevSecOps Summit 2025: AI Security From Pipeline to Production

The AI revolution isn't coming—it's already here, and it's rewriting the rules of software development at breakneck speed. AI agents autonomously navigate entire codebases and generate code faster than ever before. But as we embrace these powerful tools, a critical question emerges: Are we all building on solid ground, or are we constructing skyscrapers on quicksand?

Welcome to the new frontier of DevSecOps, where artificial intelligence isn't just changing how we build software—it's fundamentally transforming what we need to protect and how we protect it.

On November 12th, Harness is hosting the virtual DevSecOps Summit 2025. Industry leaders, security practitioners, and AI innovators are converging to tackle the most pressing challenge of our generation: securing AI systems from the first line of code to production deployment and beyond. This isn't about adding another checkbox to your security compliance list. This is about reimagining security for an era where code writes code, where models make decisions, and where vulnerabilities can be AI-generated as quickly as features.

Why AI Security Matters Now

The statistics are sobering. AI-generated code is proliferating across enterprise codebases, often without adequate security review. Large Language Models (LLMs) are being deployed with proprietary data access, creating unprecedented attack surfaces. Agentic systems are making autonomous decisions that can impact millions of users. And traditional security tools? They're struggling to keep pace.

But here's the paradox: while AI introduces new security challenges, it's also a powerful multiplier for our efforts to address them. The same technology that can generate vulnerable code can also detect anomalies, predict threats, and automate security responses at machine speed.

From Pipeline to Production: A Holistic Approach

This summit explores the complete AI security lifecycle—because threats don't respect the boundaries of your CI/CD pipeline. Here are just a few of the topics that we’ll examine at the Summit:

- When Vibe Coding Loses Its Cool: Vibe coding is changing how we design, code, and secure software, but it must be done thoughtfully. Tanya Janca (author of Alice and Bob Learn Secure Coding) and Adam Arellano of Harness will engage in a lively fireside chat about bringing AI into your development workflow safely and effectively.

- Ways to Ensure Security Across the SDLC: Leading experts from Harness, Wiz, Citizens Bank, and InterSystems will demonstrate a unified, integrated approach to AI security, emphasizing that robust protection must span the entire lifecycle, from the initial pipeline stages to full production deployment. Attendees will:

  - Gain insights into bridging the gaps between development and operations security, with a practical, actionable framework for securing AI applications end-to-end.

  - Learn how to keep security controls from going static, so they evolve as rapidly as your AI capabilities and provide continuous protection against emerging threats and vulnerabilities in an ever-changing landscape.

- How to Check Your AI Blind Spot: Security researchers from Harness, SentinelOne, and ASPEN Labs share real-world examples of threats targeting AI-native applications, risky behavior in the usage of AI components, and even how attackers are using AI tools to attack faster. You'll learn:

  - Practical approaches to securing your new AI attack surface and implementing guardrails for rolling out AI functionality without slowing down development.

  - Invaluable insights into the intricacies of securing an AI-powered future, exploring practical approaches to counter sophisticated attack vectors.

  - What researchers are experiencing in the wild and how they’re securing AI-native applications in real time.

What You'll Discover

Throughout this summit, you'll hear from practitioners who are solving AI challenges in real-world environments. They'll share hard-won lessons about securing agentic applications, preventing prompt injection attacks, validating AI-generated code, and building governance frameworks that scale with AI adoption.

Whether you're a security professional adapting to AI-powered threats, a developer integrating AI tools into your workflow, or a leader navigating the strategic implications of AI adoption, this summit offers actionable insights for your journey.

The future of software is AI-native. The question isn't whether to embrace it, but how to do so securely, responsibly, and effectively. Let's explore that future together—from pipeline to production, and everything in between.

Join us at DevSecOps Summit 2025.


Reverse Engineering Comet: How Perplexity’s Browser Automates the Web Without Screenshots (and Where It Falls Short)

Comet is a new browser from Perplexity, and it is amazing at performing tasks autonomously. You simply need to give a high-level intent, and it does the rest.

Find me the cheapest flight from SFO to LAX departing on 14th September and returning on 19th September.

That's all you need to tell Comet, and it will find you the flight. Needless to say, this will change how we browse the internet.

As an engineer, I wanted to know how it works. Specifically, what context is it using? I tried to find information online, but I could not find an engineering blog or any other insights. So I tried the next best thing: just talk to Comet and see what it has to say. Surprisingly, it is quite vocal about how it figures things out.

Let's go step by step and prompt Comet about its inner workings:

Do you use accessibility tree on the website to see what elements to act on?

Comet: Yes, I utilize the website’s accessibility tree — specifically roles, ARIA attributes, labels, and states — to precisely identify and interact with page elements for automation and control tasks

What about screenshots?

Comet: No, I do not use screenshots to identify or interact with website elements. My actions are based on the underlying accessibility tree and HTML structure, not images of the page.

Do you look at the raw DOM as well?

Comet: Yes, I use the raw DOM (Document Object Model) in addition to the accessibility tree. This allows me to access the full structure, attributes, and content of every element on the page for precise interaction and automation.

But DOMs can be huge and won't fit your context window.

Do you do any special processing to reduce its size?

Comet: Yes, special processing is performed on large DOMs to make them manageable:

Filtering: Only relevant elements are extracted — those visible, interactive, or matching the task (e.g., buttons, form fields).

Pruning: Non-essential elements (such as scripts, styles, or hidden sections) are ignored.

Summarization: Hierarchical structure is flattened, and elements are grouped by their roles and ARIA landmarks.

Chunking: The DOM is broken into smaller, logical sections for stepwise analysis.

This targeted extraction ensures only the essential information needed for the automation task is processed, keeping the context efficient and focused.
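Comet gave no implementation details, so the following is only a minimal sketch of what the four steps it describes (filtering, pruning, summarization, chunking) could look like, written with Python's standard-library `html.parser`. The tag sets, the `DomReducer` class, the `reduce_dom` helper, and its `chunk_size` parameter are illustrative assumptions, not Comet's actual pipeline; a real browser agent would work on the live DOM and computed styles rather than on raw HTML.

```python
from collections import defaultdict
from html.parser import HTMLParser

PRUNE = {"script", "style", "noscript", "template"}            # never useful to the agent
INTERACTIVE = {"a", "button", "input", "select", "textarea"}   # what filtering keeps
VOID = {"area", "br", "hr", "img", "input", "link", "meta", "source", "wbr"}  # no end tag


class DomReducer(HTMLParser):
    """Hypothetical reducer: filters and prunes raw HTML to a flat list of interactive elements."""

    def __init__(self):
        super().__init__()
        self.prune_depth = 0   # > 0 while inside a pruned or hidden subtree
        self.current = None    # interactive element whose inner text is being collected
        self.elements = []     # flattened elements: {"role", "label", "text"}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        hidden = "hidden" in attrs or attrs.get("aria-hidden") == "true"
        if self.prune_depth or tag in PRUNE or hidden:
            if tag not in VOID:            # void tags never close, so don't count them
                self.prune_depth += 1
            return
        if tag in INTERACTIVE:
            # Coarse role: explicit ARIA role first, otherwise derived from the tag name.
            role = attrs.get("role") or ("link" if tag == "a" else tag)
            label = attrs.get("aria-label") or attrs.get("placeholder") or ""
            element = {"role": role, "label": label, "text": []}
            self.elements.append(element)
            if tag not in VOID:
                self.current = element

    def handle_startendtag(self, tag, attrs):
        # Self-closing syntax (<x/>): only non-void tags get a matching end event.
        self.handle_starttag(tag, attrs)
        if tag not in VOID:
            self.handle_endtag(tag)

    def handle_endtag(self, tag):
        if self.prune_depth:
            self.prune_depth -= 1
        elif tag in INTERACTIVE:
            self.current = None

    def handle_data(self, data):
        if self.current is not None and not self.prune_depth:
            self.current["text"].append(data)


def reduce_dom(html, chunk_size=20):
    """Return (labels grouped by role, flat (role, label) list split into chunks)."""
    parser = DomReducer()
    parser.feed(html)
    parser.close()
    # Summarization: hierarchy is already flattened; group labels by role.
    by_role = defaultdict(list)
    for el in parser.elements:
        text = " ".join("".join(el["text"]).split())
        by_role[el["role"]].append(el["label"] or text)
    # Chunking: fixed-size slices that can be analyzed one step at a time.
    flat = [(role, label) for role, labels in by_role.items() for label in labels]
    chunks = [flat[i:i + chunk_size] for i in range(0, len(flat), chunk_size)]
    return dict(by_role), chunks
```

Scripts, styles, and the `hidden` subtree disappear entirely, and a button with only an icon inside is kept because its `aria-label` names it.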

So, in a nutshell, Comet relies on the DOM plus the accessibility tree. That limits its capability somewhat, especially with complex applications that do not follow accessibility best practices (unfortunately, a common occurrence on the internet).

Exploring Limitations

Next, let's talk about the limitations of Comet.

How do you deal with IFRAME with cross origin source? You are not allowed to see inside it, so how will you know where to act?

Comet: If the element to act on is inside a cross-origin iframe, I cannot access or manipulate it directly. Only the iframe as a whole can be targeted from the parent page.
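The restriction Comet describes comes from the browser's same-origin policy: script running in the parent page can read a frame's document only when both share an origin, meaning the same scheme, host, and port. The sketch below shows just that comparison in Python. It deliberately ignores special cases such as `about:blank` or `srcdoc` frames, which inherit their creator's origin, and the helper names `origin` and `can_inspect_iframe` are illustrative.

```python
from urllib.parse import urljoin, urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url):
    """An origin is the (scheme, host, port) triple; default ports are filled in."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    return scheme, parts.hostname, parts.port or DEFAULT_PORTS.get(scheme)


def can_inspect_iframe(parent_url, iframe_src):
    """Parent-page scripts may read an iframe's DOM only when both share an origin."""
    # Relative src values are resolved against the parent page first.
    return origin(parent_url) == origin(urljoin(parent_url, iframe_src))
```

A payment widget served from another domain fails this check, which is why a DOM-only agent sees just the `<iframe>` element itself.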

Since you cannot "see" i.e. no access to screenshot, doesn't that mean you cannot act on images or icons or svg that do not have good label?

Comet: Correct. If an image, icon, or SVG does not have accessible labels, ARIA attributes, alt text, or useful DOM properties (such as a descriptive title or role), I cannot reliably identify or act on it. My ability to interact is limited to what can be detected in the DOM or accessibility tree.
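Browsers derive each element's name in the accessibility tree through the W3C Accessible Name computation. The sketch below implements only a small, simplified subset of that algorithm (an `aria-label`, then an image's `alt`, then text from content, then `title`) over a hypothetical `Element` type. That subset is enough to show why an unlabeled icon ends up with an empty name and gives an accessibility-driven agent nothing to match.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Hypothetical minimal DOM node, for illustration only."""
    tag: str
    attrs: dict = field(default_factory=dict)
    text: str = ""
    children: list = field(default_factory=list)


def accessible_name(el):
    """Simplified accessible-name lookup; the W3C algorithm has many more steps."""
    label = el.attrs.get("aria-label", "").strip()
    if label:
        return label
    if el.tag == "img":
        # Images are named by alt (or title), never by their content.
        return (el.attrs.get("alt", "") or el.attrs.get("title", "")).strip()
    # Name from content: the real spec allows this only for roles such as button or link.
    parts = [el.text.strip()] + [accessible_name(child) for child in el.children]
    from_content = " ".join(p for p in parts if p)
    return from_content or el.attrs.get("title", "").strip()
```

An `<svg>` bulb icon with only a CSS class yields `""`, while a `<button>` wrapping an `<img alt="cart">` is named "cart".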

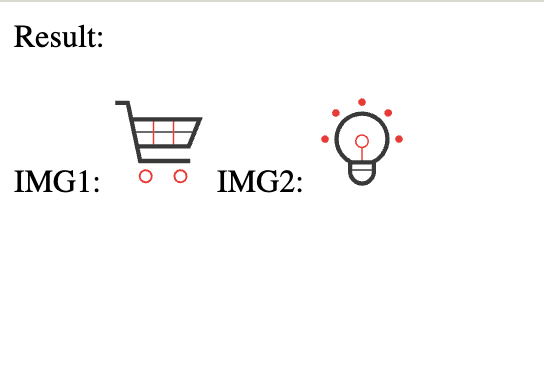

Testing Comet with simple HTML

Let’s verify Comet’s claim that it relies solely on accessibility features and labels, without using screenshots.

Simple HTML to demonstrate

Click on the cart

Comet: There is still no clickable “Cart” element detected on this page. The word “Cart:” might be present as plain text, but it is not an interactive button or link that can be clicked. If the cart is meant to be clickable, it should be implemented as a button or link in the HTML code.

It failed to click on the cart; if it could “see,” it would have found the correct element.

Click on the bulb

Comet: There are no elements labeled “bulb” or displaying a bulb icon on this page, so nothing could be clicked. If you’re expecting a bulb icon or button, it may not be present or may not be labeled as such in the HTML.

Again, it failed to click on the bulb.

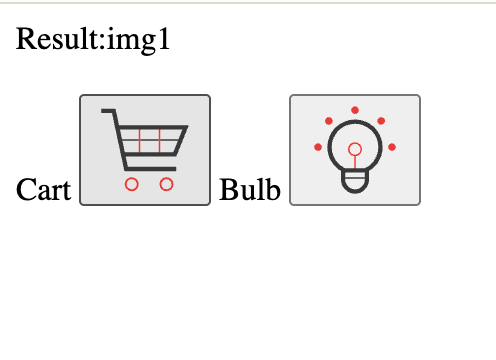

Let’s make the page more readable and add some labels.

Click on cart

Comet: There is no clickable “Cart” element found on this page. The word “Cart” is present, but it is not implemented as an interactive button or link that can be clicked. If you want it to be clickable, it should be defined as a button or anchor tag in the HTML.

It failed again — despite the clickable image next to the Cart text, Comet couldn’t make the connection.

Let's be a bit more explicit and add an alt attribute to the cart image, which makes it obvious in the HTML that the image is a cart.

Comet: There is no clickable “cart” element found on the current page. If you would like me to try anything else or if the cart should be activated in another way, please let me know.

Failed again? It seems Comet cannot tell that the img is clickable. It claimed to check event handlers, but that check did not help in this case.

Let's wrap the img in a button and see whether Comet can find it now.

Click on the cart

Comet: The cart button was found and successfully clicked on your current page

Finally, it was able to act!

Verdict:

Comet works well with well-structured applications, but it makes some basic assumptions. When apps don’t follow best practices, Comet can struggle or fail outright.

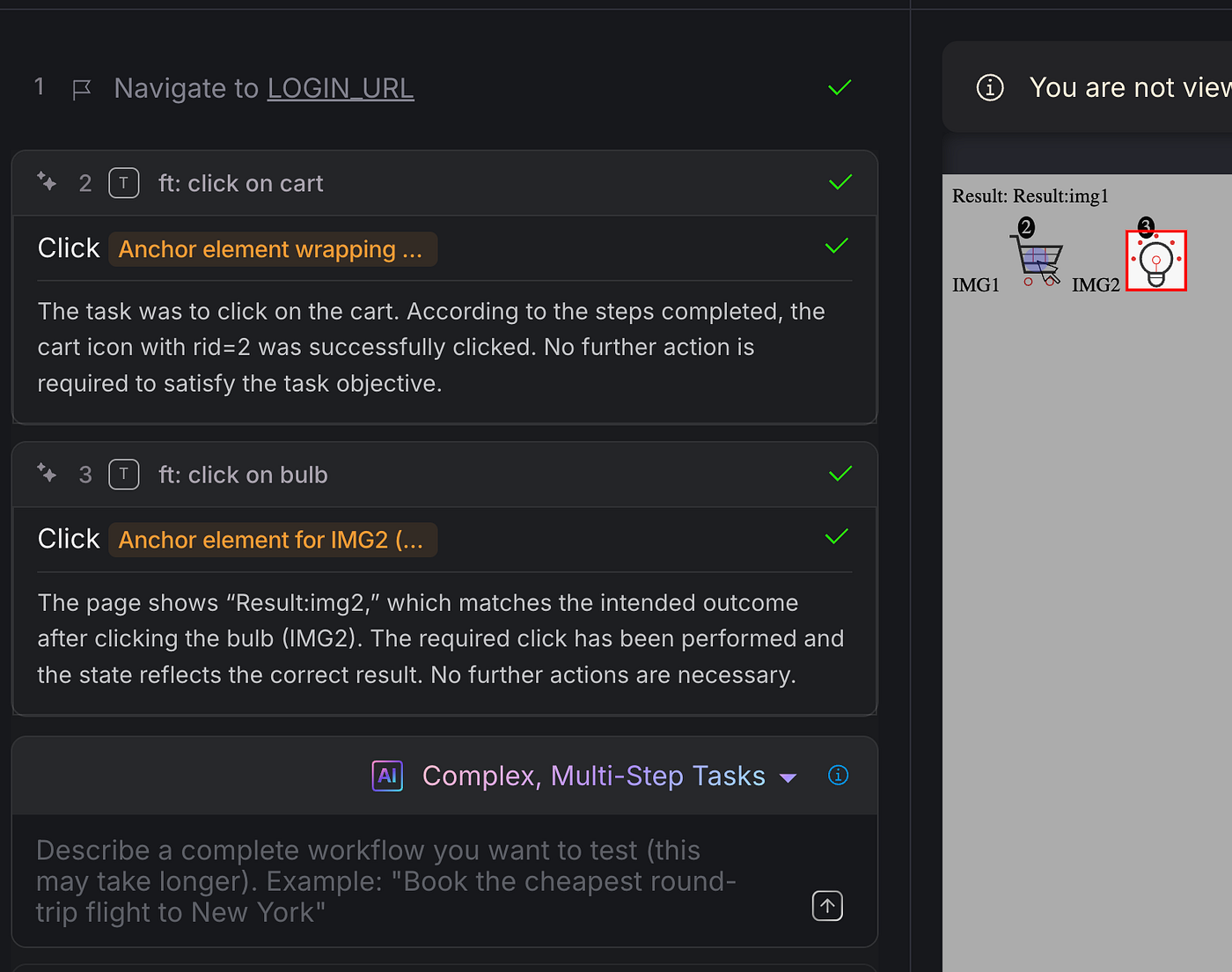

Contrasting with Harness AI Test Automation

Harness AI Test takes a bimodal approach: it looks at the screenshot while also generating a wireframe from the DOM and Accessibility tree.

This allows it to identify clickable, writable, and selectable elements, regardless of how the developer implemented them: through tags like <button>, onclick handlers, tabIndex, a pointer cursor, or other variations.
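The difference between an accessibility-first view and a broader one can be sketched as two predicates over an element's tag and attributes. `is_actionable_strict` approximates the accessibility-first view (native controls, real links, or an explicit ARIA role), while `is_actionable_broad` adds the markup signals listed above: inline `onclick` handlers, a non-negative `tabindex`, and an inline pointer cursor. Both are hypothetical simplifications, not Harness's implementation. Listeners attached with `addEventListener` never appear in markup, and `cursor: pointer` usually comes from a stylesheet, so a real tool needs runtime information from the browser, and the screenshot signal covers what markup alone misses.

```python
# Native controls that accessibility tooling treats as actionable (<a> also needs an href).
NATIVE_CONTROLS = {"button", "input", "select", "textarea"}
ACTIONABLE_ROLES = {"button", "link", "checkbox", "menuitem", "tab", "textbox", "combobox"}


def is_actionable_strict(tag, attrs):
    """Accessibility-first view: native controls, real links, or an explicit ARIA role."""
    if tag == "a" and "href" in attrs:
        return True
    return tag in NATIVE_CONTROLS or attrs.get("role") in ACTIONABLE_ROLES


def is_actionable_broad(tag, attrs):
    """Adds markup signals developers commonly use to make arbitrary elements clickable."""
    if is_actionable_strict(tag, attrs):
        return True
    if "onclick" in attrs:                    # inline handler only; addEventListener leaves no trace
        return True
    try:
        if int(attrs.get("tabindex")) >= 0:   # keyboard-focusable element
            return True
    except (TypeError, ValueError):           # missing or non-numeric tabindex
        pass
    style = (attrs.get("style") or "").replace(" ", "").lower()
    return "cursor:pointer" in style          # inline style only, not computed CSS
```

With a hypothetical `<img alt="cart" onclick="openCart()">`, the strict check says no and the broad check says yes, mirroring the gap observed in the Comet experiment.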

Let’s take a look at the original HTML with AI Test, which can truly see.

In both prompts, AI Test was able to locate and click on the appropriate image and also verify that the expected outcome was achieved.

Conclusion

Real-world DOMs are messy, and accessibility is often weak. Even id uniqueness gets broken, almost anything can be made clickable, and custom tags are common. To build agents that truly match human behavior, we need multiple signals: screenshots, a condensed DOM, accessibility data, and practical knowledge of JS-driven behavior. That last piece comes from experience with legacy patterns (yes, even frameset).

Comet’s choice to lean on accessibility and the DOM over screenshots looks like a technical and product trade-off: computer-use models are early, screenshot “decoration” can hurt consumer UX, speed and cost matter, and top consumer sites generally follow accessibility best practices — so it works well there. Enterprise apps are different: older stacks and inconsistent accessibility make a screenshot+vision (bimodal) approach better for accuracy, coverage, and repeatability across edge cases.

Bottom line: Comet is excellent where its assumptions hold, but the web is diverse. A bimodal path would broaden its reach.


Harness AI Test Automation: End-to-End, AI-Powered Testing for Faster, Smarter DevOps

We’re excited to announce the General Availability (GA) of Harness AI Test Automation, the industry’s first truly AI-native, end-to-end test automation solution that's fully integrated across the entire CI/CD pipeline, built to meet the speed, scale, and resilience demanded by modern DevOps. With AI Test Automation, Harness is transforming the software delivery landscape by eliminating the bottlenecks of manual and brittle testing and empowering teams to deliver quality software faster than ever before.

This launch comes at a critical time. Organizations spend billions of dollars on software quality assurance each year, yet most still face the same core challenges of fragility, speed, and pace. Testing remains a significant obstacle in an otherwise automated DevOps toolchain, with around 70-80% of organizations still reliant on manual testing methods, thus slowing down delivery and introducing risks.

Harness AI Test Automation changes that paradigm, replacing outdated test frameworks with a seamless, AI-powered solution that delivers smarter, faster, and more resilient testing across the SDLC. With this offering, Harness has the industry’s first fully automated software delivery platform, where our customers can code, build, test, and deploy applications seamlessly.

What Makes Harness AI Test Automation Different?

Harness AI Test Automation has a lot of unique capabilities that make high-quality end-to-end testing effortless.

"Traditional testing methods struggled to keep up, it is too manual, fragile, and slow. So we’ve reimagined testing with AI. Intent-based testing brings greater intelligence and adaptability to automation, and it seamlessly integrates into your delivery pipeline." - Sushil Kumar, Head of Business, AI Test Automation

Some of the standout benefits of AI Test Automation are:

Create High-Quality Tests in Minutes: No Code Required

AI Test Automation streamlines the creation of high-quality tests:

- Live Test Authoring: Record tests automatically by simply interacting with your web app. No scripting or coding expertise needed.

- Intent-Based Testing: Author test cases and assertions using natural language prompts. Just type what you want to verify—like “Did the login succeed?”—and let AI handle the rest.

- AI Auto Assertions: Harness AI suggests and auto-generates assertions after each step, pre-verified for seamless functionality. This ensures robust coverage and saves hours of manual work.

- Visual Testing with AI: Validate complex UI elements, including dynamic and canvas-based components, using human-like visual testing. Use natural language to verify visual states, eliminating the need for fragile scripts.

Self-Healing, Adaptive Test Maintenance

AI Test Automation eliminates manual test maintenance while improving test reliability:

- AI-Generated Selectors: Tests adapt automatically to UI and workflow changes, dramatically reducing test flakiness and maintenance by up to 70%.

- Run Stable Tests Across Environments: Smart Selector technology and automatic URL translation ensure tests work everywhere, with no modifications required.

AI Test Automation adapts to the UI changes with its smart selectors
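Harness doesn't describe how its automatic URL translation works. As an illustration of the general idea only, the hypothetical helper below re-points a URL recorded in one environment (say, staging) at another environment's base URL while keeping the path, query string, and fragment, so a recorded flow can replay against a different deployment. It assumes both environments serve the app at the same path, which real deployments don't always do.

```python
from urllib.parse import urlsplit, urlunsplit


def translate_url(recorded_url, target_base):
    """Swap scheme and host:port for the target environment; keep path, query, fragment."""
    recorded = urlsplit(recorded_url)
    target = urlsplit(target_base)
    return urlunsplit((target.scheme, target.netloc, recorded.path,
                       recorded.query, recorded.fragment))
```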

Intelligent, Scalable Test Execution

AI Test Automation boosts efficiency and streamlines testing workflows:

- Intelligent Retries: AI Test Automation distinguishes between transient issues and real bugs, reducing false positives and debugging time.

- Parallel Execution: Effortlessly scale to thousands of tests running in parallel, optimizing validation as your application grows.

- Data-Driven Testing: Parameterized tests dynamically handle data at runtime, enabling flexible and efficient workflows.
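Harness doesn't publish how Intelligent Retries classifies failures. The core contract can still be sketched with explicit exception types: retry only failures marked as transient, such as timeouts, and let genuine assertion failures surface immediately. `TransientError` and `run_with_retries` are hypothetical names for this illustration, and the hand-labeled exception stands in for whatever classification the product actually performs.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, flaky network)."""


def run_with_retries(step, max_attempts=3, backoff_s=0.5):
    """Run a test step, retrying only transient failures; real bugs fail on the first try."""
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except TransientError:
            if attempt == max_attempts:
                raise                           # still failing after all attempts: report it
            time.sleep(backoff_s * attempt)     # linear backoff before the next attempt
```

Any other exception, such as an `AssertionError` from a wrong cart total, propagates on the first attempt, so a real bug is never masked by retries.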

Seamless Integration and Enterprise-Grade Security

AI Test Automation enables resilient end-to-end automation:

- Unified with Harness CI/CD: AI Test Automation is natively integrated with Harness pipelines, enabling true end-to-end automation from build to deploy.

- SOC 2 Type 2 Compliance: Enterprise-ready security and compliance, so you can trust your test automation at scale.

- No-Code Simplicity + Advanced Flexibility: Add custom JavaScript or Puppeteer scripts for complex scenarios — get the best of both worlds.

Real Results: Customer Success Stories

At Harness, we use what we build. We’ve adopted AI Test Automation across our own products, achieving 10x faster test creation and enabling visual testing with AI – all fully integrated into our CI/CD pipelines. Since AI can be nondeterministic, and testing AI workflows using traditional test automation tools can be hard, we also use AI Test Automation to test our internal AI workflows.

“With AI Test Automation, I just literally wrote out and wireframed all the test cases, and in a matter of 15–20 minutes, I was able to knock out one test. Using the templating functionality, we were able to come up from a suite of 0 to 55 tests in the span of 2 and a half weeks.” - Rohan Gupta, Principal Product Manager, Harness

Our customers are seeing dramatic results, too. For example, using Harness AI Test Automation, Siemens Healthineers slashed QA bottlenecks and transformed test creation from days to minutes.

Wasimil, a hotel booking and management platform, has reduced its test maintenance time by 50%, allowing it to release twice as frequently as it did when it relied on Playwright.

"With AI Test Automation, we could ship features, not just bug fixes. We don't wanna spend 30 to 40% of our engineering resources on fixing bugs because we can't be proud to ship bug fixes to our customers. Right? And they expect features, not bug fixes."

- Tom, CTO, Wasimil

Watch AI Test Automation in action

The Future Is Now: Join the Testing Revolution

With AI Test Automation, Harness becomes the first platform to offer true, fully automated software delivery, from build → test → deploy, without manual gaps or toolchain silos.

- Create robust, intent-based tests 10x faster using natural language

- Slash maintenance by up to 70% with self-healing, AI-powered tests

- Accelerate release cycles and improve developer experience

- Achieve higher quality, lower costs, and reduced risk—at enterprise scale

Be part of the revolution: start your AI-powered testing journey with Harness today.

Ready to see AI Test Automation in action? Contact us to get started!

End-to-end testing should not be guesswork

A common belief in the testing community is that quality test cases can be designed by predicting the end user’s behavior from an internal understanding of how the software will be used. As a result, end-to-end tests tend to depend heavily on assumptions about the user’s actions. You might expect users to act in a certain way in your app, but the end user’s flow is almost always unpredictable, and the unpredictable interactions that most end-to-end tests miss are the ones that end up as the most significant bugs and customer escalations.

This article will explain how end-to-end testing is done today, common frameworks used in testing, what can go wrong when testing is based on internal teams’ assumptions, and how creating end-to-end tests from actual user behavior data rather than plain assumptions can improve an organization’s overall quality efforts.

What is end-to-end testing?

End-to-end testing, or E2E testing, simulates a user’s workflow from beginning to end. This can mean tracking and recording all possible flows and test cases from login to logout. The input and output data are identified and tested based on the conditions provided as well as the test cases.

E2E Testing Framework

A testing framework, or a collection of actions performed on an application, can be automated and used to improve the product. There are many open-source testing frameworks that can be used for E2E test automation. A framework helps make test automation scripts reusable, maintainable, and stable. It is important to have a test suite that tests several layers of an application.

Here are some popular open-source test automation frameworks for end-to-end testing.

- Gauge Framework is an open-source framework widely used for creating and running E2E tests based on user behavior. Gauge is free and uses Markdown syntax for test specifications. Its plugin-based architecture helps teams create E2E tests that are easy to maintain. It supports writing test code in multiple programming languages and across platforms, and it supports data-driven tests.

- Cypress is a more developer-centric test automation framework that focuses on test-driven development for browser-based applications. Cypress offers real-time test writing and execution as well as CI debugging and a dashboard for collecting insights.

- Serenity is a Java-based framework and open-source library that integrates with behavior-driven development (BDD) tools like Cucumber and JBehave. It acts as a wrapper on top of Selenium WebDriver and BDD tools, making end-to-end and regression tests easier to write, and it offers BDD training courses as well as enterprise support.

There are also frameworks like Citrus, OpenTest, WebdriverIO, and Galen that offer broad support for different programming languages and types of testing.

E2E testing challenges

Cost and effort

E2E tests are expensive in terms of efficiency, time, and money. A QA engineer has to prioritize the most important flows based on experience and presumptions, but this often means users report more bugs post-production, because their environments and usage patterns are very different from those of internal QA teams. For example, for an online store, QA engineers typically test login, add to cart, and checkout as separate tests. Real-world customers, on the other hand, may perform these actions in a complex sequence, such as login → add to cart → go back and search for new products → read reviews → replace previously added items with new ones → add more products to the cart → checkout. Many software issues surface only during such complex interactions. However, creating tests for such complex E2E flows is extremely time- and effort-consuming, so such flows are rarely tested before release.

Based on assumptions

Textbooks and traditional testing courses have taught engineers to create E2E tests based on assumptions about the end user’s behavior; these assumptions are based on the engineer’s experience, app requirements, and pure guesswork. Complex user interactions like the ones mentioned above are very hard to envision without user behavioral data. It is no surprise that users find bugs in a product after it goes live, raising concerns about a team’s testing capabilities. The tech industry changes with the times, and it is heavily focused on user experience. Positive UX, therefore, is hugely important.

Predictions are inaccurate

The gap between test coverage and the bugs reported by consumers grows when engineers predict how consumers will use the application without solid data. The tester’s job is to base test cases on the stories and original requirements written by the product owner or a business analyst.

There could be multiple factors behind an increase in post-production bugs:

- Requirements could be outdated.

- Requirements may not account for a user’s real-life behavior.

- User experience may change faster than in-house requirements.

Why guesswork-based testing fails

There is an increased emphasis on real-time data because E2E testing based on engineers’ guesswork can lead to longer-term problems.

- Creating and automating end-to-end tests based on assumptions leads to more customers discovering bugs after release.

- The test suite may rely too heavily on tests for bugs that were fixed in previous releases.

- Hotfixes can address problems as soon as customers report them, but this interrupts a team’s workflow and takes time and effort away from writing or updating software.

- It is impractical to anticipate every end-to-end flow in a large system.

End-to-end tests are a collection of tests involving multiple services that are not isolated; they require mock services to test multiple flows. If the QA team does not analyze the tests based on the user data, it often leads to a break-fix loop that increases product downtime and damages the production cycle. This frustrates every member in the software development process, from developers to customers.

How to improve quality through the customer experience

Harness AI real-world testing

Harness AI addresses the above problems by generating E2E tests from real-time user flows, eliminating the need for guesswork. It analyzes the application from the customer’s standpoint, providing a more proactive way to detect and fix UX bugs before the customer ever sees them. This increases developer productivity as well as customer satisfaction.
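Harness doesn't detail how it turns recorded sessions into tests. One simple way to surface candidate flows, shown here as a hypothetical sketch, is to treat each session as a sequence of actions and count every contiguous run of actions across all sessions. The most frequent runs, including multi-step sequences like the online-store example above, are good candidates for E2E tests. The action names and session format are invented for illustration.

```python
from collections import Counter


def top_flows(sessions, length=3, k=5):
    """Count every contiguous run of `length` actions across sessions; return the k most common."""
    counts = Counter()
    for actions in sessions:
        for i in range(len(actions) - length + 1):
            counts[tuple(actions[i:i + length])] += 1
    return counts.most_common(k)
```

Varying `length` trades specificity for frequency: longer runs capture the complex sequences internal teams rarely script, but each one occurs less often.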

In addition to real-time user sessions, Harness AI automates test runs in a CI/CD pipeline that can be viewed on a live Kanban board. Release readiness can be determined by the customer impact of broken flows and code changes that affect the overall quality score.

There are other ways of testing that have proved to be successful. The following methods are based on data collected from UX and actions on various applications.

Consumer-driven contract tests

Consumer-driven contract tests, or CDC tests, are used to test individual components of a system in isolation, which makes them especially valuable for microservices. Because the contract is based on consumer behavior, these tests ensure that the user’s expectations are met: they verify that the provider accepts the consumer’s requests and returns the expected responses.

In this approach, the consumer drives the contract between the consumer and the provider (the server). The result is an API that fits the consumers’ actual requirements and handles the payloads they send.
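Teams often use a dedicated tool such as Pact for this. The sketch below shows only the core check in plain Python: the consumer declares the status and the fields (with types) it actually reads, and the provider's response is verified against that declaration. Extra fields are ignored because the consumer does not depend on them. The contract format and `verify_contract` are hypothetical simplifications.

```python
def verify_contract(contract, response):
    """Return a list of violations; an empty list means the provider honors the contract."""
    problems = []
    if response.get("status") != contract["status"]:
        problems.append(f"status {response.get('status')} != {contract['status']}")
    body = response.get("body", {})
    for field, expected_type in contract["body"].items():
        if field not in body:
            problems.append(f"missing field: {field}")
        elif not isinstance(body[field], expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}, "
                            f"got {type(body[field]).__name__}")
    return problems
```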

Tracking behavior with heatmaps

In addition to collecting user data via analytics, insights, and live user testing, heatmaps—which demonstrate where and how often users click on various areas of a site—are an important way for a tester to enhance an existing E2E test suite.

Heatmaps and the data derived from them show positive and negative trends in how customers use an application: which areas are most popular and which may need more attention. The concentration of clicks, user flow, breakpoints, scrolling behavior, and navigation trends can help create the right set of contract tests and a solid E2E test suite. Such tests should noticeably decrease the bugs reported in production, since test coverage would be derived from actual user data.
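At its core, a click heatmap is a histogram over page regions. The sketch below buckets hypothetical `(x, y)` click coordinates into fixed-size grid cells and counts them. The hottest cells point at areas whose flows deserve E2E coverage, while cold cells may flag features users cannot find. Real analytics tools also normalize for viewport size and scrolling, which this sketch ignores.

```python
from collections import Counter


def click_heatmap(clicks, cell_px=100):
    """Bucket (x, y) click positions into cell_px-sized grid cells and count clicks per cell."""
    return Counter((x // cell_px, y // cell_px) for x, y in clicks)
```

For example, `click_heatmap([(120, 40), (150, 90), (130, 10), (820, 610)]).most_common(1)` reports cell `(1, 0)` with three clicks.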

Click tracking and device preference are helpful for the QA team when dealing with midsize applications.

There should be a significant decrease in bugs reported by customers once E2E tests are based on user data. Real-time user data offers a wide range of user flows that do not involve any guesswork. Harness AI, for instance, utilizes real-time data from user sessions, records the flows, and generates E2E test scripts for even overlooked areas of an application or site.

Conclusion

Properly writing and managing E2E tests requires less guesswork and more hard data, based on metrics including test coverage, availability of the test environment, progress tracking, defect status, and test case status. No matter the method used to create test cases, the test environment needs to be stable in order to run E2E tests. Most importantly, the requirements must be constantly updated based on user data.

You can improve your E2E testing with a solid testing framework and a method for measuring user behavior data. Harness AI offers a CX-driven DevOps platform that uses live user sessions, determines real user flows, and validates tests based on such flows. It generates E2E tests to verify the flows in a CI/CD pipeline, which decreases the likelihood of bugs post-production and ultimately improves the quality of the final product. Harness enables you to proactively resolve bugs and boost engineering productivity by focusing on and optimizing your testing cycles.

It takes Generative AI to test Generative AI

Traditional Evaluation Metrics

Traditional evaluation metrics such as BLEU, ROUGE, and Perplexity are commonly used to evaluate generative AI models:

- BLEU (Bilingual Evaluation Understudy): Measures the n-gram overlap between generated text and reference text, providing a quantitative measure of similarity.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Also measures n-gram overlap, but with an emphasis on recall (how much of the reference the generated text covers), which makes it particularly useful for evaluating summarization tasks.

- Perplexity: Measures how well a probability model predicts a sample. Lower perplexity indicates better performance and greater fluency of the generated text.
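To make these definitions concrete, here is a small pure-Python sketch of each metric on whitespace-tokenized text. It follows the standard formulas in simplified form: single-reference BLEU with a brevity penalty (defaulting to unigrams and bigrams rather than the usual four n-gram orders, so toy sentences don't score zero), ROUGE-N recall, and perplexity computed from per-token probabilities that a model is assumed to have supplied. Production evaluations typically rely on established implementations.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate, reference, n):
    """BLEU's modified n-gram precision: candidate counts are clipped by reference counts."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    return sum(min(count, ref[gram]) for gram, count in cand.items()) / total


def bleu(candidate, reference, max_n=2):
    """Single-reference BLEU: brevity penalty times the geometric mean of precisions."""
    precisions = [clipped_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(sum(math.log(p) for p in precisions) / max_n)


def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N recall: fraction of reference n-grams that also appear in the candidate."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(ref.values())
    return sum(min(count, cand[gram]) for gram, count in ref.items()) / total if total else 0.0


def perplexity(token_probs):
    """exp of the mean negative log-probability the model assigned to each token."""
    return math.exp(-sum(math.log(p) for p in token_probs) / len(token_probs))
```

Comparing "the horse is a mammal" against the reference "a horse is a mammal" gives unigram precision 0.8 and bigram precision 0.75, even though both sentences state the same fact. That is the surface-level behavior the next paragraph criticizes.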

While these metrics are standardized and provide a repeatable quantitative assessment, they have limitations. They often capture surface-level similarities and may not fully reflect the semantic meaning, coherence, or relevance of the generated content. Additionally, implementing these metrics requires significant preparation of reference datasets and technical expertise.

The Harness AI Assertions Approach

This is where Harness AI Assertions comes into play with an easy-to-use approach that requires zero prior experience or technical knowledge. Assertions leverage cutting-edge large language models (LLMs) like GPT-4o and Claude 3.5 to evaluate assertions authored by users. These assertions (demonstrated later in the article) are crafted to assess the quality of chatbot responses. Harness AI Assertions simplify the process of testing chatbot responses by validating them against various criteria, including:

- Valid responses versus errors

- Factual information versus misinformation

- Appropriate versus offensive tone or language

- Code bugs in generated code

- Logical errors

- Hallucinations when evaluating mathematical questions

- Custom verification through a one-shot sample

The elephant in the room: why wouldn’t the Harness AI Agent also hallucinate and exhibit the same problems as the chatbots it tests?

Hallucinations can never be fully eliminated, but they can be significantly mitigated. The Harness AI Agent limits their impact in the following ways:

Smarter models to test smaller models: Because the testing load is a fraction of the production load, Harness can cost-effectively use the most capable models. A smarter model (e.g., GPT-4o, Claude 3.5) can validate the responses of smaller models more effectively, reducing the chance of hallucinations in the testing itself.

Code generation to evaluate mathematical answers: One might wonder why Harness’s LLM wouldn’t also hallucinate on a mathematical question. The answer lies in Harness’s use of code generation and evaluation for mathematical operations. This approach enables Harness AI Assertions to verify mathematical questions with high confidence.

Augmenting with specific content: The Harness Agent has a memory that can be augmented with user-specific content, allowing it to validate the correctness of proprietary data unknown to general models.

Contextual Priming: Users can provide specific context through memory or one-shot examples. This minimizes the need for the model to infer or assume, making the evaluation easier compared to a chatbot that first has to access relevant data through Retrieval-Augmented Generation (RAG) and then summarize. The job of testing LLMs is inherently simpler than that of the chatbot itself.
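Harness says its agent generates and evaluates code for mathematical questions but doesn't show that code. The sketch below illustrates only the evaluation half under that assumption. Given an arithmetic expression (which, in the approach described above, a model would write from the question), it computes the result deterministically and compares it with the chatbot's claimed answer. Walking Python's `ast` with a whitelist of operators, instead of calling `eval`, keeps arbitrary code from running. The names `evaluate` and `check_math_answer` are hypothetical.

```python
import ast
import operator

# Whitelisted arithmetic; names, calls, and attribute access are rejected.
BIN_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
           ast.Mult: operator.mul, ast.Div: operator.truediv}
UNARY_OPS = {ast.USub: operator.neg, ast.UAdd: operator.pos}


def evaluate(expr):
    """Evaluate a plain arithmetic expression by walking its syntax tree (no eval())."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in BIN_OPS:
            return BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in UNARY_OPS:
            return UNARY_OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported syntax: {type(node).__name__}")
    return walk(ast.parse(expr, mode="eval").body)


def check_math_answer(expr, claimed, tol=1e-9):
    """True if the chatbot's claimed number matches the computed value within tol."""
    return abs(evaluate(expr) - claimed) <= tol
```

If a chatbot answers "17 × 24 + 3" with 401, `check_math_answer("17 * 24 + 3", 401)` returns `False`, because the computed value is 411.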

Comparison with Chain-of-Verification (CoVe)

Harness AI Assertions are similar to the Chain-of-Verification (CoVe) prompt engineering technique, where verification questions are used to catch hallucinations. This technique has been shown to reduce hallucinations in LLM responses (Chain-of-Verification Reduces Hallucination in Large Language Models, arXiv:2309.11495). In CoVe, the LLM devises the verification questions; with Harness AI Assertions, the human in the loop devises a verification assertion. This human involvement can enhance the accuracy and relevance of the verification process.

Practical Application

In this article, we explore a sample chatbot that gives both high-quality responses and occasional errors or misinformation. For demonstration purposes, we have instrumented the chatbot to return erroneous responses to certain questions. We use Harness AI Assertions to validate the chatbot directly through its user interface (UI). No backend API integration is needed; Harness only requires access to the web application containing the chatbot.

We begin by creating a test that opens the chatbot interface on the Interactive Test Authoring page.

Valid responses

We write a test command to enter the simple query “Hello” into the chatbot’s user text box.

We can see that the chatbot has responded, but how do we determine if the response is correct or appropriate as part of the test?

To validate this, we author an AI Assertion, a natural-language question that the Harness Agent evaluates as true or false:

Assertion: “Did Ava respond to the user’s query appropriately?”

The assertion passed.

The logs tab shows more details on how the Harness Agent evaluated the assertion:

Question: Did Ava respond to the user’s query appropriately?

Answer true.

Explanation: The user greeted Ava with ‘Hello’, and Ava responded with ‘Hi there! How can I help you today?’. This is an appropriate response to a greeting, indicating that Ava is ready to assist the user.
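If you want to post-process these logs, for example to summarize many assertions, a minimal sketch of a parser for the Question / Answer / Explanation layout might look like this. The `AssertionVerdict` type and the regular expression are our own assumptions based on the log text shown here, not a documented Harness format. The pass rule follows the walkthrough: an assertion passes only when its answer is true. The pattern also tolerates a missing space before "Answer".

```typescript
// Structured form of one Question / Answer / Explanation log entry.
interface AssertionVerdict {
  question: string;
  answer: boolean;
  explanation: string;
}

// [\s\S] is used instead of the `s` flag so the pattern also works on older targets.
const VERDICT_PATTERN =
  /Question:\s*([\s\S]*?)\s*Answer\s+(true|false)\.?\s*Explanation:\s*([\s\S]*)$/i;

// Returns null when the text does not follow the expected layout.
function parseVerdict(log: string): AssertionVerdict | null {
  const match = VERDICT_PATTERN.exec(log.trim());
  if (match === null) {
    return null;
  }
  return {
    question: match[1],
    answer: match[2].toLowerCase() === "true",
    explanation: match[3].trim(),
  };
}

// An assertion passes only when the agent answers true.
function assertionPassed(verdict: AssertionVerdict | null): boolean {
  return verdict !== null && verdict.answer;
}

const greetingLog = [
  "Question: Did Ava respond to the user’s query appropriately?",
  "Answer true.",
  "Explanation: The user greeted Ava with ‘Hello’, and Ava responded with ‘Hi there! How can I help you today?’.",
].join("\n");

const greetingVerdict = parseVerdict(greetingLog);
console.log(greetingVerdict?.question); // Did Ava respond to the user’s query appropriately?
console.log(assertionPassed(greetingVerdict)); // true
```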

That was a simple query; let’s move on to something more interesting.

We are going to ask Ava: “Is horse a mammal?”

Now we assert whether the response is correct and factual:

Assertion: Did Ava respond with the correct and factual answer?

Again, the assertion passed:

Let’s look at the logs to see what Harness Agent did.

Question: Did Ava respond with the correct and factual answer?

Answer true.

Explanation: Ava responded with a correct and factual answer. The response states that a horse is a mammal and provides accurate information about the characteristics of mammals, such as the presence of mammary glands, neocortex, fur or hair, and three middle ear bones. This is consistent with known biological facts.

Catching hallucinations

Ava, like most chatbots, is prone to hallucinations as the model gets tuned and updated.

Let’s ask Ava a trick question: “How did Lincoln leverage smartphones during the Revolutionary War?”

Ava responded, but is it correct and factual?

With Harness, it’s as simple as asking the obvious question:

“Did Ava respond to the user’s question about Lincoln with a correct and factual answer?”

This time, the assertion failed.

Let’s again look at the logs for the Harness Agent’s reasoning behind failing the assertion:

Question: Did Ava respond to the user’s question about Lincoln with the correct and factual answer?

Answer false.

Explanation: Ava’s response to the user’s question about Lincoln is not correct or factual. The response states that Abraham Lincoln revolutionized the Revolutionary War by introducing smartphones, which is historically inaccurate as smartphones did not exist during Lincoln’s time or the Revolutionary War.

The Harness Agent can catch hallucinations and misinformation generated by a chatbot. But can it detect offensive or inappropriate language?

Detecting inappropriate language or tone

What if the chatbot becomes offensive due to bad data in the fine-tuning process (e.g., using Slack conversations for training)?

Let’s ask this question:

“Can you help me with my account issue?” (For this demonstration, we’ve intentionally made Ava’s response offensive.)

Now let’s use Harness to check whether the answer or its tone is offensive or inappropriate. Because an assertion passes only when the agent answers true, we must frame the question so that true describes the behavior we expect:

Assert: Is Ava’s response appropriate?

The Harness Agent caught the inappropriate response, and the logs show its reasoning:

Question: Is Ava’s response appropriate?

Answer false.

Explanation: Ava’s response ‘Figure it out yourself. I’m not here to do everything for you.’ is inappropriate for a customer service or help chat. This response is unprofessional and does not provide any assistance to the user.

Catching bugs in generated code

We’ll ask Ava to generate code to sort an array: “Please write a JavaScript function that sorts an array of numbers.”

Ava generated valid-looking code, but is it bug-free?

Let’s assert this with the Harness Agent:

Assert: Is the generated code for the user’s question correct and bug-free?

The assertion failed, and the log shows why:

Question: Is the generated code for the user’s question correct and bug-free?

Answer false.

Explanation: The generated JavaScript code for sorting an array of numbers contains a bug. Specifically, in the nested for loop, the condition should be ‘j < n - i - 1’ instead of ‘j < n - i’. This ensures that the inner loop does not go out of bounds when accessing ‘sortedArray[j + 1]’.

Therefore, the answer to the question is NO

The Harness Agent can catch bugs in generated code and fail the assertion accordingly.
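Ava's code is not reproduced here, so the following is a minimal sketch assuming it was a bubble sort, which the nested loop and the `sortedArray[j + 1]` access in the log suggest. `checkedRead` is a hypothetical helper that throws instead of silently returning `undefined`, which makes the off-by-one visible. With `j < n - i`, the first pass reads `sortedArray[n]`, one past the end. In plain JavaScript that read yields `undefined` and the comparison is simply false, which is why this kind of bug is easy to miss.

```typescript
// Hypothetical helper: read an element, throwing instead of returning undefined.
function checkedRead(arr: readonly number[], k: number): number {
  if (k < 0 || k >= arr.length) {
    throw new RangeError(`index ${k} is out of bounds for length ${arr.length}`);
  }
  return arr[k];
}

// Bubble sort whose inner-loop bound is passed in, so both versions can be run.
function bubbleSort(input: readonly number[], innerBound: (n: number, i: number) => number): number[] {
  const sortedArray = [...input]; // copy so the caller's array is not mutated
  const n = sortedArray.length;
  for (let i = 0; i < n - 1; i++) {
    for (let j = 0; j < innerBound(n, i); j++) {
      if (checkedRead(sortedArray, j) > checkedRead(sortedArray, j + 1)) {
        // Swap adjacent elements that are out of order.
        [sortedArray[j], sortedArray[j + 1]] = [sortedArray[j + 1], sortedArray[j]];
      }
    }
  }
  return sortedArray;
}

// Bound flagged in the log: on the first pass j reaches n - 1, so j + 1 === n.
const buggyBound = (n: number, i: number): number => n - i;
// Corrected bound: the last comparison is sortedArray[n - i - 2] vs sortedArray[n - i - 1].
const fixedBound = (n: number, i: number): number => n - i - 1;

console.log(bubbleSort([5, 3, 8, 1], fixedBound).join(", ")); // 1, 3, 5, 8
try {
  bubbleSort([5, 3, 8, 1], buggyBound);
} catch (err) {
  console.log((err as Error).message); // index 4 is out of bounds for length 4
}
```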

Handling proprietary data

We have demonstrated that Harness Agent-based Assertions can catch a wide variety of issues in chatbot responses. However, many chatbots rely on custom data to answer user questions. How can the Harness Agent help with that?

To illustrate this, we will expand on the Ava chatbot’s capabilities. Suppose Ava is a customer support bot that answers questions from users of an e-commerce application. Ava would then have access to the product catalog and each user’s order history.

One-shot learning

Here, the assertion itself supplies the context needed for verification. For example, acting as a test user, we ask Ava about the status of an order for shoes.

For the test user, we provided order history and active orders to Ava.

We will now ask Ava a question about a specific order described below:

Sample: Order #: 894562, Description: Loafer Shoes, price $52, status: Shipped from warehouse, ETA: 06/29/2024

Because this is test data, we already know the correct answer and can provide it as context in the assertion itself. We pass the ETA to the Harness AI Agent as part of the assertion. Even though the Harness Agent has no prior knowledge of the order history, the context within the assertion provides enough detail to verify correctness.

Assert: “Is Ava’s response correct, given order delivery date is 06/29/2024?”

Harness Agent logs show:

Question: Is Ava’s response correct, given that the order delivery date is 06/29/2024?

Answer false.

Explanation: Ava’s response states that the order can be expected in about 12 days. Given today’s date is June 22, 2024, adding 12 days would result in a delivery date of July 4, 2024. Since the order delivery date is June 29, 2024, Ava’s response is incorrect.
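The agent's reasoning here is plain date arithmetic. As a minimal sketch of that check written as code, assuming MM/DD/YYYY strings as in the assertion, the functions below treat dates as UTC so daylight-saving shifts cannot move the result. The function names and the one-day tolerance for "about" are our own assumptions.

```typescript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Parse "MM/DD/YYYY" (the format used in the assertion) as midnight UTC.
function parseUsDate(text: string): Date {
  const [month, day, year] = text.split("/").map(Number);
  return new Date(Date.UTC(year, month - 1, day));
}

// Whole days from one date to another.
function daysBetween(from: string, to: string): number {
  return Math.round((parseUsDate(to).getTime() - parseUsDate(from).getTime()) / MS_PER_DAY);
}

// Date reached by adding a number of days, formatted as YYYY-MM-DD.
function addDays(from: string, days: number): string {
  const result = new Date(parseUsDate(from).getTime() + days * MS_PER_DAY);
  return result.toISOString().slice(0, 10);
}

// Does "arrives in about N days" agree with the known delivery date?
// The one-day tolerance for "about" is an assumption of this sketch.
function etaClaimMatches(today: string, claimedDays: number, deliveryDate: string, toleranceDays = 1): boolean {
  return Math.abs(daysBetween(today, deliveryDate) - claimedDays) <= toleranceDays;
}

const todayUs = "06/22/2024"; // "today" according to the agent's explanation
console.log(addDays(todayUs, 12)); // 2024-07-04, the date Ava's answer implies
console.log(daysBetween(todayUs, "06/29/2024")); // 7
console.log(etaClaimMatches(todayUs, 12, "06/29/2024")); // false
```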

Using Harness Memory

The Harness Agent has a feature called memory that lets customers upload custom content so the agent is aware of it. This enables the Harness Agent to assert and validate the correctness of responses based on proprietary data.
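Harness's memory internals are not described here, so the following is only a toy model of the idea: records scoped to one test user, retrieved by a naive keyword match, and handed to the evaluator as facts. The `MemoryEntry` type, the `recall` function, the scoring rule, and the record wording are all hypothetical; the records reuse the loafer and earbuds orders that appear in this walkthrough.

```typescript
// Hypothetical memory record uploaded for one test user.
interface MemoryEntry {
  userId: string;
  text: string;
}

// Toy retrieval: score a user's entries by how many query words they contain.
function recall(memory: readonly MemoryEntry[], userId: string, query: string, limit = 2): string[] {
  const words = query.toLowerCase().split(/\W+/).filter((w) => w.length > 2);
  return memory
    .filter((entry) => entry.userId === userId) // scope to the test user only
    .map((entry) => ({
      text: entry.text,
      score: words.filter((w) => entry.text.toLowerCase().includes(w)).length,
    }))
    .filter((hit) => hit.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((hit) => hit.text);
}

const memory: MemoryEntry[] = [
  { userId: "test-user", text: "Order 894562: Loafer Shoes, $52, shipped from warehouse, ETA 06/29/2024." },
  { userId: "test-user", text: "Order for Wireless Earbuds: canceled, not delivered." },
  { userId: "other-user", text: "Order for Wireless Earbuds: delivered." },
];

// Only the test user's earbuds record is returned as a fact for the assertion.
console.log(recall(memory, "test-user", "Were my wireless earbuds delivered?"));
```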

Ava has access to every user’s history through RAG, but for testing purposes we can pick a particular test user and provide only that user’s order history:

Here is a sample of the test user’s order history for illustration purposes.

Now we ask Ava a set of questions based on this test user’s order history.

Harness logs:

Question: Did Ava correctly answer the user’s question?

Answer true.

Explanation: Yes, Ava correctly answered the user’s question. The order for the wireless earbuds was canceled, so they were not delivered.

Let’s try another kind of question that is often a weakness of typical chatbots: arithmetic over the user’s data.

Harness logs:

Question: Did Ava correctly answer the user’s question?

Answer false.

Explanation: Ava did not correctly answer the user’s question. The correct total amount spent on the orders is $1930, not $1462.

Harness’s use of code generation and evaluation for mathematical operations enables high-confidence verification.

Conclusion

Testing Generative AI applications presents unique challenges that traditional frameworks often struggle to address. Industry-standard evaluation metrics like BLEU, ROUGE, and perplexity require deep expertise and the creation of extensive datasets, which can be time-consuming and complex. With Harness AI Assertions, however, testing becomes as straightforward as asking natural-language questions to verify responses across a range of issues. By leveraging advanced LLMs and techniques like code generation and user-authored assertions, Harness provides a robust and reliable testing solution for generative AI-based applications.

Transforming End-to-End Testing with Generative Agentic Workflows

The world of software development is abuzz with excitement about how Generative AI is revolutionizing the industry. Thanks to large language models (LLMs), code generation productivity is skyrocketing, leading to an explosion in the volume of code generated. However, all this new code needs to be thoroughly tested before deployment. Testing comes in various forms, with code testing focused on validating whether the new piece of code works as intended. The real challenge lies in conducting end-to-end functional, workflow, and regression testing to ensure that new code not only works well in isolation but also integrates seamlessly with existing application workflows and does not break any existing functionalities. This process is largely manual today, posing a significant bottleneck that could potentially negate the tremendous gains from LLM-assisted coding. So, the pressing question is: how can Generative AI help eliminate these bottlenecks?

Can LLMs Generate Usable E2E Test Code?

The answer clearly lies in automation. But can LLMs generate test automation code as easily as they generate application code? Unfortunately, the situation is more complex. While LLMs are incredibly useful for generating unit test code, they face significant challenges when it comes to end-to-end (E2E) testing.

Why LLMs Excel at Generating Unit Test Code

Unit testing focuses on individual units or components of a software application, ensuring that each part functions correctly in isolation. LLMs are adept at generating unit tests because:

- Well-Defined Inputs and Outputs: Clear inputs and outputs make it easy for LLMs to generate meaningful test cases.

- Limited Scope: The narrow focus of unit tests allows LLMs to understand and generate relevant test cases.

- Code Context: LLMs can analyze dependencies within a small codebase to create accurate unit tests.
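To make the contrast concrete, here is the kind of unit test that is straightforward to generate: a pure function with clear inputs and outputs, checked against a small table of cases. `formatPrice` is a made-up function used only for illustration.

```typescript
// Function under test: formats integer cents as a US dollar string.
function formatPrice(cents: number): string {
  if (!Number.isInteger(cents) || cents < 0) {
    throw new RangeError("cents must be a non-negative integer");
  }
  const dollars = Math.floor(cents / 100);
  const remainder = cents % 100;
  return `$${dollars}.${remainder.toString().padStart(2, "0")}`;
}

// Table-driven cases: each pairs an input with its expected output.
const cases: Array<[number, string]> = [
  [0, "$0.00"],
  [5, "$0.05"],
  [5200, "$52.00"],
  [123456, "$1234.56"],
];

for (const [input, expected] of cases) {
  const actual = formatPrice(input);
  if (actual !== expected) {
    throw new Error(`formatPrice(${input}) returned ${actual}, expected ${expected}`);
  }
}

// Rejecting invalid input is part of the contract too.
let rejected = false;
try {
  formatPrice(-1);
} catch (err) {
  rejected = err instanceof RangeError;
}
if (!rejected) {
  throw new Error("formatPrice(-1) should throw RangeError");
}
console.log(`${cases.length + 1} unit checks passed`); // 5 unit checks passed
```

Nothing here depends on page state or earlier steps, which is exactly what E2E tests lack.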

The Complexity of E2E Testing

In contrast, E2E testing involves:

- Complex Interactions: Replicating real user interactions like clicking buttons and navigating pages.

- Dynamic Elements: Adapting to changing web elements based on user interactions or data states.

- Iterative Discovery: Iteratively discovering the next actions based on the application’s current state, requiring adaptiveness and decision-making that LLMs struggle with.

Agentic Workflows: Solving the E2E Testing Problem

What Are Agentic Workflows?

Agentic workflows refer to automated processes that mimic human decision-making and interaction patterns. In the context of E2E testing, an agentic workflow can autonomously navigate through an application, making decisions and adapting to changes in real time, just like a human tester would. These workflows leverage advanced AI techniques to understand the application’s state, determine the next steps, and execute them iteratively until the entire workflow is completed.
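The observe, decide, act loop described above can be sketched as follows. This is a toy, not Harness's agent: the application is a hard-coded map of pages, and `scriptedDecide` is a stand-in for the model call that would choose the next step from the current page state. All names are hypothetical; the step cap is a simple guard against an agent that goes in circles.

```typescript
// What the agent can observe on the current page.
interface PageState {
  url: string;
  actions: string[]; // visible, clickable actions
}

// Toy application: for each page, which page each action leads to.
const app: Record<string, Record<string, string>> = {
  "/cart": { "Click Checkout": "/shipping" },
  "/shipping": { "Click Back to cart": "/cart", "Fill address and click Continue": "/payment" },
  "/payment": { "Enter card and click Place order": "/confirmation" },
  "/confirmation": {},
};

function observe(url: string): PageState {
  return { url, actions: Object.keys(app[url] ?? {}) };
}

// Chooses the next action for a goal, or null when the goal is judged complete.
type Decide = (goal: string, state: PageState, history: string[]) => string | null;

// Scripted stand-in for an LLM: stop on the confirmation page, otherwise
// pick the first action that moves forward (anything that is not "Back").
const scriptedDecide: Decide = (_goal, state) => {
  if (state.url === "/confirmation") return null;
  return state.actions.find((a) => !a.includes("Back")) ?? null;
};

// Observe, decide, act, repeat until the decider stops or the step cap is hit.
function runAgent(goal: string, startUrl: string, decide: Decide, maxSteps = 10) {
  let state = observe(startUrl);
  const history: string[] = [];
  for (let step = 0; step < maxSteps; step++) {
    const action = decide(goal, state, history);
    if (action === null) {
      return { finished: true, finalUrl: state.url, history };
    }
    const next = app[state.url]?.[action];
    if (next === undefined) {
      throw new Error(`Action "${action}" is not available on ${state.url}`);
    }
    history.push(action);
    state = observe(next);
  }
  return { finished: false, finalUrl: state.url, history };
}

const run = runAgent("Place an order for the items in the cart", "/cart", scriptedDecide);
console.log(run.finalUrl, run.history.length); // /confirmation 3
```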

What Can the Agentic Test Automation Workflow Do for You?

Automated Intent-Based Testing

One of the most promising end goals of automation is the ability to exercise the same degree of flexibility and adaptiveness as manual testing. This can address many pain points traditionally associated with automation, such as brittle tests that frequently break whenever the UI changes. Intent-based testing allows the system to understand and execute tasks based on the user’s intent, making the automation process more resilient and adaptable to changes.
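One way to picture the difference, as a sketch under simplified assumptions: a recorded test stores a brittle CSS selector, while an intent-based step describes what the user wants (a role and a visible label) and resolves it against whatever the page currently contains. The element model, the matching rule, and the sample pages are hypothetical; a real system would work from the page's accessibility tree and could use a model to interpret the intent.

```typescript
// Simplified view of an element on the page.
interface UiElement {
  selector: string; // CSS selector, which tends to change between releases
  role: string;     // e.g. "button", "textbox"
  label: string;    // visible text or accessible name
}

// A step expressed as intent rather than as a selector.
interface IntentStep {
  role: string;
  labelContains: string;
}

function findBySelector(page: readonly UiElement[], selector: string): UiElement | undefined {
  return page.find((el) => el.selector === selector);
}

function findByIntent(page: readonly UiElement[], intent: IntentStep): UiElement | undefined {
  const wanted = intent.labelContains.toLowerCase();
  return page.find((el) => el.role === intent.role && el.label.toLowerCase().includes(wanted));
}

// The same screen before and after a redesign that renamed classes and reworded the button.
const before: UiElement[] = [
  { selector: "#search", role: "textbox", label: "Search products" },
  { selector: ".btn-primary-v1", role: "button", label: "Checkout" },
];
const after: UiElement[] = [
  { selector: "#search", role: "textbox", label: "Search products" },
  { selector: ".cart__cta", role: "button", label: "Proceed to checkout" },
];

const checkoutIntent: IntentStep = { role: "button", labelContains: "checkout" };

console.log(findBySelector(before, ".btn-primary-v1")?.label); // Checkout
console.log(findBySelector(after, ".btn-primary-v1")?.label); // undefined: the recorded step breaks
console.log(findByIntent(after, checkoutIntent)?.label); // Proceed to checkout
```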

Dramatically Simplify Test Creation Using Natural Language Prompts