Top Continuous Integration Metrics Every Platform Engineering Leader Should Track

Your developers complain about 20-minute builds while your cloud bill spirals out of control. Pipeline sprawl across teams creates security gaps you can't even see. These aren't separate problems. They're symptoms of a lack of actionable data on what actually drives velocity and cost.

The right CI metrics transform reactive firefighting into proactive optimization. With analytics data from Harness CI, platform engineering leaders can cut build times, control spend, and maintain governance without slowing teams down.

Why Do CI Metrics Matter for Platform Engineering Leaders?

Platform teams who track the right CI metrics can quantify exactly how much developer time they're saving, control cloud spending, and maintain security standards while preserving development velocity. The importance of tracking CI/CD metrics lies in connecting pipeline performance directly to measurable business outcomes.

Reclaim Hours Through Speed Metrics

Build time, queue time, and failure rates directly translate to developer hours saved or lost. Research shows that 78% of developers feel more productive with CI, and most want builds under 10 minutes. Tracking median build duration and 95th percentile outliers can reveal your productivity bottlenecks.

Harness CI delivers builds up to 8X faster than traditional tools, turning this insight into action.

Turn Compute Minutes Into Budget Predictability

Cost per build and compute minutes by pipeline eliminate the guesswork from cloud spending. AWS CodePipeline charges $0.002 per action-execution-minute, making monthly costs straightforward to calculate from your pipeline metrics.

Measuring across teams helps you spot expensive pipelines, optimize resource usage, and justify infrastructure investments with concrete ROI.
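As a rough illustration of that arithmetic, the sketch below multiplies action-execution minutes by the $0.002 rate quoted above. The pipeline names and minute counts are made-up sample data, and a real bill may include free tiers or other charges that this does not model.

```python
# Rate quoted in the text: USD per action-execution-minute.
RATE_PER_MINUTE = 0.002

# Hypothetical monthly action-execution minutes per pipeline (sample data).
monthly_minutes = {
    "payments-api": 42_000,
    "web-frontend": 18_500,
}


def monthly_cost(minutes: int, rate: float = RATE_PER_MINUTE) -> float:
    """Estimated monthly cost in USD, rounded to cents."""
    return round(minutes * rate, 2)


costs = {name: monthly_cost(m) for name, m in monthly_minutes.items()}
for name, cost in costs.items():
    print(f"{name}: ${cost:.2f}")
```

Sorting `costs` by value is a quick way to surface the most expensive pipelines first.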

Measure Artifact Integrity at Scale

SBOM completeness, artifact integrity, and policy pass rates ensure your software supply chain meets security standards without creating development bottlenecks. NIST and related EO 14028 guidance emphasize machine-readable SBOMs and automated hash verification for all artifacts.

However, measurement consistency remains challenging. A recent systematic review found that SBOM tooling variance creates significant detection gaps, with tools reporting between 43,553 and 309,022 vulnerabilities across the same 1,151 SBOMs.

Standardized metrics help you monitor SBOM generation rates and policy enforcement without manual oversight.

10 CI/CD Metrics That Move the Needle

Not all metrics deserve your attention. Platform engineering leaders managing 200+ developers need measurements that reveal where time, money, and reliability break down, and where to fix them first.

- Performance metrics show where developers wait instead of coding. High-performing organizations achieve up to 440 times faster lead times and deploy 46 times more frequently by tracking the right speed indicators.

- Cost and resource indicators expose hidden optimization opportunities. Organizations using intelligent caching can reduce infrastructure costs by up to 76% while maintaining speed, turning pipeline data into budget predictability.

- Quality and governance metrics scale security without slowing delivery. With developers increasingly handling DevOps responsibilities, compliance and reliability measurements keep distributed teams moving fast without sacrificing standards.

So what does this look like in practice? Let's examine the specific metrics.

- Build Duration (p50/p95): Pinpointing Bottlenecks and Outliers

Build duration becomes most valuable when you track both median (p50) and 95th percentile (p95) times rather than simple averages. Research shows that timeout builds have a median duration of 19.7 minutes compared to 3.4 minutes for normal builds. That’s over five times longer.

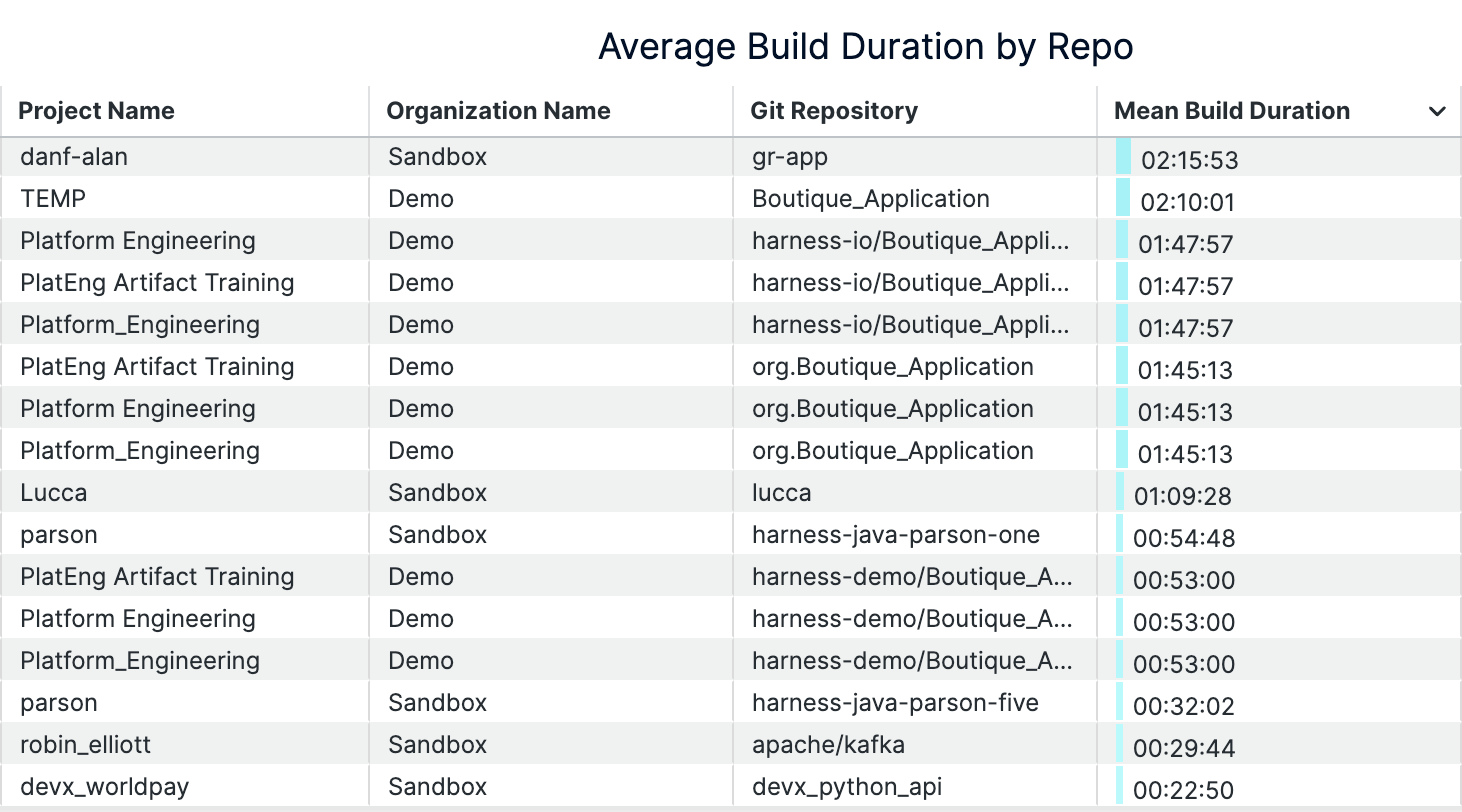

While p50 reveals your typical developer experience, p95 exposes the worst-case delays that reduce productivity and impact developer flow. These outliers often signal deeper issues like resource constraints, flaky tests, or inefficient build steps that averages would mask. Tracking trends in both percentiles over time helps you catch regressions before they become widespread problems. Build analytics platforms can surface when your p50 increases gradually or when p95 spikes indicate new bottlenecks.

Keep builds under seven minutes to maintain developer engagement. Anything over 15 minutes triggers costly context switching. By monitoring both typical and tail performance, you optimize for consistent, fast feedback loops that keep developers in flow. Intelligent test selection reduces overall build durations by up to 80% by selecting and running only tests affected by the code changes, rather than running all tests.

An example of a build duration dashboard (on Harness)
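To make the p50/p95 comparison concrete, here is a minimal sketch that uses the nearest-rank percentile method. The duration values are invented sample data, and `percentile` is a helper written for this illustration, not part of any CI platform's API.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical build durations in minutes; one build hit a timeout.
durations_min = [3.1, 3.4, 3.2, 3.8, 3.5, 3.3, 3.6, 3.4, 3.7, 19.7]

p50 = percentile(durations_min, 50)
p95 = percentile(durations_min, 95)
mean = sum(durations_min) / len(durations_min)

print(f"p50={p50} min, p95={p95} min, mean={mean:.2f} min")
```

In this sample the mean lands between the two percentiles and describes neither the typical build nor the slow outlier, which is why the two percentiles are tracked separately.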

- Queue Time: Measuring Infrastructure Constraints

Queue time measures how long builds wait before execution begins. This is a direct indicator of insufficient build capacity. When developers push code, builds shouldn't sit idle while runners or compute resources are tied up. Research shows that heterogeneous infrastructure with mixed processing speeds creates excessive queue times, especially when job routing doesn't account for worker capabilities. Queue time reveals when your infrastructure can't handle developer demand.

Rising queue times signal it's time to scale infrastructure or optimize resource allocation. Per-job waiting time thresholds directly impact throughput and quality outcomes. Platform teams can reduce queue time by moving to Harness Cloud's isolated build machines, implementing intelligent caching, or adding parallel execution capacity. Analytics dashboards track queue time trends across repositories and teams, enabling data-driven infrastructure decisions that keep developers productive.
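If your CI system exposes when a build was queued and when it started, queue time is just the difference between the two. The sketch below assumes ISO 8601 timestamps in hypothetical `queued_at` and `started_at` fields; your platform's field names will differ.

```python
from datetime import datetime


def queue_seconds(queued_at: str, started_at: str) -> float:
    """Seconds a build waited between being queued and starting to run."""
    waited = datetime.fromisoformat(started_at) - datetime.fromisoformat(queued_at)
    return waited.total_seconds()


# Hypothetical build records (sample data).
builds = [
    {"id": "b1", "queued_at": "2024-05-01T10:00:00", "started_at": "2024-05-01T10:00:20"},
    {"id": "b2", "queued_at": "2024-05-01T10:01:00", "started_at": "2024-05-01T10:03:30"},
]

waits = {b["id"]: queue_seconds(b["queued_at"], b["started_at"]) for b in builds}
print(waits)
```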

- Build Success Rate: Ensuring Pipeline Reliability

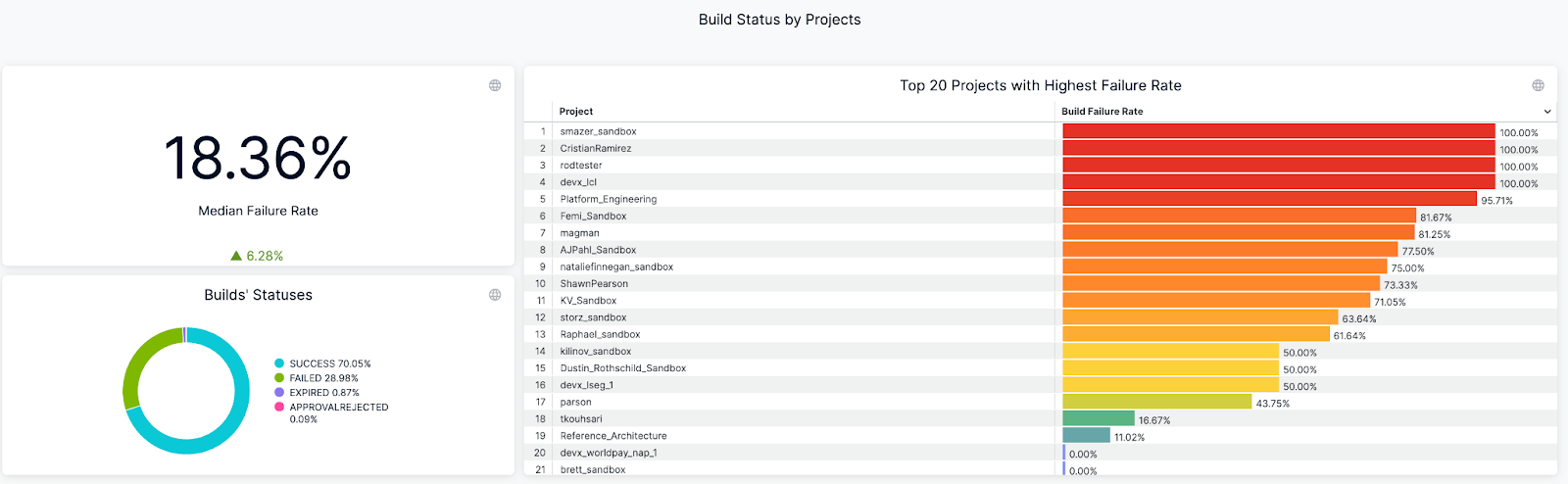

Build success rate measures the percentage of builds that complete successfully over time, revealing pipeline health and developer confidence levels. When teams consistently see success rates above 90% on their default branches, they trust their CI system to provide reliable feedback. Frequent failures signal deeper issues — flaky tests that pass and fail randomly, unstable build environments, or misconfigured pipeline steps that break under specific conditions.

Tracking success rate trends by branch, team, or service reveals where to focus improvement efforts. Slicing metrics by repository and pipeline helps you identify whether failures cluster around specific teams using legacy test frameworks or services with complex dependencies. This granular view separates legitimate experimental failures on feature branches from stability problems that undermine developer productivity and delivery confidence.

An example of a build success/failure rate dashboard (on Harness)
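Slicing success rate by branch, as described above, can be sketched in a few lines. The record shape (`branch`, `status`) and the sample builds are assumptions made for this illustration.

```python
from collections import defaultdict

# Hypothetical build records (sample data).
builds = [
    {"branch": "main", "status": "success"},
    {"branch": "main", "status": "success"},
    {"branch": "main", "status": "failed"},
    {"branch": "main", "status": "success"},
    {"branch": "feature/x", "status": "failed"},
    {"branch": "feature/x", "status": "success"},
]


def success_rate_by(records, key):
    """Return {group: fraction of successful builds} grouped by the given field."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for record in records:
        totals[record[key]] += 1
        if record["status"] == "success":
            passed[record[key]] += 1
    return {group: passed[group] / totals[group] for group in totals}


rates = success_rate_by(builds, "branch")
print(rates)
```

The same function works for any other field on the record, such as a team or repository tag.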

- Mean Time to Recovery (MTTR): Speeding Up Incident Response

Mean time to recovery measures how fast your team recovers from failed builds and broken pipelines, directly impacting developer productivity. Research shows organizations with mature CI/CD implementations see MTTR improvements of over 50% through automated detection and rollback mechanisms. When builds fail, developers experience context switching costs, feature delivery slows, and team velocity drops. The best-performing teams recover from incidents in under one hour, while others struggle with multi-hour outages that cascade across multiple teams.

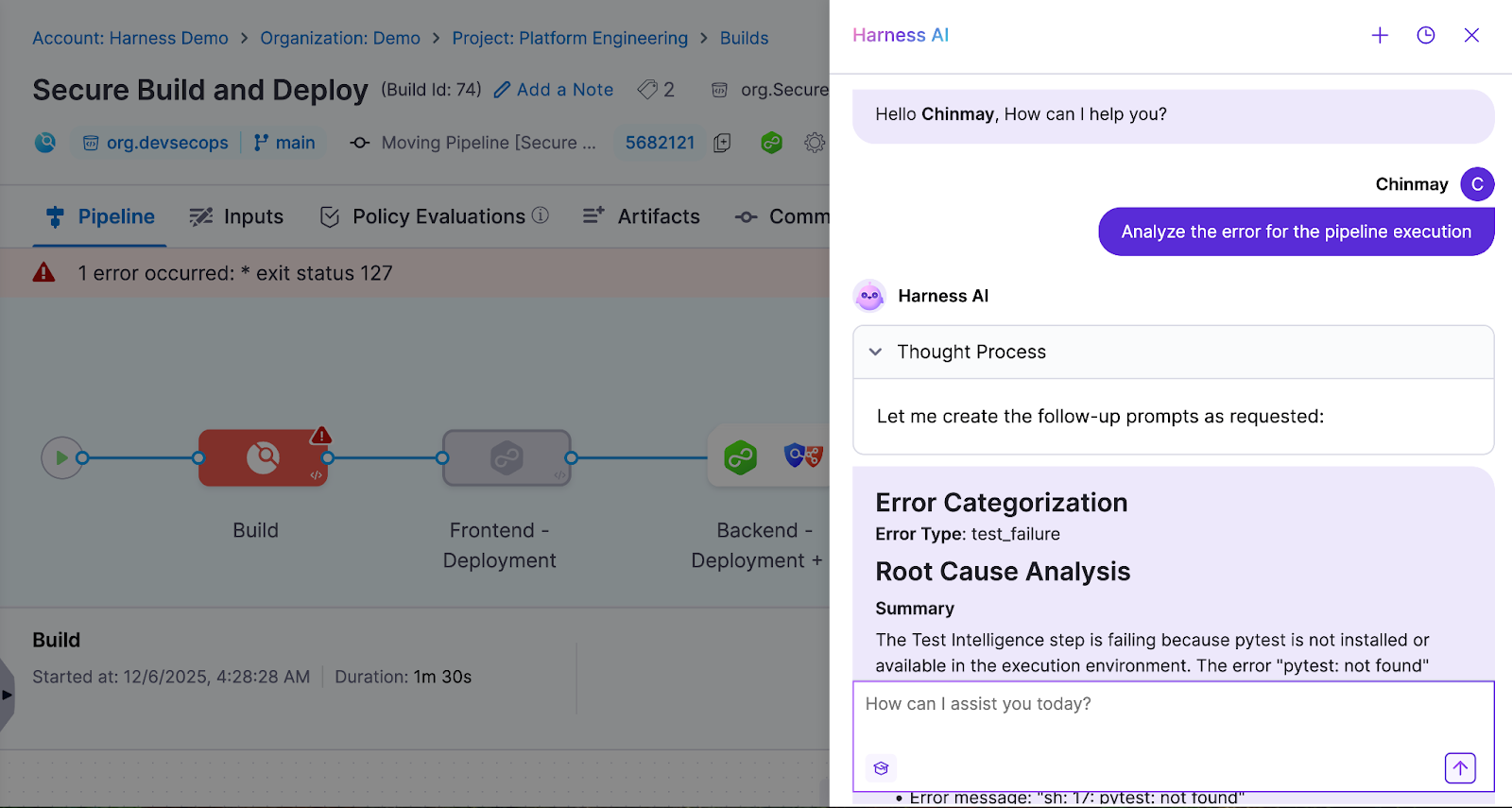

Automated alerts and root cause analysis tools slash recovery time by eliminating manual troubleshooting, reducing MTTR from 20 minutes to under 3 minutes for common failures. Harness CI's AI-powered troubleshooting surfaces failure patterns and provides instant remediation suggestions when builds break.
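One way to compute MTTR for a pipeline is to measure each outage from the first failed build to the next successful one, then average the outages. This is a simplified sketch under that definition; the event tuples are sample data, and other definitions (for example, measuring from alert time) are equally valid.

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(events):
    """events: (timestamp, status) tuples sorted by time; status is 'success' or 'failed'."""
    outages = []
    broken_since = None
    for timestamp, status in events:
        if status == "failed" and broken_since is None:
            broken_since = timestamp  # outage starts at the first failure
        elif status == "success" and broken_since is not None:
            outages.append(timestamp - broken_since)  # outage ends at the next success
            broken_since = None
    if not outages:
        return None
    return sum(outages, timedelta()) / len(outages)


t0 = datetime(2024, 5, 1, 10, 0)
events = [
    (t0, "success"),
    (t0 + timedelta(minutes=10), "failed"),
    (t0 + timedelta(minutes=20), "failed"),
    (t0 + timedelta(minutes=30), "success"),
    (t0 + timedelta(minutes=60), "failed"),
    (t0 + timedelta(minutes=100), "success"),
]

mttr = mean_time_to_recovery(events)
print(mttr)
```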

- Flaky Test Rate: Eliminating Developer Frustration

Flaky tests pass or fail non-deterministically on the same code, creating false signals that undermine developer trust in CI results. Research shows 59% of developers experience flaky tests monthly, weekly, or daily, while 47% of restarted failing builds eventually passed. This creates a cycle where developers waste time investigating false failures, rerunning builds, and questioning legitimate test results.

Tracking flaky test rate helps teams identify which tests exhibit unstable pass/fail behavior, enabling targeted stabilization efforts. Harness CI automatically detects problematic tests through failure rate analysis, quarantines flaky tests to prevent false alarms, and provides visibility into which tests exhibit the highest failure rates. This reduces developer context switching and restores confidence in CI feedback loops.
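A simple heuristic for spotting flaky tests is to look for tests that both passed and failed on the same commit. The sketch below illustrates that heuristic only; it is not a description of how Harness CI detects flakiness, and the run tuples are sample data.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """runs: (commit_sha, test_name, passed) tuples. Returns the names of flaky tests."""
    outcomes = defaultdict(set)
    for commit, test, passed in runs:
        outcomes[(commit, test)].add(passed)
    # A test is flaky if the same commit produced both a pass and a failure.
    return sorted({test for (_, test), seen in outcomes.items() if len(seen) == 2})


runs = [
    ("abc123", "test_login", True),
    ("abc123", "test_login", False),  # same code, different result: flaky
    ("abc123", "test_cart", False),
    ("abc123", "test_cart", False),   # fails consistently: a real failure
    ("def456", "test_cart", True),    # passes after a code change
]

flaky = find_flaky_tests(runs)
print(flaky)
```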

- Cost Per Build: Controlling CI Infrastructure Spend

Cost per build divides your monthly CI infrastructure spend by the number of successful builds, revealing the true economic impact of your development velocity. CI/CD pipelines consume 15-40% of overall cloud infrastructure budgets, with per-run compute costs ranging from $0.40 to $4.20 depending on application complexity, instance type, region, and duration. This normalized metric helps platform teams compare costs across different services, identify expensive outliers, and justify infrastructure investments with concrete dollar amounts rather than abstract performance gains.
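The calculation itself is a single division. The sketch below uses made-up spend and build counts purely to show the shape of the metric.

```python
def cost_per_build(monthly_spend_usd: float, successful_builds: int) -> float:
    """Monthly CI infrastructure spend divided by the number of successful builds."""
    if successful_builds <= 0:
        raise ValueError("successful_builds must be positive")
    return monthly_spend_usd / successful_builds


# Hypothetical figures: $12,000 of CI spend and 8,000 successful builds in a month.
print(f"${cost_per_build(12_000, 8_000):.2f} per build")
```

Dividing by successful builds, as the definition above does, means failed and retried builds push the number up, so the metric also reflects reliability problems.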

Automated caching and ephemeral infrastructure deliver the biggest cost reductions per build. Intelligent caching automatically stores dependencies and Docker layers. This cuts repeated download and compilation time that drives up compute costs.

Ephemeral build machines eliminate idle resource waste. They spin up fresh instances only when builds are queued, then terminate immediately after completion. Combine these approaches with right-sized compute types to reduce infrastructure costs by 32-43% compared to oversized instances.

- Cache Hit Rate: Accelerating Builds With Smart Caching

Cache hit rate measures what percentage of build tasks can reuse previously cached results instead of rebuilding from scratch. When teams achieve high cache hit rates, they see dramatic build time reductions. Docker builds can drop from five to seven minutes to under 90 seconds with effective layer caching. Smart caching of dependencies like node_modules, Docker layers, and build artifacts creates these improvements by avoiding expensive regeneration of unchanged components.

Harness Build and Cache Intelligence eliminates the manual configuration overhead that traditionally plagues cache management. It handles dependency caching and Docker layer reuse automatically. No complex cache keys or storage management required.

Measure cache effectiveness by comparing clean builds against fully cached runs. Track hit rates over time to justify infrastructure investments and detect performance regressions.
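Both measurements can be expressed as small helpers. This is a sketch with hypothetical inputs; the 360-second and 90-second figures mirror the Docker example above (roughly six minutes uncached versus 90 seconds cached).

```python
def cache_hit_rate(hits: int, misses: int) -> float:
    """Fraction of cache lookups that reused a stored result."""
    total = hits + misses
    if total == 0:
        return 0.0
    return hits / total


def time_saved_pct(clean_seconds: float, cached_seconds: float) -> float:
    """Percentage reduction in build time of a cached run versus a clean build."""
    if clean_seconds <= 0:
        raise ValueError("clean_seconds must be positive")
    return (clean_seconds - cached_seconds) / clean_seconds * 100


print(cache_hit_rate(hits=90, misses=10))
print(time_saved_pct(clean_seconds=360, cached_seconds=90))
```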

- Test Cycle Time: Optimizing Feedback Loops

Test cycle time measures how long it takes to run your complete test suite from start to finish. This directly impacts developer productivity because longer test cycles mean developers wait longer for feedback on their code changes. When test cycles stretch beyond 10-15 minutes, developers often switch context to other tasks, losing focus and momentum. Recent research shows that optimized test selection can accelerate pipelines by 5.6x while maintaining high failure detection rates.

Smart test selection optimizes these feedback loops by running only tests relevant to code changes. Harness CI Test Intelligence can slash test cycle time by up to 80% using AI to identify which tests actually need to run. This eliminates the waste of running thousands of irrelevant tests while preserving confidence in your CI deployments.

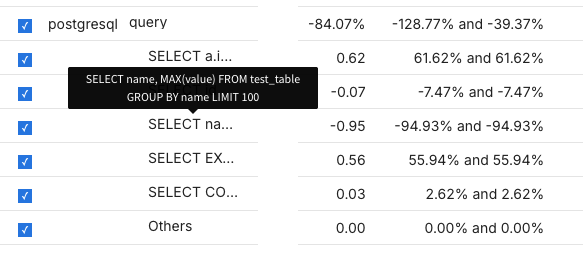

- Pipeline Failure Cause Distribution: Prioritizing Remediation

Categorizing pipeline issues into domains like code problems, infrastructure incidents, and dependency conflicts transforms chaotic build logs into actionable insights. Harness CI's AI-powered troubleshooting provides root cause analysis and remediation suggestions for build failures. This helps platform engineers focus remediation efforts on root causes that impact the most builds rather than chasing one-off incidents.

Visualizing issue distribution reveals whether problems are systemic or isolated events. Organizations using aggregated monitoring can distinguish between infrastructure spikes and persistent issues like flaky tests. Harness CI's analytics surface which pipelines and repositories have the highest failure rates. With that visibility, platform teams can reduce overall pipeline issues by 20-30%.
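Once each failure carries a cause label, the distribution is a simple tally. The category names and sample failures below are assumptions for illustration; how failures get labeled in the first place is outside the scope of this sketch.

```python
from collections import Counter

# Hypothetical cause labels, one per failed build (sample data).
failures = [
    "test_flake", "dependency", "test_flake", "infrastructure",
    "code", "test_flake", "dependency",
]

distribution = Counter(failures)
total = sum(distribution.values())

# Rank causes from most to least frequent, with each cause's share of all failures.
ranked = [
    (cause, count, round(100 * count / total, 1))
    for cause, count in distribution.most_common()
]

for cause, count, share in ranked:
    print(f"{cause}: {count} failures ({share}%)")
```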

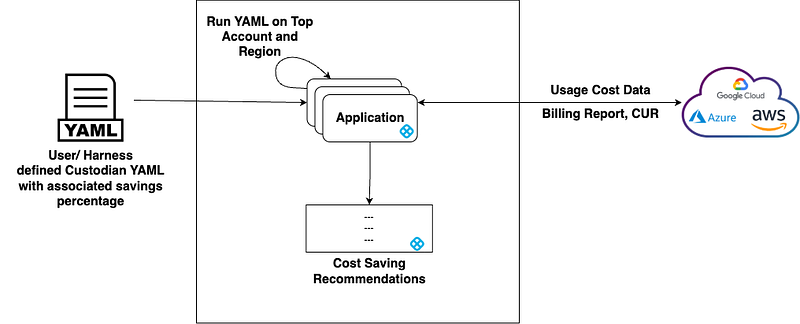

- Artifact Integrity Coverage: Securing the Software Supply Chain

Artifact integrity coverage measures the percentage of builds that produce signed, traceable artifacts with complete provenance documentation. This tracks whether each build generates Software Bills of Materials (SBOMs), digital signatures, and documentation proving where artifacts came from. While most organizations sign final software products, fewer than 20% deliver provenance data and only 3% consume SBOMs for dependency management. This makes the metric a leading indicator of supply chain security maturity.
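As a sketch of how this percentage might be computed, the function below counts a build as covered only when it produced an SBOM, a signature, and provenance. The boolean field names are hypothetical and the builds are sample data.

```python
REQUIRED_EVIDENCE = ("sbom", "signature", "provenance")


def integrity_coverage(builds) -> float:
    """Fraction of builds that produced every required piece of supply chain evidence."""
    if not builds:
        return 0.0
    covered = sum(1 for b in builds if all(b.get(k) for k in REQUIRED_EVIDENCE))
    return covered / len(builds)


# Hypothetical build records (sample data).
builds = [
    {"id": 1, "sbom": True, "signature": True, "provenance": True},
    {"id": 2, "sbom": True, "signature": True, "provenance": True},
    {"id": 3, "sbom": True, "signature": True, "provenance": False},
    {"id": 4, "sbom": True, "signature": True, "provenance": True},
]

print(f"{integrity_coverage(builds):.0%} of builds fully covered")
```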

Harness CI automatically generates SBOMs and attestations for every build, ensuring 100% coverage without developer intervention. The platform's SLSA L3 compliance capabilities generate verifiable provenance and sign artifacts using industry-standard frameworks. This eliminates the manual processes and key management challenges that prevent consistent artifact signing across CI pipelines.

Steps to Track CI/CD Metrics and Turn Insights Into Action

Tracking CI metrics effectively requires moving from raw data to measurable improvements. The most successful platform engineering teams build a systematic approach that transforms metrics into velocity gains, cost reductions, and reliable pipelines.

Step 1: Standardize Pipeline Metadata Across Teams

Tag every pipeline with service name, team identifier, repository, and cost center. This standardization creates the foundation for reliable aggregation across your entire CI infrastructure. Without consistent tags, you can't identify which teams drive the highest costs or longest build times.

Implement naming conventions that support automated analysis. Use structured formats like team-service-environment for pipeline names and standardize branch naming patterns. Centralize this metadata using automated tag enforcement to ensure organization-wide visibility.
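A naming convention is easiest to enforce when it can be checked automatically. The sketch below validates the team-service-environment format with a regular expression. The allowed environments (`dev`, `staging`, `prod`) and the rule that segments contain only lowercase letters and digits are assumptions; adjust them to your own convention.

```python
import re

# Assumed convention: <team>-<service>-<environment>, lowercase alphanumeric segments.
PIPELINE_NAME = re.compile(r"[a-z0-9]+-[a-z0-9]+-(dev|staging|prod)")


def is_valid_pipeline_name(name: str) -> bool:
    """True if the whole name matches the team-service-environment pattern."""
    return PIPELINE_NAME.fullmatch(name) is not None


for name in ["payments-checkout-prod", "Checkout_Prod", "payments-checkout-qa"]:
    print(name, is_valid_pipeline_name(name))
```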

Step 2: Automate Metric Collection and Visualization

Modern CI platforms eliminate manual metric tracking overhead. Harness CI provides dashboards that automatically surface build success rates, duration trends, and failure patterns in real-time. Teams can also integrate with monitoring stacks like Prometheus and Grafana for live visualization across multiple tools.

Configure threshold-based alerts for build duration spikes or failure rate increases. This shifts you from fixing issues after they happen to preventing them entirely.

Step 3: Analyze Metrics and Identify Optimization Opportunities

Focus on p95 and p99 percentiles rather than averages to identify critical performance outliers. Drill into failure causes and flaky tests to prioritize fixes with maximum developer impact. Categorize pipeline failures by root cause — environment issues, dependency problems, or test instability — then target the most frequent culprits first.

Benchmark cost per build and cache hit rates to uncover infrastructure savings. Optimized caching and build intelligence can reduce build times by 30-40% while cutting cloud expenses.

Step 4: Operationalize Improvements With Governance and Automation

Standardize CI pipelines using centralized templates and policy enforcement to eliminate pipeline sprawl. Store reusable templates in a central repository and require teams to extend from approved templates. This reduces maintenance overhead while ensuring consistent security scanning and artifact signing.

Establish Service Level Objectives (SLOs) for your most impactful metrics: build duration, queue time, and success rate. Set measurable targets like "95% of builds complete within 10 minutes" to drive accountability. Automate remediation wherever possible — auto-retry for transient failures, automated cache invalidation, and intelligent test selection to skip irrelevant tests.
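An SLO such as "95% of builds complete within 10 minutes" translates directly into a check. The following is a minimal sketch with sample durations; the function name and defaults are illustrative.

```python
def meets_slo(durations_min, limit_min: float = 10.0, target: float = 0.95) -> bool:
    """True if at least `target` of builds finished within `limit_min` minutes."""
    if not durations_min:
        raise ValueError("durations_min must not be empty")
    within = sum(1 for d in durations_min if d <= limit_min)
    return within / len(durations_min) >= target


# Hypothetical week of builds: 19 fast builds and one slow outlier.
week = [4.0] * 19 + [22.0]
print(meets_slo(week))

# Two slow builds out of 20 drops compliance to 90%, which misses the target.
print(meets_slo([4.0] * 18 + [22.0, 25.0]))
```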

Make Your CI Metrics Work

The difference between successful platform teams and those drowning in dashboards comes down to focus. Elite performers track build duration, queue time, flaky test rates, and cost per build because these metrics directly impact developer productivity and infrastructure spend.

Start with the measurements covered in this guide, establish baselines, and implement governance that prevents pipeline sprawl. Focus on the metrics that reveal bottlenecks, control costs, and maintain reliability — then use that data to optimize continuously.

Ready to transform your CI metrics from vanity to velocity? Experience how Harness CI accelerates builds while cutting infrastructure costs.

Continuous Integration Metrics FAQ

Platform engineering leaders often struggle with knowing which metrics actually move the needle versus creating metric overload. These answers focus on metrics that drive measurable improvements in developer velocity, cost control, and pipeline reliability.

What separates actionable CI metrics from vanity metrics?

Actionable metrics directly connect to developer experience and business outcomes. Build duration affects daily workflow, while deployment frequency impacts feature delivery speed. Vanity metrics look impressive, but don't guide decisions. Focus on measurements that help teams optimize specific bottlenecks rather than general health scores.

Which CI metrics have the biggest impact on developer productivity?

Build duration, queue time, and flaky test rate directly affect how fast developers get feedback. While coverage monitoring dominates current practices, build health and time-to-fix-broken-builds offer the highest productivity gains. Focus on metrics that reduce context switching and waiting.

How do CI metrics help reduce infrastructure costs without sacrificing quality?

Cost per build and cache hit rate reveal optimization opportunities that maintain quality while cutting spend. Intelligent caching and optimized test selection can significantly reduce both build times and infrastructure costs. Running only relevant tests instead of entire suites cuts waste without compromising coverage.

What's the most effective way to start tracking CI metrics across different tools?

Begin with pipeline metadata standardization using consistent tags for service, team, and cost center. Most CI platforms provide basic metrics through built-in dashboards. Start with DORA metrics, then add build-specific measurements as your monitoring matures.

How often should teams review CI metrics and take action?

Daily monitoring of build success rates and queue times enables immediate issue response. Weekly reviews of build duration trends and monthly cost analysis drive strategic improvements. Automated alerts for threshold breaches prevent small problems from becoming productivity killers.

Unit Testing in CI/CD: How to Accelerate Builds Without Sacrificing Quality

Modern unit testing in CI/CD can help teams avoid slow builds by using smart strategies. Choosing the right tests, running them in parallel, and using intelligent caching all help teams get faster feedback while keeping code quality high.

Platforms like Harness CI use AI-powered test intelligence to reduce test cycles by up to 80%, showing what’s possible with the right tools. This guide shares practical ways to speed up builds and improve code quality, from basic ideas to advanced techniques that also lower costs.

What Is a Unit Test?

Knowing what counts as a unit test is key to building software delivery pipelines that work.

The Smallest Testable Component

A unit test looks at a single part of your code, such as a function, class method, or a small group of related components. The main point is to test one behavior at a time. Unit tests differ from integration tests because they exercise your code's logic in isolation rather than the way components work together. That isolation makes it easier to pinpoint the cause when a test fails.

Isolation Drives Speed and Reliability

Unit tests should only check code that you wrote and not things like databases, file systems, or network calls. This separation makes tests quick and dependable. Tests that don't rely on outside services run in milliseconds and give the same results no matter where they are run, like on your laptop or in a CI pipeline.

Foundation for CI/CD Quality Gates

Unit tests are one of the most important parts of continuous integration in CI/CD pipelines because they show problems right away after code changes. Because they are so fast, developers can run them many times a minute while they are coding. This makes feedback loops very tight, which makes it easier to find bugs and stops them from getting to later stages of the pipeline.

Unit Testing Strategies: Designing for Speed and Reliability

Teams that run full test suites on every commit catch problems early by focusing on three things: making tests fast, choosing the right tests, and keeping tests organized. Good unit testing helps developers stay productive and keeps builds running quickly.

Deterministic Tests for Every Commit

Unit tests should finish in seconds, not minutes, so that every commit gets fast feedback. Google's engineering practices say that tests need to be "fast and reliable to give engineers immediate feedback on whether a change has broken expected behavior." To keep tests from being affected by outside factors, use mocks, stubs, and in-memory databases. Keep commit builds to less than ten minutes, and unit tests should be the basis of this quick feedback loop.
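As an illustration of isolating a test with a mock, the sketch below replaces an external exchange-rate service with `unittest.mock.Mock` from the standard library. The function `order_total_in_currency` and the `get_rate` method are hypothetical names invented for this example.

```python
from unittest.mock import Mock


def order_total_in_currency(amount_usd, currency, rate_client):
    """Convert a USD amount using an injected exchange-rate client."""
    rate = rate_client.get_rate("USD", currency)
    return round(amount_usd * rate, 2)


def test_order_total_uses_rate_from_client():
    # Arrange: a mock stands in for the network-backed rate service.
    rate_client = Mock()
    rate_client.get_rate.return_value = 0.5

    # Act
    total = order_total_in_currency(150, "EUR", rate_client)

    # Assert: deterministic result, and the dependency was called as expected.
    assert total == 75.0
    rate_client.get_rate.assert_called_once_with("USD", "EUR")


test_order_total_uses_rate_from_client()
```

Passing the client in as a parameter is what makes the swap possible. The test never touches the network, so it gives the same result on a laptop and in CI.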

Intelligent Test Selection

As projects get bigger, running all tests on every commit can slow teams down. Test Impact Analysis looks at coverage data to figure out which tests really check the code that has been changed. AI-powered test selection chooses the right tests for you, so you don't have to guess or sort them by hand.
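The core idea of Test Impact Analysis can be sketched as a lookup from tests to the source files they cover. This is a deliberately simplified illustration, not how any particular product implements it; the coverage map and file names are sample data.

```python
def select_tests(coverage_map, changed_files):
    """Return the tests whose covered files overlap with the changed files."""
    changed = set(changed_files)
    return sorted(
        test for test, files in coverage_map.items() if changed & set(files)
    )


# Hypothetical coverage data: test name -> source files it exercises.
coverage_map = {
    "test_checkout": ["cart.py", "pricing.py"],
    "test_login": ["auth.py"],
    "test_discounts": ["pricing.py"],
}

selected = select_tests(coverage_map, changed_files=["pricing.py"])
print(selected)
```

A real implementation also has to handle new tests, changes to shared configuration, and stale coverage data, usually by falling back to running the full suite.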

Parallelization and Caching

To get the most out of your infrastructure, use selective execution and run tests at the same time. Divide test suites into equal-sized groups and run them on different machines simultaneously. Smart caching of dependencies, build files, and test results helps you avoid doing the same work over and over. When used together, these methods cut down on build time a lot while keeping coverage high.
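One common way to divide a suite into balanced groups is a greedy split by historical duration: assign the slowest remaining test to the least-loaded worker. The sketch below shows that approach with sample timings; it is one option among several, and the test names are hypothetical.

```python
import heapq


def split_tests(durations, workers):
    """Greedily assign tests (slowest first) to the worker with the least total time."""
    heap = [(0.0, index) for index in range(workers)]  # (total seconds, worker index)
    heapq.heapify(heap)
    groups = [[] for _ in range(workers)]
    by_slowest = sorted(durations.items(), key=lambda item: item[1], reverse=True)
    for name, seconds in by_slowest:
        load, index = heapq.heappop(heap)
        groups[index].append(name)
        heapq.heappush(heap, (load + seconds, index))
    return groups


# Hypothetical historical durations in seconds.
durations = {"a": 8, "b": 5, "c": 4, "d": 3}
groups = split_tests(durations, workers=2)
print(groups)
```

Balancing by duration rather than by test count keeps one slow group from dictating the wall-clock time of the whole run.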

Standardized Organization for Scale

Using consistent names, tags, and organization for tests helps teams track performance and keep quality high as they grow. Set clear rules for test types (like unit, integration, or smoke) and use names that show what each test checks. Analytics dashboards can spot flaky tests, slow tests, and common failures. This helps teams improve test suites and keep things running smoothly without slowing down developers.

Unit Test Example: From Code to Assertion

A good unit test uses the Arrange-Act-Assert pattern. For example, you might test a function that calculates order totals with discounts:

    def test_apply_discount_to_order_total():
        # Arrange: set up test data
        order = Order(items=[Item(price=100), Item(price=50)])
        discount = PercentageDiscount(10)

        # Act: execute the function under test
        final_total = order.apply_discount(discount)

        # Assert: verify the expected outcome
        assert final_total == 135  # 150 minus the 10% discount

In the Arrange phase, you set up the objects and data you need. In the Act phase, you call the method you want to test. In the Assert phase, you check if the result is what you expected.

Testing Edge Cases

Real-world code needs to handle more than just the usual cases. Your tests should also check edge cases and errors:

    import pytest

    def test_apply_discount_with_empty_cart_returns_zero():
        order = Order(items=[])
        discount = PercentageDiscount(10)
        assert order.apply_discount(discount) == 0

    def test_apply_discount_rejects_negative_percentage():
        order = Order(items=[Item(price=100)])
        # Constructing an invalid discount should fail before it can be applied
        with pytest.raises(ValueError):
            PercentageDiscount(-5)

Notice the naming style: test_apply_discount_rejects_negative_percentage clearly shows what’s being tested and what should happen. If this test fails in your CI pipeline, you’ll know right away what went wrong, without searching through logs.
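The tests above refer to `Order`, `Item`, and `PercentageDiscount` without defining them. One minimal implementation that would satisfy those tests might look like the following. It is an assumption made for illustration; the real classes in your codebase will likely carry more fields and rules.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    price: float


class PercentageDiscount:
    def __init__(self, percent: float):
        # Reject values outside 0-100 so invalid discounts fail at construction.
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.percent = percent


@dataclass
class Order:
    items: list = field(default_factory=list)

    def apply_discount(self, discount: PercentageDiscount) -> float:
        """Return the order total after applying a percentage discount."""
        subtotal = sum(item.price for item in self.items)
        return subtotal * (100 - discount.percent) / 100


order = Order(items=[Item(price=100), Item(price=50)])
print(order.apply_discount(PercentageDiscount(10)))
```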

Benefits of Unit Testing: Building Confidence and Saving Time

When teams want faster builds and fewer late-stage bugs, the benefits of unit testing are clear. Good unit tests help speed up development and keep quality high.

- Catch regressions right away: Unit tests run in seconds and find breaking changes before they get to integration or production environments.

- Allow fearless refactoring: A strong set of tests gives you the confidence to change code without adding bugs you didn't expect.

- Cut down on costly debugging: Research shows that unit tests cover a lot of ground and find bugs early when fixing them is cheapest.

- Encourage modular design: Writing code that can be tested naturally leads to better separation of concerns and a cleaner architecture.

When you use smart test execution in modern CI/CD pipelines, these benefits get even bigger.

Disadvantages of Unit Testing: Recognizing the Trade-Offs

Unit testing is valuable, but knowing its limits helps teams choose the right testing strategies. These downsides matter most when you’re trying to make CI/CD pipelines faster and more cost-effective.

- Maintenance overhead grows as automated tests expand, requiring ongoing effort to update brittle or overly granular tests.

- False confidence occurs when high unit test coverage hides integration problems and system-level failures.

- Slow execution times can bottleneck CI pipelines when test collections take hours instead of minutes to complete.

- Resource allocation shifts developer time from feature work to test maintenance and debugging flaky tests.

- Coverage gaps appear in areas like GUI components, external dependencies, and complex state interactions.

Research shows that automatically generated tests can be harder to understand and maintain. Studies also show that statement coverage doesn’t always mean better bug detection.

Industry surveys show that many organizations have trouble with slow test execution and unclear ROI for unit testing. Smart teams solve these problems by choosing the right tests, using smart caching, and working with modern CI platforms that make testing faster and more reliable.

How Do Developers Use Unit Tests in Real Workflows?

Developers use unit tests in three main ways that affect build speed and code quality. These practices turn testing into a tool that catches problems early and saves time on debugging.

Test-Driven Development and Rapid Feedback Loops

In test-driven development (TDD), developers write unit tests before they write the code under test, which improves the design and cuts down on debugging. According to research, TDD finds 84% of new bugs, while traditional testing only finds 62%. This method gives you feedback right away, so failing tests help you decide what to do next.

Regression Prevention and Bug Validation

Unit tests are like automated guards that catch bugs when code changes. Developers write a test that reproduces a reported bug, apply the fix, and then rerun the test to confirm the fix works. Automated tools now generate test cases from issue reports, and they succeed 30.4% of the time at producing a test that fails for the exact problem that was reported. To stop bugs that have already been fixed from coming back, teams run these regression tests in CI pipelines.

Strategic Focus on Business Logic and Public APIs

Good developer testing doesn't look at infrastructure or glue code; it looks at business logic, edge cases, and public interfaces. Testing public methods and properties is best; private details that change often should be left out. Test doubles help developers keep business logic separate from systems outside of their control, which makes tests more reliable. Integration and system tests are better for checking how parts work together, especially when it comes to things like database connections and full workflows.

Unit Testing Best Practices: Maximizing Value, Minimizing Pain

Slow, unreliable tests can slow down CI and hurt productivity, while also raising costs. The following proven strategies help teams check code quickly and cut both build times and cloud expenses.

- Write fast, isolated tests that run in milliseconds and avoid external dependencies like databases or APIs.

- Use descriptive test names that clearly explain the behavior being tested, not implementation details.

- Run only relevant tests using selective execution to cut cycle times by up to 80%.

- Monitor test health with failure analytics to identify flaky or slow tests before they impact productivity.

- Refactor tests regularly alongside production code to prevent technical debt and maintain suite reliability.

Types of Unit Testing: Manual vs. Automated

Choosing between manual and automated unit testing directly affects how fast and reliable your pipeline is.

Manual Unit Testing: Flexibility with Limitations

Manual unit testing means developers write and run tests by hand, usually early in development or when checking tricky edge cases that need human judgment. This works for old systems where automation is hard or when you need to understand complex behavior. But manual testing can’t be repeated easily and doesn’t scale well as projects grow.

Automated Unit Testing: Speed and Consistency at Scale

Automated testing transforms test execution into fast, repeatable processes that integrate seamlessly with modern development workflows. Modern platforms leverage AI-powered optimization to run only relevant tests, cutting cycle times significantly while maintaining comprehensive coverage.

Why High-Velocity Teams Prioritize Automation

Fast-moving teams use automated unit testing to keep up speed and quality. Manual testing is still useful for exploring and handling complex cases, but automation handles the repetitive checks that make deployments reliable and regular.

Difference Between Unit Testing and Other Types of Testing

Knowing the difference between unit, integration, and other test types helps teams build faster and more reliable CI/CD pipelines. Each type has its own purpose and trade-offs in speed, cost, and confidence.

Unit Tests: Fast and Isolated Validation

Unit tests are the most important part of your testing plan. They test single functions, methods, or classes without using any outside systems. You can run thousands of unit tests in just a few minutes on a good machine. This keeps you from having problems with databases or networks and gives you the quickest feedback in your pipeline.

Integration Tests: Validating Component Interactions

Integration testing makes sure that the different parts of your system work together. There are two main types: narrow tests that use test doubles to check specific interactions (like testing an API client with a mock service) and broad tests that use real services (like checking your payment flow with real payment processors). Broad integration tests use real infrastructure to find problems that unit tests might miss.

End-to-End Tests: Complete User Journey Validation

End-to-end tests sit at the top of the testing pyramid. They mimic the full range of user tasks in your app. These tests are the most realistic, but they take a long time to run and their failures are hard to diagnose. A bug that a unit test catches in seconds may take days to surface and trace through end-to-end tests. This approach works, but it can be brittle.

The Test Pyramid: Balancing Speed and Coverage

The best testing strategy uses a pyramid: many small, fast unit tests at the bottom, some integration tests in the middle, and just a few end-to-end tests at the top.

Workflow of Unit Testing in CI/CD Pipelines

Modern development teams use a unit testing workflow that balances speed and quality. Knowing this process helps teams spot slow spots and find ways to speed up builds while keeping code reliable.

The Standard Development Cycle

Before pushing changes, developers write code and run unit tests on their own machines to find bugs early. They then push the code to version control so that CI pipelines can take over. This step-by-step process helps developers stay productive by finding problems when they are easiest to fix.

Automated CI Pipeline Execution

Once code is in the pipeline, automation tools run unit tests on every commit and give feedback right away. If a test fails, the pipeline stops deployment and lets developers know right away. This automation stops bad code from getting into production. Research shows this method can cut critical defects by 40% and speed up deployments.

Accelerating the Workflow

Modern CI platforms use Test Intelligence to only run the tests that are affected by code changes in order to speed up this process. Parallel testing runs test groups in different environments at the same time. Smart caching saves dependencies and build files so you don't have to do the same work over and over. These steps can help keep coverage high while lowering the cost of infrastructure.
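The idea of running only the affected tests can be illustrated with a small sketch. This is not how Harness Test Intelligence is implemented. It assumes a hypothetical, pre-computed map from each test file to the source files it exercises, and the names TestDependencies and selectAffectedTests are invented for illustration.

```typescript
// Hypothetical map: test file -> source files it exercises.
type TestDependencies = Record<string, string[]>;

// Return the tests whose dependencies overlap with the changed files.
function selectAffectedTests(
  deps: TestDependencies,
  changedFiles: string[],
): string[] {
  const changed = new Set(changedFiles);
  return Object.entries(deps)
    .filter(([, sources]) => sources.some((source) => changed.has(source)))
    .map(([test]) => test)
    .sort();
}

const deps: TestDependencies = {
  "cart.test.ts": ["cart.ts", "pricing.ts"],
  "pricing.test.ts": ["pricing.ts"],
  "auth.test.ts": ["auth.ts"],
};

// Only pricing.ts changed, so auth.test.ts can be skipped.
const affected = selectAffectedTests(deps, ["pricing.ts"]);
console.log(affected);
```

A real system would derive the dependency map from coverage data or a call graph rather than maintain it by hand, and would fall back to the full suite when the map is missing or stale.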

Results Analysis and Continuous Improvement

Teams analyze test results through dashboards that track failure rates, execution times, and coverage trends. Analytics platforms surface patterns like flaky tests or slow-running suites that need attention. This data drives decisions about test prioritization, infrastructure scaling, and process improvements. Regular analysis ensures the unit testing approach continues to deliver value as codebases grow and evolve.
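As one example of the kind of pattern such analysis can surface, here is a minimal sketch of flaky-test detection. It assumes a simple definition (a test is flaky if the same commit produced both a pass and a failure) and a hypothetical TestRun record; real analytics platforms use richer signals.

```typescript
// One recorded outcome of a test on a given commit (hypothetical shape).
interface TestRun {
  test: string;
  commit: string;
  passed: boolean;
}

// A test counts as flaky here if one commit produced both a pass and a fail.
function findFlakyTests(runs: TestRun[]): string[] {
  const outcomes = new Map<string, Set<boolean>>();
  for (const run of runs) {
    const key = `${run.test}@${run.commit}`;
    const seen = outcomes.get(key) ?? new Set<boolean>();
    seen.add(run.passed);
    outcomes.set(key, seen);
  }
  const flaky = new Set<string>();
  for (const [key, seen] of outcomes) {
    if (seen.size === 2) flaky.add(key.slice(0, key.lastIndexOf("@")));
  }
  return [...flaky].sort();
}

const history: TestRun[] = [
  { test: "checkout", commit: "a1", passed: true },
  { test: "checkout", commit: "a1", passed: false }, // same commit, different result
  { test: "login", commit: "a1", passed: false },
  { test: "login", commit: "a1", passed: false },    // consistently failing, not flaky
  { test: "search", commit: "a1", passed: true },
  { test: "search", commit: "b2", passed: false },   // different commits, a real regression
];

const flakyTests = findFlakyTests(history);
console.log(flakyTests);
```

Separating flaky tests from consistent failures matters because the fixes differ: the first needs test isolation work, the second needs a code fix.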

Unit Testing Techniques: Tools for Reliable, Maintainable Tests

Using the right unit testing techniques can turn unreliable tests into a reliable way to speed up development. These proven methods help teams trust their code and keep CI pipelines running smoothly:

- Replace slow external dependencies with controllable test doubles that run consistently.

- Generate hundreds of test cases automatically to find edge cases you'd never write manually.

- Run identical test logic against multiple inputs to expand coverage without extra maintenance.

- Capture complex output snapshots to catch unintended changes in data structures.

- Verify behavior through isolated components that focus tests on your actual business logic.

These methods work together to build test suites that catch real bugs and stay easy to maintain as your codebase grows.

Isolation Through Test Doubles

As we've talked about with CI/CD workflows, the first step to good unit testing is to separate things. This means you should test your code without using outside systems that might be slow or not work at all. Dependency injection is helpful because it lets you use test doubles instead of real dependencies when you run tests.

It is easier for developers to choose the right test double if they know the differences between them. Fakes are simple working versions, such as in-memory databases. Stubs return set data that can be used to test queries. Mocks keep track of what happens so you can see if commands work as they should.

This method makes sure that tests are always quick and accurate, no matter when you run them. Tests run 60% faster and there are a lot fewer flaky failures that slow down development when teams use good isolation.
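The three kinds of test double can be sketched side by side. This is an illustrative example with hand-written doubles; sendWelcome, UserStore, and Mailer are hypothetical names, and a mocking library would normally generate the stub and mock for you.

```typescript
// Dependencies expressed as interfaces so tests can swap implementations.
interface UserStore {
  findEmail(userId: string): string | undefined;
}
interface Mailer {
  send(to: string, body: string): void;
}

// Code under test: dependencies are injected rather than created inside.
function sendWelcome(userId: string, store: UserStore, mailer: Mailer): boolean {
  const email = store.findEmail(userId);
  if (email === undefined) return false;
  mailer.send(email, "Welcome!");
  return true;
}

// Fake: a simple working implementation backed by an in-memory map.
class InMemoryUserStore implements UserStore {
  private users = new Map<string, string>();
  add(userId: string, email: string): void {
    this.users.set(userId, email);
  }
  findEmail(userId: string): string | undefined {
    return this.users.get(userId);
  }
}

// Stub: returns fixed data regardless of input.
const stubStore: UserStore = { findEmail: () => "stub@example.com" };

// Mock: records calls so the test can verify that the command was issued.
class RecordingMailer implements Mailer {
  calls: Array<{ to: string; body: string }> = [];
  send(to: string, body: string): void {
    this.calls.push({ to, body });
  }
}

const fakeStore = new InMemoryUserStore();
fakeStore.add("u1", "ada@example.com");
const mailer = new RecordingMailer();

const sentToKnownUser = sendWelcome("u1", fakeStore, mailer);     // true
const sentToUnknownUser = sendWelcome("nobody", fakeStore, mailer); // false, no mail sent
const sentViaStub = sendWelcome("anything", stubStore, mailer);    // true
```

None of these tests touch a database or a mail server, which is what keeps them fast and repeatable.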

Expanding Coverage with Smart Generation

Beyond isolation, teams need ways to increase test coverage without adding more work. Property-based testing lets you state rules that should always hold, and it automatically generates hundreds of test cases from them. This method is great for finding edge cases and boundary conditions that manual tests might miss.

Parameterized testing gives you similar benefits, but you have more control over the inputs. You don't have to write extra code to run the same test with different data. Tools like xUnit's Theory and InlineData make this possible. This helps find more bugs and makes it easier to keep track of your test suite.
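The same idea can be sketched in TypeScript as a table of cases driving one piece of test logic. The function isLeapYear and the case table are illustrative only.

```typescript
// Function under test.
function isLeapYear(year: number): boolean {
  return (year % 4 === 0 && year % 100 !== 0) || year % 400 === 0;
}

// One row per case: the same test logic runs against every row.
const leapYearCases: Array<{ year: number; expected: boolean }> = [
  { year: 2024, expected: true },
  { year: 2023, expected: false },
  { year: 1900, expected: false }, // divisible by 100 but not by 400
  { year: 2000, expected: true },  // divisible by 400
];

// Collect every failure instead of stopping at the first one.
function runLeapYearCases(): string[] {
  const failures: string[] = [];
  for (const { year, expected } of leapYearCases) {
    const actual = isLeapYear(year);
    if (actual !== expected) {
      failures.push(`isLeapYear(${year}) returned ${actual}, expected ${expected}`);
    }
  }
  return failures;
}

const leapYearFailures = runLeapYearCases();
console.log(leapYearFailures.length === 0 ? "all cases passed" : leapYearFailures);
```

Adding a new case is a one-line change to the table. In Jest, test.each plays a similar role to xUnit's Theory and InlineData.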

Both methods work best when you choose the right tests to run. You only run the tests you need, so platforms that know which tests matter for each code change give you full coverage without slowing things down.
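Property-based testing can be sketched without a library. The example below is a hand-rolled illustration, not a production harness: it uses a small seeded random generator so failures are reproducible, and it checks one property, namely that decoding a run-length-encoded string returns the original. All names are hypothetical.

```typescript
// Small deterministic PRNG (mulberry32-style) so any failure can be replayed.
function makeRandom(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Code under test: run-length encoding as [character, count] pairs.
type Run = [string, number];

function encode(input: string): Run[] {
  const runs: Run[] = [];
  for (const ch of input) {
    const last = runs[runs.length - 1];
    if (last !== undefined && last[0] === ch) last[1] += 1;
    else runs.push([ch, 1]);
  }
  return runs;
}

function decode(runs: Run[]): string {
  return runs.map(([ch, count]) => ch.repeat(count)).join("");
}

// Generator: random strings over a tiny alphabet so repeated characters are common.
function randomString(random: () => number): string {
  const length = Math.floor(random() * 20);
  let out = "";
  for (let i = 0; i < length; i++) out += "abc".charAt(Math.floor(random() * 3));
  return out;
}

// Property: decode(encode(s)) === s. Returns a counterexample, or null if none was found.
function checkRoundTrip(iterations: number, seed: number): string | null {
  const random = makeRandom(seed);
  for (let i = 0; i < iterations; i++) {
    const input = randomString(random);
    if (decode(encode(input)) !== input) return input;
  }
  return null;
}

const counterexample = checkRoundTrip(500, 42);
console.log(counterexample === null ? "property held" : `failed for "${counterexample}"`);
```

Libraries such as fast-check add richer generators and shrinking, which reduces a failing input to the smallest case that still fails.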

Verifying Complex Outputs

The last step is to test complicated data, such as JSON responses or code that was made. Golden tests and snapshot testing make things easier by saving the expected output as reference files, so you don't have to do complicated checks.

If your code’s output changes, the test fails and shows what’s different. This makes it easy to spot mistakes, and you can approve real changes by updating the snapshot. This method works well for testing APIs, config generators, or any code that creates structured output.
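A minimal sketch of that compare-or-record loop follows. To stay self-contained it keeps snapshots in an in-memory map rather than in files on disk, which is an assumption of this example; matchSnapshot and buildConfig are hypothetical names.

```typescript
// In-memory stand-in for snapshot files on disk.
const snapshots = new Map<string, string>();

type SnapshotResult =
  | { status: "written" }
  | { status: "match" }
  | { status: "mismatch"; expected: string; actual: string };

// Compare serialized output with the stored snapshot.
// The snapshot is written on first run, or when update is true (approving a change).
function matchSnapshot(name: string, value: unknown, update = false): SnapshotResult {
  const actual = JSON.stringify(value, null, 2);
  const expected = snapshots.get(name);
  if (expected === undefined || update) {
    snapshots.set(name, actual);
    return { status: "written" };
  }
  return expected === actual
    ? { status: "match" }
    : { status: "mismatch", expected, actual };
}

// Code under test: produces structured output.
function buildConfig(env: string): { env: string; replicas: number; debug: boolean } {
  return { env, replicas: env === "prod" ? 3 : 1, debug: env !== "prod" };
}

const firstRun = matchSnapshot("deploy-config", buildConfig("prod"));      // records the snapshot
const secondRun = matchSnapshot("deploy-config", buildConfig("prod"));     // unchanged output matches
const changedRun = matchSnapshot("deploy-config", buildConfig("staging")); // changed output is reported
console.log(firstRun.status, secondRun.status, changedRun.status);
```

On a mismatch, the result carries both versions so a test runner can print a diff. Jest's built-in snapshot support follows the same record, compare, and approve cycle.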

Teams that use full automated testing frameworks see code coverage go up by 32.8% and catch 74.2% more bugs per build. Golden tests help by making it easier to check complex cases that would otherwise need manual testing.

The main thing is to balance thoroughness with easy maintenance. Golden tests should check real behavior, not details that change often. When you get this balance right, you’ll spend less time fixing bugs and more time building features.

Unit Testing Tools: Frameworks That Power Modern Teams

Picking the right unit testing tools helps your team write tests efficiently, instead of wasting time on flaky tests or slow builds. The best frameworks work well with your language and fit smoothly into your CI/CD process.

- JUnit and TestNG dominate Java environments, with TestNG offering advanced features like parallel execution and seamless pipeline integration.

- pytest leads Python testing environments with powerful fixtures and minimal boilerplate, making it ideal for teams prioritizing developer experience.

- Jest provides zero-configuration testing for JavaScript/TypeScript projects, with built-in mocking and snapshot capabilities.

- RSpec delivers behavior-driven development for Ruby teams, emphasizing readable test specifications.

Modern teams use these frameworks along with CI platforms that offer analytics and automation. This mix of good tools and smart processes turns testing from a bottleneck into a productivity boost.

Transform Your Development Velocity Today

Smart unit testing can turn CI/CD from a bottleneck into an advantage. When tests are fast and reliable, developers spend less time waiting and more time releasing code. Harness Continuous Integration uses Test Intelligence, automated caching, and isolated build environments to speed up feedback without losing quality.

Want to speed up your team? Explore Harness CI and see what's possible.

Powering Harness Executions Page: Inside Our Flexible Filters Component

Filtering data is at the heart of developer productivity. Whether you’re looking for failed builds, debugging a service or analysing deployment patterns, the ability to quickly slice and dice execution data is critical.

At Harness, users across CI, CD and other modules rely on filtering to navigate complex execution data by status, time range, triggers, services and much more. While our legacy filtering worked, it had major pain points — hidden drawers, inconsistent behaviour and lost state on refresh — that slowed both developers and users.

This blog dives into how we built a new Filters component system in React: a reusable, type-safe and feature-rich framework that powers the filtering experience on the Execution Listing page (and beyond).

The Starting Point: Challenges with Our Legacy Filters

Our old implementation revealed several weaknesses as Harness scaled:

- Poor Discoverability and UX: Filters were hidden in a side panel, disrupting workflow and making applied filters non-glanceable. Users didn’t get feedback until the filter was applied/saved.

- Inconsistency Across Modules: Custom logic in modules like CI and CD led to confusing behavioural differences.

- High Developer Overhead: Adding new filters was cumbersome, requiring edits to multiple files with brittle boilerplate.

These problems shaped our success criteria: discoverability, smooth UX, consistent behaviour, reusable design and decoupled components.

The Evolution of Filters: A Design Journey

Building a truly reusable and powerful filtering system required exploration and iteration. Our journey involved several key stages and learning from the pitfalls of each:

Iteration 1: React Components (Conditional Rendering)

We shifted to React functional components but kept logic centralised in the FilterFramework. Each filter was conditionally rendered based on the visibleFilters array. The framework fetched filter options and passed them down as props.

    COMPONENT FilterFramework:
      STATE activeFilters = {}
      STATE visibleFilters = []
      STATE filterOptions = {}

      ON visibleFilters CHANGE:
        FOR EACH filter IN visibleFilters:
          IF filterOptions[filter] NOT EXISTS:
            options = FETCH filterData(filter)
            filterOptions[filter] = options

      ON activeFilters CHANGE:
        makeAPICall(activeFilters)

      RENDER:
        <AllFilters setVisibleFilters={setVisibleFilters} />
        IF 'services' IN visibleFilters:
          <DropdownFilter
            name="Services"
            options={filterOptions.services}
            onAdd={updateActiveFilters}
            onRemove={removeFromVisible}
          />
        IF 'environments' IN visibleFilters:
          <DropdownFilter ... />

Pitfalls: Adding new filters required changes in multiple places, creating a maintenance nightmare and poor developer experience. The framework had minimal control over filter implementation, lacked proper abstraction and scattered filter logic across the codebase, making it neither “stupid-proof” nor scalable.

Iteration 2: React.cloneElement Pattern

Improved the previous approach by accepting filters as children and using React.cloneElement to inject callbacks (onAdd, onRemove) from the parent framework. This gave developers a cleaner API to add filters.

    React.Children.map(children, child => {
      // Render only the filters the user has made visible
      if (!visibleFilters.includes(child.props.filterKey)) return null;
      // Inject the framework callbacks into each filter element
      return React.cloneElement(child, {
        onAdd: (label, value) => {
          activeFilters[child.props.filterKey].push({ label, value });
        },
        onRemove: () => {
          delete activeFilters[child.props.filterKey];
        }
      });
    });

Pitfalls: React.cloneElement added overhead on frequent re-renders, and the React documentation lists it as a legacy API that can make code fragile. The approach tightly coupled filters to the framework’s callback signature, made prop flow implicit and difficult to debug, and created type safety issues, since TypeScript struggles with dynamically injected props.

Final Solution: Context API

The winning design uses the React Context API to provide filter state and actions to child components. Individual filters access setValue and removeFilter via the useFiltersContext() hook. This decouples filters from the framework while maintaining control.

    COMPONENT Filters({ children, onChange }):
      STATE filtersMap = {}    // { search: { value, query, state } }
      STATE filtersOrder = []  // ['search', 'status']

      FUNCTION updateFilter(key, newValue):
        serialized = parser.serialize(newValue)  // Type → String
        filtersMap[key] = { value: newValue, query: serialized }
        updateURL(serialized)
        onChange(allValues)

      ON URL_CHANGE:
        parsed = parser.parse(urlString)  // String → Type
        filtersMap[key] = { value: parsed, query: urlString }

      RENDER:
        <Context.Provider value={{ updateFilter, filtersMap }}>
          {children}
        </Context.Provider>
    END COMPONENT

Benefits: This solution eliminated the performance overhead of cloneElement, decoupled filters from framework internals and made it easy to add new filters without touching framework code. The Context API provides clear data flow that’s easy to debug and test, with type safety through TypeScript.

Inversion of Control (IoC)

The Context API in React unlocks something truly powerful — Inversion of Control (IoC). This design principle is about delegating control to a framework instead of managing every detail yourself. It’s often summed up by the Hollywood Principle: “Don’t call us, we’ll call you.”

In React, this translates to building flexible components that let the consumer decide what to render, while the component itself handles how and when it happens.

Our Filters framework applies this principle: you don’t have to manage when to update state or synchronise the URL. You simply define your filter components and the framework orchestrates the rest — ensuring seamless, predictable updates without manual intervention.

How Filters Inverts Control

Our Filters framework demonstrates Inversion of Control in three key ways.

- Logic via Props: The framework doesn’t know how to save filters or fetch data — the parent injects those functions. The framework decides when to call them, but the parent defines what they do.

- Content via Children (Composition): The parent decides which filters to render.

- Actions via Callbacks: The framework triggers callbacks when users type, select or apply filters, but it’s your code that decides what happens next — fetch data, update cache or send analytics.

The result? A single, reusable Filters component that works across pipelines, services, deployments or repositories. By separating UI logic from business logic, we gain flexibility, testability and cleaner architecture — the true power of Inversion of Control.

    COMPONENT DemoPage:
      STATE filterValues
      FilterHandler = createFilters()

      FUNCTION applyFilters(data, filters):
        result = data
        IF filters.onlyActive == true:
          result = result WHERE item.status == "Active"
        RETURN result

      filteredData = applyFilters(SAMPLE_DATA, filterValues)

      RENDER:
        <RouterContextProvider>
          <FilterHandler onChange = (updatedFilters) => SET filterValues = updatedFilters>
            // Dropdown to add filters dynamically
            <FilterHandler.Dropdown>
              RENDER FilterDropdownMenu with available filters
            </FilterHandler.Dropdown>

            // Active filters section
            <FilterHandler.Content>
              <FilterHandler.Component parser = booleanParser filterKey = "onlyActive">
                RENDER CustomActiveOnlyFilter
              </FilterHandler.Component>
            </FilterHandler.Content>
          </FilterHandler>

          RENDER DemoTable(filteredData)
        </RouterContextProvider>
    END COMPONENT

The URL Problem

One of the key technical challenges in building a filtering system is URL synchronization. Browsers only understand strings, yet our applications deal with rich data types — dates, booleans, arrays and more. Without a structured solution, each component would need to manually convert these values, leading to repetitive, error-prone code.

The solution is our parser interface, a lightweight abstraction with just two methods: parse and serialize.

- parse converts a URL string into the type your app needs.

- serialize does the opposite, turning that typed value back into a string for the URL.

This bidirectional system runs automatically — parsing when filters load from the URL and serialising when users update filters.
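The same two-method shape extends to richer types such as dates and arrays. The sketch below assumes a Parser<T> interface shaped as described above; dateParser and stringArrayParser are hypothetical names chosen for illustration and are not taken from the Harness codebase.

```typescript
// Assumed shape of the parser interface: two methods, parse and serialize.
interface Parser<T> {
  parse: (value: string) => T;
  serialize: (value: T) => string;
}

// Dates travel through the URL as ISO 8601 strings.
const dateParser: Parser<Date> = {
  parse: (value) => new Date(value),
  serialize: (value) => value.toISOString(),
};

// String arrays travel as a comma-separated list. Each item is percent-encoded
// so that commas inside an item survive the round trip.
// Limitation: an array holding one empty string serializes the same as an empty array.
const stringArrayParser: Parser<string[]> = {
  parse: (value) =>
    value === "" ? [] : value.split(",").map((item) => decodeURIComponent(item)),
  serialize: (value) => value.map((item) => encodeURIComponent(item)).join(","),
};

const encodedStatuses = stringArrayParser.serialize(["failed", "a,b"]);
const decodedStatuses = stringArrayParser.parse(encodedStatuses);
console.log(encodedStatuses, decodedStatuses);
```

Keeping each parser this small means a round trip (serialize, then parse) is easy to unit test on its own, independent of any component.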

    const booleanParser: Parser<boolean> = {
      parse: (value: string) => value === 'true',   // "true" → true
      serialize: (value: boolean) => String(value)  // true → "true"
    }

FiltersMap: The State Hub

At the heart of our framework lies the FiltersMap — a single, centralized object that holds the complete state of all active filters. It acts as the bridge between your React components and the browser, keeping UI state and URL state perfectly in sync.

Each entry in the FiltersMap contains three key fields:

- Value — the parsed, typed data your components actually use (e.g. Date objects, arrays, booleans).

- Query — the serialized string representation that’s written to the URL.

- State — the filter’s lifecycle status: hidden, visible or actively filtering.

You might ask — why store both the typed value and its string form? The answer is performance and reliability. If we only stored the URL string, every re-render would require re-parsing, which quickly becomes inefficient for complex filters like multi-selects. By storing both, we parse only once — when the value changes — and reuse the typed version afterward. This ensures type safety, faster URL synchronization and a clean separation between UI behavior and URL representation. The result is a system that’s predictable, scalable, and easy to maintain.

    interface FilterType<T = any> {
      value?: T           // The actual filter value
      query?: string      // Serialized string for URL
      state: FilterStatus // VISIBLE | FILTER_APPLIED | HIDDEN
    }

The Journey of a Filter Value

Let’s trace how a filter value moves through the system — from user interaction to URL synchronization.

It all starts when a user interacts with a filter component — for example, selecting a date. This triggers an onChange event with a typed value, such as a Date object. Before updating the state, the parser’s serialize method converts that typed value into a URL-safe string.

The framework then updates the FiltersMap with both versions:

- the typed value under value, and

- the serialized string under query.

From here, two things happen simultaneously:

- The onChange callback fires, passing typed values back to the parent component, so the app can immediately fetch data or update visualizations.

- The URL updates using the serialized query string, keeping the browser’s address bar in sync and making the current filter state instantly shareable or bookmarkable.

The reverse flow works just as seamlessly. When the URL changes — say, the user clicks the back button — the parser’s parse method converts the string back into a typed value, updates the FiltersMap and triggers a re-render of the UI.

All of this happens within milliseconds, enabling a smooth, bidirectional synchronization between the application state and the URL — a crucial piece of what makes the Filters framework feel so effortless.
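The two directions of that journey can be sketched outside React. The class below is a framework-free approximation for a single filter, not the actual Harness implementation: FilterState and its method names are hypothetical, and the query string is passed in and returned as plain text instead of being read from the router.

```typescript
interface Parser<T> {
  parse: (value: string) => T;
  serialize: (value: T) => string;
}

// Mirrors the article's entry shape: typed value plus its serialized query string.
interface FilterEntry<T> {
  value: T;
  query: string;
}

class FilterState<T> {
  private entry: FilterEntry<T> | undefined;
  private readonly key: string;
  private readonly parser: Parser<T>;
  private readonly onChange: (value: T) => void;

  constructor(key: string, parser: Parser<T>, onChange: (value: T) => void) {
    this.key = key;
    this.parser = parser;
    this.onChange = onChange;
  }

  // User interaction: serialize once, store both forms, notify the parent,
  // and return the updated query string.
  update(value: T, currentSearch: string): string {
    const query = this.parser.serialize(value);
    this.entry = { value, query };
    const params = new URLSearchParams(currentSearch);
    params.set(this.key, query);
    this.onChange(value);
    return params.toString();
  }

  // URL change (for example, the back button): re-parse only if the string changed.
  syncFromUrl(search: string): T | undefined {
    const query = new URLSearchParams(search).get(this.key);
    if (query === null) {
      this.entry = undefined;
      return undefined;
    }
    if (this.entry === undefined || this.entry.query !== query) {
      this.entry = { value: this.parser.parse(query), query };
    }
    return this.entry.value;
  }
}

const booleanParser: Parser<boolean> = {
  parse: (value) => value === "true",
  serialize: (value) => String(value),
};

const received: boolean[] = [];
const onlyActive = new FilterState("onlyActive", booleanParser, (v) => received.push(v));

// Other query parameters are preserved; only this filter's key is written.
const search = onlyActive.update(true, "status=failed");
console.log(search);
```

Comparing the incoming string with the stored query before parsing is what lets the typed value be reused between renders instead of being rebuilt each time.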

Conclusion

For teams tackling similar challenges — complex UI state management, URL synchronization and reusable component design — this architecture offers a practical blueprint to build upon. The patterns used are not specific to Harness; they are broadly applicable to any modern frontend system that requires scalable, stateful and user-driven filtering.

The team’s core objectives — discoverability, smooth UX, consistent behavior, reusable design and decoupled elements — directly shaped every architectural decision. Through Inversion of Control, the framework manages the when and how of state updates, lifecycle events and URL synchronization, while developers define the what — business logic, API calls and filter behavior.

By treating the URL as part of the filter state, the architecture enables shareability, bookmarkability and native browser history support. The Context API serves as the control distribution layer, removing the need for prop drilling and allowing deeply nested components to seamlessly access shared logic and state.

Ultimately, Inversion of Control also paved the way for advanced capabilities such as saved filters, conditional rendering, and sticky filters — all while keeping the framework lightweight and maintainable. This approach demonstrates how clear objectives and sound architectural principles can lead to scalable, elegant solutions in complex UI systems.

Backstage Alternatives: IDP Options for Engineering Leaders

In most teams, the question is no longer "Do we need an internal developer portal?" but "Do we really want to run Backstage ourselves?"

Backstage proved the internal developer portal (IDP) pattern, and it works. It gives you a flexible framework, plugins, and a central place for services and docs. It also gives you a long-term commitment: owning a React/TypeScript application, managing plugins, chasing upgrades, and justifying a dedicated platform squad to keep it all usable.

That's why Backstage alternatives like Harness IDP and managed Backstage services exist. It's also why so many platform teams take a long, careful look at them before making a decision.

Why Teams Start Searching For Backstage Alternatives

Backstage was created by Spotify to fix real problems with platform engineering, such as problems with onboarding, scattered documentation, unclear ownership, and not having clear paths for new services. There was a clear goal when Spotify made Backstage open source in 2020. The main value props are good: a software catalog, templates for new services, and a place to put all the tools you need to work together.

The problem is not the concept. It is the operating model. Backstage is a framework, not a product. If you adopt it, you are committing to:

- Running and scaling the portal as a first-class internal product.

- Owning plugin selection, security reviews, and lifecycle management.

- Maintaining a consistent UX as more teams and use cases pile in.

Once Backstage moves beyond a proof of concept, it takes a lot of engineering work to keep it reliable, secure, and up to date. Many companies don't realize how much work it takes. At the same time, platforms like Harness are showing that you don't have to build everything yourself to get good results from a portal.

When you look at how Harness connects IDP to CI, CD, IaC Management, and AI-powered workflows, you start to see an alternate model: treat the portal as a product you adopt, then spend platform engineering energy on standards, golden paths, and self-service workflows instead of plumbing.

The Three Real Paths: Build, Buy, Or Go Hybrid

When you strip away branding, almost every Backstage alternative fits one of three patterns. The differences are in how much you own and how much you offload:

| | Build (Self-Hosted Backstage) | Hybrid (Managed Backstage) | Buy (Commercial IDP) |
|---|---|---|---|
| You own | Everything: UI, plugins, infra, roadmap | Customization, plugin choices, catalog design | Standards, golden paths, workflows |
| Vendor owns | Nothing | Hosting, upgrades, security patches | Platform, upgrades, governance tooling, support |
| Engineering investment | High (2–5+ dedicated engineers) | Medium (1–2 engineers for customization) | Low (configuration, not code) |
| Time to value | Months | Weeks to months | Weeks |
| Flexibility | Unlimited | High, within Backstage conventions | Moderate, within vendor abstractions |
| Governance & RBAC | Build it yourself | Build or plugin-based | Built-in |
| Best for | Large orgs wanting full control | Teams standardized on Backstage who want less ops | Teams prioritizing speed, governance, and actionability |

1. Build: Self-Hosted Backstage Or Fully DIY Portal

What This Actually Means

You fork or deploy OSS Backstage, install the plugins you need, and host it yourself. Or you build your own internal portal from scratch. Either way, you now own:

- The UI and UX.

- The plugin ecosystem and compatibility matrix.

- Security, upgrades, and infra.

- Roadmapping and feature decisions.

Backstage gives you the most flexibility because you can add your own custom plugins, model your internal world however you want, and connect it to any tool. If you're willing to put a lot of money into it, that freedom is very powerful.

Where It Breaks Down

In practice, that freedom has a price:

- You need a dedicated team (often several engineers) to keep the portal healthy as adoption grows.

- You own every design decision and every piece of technical debt, forever.

- Plugin sprawl becomes real, especially when different teams install different components for similar problems.

- Scaling governance, RBAC, and standards enforcement almost always requires custom code.

This path can still work. If you run a very large organization, want to make the portal a core product, have strong React/TypeScript and platform skills, and genuinely need to customize it however you want, building on Backstage is a good idea. Just remember that you are not choosing a tool; you are staffing a long-term project.

2. Hybrid: Managed Backstage

What This Actually Means

Managed Backstage providers run and host Backstage for you. You still get the framework and everything that goes with it, but you don't have to fix Kubernetes manifests at 2 a.m. or investigate upstream patch releases.

Vendor responsibilities typically include:

- Running the control plane and handling infra.

- Coordinating upgrades and security fixes.

- Creating a curated library of high-value plugins.

You get "Backstage without the server babysitting."

Where The Trade-Offs Show Up

You also inherit Backstage's structural limits:

- The data model and catalog schema still look like Backstage.

- UI and interaction patterns follow Backstage's rules, which may not fit every team's mental model.

- Deeply customized plugins or data models still require serious engineering work.

Hybrid works well if you have already standardized on Backstage concepts, want to keep the ecosystem, and simply refuse to run your own instance. If you're just starting out with IDPs and are still looking into things like golden paths, self-service workflows, and platform-managed scorecards, it might be helpful to compare hybrid Backstage to commercial IDPs that were made to be products from the start.

3. Buy: Commercial IDPs

What This Actually Means

Commercial IDPs approach the space from the opposite angle. You do not start with a framework, you start with a product. You get a portal that ships with:

- A software catalog.

- Ownership and scorecards.

- Self-service workflows.

- RBAC and governance tools.

The main point that sets them apart is how well that portal is connected to the systems that your developers use every day. Some products act as a metadata hub, bringing together information from your current tools. Harness does things differently. The IDP is built right on top of a software delivery platform that already has CI, CD, IaC Management, Feature Flags, and more.

Why Teams Go This Route

Teams that choose commercial Backstage alternatives tend to prioritize:

- Time to value in weeks, not quarters.

- Predictable total cost of ownership instead of wandering portal roadmaps.

- Built-in governance and security rather than "we'll build RBAC later."

- A real customer success partnership and roadmap, as opposed to depending on open-source momentum.

You trade some of Backstage's absolute freedom for a more focused, maintainable platform. For most organizations, that is a win.

Open Source Backstage Vs. Commercial Backstage Alternatives: Real Trade-Offs

People often think that the difference is "Backstage is free; commercial IDPs are expensive." In reality, the choice is "Where do you want to spend?"

With open source, you save on license fees but spend engineering capacity. With commercial IDPs like Harness, you do the opposite: you pay a vendor so that your engineers spend their time on platform standards rather than on the portal itself. Either way, a platform exists to serve the teams that build on it. Building or buying only changes who does the hard work.

This is how it works in practice:

| Dimension | Open-Source Backstage | Commercial IDP (e.g., Harness) |
|---|---|---|
| Upfront cost | Free (no license fees) | Subscription or usage-based pricing |
| Engineering staffing | 2–5+ engineers dedicated at scale | Minimal: vendor handles core platform |
| Customization freedom | Unlimited: you own the code | Flexible within vendor abstractions |
| UX consistency | Drifts as teams extend the portal | Controlled by product design |
| AI/automation depth | Add-on or custom build | Native, grounded in delivery data |
| Vendor lock-in risk | Low (open source) | Medium (tied to platform ecosystem) |
| Long-term TCO (3–5 years) | High (hidden in headcount) | Predictable (visible in contract) |

Backstage is a solid choice if you explicitly want to own design, UX, and technical debt. Just be honest about how much that will cost over the next three to five years.

Commercial IDPs like Harness come with pre-made catalogs, scorecards, workflows, and governance that encode the best ways to do things. In short, they are ready to use right away. You get faster rollout of golden paths, self-service workflows, and environment management, as well as predictable roadmaps and vendor support.

The real question is what you want your platform team to do: shipping features in your portal framework, or defining and evolving the standards that drive better software delivery.

Where Commercial IDPs Fit Among Backstage Alternatives

When compared to other Backstage options, Harness IDP is best understood as a platform-based choice rather than a separate portal. It runs on Backstage where it makes sense (for example, to use the plugin ecosystem), but it is packaged as a curated product that sits on top of the Harness Software Delivery Platform as a whole.

A few design principles stand out:

- Start from a product, not a bare framework. Backstage is intentionally a framework. Harness IDP is shipped as a product. Teams can start using the software right away because it already has a software catalog, scorecards, self-service workflows, RBAC, and policy-as-code. You extend and shape it, but you do not have to assemble the basics before anyone can use it.

- Make governance a first-class concern. Harness bakes environment-aware RBAC, policy-as-code (OPA), approvals, freeze windows, audit trails, and standards enforcement into the platform. Instead of adding custom plugins later, governance and security are built in from the start.

- Prioritize actionability over passive visibility. Harness IDP does not stop at showing data. Because it runs directly over Harness CI, CD, IaC Management, Feature Flags, and related capabilities, it can drive workflows: spinning up new services from golden paths, managing environments, shutting down ephemeral resources, and wiring in repeatable self-service runbooks. The result is a portal that behaves more like an operational control plane.

- Use AI where it can safely take action. The Harness Knowledge Agent is based on real delivery data, such as services, pipelines, environments, and scorecards. It can answer questions about who owns what and what happened in the past. It can also suggest or start safe actions under governance controls. That is not the same as AI features that only give a brief overview of catalog entries.

When you think about Backstage alternatives in terms of "How much of this work do we want to own?" and "Should our portal be a UI or a control plane?", Harness naturally fits into the group that sees the IDP as part of a connected delivery platform rather than as a separate piece of infrastructure.

Migration Realities: Moving Off Backstage Is Not A Free Undo Button

A lot of teams say, "We'll start with Backstage, and if it gets too hard, we'll move to something else." That sounds safe on paper. In production, moving from Backstage gets harder over time.

Common points where things go wrong include:

- Custom plugins and extensions: One of Backstage's best features is its plugin ecosystem. It is also what locks teams in. Over time, you build up a lot of custom plugins, scaffolder actions, and UI panels that are closely linked to your internal systems. Moving those to a different portal often means checking for compatibility, refactoring, and sometimes rewriting them completely.

- Catalog complexity: Backstage catalogs tend to grow into hundreds or thousands of catalog-info.yaml files, custom entity kinds, and annotations. Moving this to a commercial IDP means putting that structure into the new system's data model while keeping ownership, relationships, and rules for governance. Trust in the new portal is directly affected by an incomplete migration here.

- Golden path and scaffolder differences: Your existing scaffolder templates are wired into specific CI/CD tools and habits. Moving them to Harness IDP usually means changing the templates so that they run Harness pipelines, Harness environments, and IaC workflows instead of jobs from outside. That refactor is usually worth it, but it is still a lot of work.

- Developer UX and "who moved my cheese?": Developers get used to Backstage's interaction patterns and custom dashboards. Changing to a new IDP always causes problems with adoption. The only way to avoid a revolt is to run portals at the same time and slowly roll out new golden paths.

- Parallel system complexity: Running Backstage next to a new portal uses up a lot of platform bandwidth and makes things confusing for users if timelines aren't clear. Commercial vendors like Harness can help with this by providing migration tools and hands-on help, but you still need to plan for a migration window, not just flipping a switch.

The point isn't "never choose Backstage." The point is that if you do, you should think of it as a strategic choice, not an experiment you can easily undo in a year.

How To Evaluate Backstage Alternatives With A Clear Head

Whether you are comparing Backstage alone, Backstage in a managed form, or commercial platforms like Harness, use a lens that goes beyond feature checklists. These seven questions will help you cut through the noise.

- Time to first value

  - Can you deliver a useful portal (catalog plus a couple of golden paths) in weeks?

  - Who owns upgrades, patches, and production reliability?

- Total cost of ownership

  - How many engineers will this realistically consume over 3 years?

  - Is that time spent on differentiated work or reinvention?

- Governance and security maturity

  - Do you get RBAC, policy-as-code, approvals, and audit trails out of the box?

  - Can you express environment-aware rules without writing custom code for every edge case?

- Data model and extensibility

  - How hard is it to model services, infra, teams, and dependencies in a way that reflects reality?

  - Can you evolve the model as your architecture and org change?

- Automation and actionability

  - Does the portal only aggregate data, or can it drive workflows like service creation, environment provisioning, and deployment rollbacks?

  - How directly does it connect to your CI/CD, IaC, and incident tooling?

- AI and "agentic" workflows

  - Is AI just summarizing what you already see on dashboards, or can it actually update environments, run pipelines, and enforce policies safely?

  - How well grounded is that AI in your real delivery platform versus a generic data lake?

- Exit strategy and lock-in

  - If you have to move in three to five years, how portable are your catalogs, templates, and automation?

  - Are you comfortable tying your IDP to a broader platform (like Harness) to gain deeper integration and efficiency?

If a solution cannot give you concrete answers here, it is not the right Backstage alternative for you.

Why Harness IDP Belongs On Your Shortlist

Choosing among Backstage alternatives comes down to one question: what kind of work do you want your platform team to own?

Open source Backstage gives you maximum flexibility and maximum responsibility. Managed Backstage reduces ops burden but keeps you within Backstage's conventions. Commercial IDPs like Harness narrow the surface area you maintain and connect your portal directly to CI/CD, environments, and governance.

If you want fast time to value, built-in governance, and a portal that acts rather than just displays, connect with Harness.

Architecting Trust: The Blueprint for a "Golden Standard" Software Supply Chain

We’ve all seen it happen. A DevOps initiative starts with high energy, but two years later, you’re left with a sprawl of "fragile agile" pipelines. Every team has built their own bespoke scripts, security checks are inconsistent (or non-existent), and maintaining the system feels like playing whack-a-mole.

This is where the industry is shifting from simple DevOps execution to Platform Engineering.

The goal of a modern platform team isn't to be a help desk that writes YAML files for developers. The goal is to architect a "Golden Path"—a standardized, pre-vetted route to production that is actually easier to use than the alternative. It reduces the cognitive load for developers while ensuring that organizational governance isn't just a policy document, but a reality baked into every commit.

In this post, I want to walk through the architecture of a Golden Standard Pipeline. We’re going to look beyond simple task automation and explore how to weave Governance, Security, and Supply Chain integrity into a unified workflow that stands the test of time.

The Architectural Blueprint

A Golden Standard Pipeline isn’t defined by the tools you use (Harness, GitLab, GitHub Actions) but by its layers of validation. It’s not enough to simply "build and deploy" anymore. We need to architect a system that establishes trust at every single stage.

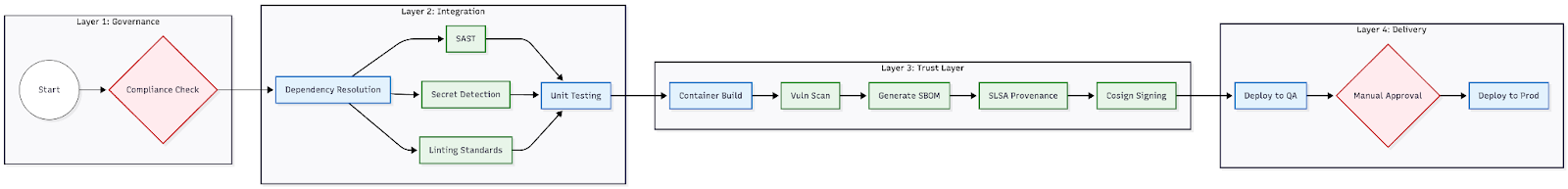

I like to break this architecture down into four distinct domains:

- Governance Domain: checking if we should run this pipeline before we even start.

- Integration Domain (The Inner Loop): getting fast feedback to developers.

- Trust Domain (Supply Chain): creating proof that the software is safe.

- Delivery Domain (The Outer Loop): getting it to production reliably.

Visualizing the Flow

Layer 1: Governance as the First Gate

The Principle: Don't process what you can't approve.

In traditional pipelines, we often see compliance checks shoehorned in right before production deployment. This is painful. There is nothing worse than waiting 20 minutes for a build and test cycle, only to be told you can't deploy because you used a non-compliant base image.

In a Golden Standard architecture, we shift governance to Step Zero.

By implementing Policy as Code (using frameworks like OPA) at the very start of execution, we solve a few problems:

- Drift Prevention: Pipelines simply won't run if they’ve been hacked to bypass standard steps.

- Resource Efficiency: We don't waste expensive compute building artifacts that are doomed to fail compliance.

- Security Baseline: Unauthorized workflows are stopped dead before they can access secrets or internal networks.
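To make the "Step Zero" idea concrete, here is a minimal sketch of a pre-execution policy gate. It is written in plain Python as a stand-in for a real policy engine such as OPA, and everything in it (the `PipelineRequest` shape, the required step names, the approved image list) is a hypothetical assumption for illustration, not a Harness or OPA schema.

```python
from dataclasses import dataclass

# Hypothetical policy data; in practice this would live outside the pipeline definition.
REQUIRED_STEPS = {"secret_scan", "sast", "sbom"}
APPROVED_BASE_IMAGES = {
    "registry.internal/base/python:3.12",
    "registry.internal/base/node:20",
}


@dataclass
class PipelineRequest:
    name: str
    steps: list[str]
    base_image: str


def evaluate_policy(request: PipelineRequest) -> list[str]:
    """Return a list of violations; an empty list means the pipeline may start."""
    violations = []
    missing = REQUIRED_STEPS - set(request.steps)
    if missing:
        violations.append(f"missing required steps: {sorted(missing)}")
    if request.base_image not in APPROVED_BASE_IMAGES:
        violations.append(f"base image not approved: {request.base_image}")
    return violations
```

A runner would call `evaluate_policy` before allocating any build compute and refuse to start when the returned list is non-empty, which is what keeps a non-compliant base image from costing a 20-minute build.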

Layer 2: Parallelized Security Orchestration

The Principle: Security must speed the developer up, not slow them down.

The "Inner Loop" is sacred ground. This is where developers live. If your security scanning adds friction or takes too long, developers will find a way to bypass it. To solve this, we rely on Parallel Orchestration.

Instead of running checks linearly (Lint → then SAST → then Secrets), we group "Code Smells," "Linting," and "Security Scanners" to run simultaneously.

This gives us a huge architectural advantage:

- Reduced Latency: We squash the wall-clock time by running I/O-heavy checks in parallel.

- Cost Optimization: We only trigger expensive Unit Test runners after the cheap, fast security checks pass. There is zero value in running a heavy test suite on a codebase that contains a hardcoded API key.
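The orchestration pattern above can be sketched in a few lines. This is an illustrative sketch, not how any particular CI product schedules stages: the check names and the `run_inner_loop` helper are assumptions, and each check is modeled as a plain callable that returns `True` on success.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Check = Callable[[], bool]


def run_inner_loop(fast_checks: dict[str, Check], unit_tests: Check) -> dict[str, bool]:
    """Run the cheap checks concurrently; run unit tests only if all of them pass."""
    with ThreadPoolExecutor(max_workers=len(fast_checks) or 1) as pool:
        futures = {name: pool.submit(check) for name, check in fast_checks.items()}
        results = {name: future.result() for name, future in futures.items()}
    # Gate the expensive stage on the cheap ones.
    if all(results.values()):
        results["unit_tests"] = unit_tests()
    return results
```

Threads suit this case because linters and scanners mostly wait on subprocesses or network I/O. If a secrets scan fails, the result simply has no `unit_tests` entry, so the expensive runner was never started.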

Layer 3: The Trust Layer (Supply Chain Security)

The Principle: Prove the origin and ingredients of your software.

This is the biggest evolution we've seen in CI/CD recently. We need to stop treating the build artifact (Docker image/Binary) as a black box. Instead, we generate three critical pieces of metadata that travel with the artifact:

- SBOM (Software Bill of Materials): Think of this as the ingredients list on a food packet. It’s a machine-readable inventory of every library inside your container. When the next Log4j happens, you don't need to scan the world; you just query your inventory.

- SLSA Provenance: This is an unforgeable ID card for your build. It proves where the build happened, when it happened, and what inputs were used. This is your defense against tampering attacks (like SolarWinds).

- Cryptographic Signing: Finally, we sign the artifact using a private key (via Cosign). This acts like a digital wax seal; if the image is modified by even one bit after the build, the seal breaks, and your cluster will refuse to run it.
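To illustrate the "query your inventory" idea behind SBOMs, here is a small sketch. The inventory is a hypothetical in-memory structure with placeholder image references and digests. A real SBOM would be an SPDX or CycloneDX document with far more fields, and real advisories use version ranges rather than an exact-match set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    version: str


# Hypothetical inventory: image reference -> components listed in its SBOM.
SBOM_INVENTORY = {
    "payments-api@sha256:aaa": [Component("log4j-core", "2.14.1"), Component("jackson-databind", "2.15.2")],
    "search-api@sha256:bbb": [Component("log4j-core", "2.17.1")],
    "web-frontend@sha256:ccc": [Component("react", "18.2.0")],
}


def images_containing(inventory, name, affected_versions):
    """Return images whose SBOM lists the named component at an affected version."""
    return sorted(
        image
        for image, components in inventory.items()
        if any(c.name == name and c.version in affected_versions for c in components)
    )
```

With this sample data, `images_containing(SBOM_INVENTORY, "log4j-core", {"2.14.1"})` returns only the `payments-api` image, without rescanning a single container.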

Layer 4: Immutable Delivery

The Principle: Build once, deploy everywhere.

A common anti-pattern I see is rebuilding artifacts for different environments—building a "QA image" and then rebuilding a "Prod image" later. This introduces risk.

In the Golden Standard, the artifact generated and signed in Layer 3 is the exact same immutable object deployed to QA and Production. We use a Rolling Deployment strategy with an Approval Gate between environments. The production stage explicitly references the digest of the artifact verified in QA, ensuring zero drift.

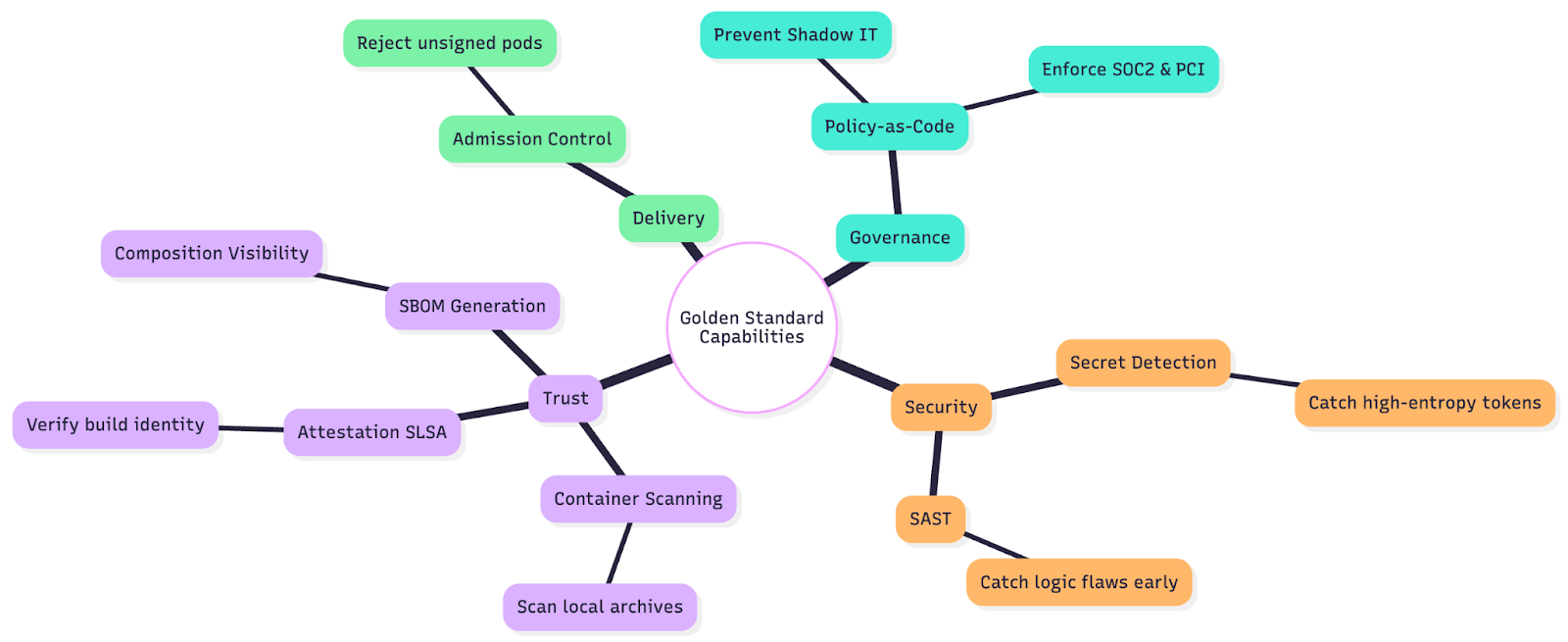
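The digest pinning described in Layer 4 can be sketched as follows. As a simplification, this hashes raw artifact bytes. Container registries compute digests over the image manifest, and the function names here are hypothetical.

```python
import hashlib


def artifact_digest(content: bytes) -> str:
    """Content-addressed identifier for an artifact."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def can_promote(candidate: bytes, verified_digest: str) -> bool:
    """Allow promotion only if the candidate is byte-for-byte the verified artifact."""
    return artifact_digest(candidate) == verified_digest
```

Because the production stage compares against the digest recorded in QA rather than a mutable tag, a rebuilt or modified image fails the check even if it carries the same tag.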

The Capability Map

To successfully build this, your platform needs to provide specific capabilities mapped to these layers.

Future-Proofing Your Platform

Tools change. Jenkins, Harness, GitHub Actions—they all evolve. But the Architecture remains constant. If you adhere to these principles, you future-proof your organization:

- Decouple Policy from Pipeline: Store your policies separately from your pipeline YAML. This lets you update security rules globally without needing a massive migration project to edit hundreds of pipelines.

- Standardize Interfaces: Use standard formats for your metadata (SPDX for SBOMs, in-toto for attestations). This prevents vendor lock-in and ensures your data is portable.

- Invest in "Shift Left" Culture: The best architecture in the world fails if developers see it as a hurdle. Position the Golden Pipeline as a product that solves developer pain points (like setting up environments or managing credentials) while silently enforcing security in the background.

Conclusion

Adopting a Golden Standard architecture transforms the CI/CD pipeline from a simple task runner into a governance engine. By abstracting security and compliance into these reusable layers, Platform Engineering teams can guarantee that every microservice—regardless of the language or framework—adheres to the organization's highest standards of trust.

Kubernetes Cost Traps: Fixing What Your Scheduler Won’t

Kubernetes is a powerhouse of modern infrastructure — elastic, resilient, and beautifully abstracted. It lets you scale with ease, roll out deployments seamlessly, and sleep at night knowing your apps are self-healing.

But if you’re not careful, it can also silently drain your cloud budget.

In most teams, cost comes as an afterthought — only noticed when the monthly cloud bill starts to resemble a phone number. The truth is simple:

Kubernetes isn’t expensive by default.

Inefficient scheduling decisions are.

These inefficiencies don’t come from massive architectural mistakes. It’s the small, hidden inefficiencies — configuration-level choices — that pile up into significant cloud waste.

In this post, let’s unpack the hidden costs lurking in your Kubernetes clusters and how you can take control using smarter scheduling, bin packing, right-sizing, and better node selection.

The Hidden Costs Nobody Talks About

Over-Provisioned Requests and Limits

Most teams play it safe by over-provisioning resource requests, sometimes doubling or tripling what the workload needs. The result is CPU and memory that sit idle but still cost money, because the scheduler reserves them.

Your cluster is “full” — but your nodes are barely sweating.
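A rough way to put a number on this is to price the gap between what a workload requests and what it uses. The sketch below assumes a flat, hypothetical per-core hourly price and a 730-hour month. Real pricing depends on instance type and commitment discounts.

```python
def idle_cost(requested_cores: float, used_cores: float,
              price_per_core_hour: float, hours: float = 730) -> float:
    """Monthly cost of CPU that is reserved by requests but not used."""
    idle = max(requested_cores - used_cores, 0.0)
    return idle * price_per_core_hour * hours
```

For example, a workload requesting 4 cores while using 1, at an assumed 0.04 per core-hour, reserves 3 idle cores, which comes to about 87.60 per month for a single replica.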

Low Bin-Packing Efficiency

Kubernetes’s default scheduler optimizes for availability and spreading, not cost. As a result, workloads are often spread across more nodes than necessary. This leads to fragmented resource usage, like:

- A node with 2 free cores that no pod can “fit” into

- Nodes stuck at 5–10% utilization because of a single oversized pod

- Non-evictable pods holding on to almost empty nodes
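To see how much placement order matters, here is a sketch of first-fit decreasing, a classic bin-packing heuristic. It is not what the Kubernetes scheduler does. It considers only CPU and ignores memory, affinity, and disruption constraints, and it is meant purely to show how few nodes a set of requests could occupy.

```python
def first_fit_decreasing(pod_cpu_requests: list[float], node_capacity: float) -> list[list[float]]:
    """Greedily pack pods onto nodes, largest request first; returns pods per node."""
    nodes: list[list[float]] = []
    for request in sorted(pod_cpu_requests, reverse=True):
        if request > node_capacity:
            raise ValueError(f"pod requesting {request} cores cannot fit on any node")
        for node in nodes:
            if sum(node) + request <= node_capacity:
                node.append(request)
                break
        else:
            # No existing node had room, so open a new one.
            nodes.append([request])
    return nodes
```

Six pods requesting 3, 1, 2, 2, 1, and 3 cores fit on three fully used 4-core nodes with this heuristic, whereas a spreading strategy could easily leave the same pods scattered over more nodes with stranded capacity on each.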

Wrong Node Choices (Intel vs AMD, Spot vs On-Demand)

Choosing the wrong instance type can be surprisingly expensive:

- AMD-based nodes are 20–30% cheaper in many clouds

- Spot instances can cut costs by up to 70% for stateless workloads

- ARM (e.g., Graviton in AWS) can offer up to 40% savings

But without node affinity, taints, or custom scheduling, workloads might not land where they should.
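As a back-of-the-envelope comparison, the sketch below applies the upper-bound savings quoted above to a hypothetical baseline price. The percentages are best cases from the list, and the node count and hourly price are assumptions. Actual discounts vary by cloud, region, and instance family.

```python
# Upper-bound savings quoted in the text, relative to an on-demand Intel baseline.
MAX_SAVINGS = {"on_demand_intel": 0.0, "amd": 0.30, "arm": 0.40, "spot": 0.70}


def monthly_cost(node_count: int, baseline_hourly_price: float,
                 option: str, hours: float = 730) -> float:
    """Best-case monthly cost if the option delivers its quoted maximum saving."""
    return node_count * baseline_hourly_price * hours * (1 - MAX_SAVINGS[option])
```

For ten nodes at an assumed 0.20 per hour, the baseline is 1,460 per month and the best-case spot figure is about 438, which is why steering eligible stateless workloads onto the right pool is worth the scheduling effort.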

Zombie Workloads and Forgotten Jobs

Old cron jobs, demo deployments, and failed jobs that never got cleaned up — they all add up. Worse, they might be on expensive nodes or keeping the autoscaler from scaling down.

Node Pool Fragmentation

Mixing too many node types across zones, architectures, or families without careful coordination leads to bin-packing failure. A pod that fits only one node type can prevent the scale-down of others, leading to stranded resources.

Always-On Clusters and Idle Infrastructure