Featured Blogs

Today, we're thrilled to announce a significant leap forward in our commitment to AI-driven innovation. Harness, a leader in AI-native software delivery, is proud to introduce three powerful AI agents designed to transform how teams create, test, and deliver software.

Since introducing Continuous Verification in 2018, Harness has been at the forefront of leveraging AI and machine learning to enhance software delivery processes. Our latest announcement reinforces our position as an industry pioneer, offering a comprehensive suite of AI-powered tools that address critical challenges across the entire software delivery lifecycle (SDLC).

Our vision is a multi-agent architecture embedded directly into the fabric of the Harness platform. We’re building a powerful library of ‘assistants’ designed to make software delivery faster, more efficient, and more enjoyable for developers. These AI-driven agents will work seamlessly within our platform, handling everything from automating complex tasks to providing real-time insights, freeing developers to focus on what they do best: creating innovative software.

Let's explore the capabilities of these new AI agents and see how they will reshape the future of software delivery.

AI QA Assistant: End-to-End Test Automation

The Harness AI QA Assistant is a game-changer in the world of software testing. This generative AI agent is purpose-built to simplify end-to-end automation and accelerate the transition from manual to automated testing. End-to-end tests have been plagued by slow authoring experiences that yield brittle tests, which need to be tended to every time the UI changes.

By harnessing the power of AI, this assistant offers a range of benefits that can dramatically improve your testing processes:

- 10x Faster Test Creation: One of the most significant advantages of the AI QA Assistant is its ability to accelerate test creation. Through a generative AI-powered, no-code platform, teams can now create high-quality tests in a fraction of the time it would take to hand-script them. This capability democratizes test creation, enabling team members across various skill levels to contribute to the testing process effectively. Harness will help teams enhance their tests as well. By proactively recommending additional checks and assertions, the assistant makes it easy to improve the depth of your testing.

- 70% Less Test Maintenance: Test maintenance has long been a thorn in the side of QA teams, consuming valuable time and resources. The AI QA Assistant addresses this pain point head-on with AI-driven test execution and self-healing capabilities. The AI understands the intent behind a test. If the UI changes, it will identify the new path to achieving the same intent. By automatically adapting tests to application changes, it minimizes the need for manual maintenance, freeing up your team to focus on more strategic tasks.

- 5x Faster Release Cycles: By eliminating manual testing bottlenecks, the AI QA Assistant paves the way for significantly faster release cycles. Integrating AI-powered automated testing into your CI/CD pipeline streamlines the entire process, allowing you to ship faster and more confidently.

Sign up today for early access to the AI QA Assistant.

AI DevOps Assistant: Better Pipelines Faster

Crafting pipelines can be challenging. You need to consider your core build and deployment activities, as well as best practices around security scans, testing, quality gates, and more. The new Harness AI DevOps Assistant will make creating great pipelines much easier.

- Create Pipelines in Seconds: Gone are the days of spending hours configuring complex pipelines. The AI DevOps Assistant allows you to create comprehensive pipelines in mere seconds. By leveraging AI to understand your application’s requirements and your teams’ preferred tools, policies, and patterns, it can generate optimized pipeline configurations rapidly, saving valuable time and reducing the potential for mistakes.

- Effortless Pipeline Modification with Natural Language: You can also use the DevOps assistant to refine existing pipelines. The AI DevOps Assistant understands natural language commands, allowing you to modify your pipelines effortlessly. Whether you need to add a new stage, adjust deployment parameters, or integrate a new tool, you can simply describe the changes you want, and the assistant will implement them accurately.

- Proactive Suggestions: The DevOps Assistant provides intelligent suggestions to optimize your pipelines. It analyzes your current pipeline configurations against Harness recommended best practices and identifies areas for improvement. These suggestions can help reduce build times, enhance reliability, and ensure your pipelines align with industry standards.

- Automatic Diagnosis and Remediation of Common Failures: Minimizing downtime is crucial. The AI DevOps Assistant excels in this area by automatically diagnosing common pipeline failures and providing immediate remediation steps. This proactive approach to problem-solving can significantly reduce the mean time to recovery (MTTR) for your pipelines, ensuring smoother, more reliable software delivery.

The introduction of the AI DevOps Assistant marks a significant milestone in our mission to simplify and streamline the software delivery process for the world’s developers. By automating complex tasks and providing intelligent insights, this capability empowers teams to focus on innovation rather than getting bogged down in the intricacies of pipeline management.

Sign up today for early access to the AI DevOps Assistant.

AI Code Assistant: Boosting Developer Productivity

The Harness AI Code Assistant accelerates developer productivity by streamlining coding processes and providing instant access to relevant information. This intelligent tool integrates seamlessly into the development workflow, offering a range of features that enhance coding efficiency and quality:

- Intelligent Code Completion: As developers write code, the AI Code Assistant recommends relevant code snippets, helping to accelerate the coding process. This feature is particularly useful for repetitive tasks or when working with unfamiliar libraries or frameworks.

- Natural Language Function Generation: Developers can describe the desired functionality using natural language, and the AI Code Assistant will generate entire functions based on that description. This capability bridges the gap between concept and implementation, allowing developers to prototype ideas or tackle complex coding challenges quickly.

- Code Refactoring and Debugging: Harness helps improve code quality by offering refactoring suggestions and helping identify and resolve bugs. This proactive approach to code improvement can lead to more robust and maintainable codebases.

- Interactive Code Explanation: An intuitive chatbot feature allows developers to ask questions about specific pieces of code and receive clear explanations that enhance understanding and facilitate knowledge sharing within teams.

- Semantic Search: When integrated with the Harness Code Repository, the AI Code Assistant enables powerful semantic search capabilities using natural language queries. This feature is particularly valuable for quickly navigating and understanding complex repositories, making it easier for new team members to onboard and for experienced developers to explore unfamiliar parts of the codebase.

- Pull Request Generation: The AI Code Assistant frees up valuable developer time by taking care of routine tasks like generating pull request comments, allowing teams to focus on high-value activities that drive innovation and product improvement.

The Harness AI Code Assistant is more than just a coding tool; it's a comprehensive solution that enhances developer productivity, improves code quality, and fosters a more efficient and collaborative development environment. The AI Code Assistant is available today for all Harness customers at no additional charge.

Embracing the AI-Powered Future of Software Delivery

Software delivery is changing fast. Generative AI has helped organizations code faster than ever. The rest of the delivery pipeline must keep up to take full advantage of these efficiencies.

These tools (the Harness AI QA Assistant, AI DevOps Assistant, and AI Code Assistant) represent more than just technological advancements. They embody a shift in how we approach software development, testing, and delivery. By automating routine tasks, providing intelligent assistance, and offering deep insights into development processes, these AI agents eliminate toil, freeing up human creativity and expertise to focus on solving complex problems and driving innovation.

As we move forward, the integration of AI into software delivery processes will become increasingly crucial for organizations looking to maintain a competitive edge. The ability to deliver high-quality software faster, more reliably, and with greater insight will be a key differentiator in the digital marketplace.

Harness is committed to leading this AI-driven transformation of the software delivery landscape. We invite you to join us on this exciting journey toward a future where AI and human expertise work in harmony to create exceptional software experiences.

Stay tuned for more updates as we continue to innovate and shape the future of software delivery. If you want to try any of these capabilities early, sign up here.

Check out the event: Revolutionizing Software Testing with AI

Check out Harness AI Code Agent

Explore more resources: 3 Ways to Optimize Software Delivery and Operational Efficiency

Recent Blogs

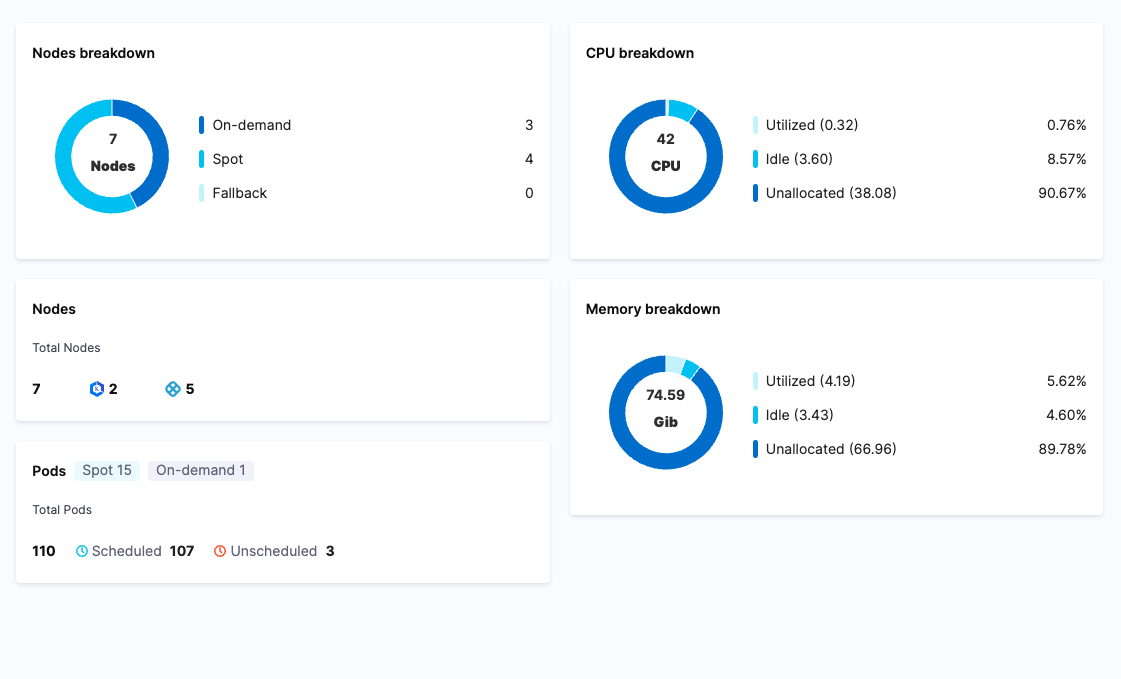

Kubernetes Cost Traps: Fixing What Your Scheduler Won’t

Kubernetes is a powerhouse of modern infrastructure — elastic, resilient, and beautifully abstracted. It lets you scale with ease, roll out deployments seamlessly, and sleep at night knowing your apps are self-healing.

But if you’re not careful, it can also silently drain your cloud budget.

In most teams, cost is an afterthought, only noticed when the monthly cloud bill starts to resemble a phone number. The truth is simple:

Kubernetes isn’t expensive by default.

Inefficient scheduling decisions are.

These inefficiencies don’t come from massive architectural mistakes. They come from small, hidden configuration-level choices that pile up into significant cloud waste.

In this post, let’s unpack the hidden costs lurking in your Kubernetes clusters and how you can take control using smarter scheduling, bin packing, right-sizing, and better node selection.

The Hidden Costs Nobody Talks About

Over-Provisioned Requests and Limits

Most teams play it safe by over-provisioning resource requests, sometimes doubling or tripling what the workload needs. The result is CPU and memory that sit idle but still cost money, because the scheduler reserves them.

Your cluster is “full” — but your nodes are barely sweating.
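Measuring this gap is straightforward once you have usage data. The minimal Python sketch below compares what each pod requests with what it actually uses; the `PodUsage` records and numbers are hypothetical, and in practice the usage figures would come from a metrics source such as Prometheus (for example, a p95 over a week).

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    name: str
    cpu_request_cores: float  # what the scheduler reserves for the pod
    cpu_used_cores: float     # observed usage (hypothetical, e.g. a p95 from metrics)


def idle_reserved_cores(pods: list[PodUsage]) -> float:
    """CPU that is reserved by requests but never actually used."""
    return sum(max(p.cpu_request_cores - p.cpu_used_cores, 0.0) for p in pods)


def request_utilization(pods: list[PodUsage]) -> float:
    """Fraction of requested CPU that is really consumed (1.0 = perfectly sized)."""
    requested = sum(p.cpu_request_cores for p in pods)
    used = sum(p.cpu_used_cores for p in pods)
    return used / requested if requested else 0.0


pods = [
    PodUsage("api", cpu_request_cores=2.0, cpu_used_cores=0.6),
    PodUsage("worker", cpu_request_cores=4.0, cpu_used_cores=1.5),
    PodUsage("cache", cpu_request_cores=1.0, cpu_used_cores=0.9),
]
print(f"idle reserved: {idle_reserved_cores(pods):.1f} cores")        # idle reserved: 4.0 cores
print(f"utilization of requests: {request_utilization(pods):.0%}")    # utilization of requests: 43%
```

Here 7 cores are reserved but only about 3 are used, so the scheduler sees a cluster that is far "fuller" than it really is.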

Low Bin-Packing Efficiency

Kubernetes’s default scheduler optimizes for availability and spreading, not cost. As a result, workloads are often spread across more nodes than necessary. This leads to fragmented resource usage, like:

- A node with 2 free cores that no pod can “fit” into

- Nodes stuck at 5–10% utilization because of a single oversized pod

- Non-evictable pods holding on to almost empty nodes

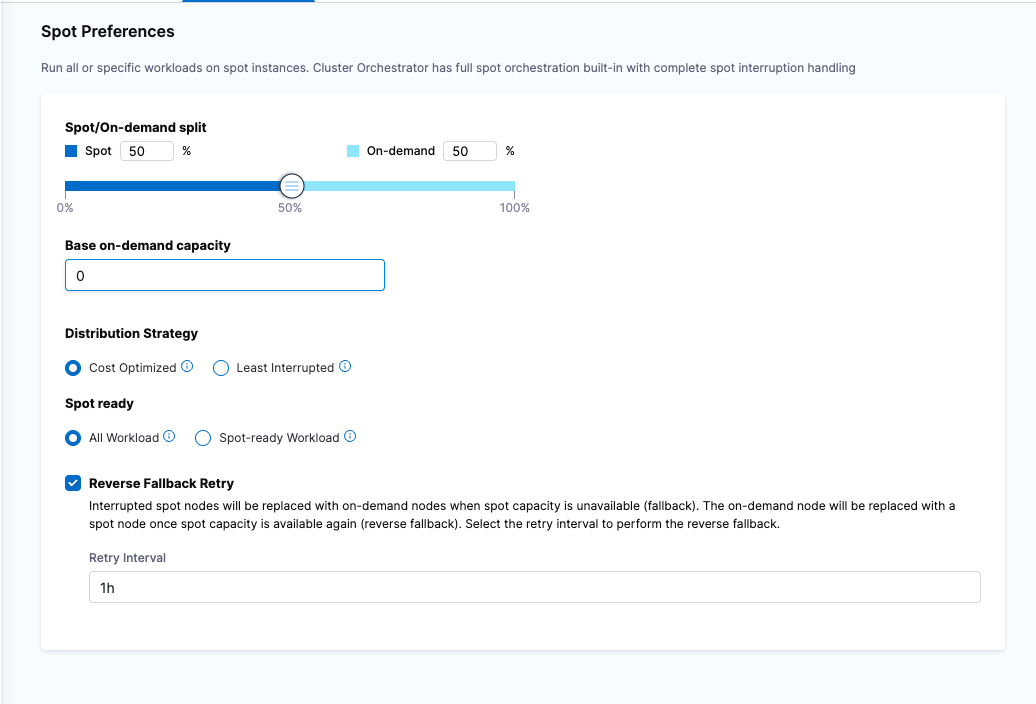
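To see why spreading costs money, here is a toy Python simulation that places the same CPU requests on identical 4-core nodes in two ways. "spread" picks the least-allocated node that fits, which roughly mirrors the default scheduler's preference (its NodeResourcesFit scoring defaults to LeastAllocated); "pack" picks the most-allocated node that fits. The pod sizes are hypothetical, and the real scheduler weighs many more signals than this.

```python
def place(requests: list[float], node_capacity: float, num_nodes: int, strategy: str) -> list[float]:
    """Place CPU requests on identical nodes and return the CPU allocated on each node.

    strategy="spread": choose the least-allocated node that fits (availability-first).
    strategy="pack":   choose the most-allocated node that fits (cost-first).
    """
    if strategy not in ("spread", "pack"):
        raise ValueError(f"unknown strategy: {strategy}")
    allocated = [0.0] * num_nodes
    for req in sorted(requests, reverse=True):  # largest pods first
        fits = [i for i in range(num_nodes) if allocated[i] + req <= node_capacity]
        if not fits:
            raise ValueError(f"a {req}-core pod does not fit on any node")
        choose = min if strategy == "spread" else max  # ties go to the lowest node index
        chosen = choose(fits, key=lambda i: allocated[i])
        allocated[chosen] += req
    return allocated


def nodes_in_use(allocated: list[float]) -> int:
    return sum(1 for a in allocated if a > 0)


requests = [2.0, 1.5, 1.0, 1.0, 0.5, 0.5]  # hypothetical pod CPU requests (cores)
spread = place(requests, node_capacity=4.0, num_nodes=4, strategy="spread")
packed = place(requests, node_capacity=4.0, num_nodes=4, strategy="pack")
print("spread:", spread, "nodes used:", nodes_in_use(spread))  # spread: [2.0, 1.5, 1.5, 1.5] nodes used: 4
print("packed:", packed, "nodes used:", nodes_in_use(packed))  # packed: [4.0, 2.5, 0.0, 0.0] nodes used: 2
```

The same 6.5 cores of requests occupy four nodes when spread and two when packed; the two empty nodes are what an autoscaler could remove.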

Wrong Node Choices (Intel vs AMD, Spot vs On-Demand)

Choosing the wrong instance type can be surprisingly expensive:

- AMD-based nodes are 20–30% cheaper in many clouds

- Spot instances can cut costs for stateless workloads by up to 70%

- ARM (e.g., Graviton in AWS) can offer up to 40% savings

But without node affinity, taints, or custom scheduling, workloads might not land where they should.
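A quick way to compare these options is to price the same capacity each way. The sketch below assumes a hypothetical on-demand price of $0.20 per node-hour for 10 always-on nodes, and applies the percentages quoted above: the low end of the AMD range, and the "up to" figures for ARM and Spot, so treat those two as best cases. Real prices vary by cloud, region, and instance family.

```python
HOURS_PER_MONTH = 730  # common approximation used in cloud pricing


def monthly_cost(nodes: int, hourly_price: float, discount: float = 0.0) -> float:
    """Monthly cost of `nodes` always-on nodes at `discount` (0.0-1.0) off the base price."""
    return nodes * hourly_price * (1.0 - discount) * HOURS_PER_MONTH


BASE_PRICE = 0.20  # hypothetical on-demand $/node-hour
options = {
    "on-demand (baseline)": 0.0,
    "AMD (low end of 20-30%)": 0.20,
    "ARM (best case, up to 40%)": 0.40,
    "Spot (best case, up to 70%)": 0.70,
}
for label, discount in options.items():
    print(f"{label:30s} ${monthly_cost(10, BASE_PRICE, discount):,.2f}")
```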

Zombie Workloads and Forgotten Jobs

Old cron jobs, demo deployments, and failed jobs that never got cleaned up — they all add up. Worse, they might be on expensive nodes or keeping the autoscaler from scaling down.
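A periodic sweep can surface these leftovers. The minimal sketch below flags finished or failed Jobs older than a retention window; the `JobRecord` shape and sample data are hypothetical, and in a real cluster the fields would come from each Job's status. For Jobs you create going forward, setting `spec.ttlSecondsAfterFinished` lets Kubernetes clean them up automatically.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class JobRecord:
    namespace: str
    name: str
    finished_at: Optional[datetime]  # None while the job is still running
    succeeded: bool                  # informational: failed jobs are flagged too


def stale_jobs(jobs: list[JobRecord], now: datetime, retention: timedelta) -> list[str]:
    """Return namespace/name of finished jobs (succeeded or failed) older than `retention`."""
    return [
        f"{j.namespace}/{j.name}"
        for j in jobs
        if j.finished_at is not None and now - j.finished_at > retention
    ]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
jobs = [
    JobRecord("batch", "nightly-report", now - timedelta(hours=3), succeeded=True),
    JobRecord("demo", "load-test", now - timedelta(days=45), succeeded=False),
    JobRecord("batch", "backfill", None, succeeded=False),  # still running: keep
]
print(stale_jobs(jobs, now, retention=timedelta(days=7)))  # ['demo/load-test']
```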

Node Pool Fragmentation

Mixing too many node types across zones, architectures, or families without careful coordination leads to bin-packing failure. A pod that fits only one node type can prevent the scale-down of others, leading to stranded resources.

Always-On Clusters and Idle Infrastructure

Many Kubernetes environments run 24/7 by default, even when there is little or no real activity. Development clusters, staging environments, and non-critical workloads often sit idle for large portions of the day, quietly accumulating cost.

This is one of the most overlooked cost traps.

Even a well-sized cluster becomes expensive if it runs continuously while doing nothing.

Because this waste doesn’t show up as obvious inefficiency — no failed pods, no over-provisioned nodes — it often goes unnoticed until teams review monthly cloud bills. By then, the cost is already sunk.

Idle infrastructure is still infrastructure you pay for.
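A back-of-the-envelope calculation shows how large this share can be. The sketch assumes a hypothetical dev cluster that is only needed 10 hours a day on weekdays and costs $3,000 a month in compute; it only covers compute that scales with runtime, since storage and control-plane charges usually continue regardless.

```python
HOURS_PER_WEEK = 24 * 7  # an always-on cluster runs all 168 hours


def idle_fraction(active_hours_per_week: float) -> float:
    """Share of an always-on cluster's runtime during which nothing needs to run."""
    return 1 - active_hours_per_week / HOURS_PER_WEEK


def idle_spend(monthly_compute_cost: float, active_hours_per_week: float) -> float:
    """Portion of the monthly compute bill that pays for idle hours."""
    return monthly_compute_cost * idle_fraction(active_hours_per_week)


active = 10 * 5  # hypothetical: 10 hours a day, Monday to Friday
print(f"idle share: {idle_fraction(active):.0%}")        # idle share: 70%
print(f"idle spend: ${idle_spend(3000, active):,.0f}")   # idle spend: $2,107
```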

Smarter Scheduling: Cost-Aware Techniques

Kubernetes doesn’t natively optimize for cost, but you can make it do so.

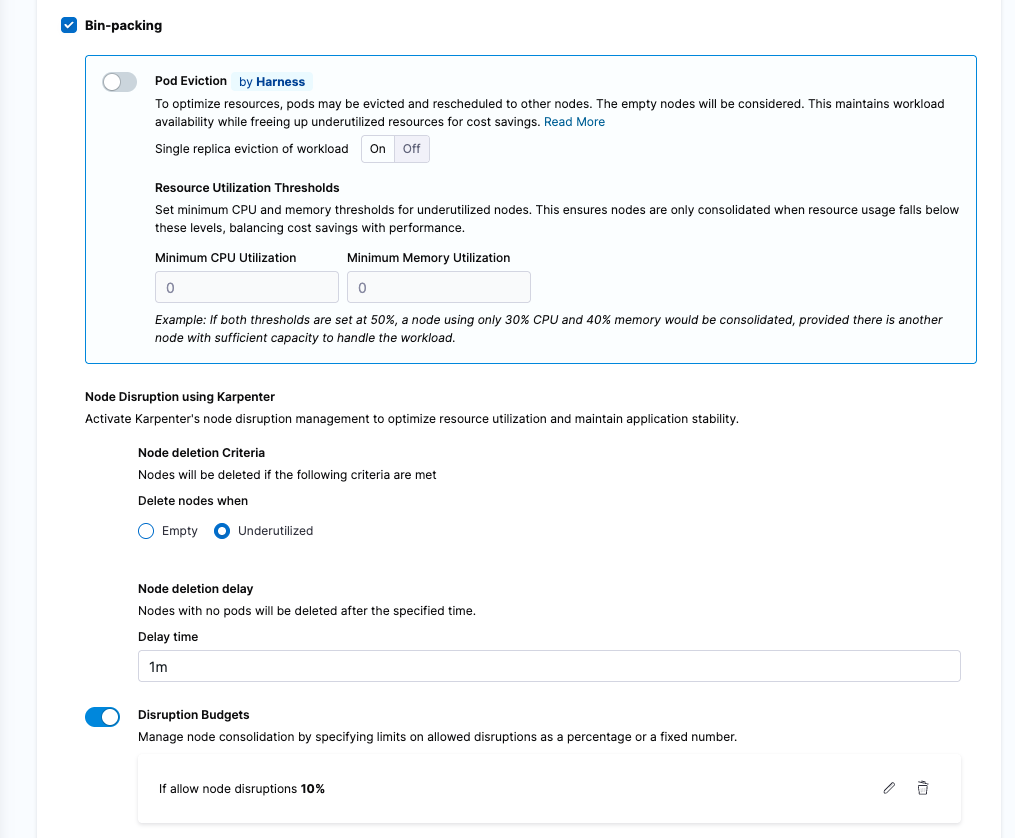

Bin Packing with Intent: Taints, Affinity, and Custom Schedulers

Encourage consolidation by:

- Using taints and tolerations to isolate high-memory or GPU workloads

- Applying pod affinity/anti-affinity to co-locate or separate workloads

- Leveraging Cluster Orchestrator with Karpenter to intelligently place pods based on actual resource availability and cost

- Using Smart Placement Strategies to place non-evictable pods efficiently

In addition to affinity and anti-affinity, teams can use topology spread constraints to control the explicit distribution of pods across zones or nodes. While they’re often used for high availability, overly strict spread requirements can work against bin-packing and prevent efficient scale-down, making them another lever that needs cost-aware tuning.
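As an illustration, the pod spec fragment below is built as a Python dict (it would be serialized to YAML or JSON for `kubectl apply`). It isolates a workload on nodes carrying a hypothetical `workload-class=gpu:NoSchedule` taint and a matching node label, and uses a soft topology spread constraint (`whenUnsatisfiable: ScheduleAnyway`) so that zone balance is preferred but never blocks placement. The label, taint, and image names are assumptions; the field names are standard Kubernetes pod spec fields.

```python
import json


def gpu_pod_spec(app: str, image: str) -> dict:
    """Pod spec fragment: isolate GPU work on tainted nodes, spread softly across zones."""
    return {
        "containers": [{"name": app, "image": image}],
        # Tolerating the taint only *allows* the pod onto GPU nodes; the
        # nodeSelector is what steers it there (label/taint names are hypothetical).
        "nodeSelector": {"workload-class": "gpu"},
        "tolerations": [
            {"key": "workload-class", "operator": "Equal", "value": "gpu", "effect": "NoSchedule"}
        ],
        # Soft spreading: prefer balance across zones, but still schedule when the
        # skew cannot be met, instead of leaving pods Pending (which a strict
        # DoNotSchedule constraint would do).
        "topologySpreadConstraints": [
            {
                "maxSkew": 1,
                "topologyKey": "topology.kubernetes.io/zone",
                "whenUnsatisfiable": "ScheduleAnyway",
                "labelSelector": {"matchLabels": {"app": app}},
            }
        ],
    }


print(json.dumps(gpu_pod_spec("trainer", "example.com/trainer:1.0"), indent=2))
```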

Scheduled Scale-Down of Idle Resources

Most teams have been there: resources run 24/7, barely get used, and keep racking up costs even when everything is idle. A proven way to avoid this is to scale those resources down, either on a schedule or when they go idle.

Harness CCM Kubernetes AutoStopping lets you scale down Kubernetes workloads, Auto Scaling Groups, VMs, and more, based either on their activity or on fixed schedules, so you stop paying for idle time.

Cluster Orchestrator can scale down an entire cluster or specific node pools on a schedule when they are not needed.
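The decision a schedule-based scaler automates is simple. The minimal sketch below assumes a hypothetical uptime window of 08:00 to 20:00, Monday to Friday, and works on naive local time; it is not the AutoStopping or Cluster Orchestrator API, and a real scheduler must pin a time zone.

```python
from datetime import datetime, time

UPTIME_DAYS = {0, 1, 2, 3, 4}  # Monday=0 ... Friday=4 (hypothetical window)
UPTIME_START, UPTIME_END = time(8, 0), time(20, 0)


def desired_replicas(now: datetime, normal_replicas: int) -> int:
    """Replica count a scheduled scaler would target at `now` (0 outside the window)."""
    in_window = now.weekday() in UPTIME_DAYS and UPTIME_START <= now.time() < UPTIME_END
    return normal_replicas if in_window else 0


print(desired_replicas(datetime(2024, 6, 3, 9, 30), 3))  # Monday morning: 3
print(desired_replicas(datetime(2024, 6, 8, 9, 30), 3))  # Saturday: 0
```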

Right-Sizing Workloads

It’s often shocking how many pods can run on half the resources they’re requesting. Instead of guessing resource requests:

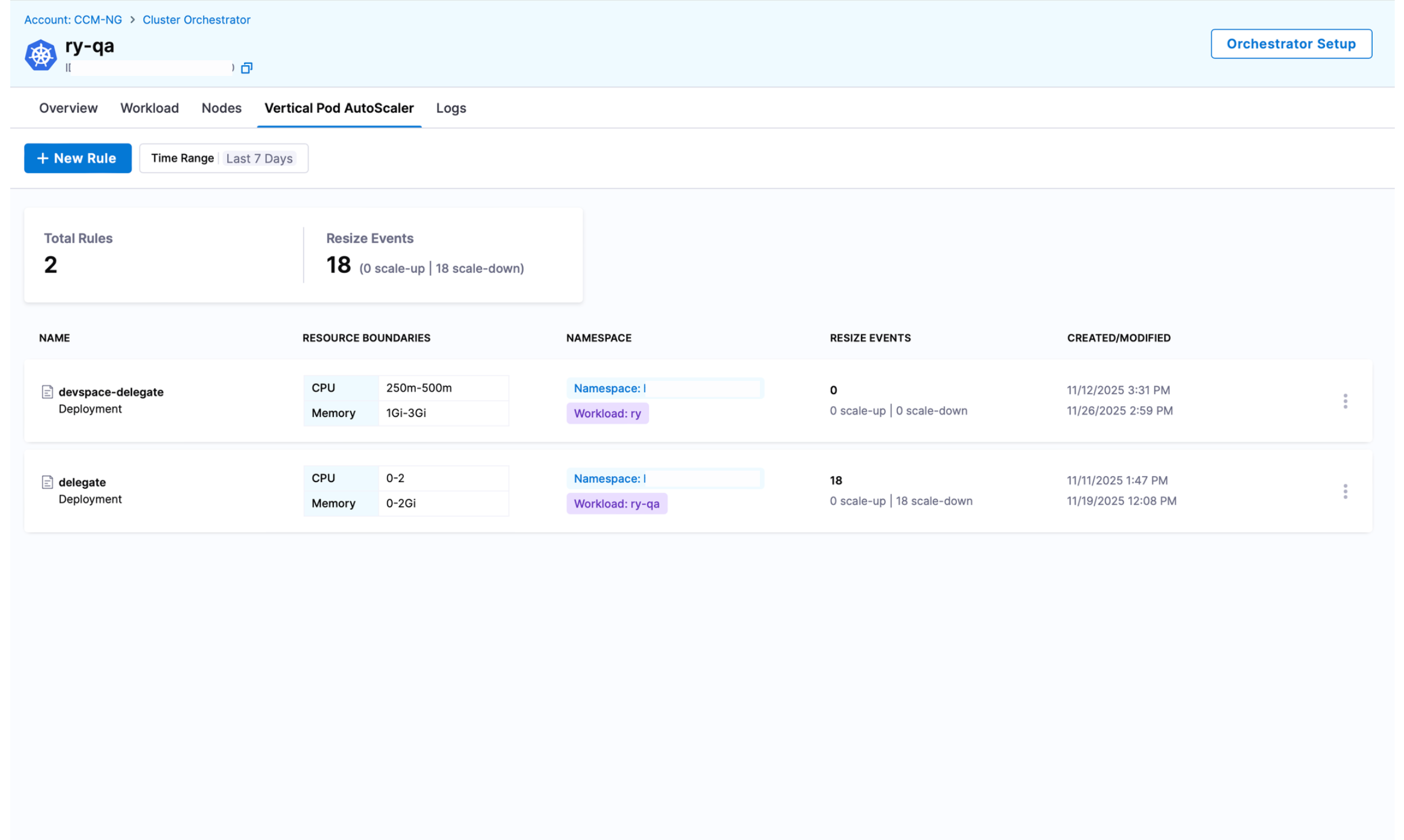

- Try Cluster Orchestrator’s Vertical Pod Autoscaler (VPA) with a single click

- Use Prometheus metrics to measure actual usage

- Analyze reports from visibility tools
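Whichever tool produces the numbers, the underlying idea is a high percentile of observed usage plus some headroom. The sketch below uses nearest-rank p95 and 15% headroom on hypothetical per-minute CPU samples; it is similar in spirit to what VPA does, but it is not VPA's actual recommender algorithm.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_request(samples: list[float], pct: float = 95, headroom: float = 0.15) -> float:
    """Suggest a CPU request: a high percentile of observed usage plus safety headroom."""
    return round(percentile(samples, pct) * (1 + headroom), 3)


# Hypothetical per-minute CPU usage (cores) for a pod that currently requests 2 cores.
usage = [0.30, 0.35, 0.40, 0.42, 0.38, 0.45, 0.50, 0.55, 0.48, 0.90]
print(recommend_request(usage))
```

The single 0.90-core spike drives the recommendation (about 1.04 cores), which is the cautious behavior you want, and it is still roughly half of the current 2-core request.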

Leverage Spot, AMD, or ARM-based Nodes

Make architecture and pricing work in your favor:

- Use node selectors or affinity rules to schedule less critical workloads onto Spot nodes. You can use Harness’s Cluster Orchestrator to run part of your workloads on Spot instances, which are up to 90% cheaper than On-Demand nodes.

- Prefer AMD or Graviton nodes for stateless or batch jobs

- Separate workloads by architecture to avoid mixed pools
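Here is what such rules can look like, expressed as a Python dict for a pod's `affinity` field. It requires arm64 nodes (the standard `kubernetes.io/arch` label) and prefers Spot capacity using Karpenter's `karpenter.sh/capacity-type` label; other provisioners and managed node groups use different capacity labels, and the weight of 80 is an arbitrary assumption.

```python
import json


def spot_arm_affinity(spot_weight: int = 80) -> dict:
    """Node affinity: require arm64 nodes, and prefer Spot capacity when it is available."""
    if not 1 <= spot_weight <= 100:
        raise ValueError("preferred scheduling weights must be between 1 and 100")
    return {
        "nodeAffinity": {
            # Hard rule: the image is built for arm64, so never land on other architectures.
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {"matchExpressions": [
                        {"key": "kubernetes.io/arch", "operator": "In", "values": ["arm64"]}
                    ]}
                ]
            },
            # Soft rule: prefer Spot, but fall back to On-Demand rather than stay Pending.
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": spot_weight,
                    "preference": {"matchExpressions": [
                        {"key": "karpenter.sh/capacity-type", "operator": "In", "values": ["spot"]}
                    ]},
                }
            ],
        }
    }


print(json.dumps({"affinity": spot_arm_affinity()}, indent=2))
```

Keeping the Spot rule "preferred" rather than "required" is the safer default for less critical workloads: when Spot capacity is reclaimed, pods can still land on On-Demand nodes.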

Use Fewer, More Efficient Node Pools

Instead of 10 specialized pools, consider:

- Consolidating into fewer, well-utilized pools

- Using node-level bin-packing strategies via Karpenter or Cluster Orchestrator

- Tuning autoscaler thresholds to enable more aggressive scale-down

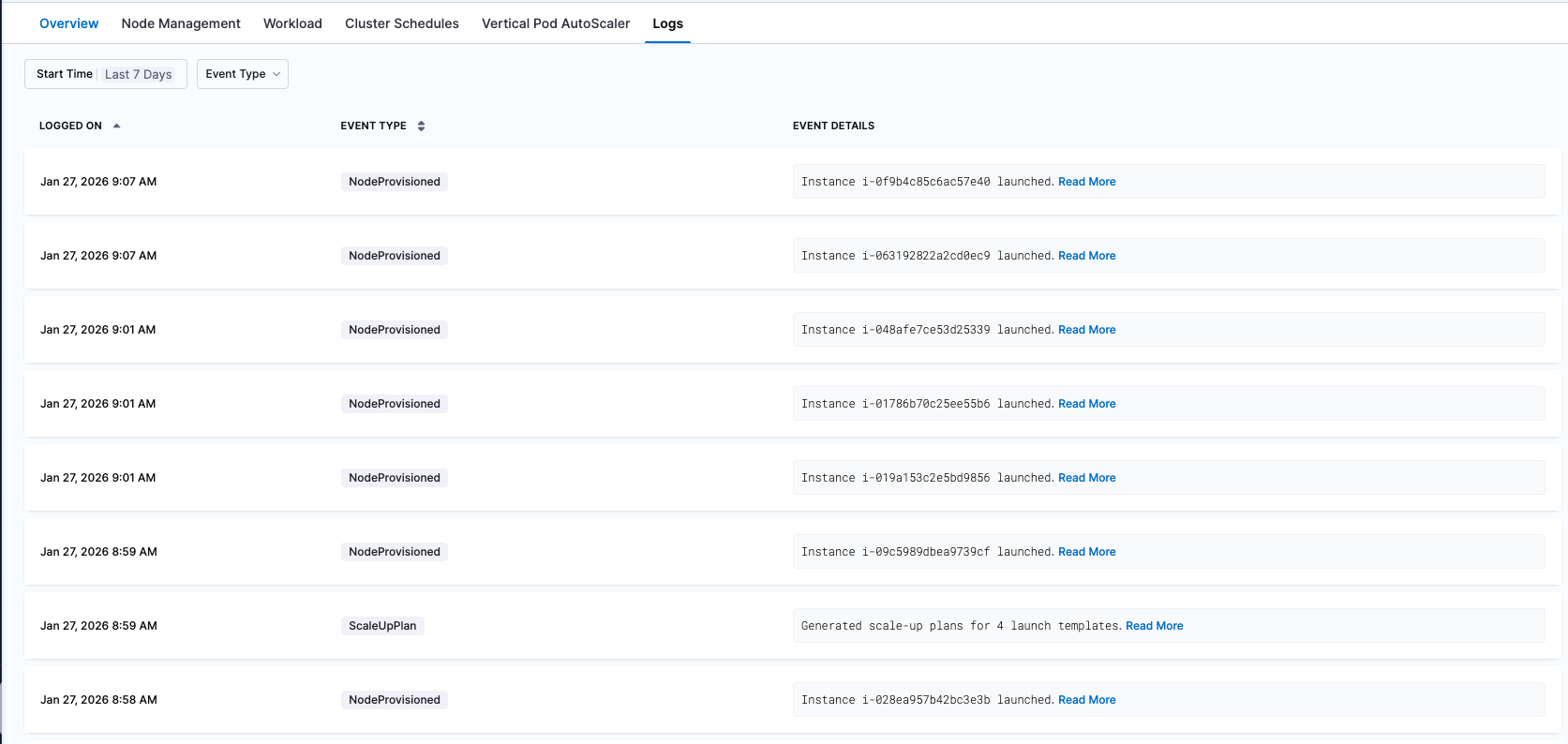
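Two numbers help here: a cluster-wide bin-packing efficiency (requested over allocatable) and the list of nodes below a scale-down threshold. The sketch below is modeled loosely on the Cluster Autoscaler's `--scale-down-utilization-threshold` (default 0.5), which compares the sum of requests with allocatable capacity; the real autoscaler also checks whether the remaining pods can be rescheduled, disruption budgets, and more. Node names and sizes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu_allocatable: float
    cpu_requested: float
    mem_allocatable_gib: float
    mem_requested_gib: float

    def utilization(self) -> float:
        """Requests-based utilization: the larger of the CPU and memory ratios."""
        return max(self.cpu_requested / self.cpu_allocatable,
                   self.mem_requested_gib / self.mem_allocatable_gib)


def scale_down_candidates(nodes: list[Node], threshold: float = 0.5) -> list[str]:
    """Nodes whose requests-based utilization is below `threshold`."""
    return [n.name for n in nodes if n.utilization() < threshold]


def cluster_cpu_packing(nodes: list[Node]) -> float:
    """Cluster-wide CPU bin-packing efficiency: total requested / total allocatable."""
    return sum(n.cpu_requested for n in nodes) / sum(n.cpu_allocatable for n in nodes)


nodes = [
    Node("pool-a-1", 4, 3.5, 16, 12),
    Node("pool-a-2", 4, 0.5, 16, 2),
    Node("pool-b-1", 8, 2.0, 32, 20),
]
print(scale_down_candidates(nodes))         # ['pool-a-2']
print(f"{cluster_cpu_packing(nodes):.1%}")  # 37.5%
```

Tracking the packing figure over time, and lowering or raising the threshold deliberately, turns "tune autoscaler thresholds" into a measurable change.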

Invisible Decisions Are Expensive

One overlooked reason why Kubernetes cost optimization is hard is that most scaling decisions are opaque. Nodes appear and disappear, but teams rarely know why a particular scale-up or scale-down happened.

Was it CPU fragmentation? A pod affinity rule? A disruption budget? A cost constraint?

Without decision-level visibility, teams are forced to guess — and that makes cost optimization feel risky instead of intentional.

Cost-aware systems work best when they don’t just act, but explain. Clear event-level insights into why a node was added, removed, or preserved help teams build trust, validate policies, and iterate safely on optimization strategies.

Scheduled Scale-Down of Idle Resources

One of the most effective ways to eliminate idle cost is time- or activity-based scaling. Instead of keeping clusters and workloads always on, resources can be scaled down when they are not needed and restored only when activity resumes.

With Harness CCM Kubernetes AutoStopping, teams can automatically scale down Kubernetes workloads, Auto Scaling Groups, VMs, and other resources based on usage signals or fixed schedules. This removes idle spend without requiring manual intervention.

Cluster Orchestrator extends this concept to the cluster level. It enables scheduled scale-down of entire clusters or specific node pools, making it practical to turn off unused capacity during nights, weekends, or other predictable idle windows.

Sometimes, the biggest savings come from not running infrastructure at all when it isn’t needed.
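Activity-based scaling needs an idleness rule and a wake-up path. The minimal sketch below assumes a hypothetical rule of "scale to zero after 30 minutes without requests, restore on the next request"; it is not how AutoStopping is implemented, and a real system would also hold or queue the first request while capacity comes back.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class IdleScaler:
    idle_after: timedelta           # how long without traffic before scaling to zero
    active_replicas: int            # replica count to restore when traffic resumes
    last_activity: Optional[datetime] = None
    replicas: int = 0

    def record_request(self, at: datetime) -> None:
        """Any incoming request marks activity and wakes the workload up."""
        self.last_activity = at
        self.replicas = self.active_replicas

    def tick(self, now: datetime) -> int:
        """Periodic check: scale to zero once the idle window has fully elapsed."""
        if self.last_activity is None or now - self.last_activity >= self.idle_after:
            self.replicas = 0
        return self.replicas


t0 = datetime(2024, 6, 3, 9, 0)
scaler = IdleScaler(idle_after=timedelta(minutes=30), active_replicas=2)
scaler.record_request(t0)
print(scaler.tick(t0 + timedelta(minutes=10)))  # 2: still within the idle window
print(scaler.tick(t0 + timedelta(minutes=45)))  # 0: idle for 45 minutes, scaled down
scaler.record_request(t0 + timedelta(minutes=50))
print(scaler.tick(t0 + timedelta(minutes=55)))  # 2: traffic resumed
```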

Treat Cost Like a First-Class Metric

Cost is not just a financial problem. It’s an engineering challenge — and one that we, as developers, can tackle with the same tools we use for performance, resilience, and scalability.

Start small. Review a few workloads. Test new node types. Measure bin-packing efficiency weekly.

You don’t need to sacrifice performance — just be intentional with your cluster design.

Check out Cluster Orchestrator by Harness CCM today!

Kubernetes doesn’t have to be expensive. It just has to be run smarter.

Build a scalable cloud cost optimization recommendation system

How we built a scalable system of generating recommendations for cloud cost optimization

Overview

As cloud adoption continues to rise, efficient cost management demands a robust and automated strategy. Native cloud provider recommendations, while helpful, often have limitations — they primarily focus on vendor-specific optimizations and may not fully align with unique business requirements. Additionally, cloud providers have little incentive to highlight cost-saving opportunities beyond a certain extent, making it essential for organisations to implement customised, independent cost optimization strategies.

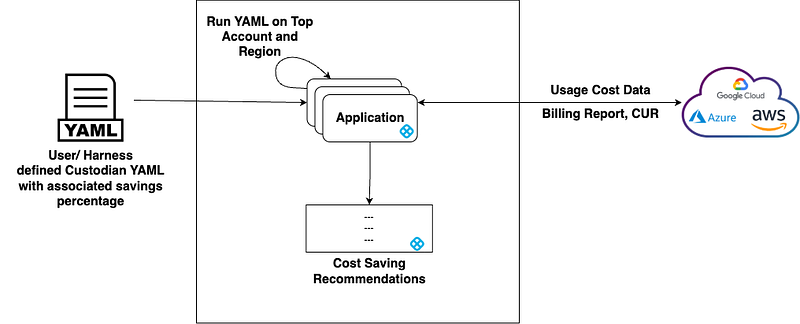

At Harness, we developed a Policy-Based Cloud Cost Optimization Recommendations Engine that is highly customisable and operates across AWS, Azure, and Google Cloud. This engine leverages YAML-based policies powered by Cloud Custodian, allowing organisations to define and execute cost-saving rules at scale. The system continuously analyses cloud resources, estimates potential savings, and provides actionable recommendations, ensuring cost efficiency across cloud environments.

Benefits of a Policy-Based Cloud Cost Optimization Recommendations Engine

- Customisability: Users can define policies tailored to their organisation’s cost optimization strategy. Policies allow filtering resources based on conditions such as resource metrics, operational state (e.g., running, stopped), lifecycle phase (e.g., age, creation date), and compliance attributes to enforce governance and cost optimization.

- Multi-Cloud Support: Works across AWS, Azure, and GCP, ensuring a consistent and unified cost-saving strategy. Avoids vendor lock-in by not relying solely on provider-native cost recommendations.

- Automated Cost Optimization: Automatically applies policies across multiple accounts and regions to detect and remediate cost inefficiencies. Continuously refines recommendations as cloud resources evolve.

- Transparent and Scalable Approach: Cost-saving logic is fully visible in YAML policies, unlike opaque cloud provider recommendations. Scales effortlessly as new resources and accounts are added.

Key Components Involved

Cloud Custodian

Cloud Custodian, an open-source CNCF-backed tool, is at the core of our policy-based engine. It enables defining governance rules in YAML, which are then executed as API calls against cloud accounts. This allows seamless policy execution across different cloud environments.

Cloud Cost Data Sources

The system relies on detailed billing and usage reports from cloud providers to calculate cost savings:

- AWS Cost and Usage Report (CUR) — Provides granular cost breakdowns at a per-resource level.

- Azure Billing Report — Offers insights into cloud usage, pricing models, and applied discounts.

- Google Cloud Cost Usage Report — Captures detailed billing data, including SKU-level pricing and committed use discounts.

Solution Overview

The solution leverages Cloud Custodian to define YAML-based policies that identify cloud resources based on specific filters. The cost of these resources is retrieved from relevant cost data sources (AWS Cost and Usage Report (CUR), Azure Billing Report, and GCP Cost Usage Data). The identified cost is then multiplied by the predefined savings percentage to estimate the potential savings from the recommendation.

The diagram above illustrates the workflow of the recommendation engine. It begins with user-defined or Harness-defined Cloud Custodian policies, which are executed across various accounts and regions. The Harness application processes these policies, fetches cost data from cloud provider reports (AWS CUR, Azure Billing Report, GCP Cost Usage Data), and computes savings. The final output is a set of cost-saving recommendations that help users optimize their cloud spending.
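The savings arithmetic itself fits in a few lines. The sketch assumes billing line items have already been reduced to hypothetical `resource_id`/`cost` fields (in an AWS CUR export these roughly correspond to the `lineItem/ResourceId` and `lineItem/UnblendedCost` columns) and that each policy carries a savings percentage; it is an illustration of the formula, not the Harness implementation.

```python
from collections import defaultdict


def cost_by_resource(billing_rows: list[dict]) -> dict[str, float]:
    """Aggregate line-item cost per resource ID (rows mimic a CUR-style export)."""
    totals: dict[str, float] = defaultdict(float)
    for row in billing_rows:
        totals[row["resource_id"]] += row["cost"]
    return dict(totals)


def estimated_savings(flagged_ids: list[str], costs: dict[str, float], savings_pct: float) -> float:
    """Savings = (cost of resources flagged by a policy) x (the policy's savings percentage)."""
    flagged_cost = sum(costs.get(rid, 0.0) for rid in flagged_ids)
    return flagged_cost * savings_pct / 100


rows = [
    {"resource_id": "vol-1", "cost": 12.0},
    {"resource_id": "vol-1", "cost": 8.0},
    {"resource_id": "vol-2", "cost": 5.0},
    {"resource_id": "i-9", "cost": 70.0},
]
costs = cost_by_resource(rows)
# A deletion policy removes the whole resource cost, so 100% is a natural rate here.
print(estimated_savings(["vol-1", "vol-2"], costs, savings_pct=100))  # 25.0
```

A rightsizing policy would instead carry a partial percentage, because the resource keeps running at a smaller size.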

How It Works: A Step-by-Step Breakdown with an Example

Consider an example YAML rule that deletes unattached Amazon Elastic Block Store (EBS) volumes. When this policy is executed against any account and region, it selects all unattached EBS volumes there and deletes them.
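A Cloud Custodian policy for this case typically targets the `aws.ebs` resource, filters on an empty `Attachments` list, and applies the `delete` action. To show those semantics without touching AWS, the Python sketch below holds such a policy as a dict and evaluates it against mock volume records. The mini evaluator is hypothetical and supports only exact key/value filters; it is not Cloud Custodian's engine, and it reports matches instead of deleting anything.

```python
POLICY = {
    "policies": [
        {
            "name": "delete-unattached-ebs-volumes",
            "resource": "aws.ebs",
            "filters": [{"Attachments": []}],  # value filter: the volume has no attachments
            "actions": ["delete"],
        }
    ]
}


def matches(resource: dict, filters: list[dict]) -> bool:
    """All filters must hold; each filter here is a simple key == value check."""
    return all(resource.get(key) == value for f in filters for key, value in f.items())


def run_policy(policy: dict, resources: list[dict]) -> list[str]:
    """Return IDs of resources the policy selects (the action is reported, not executed)."""
    return [r["VolumeId"] for r in resources if matches(r, policy["filters"])]


# Hypothetical inventory, shaped like EBS DescribeVolumes output.
volumes = [
    {"VolumeId": "vol-aaa", "Size": 100, "Attachments": [{"InstanceId": "i-123"}]},
    {"VolumeId": "vol-bbb", "Size": 500, "Attachments": []},
    {"VolumeId": "vol-ccc", "Size": 50, "Attachments": []},
]
for p in POLICY["policies"]:
    print(p["name"], "->", run_policy(p, volumes), "action:", p["actions"])
```

Serializing `POLICY` with PyYAML's `yaml.safe_dump` would produce the equivalent YAML file to hand to Cloud Custodian.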

- Policy Definition: YAML policies are written using Cloud Custodian to target specific resource inefficiencies.

- Policy Execution: Policies are executed across multi-cloud accounts and regions, filtering unoptimized resources.

- Cost Data Integration: The system retrieves the cost of filtered resources from AWS CUR, Azure Billing Report, and GCP Cost Usage Data.

- Savings Calculation: The estimated cost savings are derived by applying the predefined savings percentage associated with each policy to the cost of the filtered resources.

- Recommendation Generation: The final output is a set of actionable cost-saving recommendations that users can review and apply.

Conclusion

Harness CCM’s Policy-Based Recommendation Engine offers an intelligent, automated, and scalable approach to optimizing cloud costs. Unlike native cloud provider tools, it is designed for multi-cloud environments, allowing organisations to define custom cost-saving policies and gain transparent, data-driven insights for continuous optimization.

With over 50 built-in policies and full support for user-defined rules, Harness enables businesses to maximise savings, enhance cost visibility, and automate cloud cost management at scale. By reducing unnecessary cloud spend, companies can reinvest those savings into innovation, growth, and core business initiatives — rather than increasing the profits of cloud vendors.

Sign up for Harness CCM today and experience the power of automated cloud cost optimization firsthand!

How Top Engineering Teams Use Software Engineering Insights (SEI) to Drive Measurable Business Impact

Visibility Isn’t Enough: You Need Insights

Engineering organizations today don’t lack data—they lack clarity. Delivery timelines, developer activity, and code quality metrics are scattered across systems, making it hard to answer simple but critical questions: Where are we losing time? Are we investing in the right work? Who needs support or coaching?

This is where Harness Software Engineering Insights (SEI) steps in. Unlike traditional dashboards, SEI offers opinionated, role-based insights that connect engineering execution with business value.

In this post, we’ll walk through a proven rollout framework, real customer success stories, and a practical guide for any organization looking to implement an engineering metrics program (EMP) that actually drives impact.

Step 1: Start With Purpose—Define Objectives That Matter

Rolling out SEI without a clear objective is like configuring CI/CD pipelines without deployment goals. Before diving into dashboards or metrics, align internally on what you’re trying to improve.

Most organizations fall into one or more of the following categories:

- Efficiency: Speed up software delivery and reduce cycle time.

- Productivity: Boost developer engagement and output consistency.

- Alignment: Ensure engineering effort maps to strategic priorities, like building new features vs. maintaining legacy systems.

💡 A powerful first step is simply asking: What are the top 3 decisions you wish you could make with data but currently can't?

Step 2: Choose the Right Engineering Metrics to Track

Once your objectives are clear, it’s time to define the key performance indicators (KPIs) that reflect progress. At Harness, we recommend starting with 5 core metrics that align with your goals:

- DORA Metrics: Lead Time for Change, Change Failure Rate (CFR), Deployment Frequency, MTTR.

- Pull Request Cycle Time: Time from PR creation to merge—an essential signal of workflow health.

- Commit-to-Done Ratio & Scope Creep: Help measure sprint execution quality.

- Effort Allocation (Business Alignment): Understand time spent on KTLO vs. innovation.

- Trellis Score & Coding Days: Surface team and individual developer engagement patterns.
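Several of these metrics reduce to simple arithmetic over deployment and pull request timestamps. The Python sketch below uses one common set of definitions over hypothetical records: lead time runs from first commit to production, and MTTR is approximated from the failing deployment to restoration. SEI's profiles let you define these boundaries differently, and this is not how SEI computes them internally.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

HOUR = timedelta(hours=1)


@dataclass
class Deployment:
    committed_at: datetime                  # first commit in the change
    deployed_at: datetime                   # change reached production
    failed: bool = False                    # change caused a production failure
    restored_at: Optional[datetime] = None  # service restored (failed changes only)


def dora_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Deployment frequency, median lead time, change failure rate, and MTTR."""
    failures = [d for d in deploys if d.failed]
    restore_hours = [(d.restored_at - d.deployed_at) / HOUR for d in failures if d.restored_at]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "lead_time_hours": median((d.deployed_at - d.committed_at) / HOUR for d in deploys),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr_hours": sum(restore_hours) / len(restore_hours) if restore_hours else 0.0,
    }


def pr_cycle_time_hours(prs: list[tuple[datetime, datetime]]) -> float:
    """Median hours from PR creation to merge, given (created_at, merged_at) pairs."""
    return median((merged - created) / HOUR for created, merged in prs)


t = datetime(2024, 6, 3, 9, 0)
deploys = [
    Deployment(t, t + timedelta(hours=20)),
    Deployment(t + timedelta(days=1), t + timedelta(days=1, hours=6),
               failed=True, restored_at=t + timedelta(days=1, hours=8)),
    Deployment(t + timedelta(days=2), t + timedelta(days=2, hours=30)),
    Deployment(t + timedelta(days=3), t + timedelta(days=3, hours=4)),
]
prs = [(t, t + timedelta(hours=10)), (t, t + timedelta(hours=30)), (t, t + timedelta(hours=2))]
print(dora_metrics(deploys, period_days=7))
print(pr_cycle_time_hours(prs))  # 10.0
```

Medians are used for lead time and PR cycle time because a few long-running changes would otherwise dominate an average.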

These metrics aren’t just about numbers—they tell a story. And SEI’s pre-built dashboards help visualize that story from day one.

Step 3: Configure SEI for Meaningful Insights

Out-of-the-box data isn’t enough—you need context. SEI allows deep configuration across integrations, people, and workflows to ensure accuracy and actionability.

Integrate the Right Systems

Start with the essentials: Jira or ADO (issue tracking), GitHub or Bitbucket (SCM), Jenkins or Harness CI (build/deploy). Validate data ingestion and set up monitoring for failed syncs.

Set Up Contributor Attributes

Merge developer identities across systems and tag them with meaningful metadata: Role, Team, Location, Manager, and Employee Type (FTE, contractor). This enables advanced filtering, benchmarking, and team-level coaching.
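Identity merging usually keys on something shared across tools, such as email. The minimal sketch below assumes each integration yields hypothetical `system`/`username`/`email` records and that a normalized email is a reliable join key (often it is not, which is why manual overrides matter); the attribute names mirror the metadata listed above.

```python
from collections import defaultdict


def merge_identities(accounts: list[dict], attributes: dict[str, dict]) -> dict[str, dict]:
    """Group per-tool accounts into one contributor per normalized email, then attach metadata."""
    merged: dict[str, dict] = defaultdict(lambda: {"accounts": {}})
    for acc in accounts:
        key = acc["email"].strip().lower()
        merged[key]["accounts"][acc["system"]] = acc["username"]
    for email, person in merged.items():
        person.update(attributes.get(email, {}))  # e.g. role, team, location, manager, type
    return dict(merged)


accounts = [
    {"system": "jira", "username": "asmith", "email": "A.Smith@example.com"},
    {"system": "github", "username": "alice-s", "email": "a.smith@example.com"},
    {"system": "github", "username": "bjones", "email": "b.jones@example.com"},
]
attributes = {
    "a.smith@example.com": {"role": "Engineer", "team": "Payments", "employee_type": "FTE"},
}
contributors = merge_identities(accounts, attributes)
print(contributors["a.smith@example.com"])
```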

Create Focused Collections

Use Asset-Based Collections for things like repositories or services (ideal for DORA/Sprint metrics) and People-Based Collections for teams, departments, or geographies (perfect for Dev Insights, Trellis, and Business Alignment).

Define Profiles & Widgets

SEI lets you build custom profiles for DORA metrics, Business Alignment, and Trellis. These profiles allow you to set your own definitions for “Lead Time,” “MTTR,” or what constitutes “New Work.” Configurable widgets ensure the insights match your team’s workflows—not the other way around.

Step 4: Align Metrics to Roles & Personas

One of SEI’s most valuable capabilities is persona-based reporting. Not every stakeholder needs to see every metric. Instead, create tailored views based on what matters to them.

| Persona | Primary Metrics | Cadence |
|---|---|---|
| CTO / VP Engineering | DORA, Effort Allocation, Innovation % | Quarterly |
| Director of Engineering | Sprint Trends, PR Cycle Time, MTTR | Monthly |
| Engineering Manager | Coding Days, PR Approval Rate, Rework | Weekly |
| Scrum Master / TPM | Commit-to-Done, Scope Creep, Sprint Hygiene | Weekly/Daily |
| Product Manager | Feature Delivery Lead Time, KTLO vs. New Work | Bi-weekly |

By aligning metrics to what stakeholders actually care about, you reduce dashboard fatigue and increase engagement.

Step 5: Build a Rhythm of Review & Ownership

Rolling out dashboards isn’t enough—you need cadence and accountability.

Successful SEI customers establish regular reviews, such as:

- Weekly Ops Syncs: Review PR trends, coding days, scope creep.

- Monthly Product/Engineering Syncs: Review business alignment and new work %.

- Quarterly QBRs: Revisit OKRs, refine metrics, show trend improvements.

Each dashboard or collection should have an owner, responsible for interpreting and acting on the insights.

Step 6: Scale with Persona-Based Metrics

Once the foundation is in place, go deeper. SEI allows you to scale insight delivery across the org by:

- Creating role-specific dashboards (e.g., VP of Engineering vs. Scrum Master)

- Using Trellis to coach teams and surface hidden top performers

- Integrating with HR systems to layer in context like tenure, geography, or team churn

This is how SEI becomes more than a dashboard—it becomes your engineering operating system.

Step 7: Set Strategic, Measurable Engineering OKRs

Data without goals is directionless. Use SEI to establish stretch goals tied to organizational outcomes.

Here are common SEI-aligned OKRs:

- 📉 Reduce PR Cycle Time by 25%

- 🧠 Improve Coding Days per Developer to 3.5+/week

- ⚖️ Increase Innovation Work (New Features) to 60% of total effort

- 🔧 Decrease Change Failure Rate (CFR) to under 10%

Because SEI continuously measures these metrics, you can track OKR progress in real time.
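Tracking a key result comes down to comparing the current value with a target derived from the baseline. The minimal sketch below computes a target for a "reduce by X%" key result and the share of the distance covered so far; the baseline and current values are hypothetical.

```python
def okr_target(baseline: float, reduction_pct: float) -> float:
    """Target value for a 'reduce by X%' key result."""
    return baseline * (1 - reduction_pct / 100)


def okr_progress(baseline: float, current: float, target: float) -> float:
    """Share of the distance from baseline to target covered so far, clamped to [0, 1].

    Signed distances make this work for both 'reduce' and 'increase' key results.
    """
    if baseline == target:
        return 1.0
    progress = (baseline - current) / (baseline - target)
    return min(max(progress, 0.0), 1.0)


# Hypothetical: PR cycle time baseline of 8.0 days, key result "reduce by 25%".
target = okr_target(8.0, 25)  # 6.0 days
print(f"target {target:.1f} days, progress {okr_progress(8.0, 7.0, target):.0%}")  # target 6.0 days, progress 50%
```

The same `okr_progress` call handles an "increase" goal such as raising innovation work from 40% to 60% of effort: a current value of 50% is also halfway there.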

Real-World Examples: How Leading Companies Use SEI

Cybersecurity: Driving Innovation While Improving Quality

🧭 Objective:

Improve engineering velocity without compromising security or code quality, while ensuring more effort is spent on new feature development.

📈 Key Results:

- Increased New Work allocation to 62.6% of total engineering effort

- Reduced Unapproved Pull Requests by 32%, decreasing potential backlog risk

- Cut PR Cycle Time from 10.4 days to 6.6 days (36% improvement)

💥 Impact:

By using SEI’s Dev Insights and Business Alignment dashboards, the customer was able to shift engineering focus toward innovation. Unapproved PR backlog reductions improved code review discipline, while faster PR cycle times helped the team deliver secure, high-quality features faster.

Information Technology: Scaling Delivery Velocity Without Sacrificing Stability

🧭 Objective:

Accelerate delivery cadence, reduce lead times, and establish a baseline for operational resilience across distributed teams.

📈 Key Results:

- PR Cycle Time dropped from 2.9 days to 47.1 minutes (98.9% reduction)

- Deployment Frequency increased from 1.09/day to 33.91/day

- Lead Time for Change reduced from 9.3 months to 2.8 months

💥 Impact:

SEI enabled visibility into every stage of the SDLC — from PRs to production. Dashboards helped engineering leadership identify workflow bottlenecks, while improved cycle time allowed the team to launch features continuously. The organization was also able to define new goals around MTTR reduction for future sprints.

Financial Services: Balancing Risk and Speed During Transformation

🧭 Objective:

Improve release predictability, reduce change failure rates, and maintain quality during large-scale technology transformations.

📈 Key Results:

- Lead Time reduced by 78% (from 1.3 months to 9.4 days)

- Change Failure Rate (CFR) dropped from 39.9% to 14.4%

- PR Merge Time decreased from 22 hours to 6.4 minutes (98% faster)

💥 Impact:

Using SEI’s DORA and Sprint Insights dashboards, engineering teams surfaced high-risk areas and improved review discipline. Leadership used Business Alignment reports to visualize time allocation, allowing them to rebalance priorities between legacy maintenance and innovation initiatives — critical for de-risking digital transformation.

Hospitality: Optimizing Hybrid Teams and Engineering Workflows

🧭 Objective:

Improve collaboration and execution within hybrid teams (FTEs and contractors), while accelerating delivery with fewer blockers.

📈 Key Results:

- PR Approval Time dropped from 10.9 to 8.2 days

- Average Coding Days per Week sustained at 4.2 days, exceeding industry average

- Contributor collaboration scores highlighted imbalances between contractor and FTE output

💥 Impact:

SEI helped the customer restructure their hybrid engineering model by revealing top contributors, low-collaboration patterns, and team-specific bottlenecks. By tagging contributors by type, team, and location, the organization realigned review ownership and improved handoff speed across distributed groups.

Gaming: Enhancing Stability and Predictability in Feature Delivery

🧭 Objective:

Reduce production risk while accelerating feature releases in a highly agile environment.

📈 Key Results:

- Change Failure Rate improved by 83% (from 23.7% to 3.9%)

- Lead Time dropped from 2 months to 18.7 days

- Unapproved PRs decreased by 60%, improving review throughput

💥 Impact:

SEI’s DORA metrics helped the team move from reactive issue management to proactive release planning. With improved scope hygiene and PR discipline, the organization was able to deliver features at a faster pace while maintaining platform stability — a crucial balance in gaming environments where user experience is paramount.

API Security: Accelerating High-Throughput Development with Balance

🧭 Objective:

Speed up secure development without compromising engineering discipline or quality during rapid team expansion.

📈 Key Results:

- PR Lead Time reduced by 95.7% (from 3.2 months to 4.1 days)

- Coding Days increased from 0.4 to 4.9 days/week (more than a 10x improvement)

- Deployment Frequency scaled to 95.79/day, enabling near-continuous delivery

💥 Impact:

The customer used SEI to quantify the tradeoff between speed and review quality. By highlighting areas with excessive unapproved PRs and scope creep, the team set up opinionated OKRs to strike a balance between velocity and sustainability. Trellis and Dev Insights dashboards were used to coach developers and improve overall workflow consistency.
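The percentage changes in these case studies compare values in different units (days vs. minutes, months vs. days), so both values must be converted to a common unit first. The sketch below shows that arithmetic. The 30-day month is an assumption, because the case studies do not say how months were converted. The function names are illustrative and are not part of SEI.

```python
"""Percentage change between two durations that may use different units."""

# Conversion factors to minutes. The 30-day month is an assumption:
# the case studies do not say how months were converted to days.
MINUTES_PER_UNIT = {
    "minutes": 1.0,
    "hours": 60.0,
    "days": 24 * 60.0,
    "months": 30 * 24 * 60.0,
}


def to_minutes(value, unit):
    """Convert a duration expressed in `unit` to minutes."""
    return value * MINUTES_PER_UNIT[unit]


def percent_reduction(before, after):
    """Percentage decrease from `before` to `after`, which must share a unit."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before * 100.0


if __name__ == "__main__":
    # IT case study: PR Cycle Time, 2.9 days -> 47.1 minutes
    it = percent_reduction(to_minutes(2.9, "days"), to_minutes(47.1, "minutes"))
    print(f"PR cycle time reduction: {it:.1f}%")
    # Gaming case study: Change Failure Rate is already a percentage, so no conversion
    cfr = percent_reduction(23.7, 3.9)
    print(f"CFR reduction: {cfr:.1f}%")
```

When run as a script, it prints a 98.9% reduction for the IT team's PR cycle time and 83.5% for the gaming team's CFR, which the case study rounds to 83%. Under the same 30-day assumption, 3.2 months to 4.1 days works out to the 95.7% quoted in the API Security case study.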

Build an Insight-Driven Engineering Culture

The most successful engineering organizations don’t just collect metrics—they operationalize them. Harness SEI enables your teams to go beyond dashboards and build a culture of insight, accountability, and impact.

By following a structured rollout, aligning metrics to personas, and setting outcome-focused OKRs, you can make SEI the backbone of your engineering excellence strategy.

About the Author

Adeeb Valiulla leads the Quality Assurance & Resilience, Cost & Productivity function at Harness, where he works closely with Fortune 500 customers to drive engineering efficiency, improve developer experience, and align software delivery efforts with business outcomes. With a focus on measurable insights, Adeeb helps organizations turn engineering data into actionable intelligence that fuels continuous improvement. He brings a unique blend of technical depth and strategic vision, helping teams unlock their full potential through data-driven transformation.

Elite Engineering Teams Don’t Guess—They Prove Impact

Introduction: The Game Has Changed

Engineering leadership used to be about gut feel, strong opinions, and shipping fast. But that playbook is expiring—quickly.

The world we’re building software in today is fundamentally different. Economic pressure, AI disruption, rising complexity, and the demand for hyper-efficiency have converged. Old-school metrics, instinct-led prioritization, and managing by velocity charts won’t cut it.

What today’s engineering leaders need isn’t more dashboards. They need clarity. They need trust. They need a new way to lead.

And most of all? They need to stop guessing.

Stop Guessing. Lead with Clarity.

You shouldn’t have to start every leadership meeting explaining what your teams are working on, why something slipped, or where time is going.

With Harness Software Engineering Insights (SEI), you don’t guess. You know.

You see where bottlenecks are forming. You know when PRs are aging in silence. You understand whether your teams are overcommitted, burned out, or executing beautifully. You know the tradeoffs being made between tech debt, features, and KTLO—before someone asks.

SEI replaces opinions with insight. It surfaces the friction you can’t see in a sprint report, and helps you make smarter decisions based on what’s actually happening—not what you hope is happening.

Because in the new era of engineering, clarity is leadership.

Show the Full Story of Engineering

But when you only measure output—story points, releases, burnup—you miss the nuance. You miss the tradeoffs. You miss the why behind the work.

Harness SEI helps leaders tell the complete story:

- How time is spent (KTLO vs. Building New Stuff vs. Improving Existing Stuff)

- How long it takes to turn ideas into reality (lead time, PR cycle time)

- How well teams plan, execute, and adapt (commit-to-done, scope creep)

- And how all of this connects to business value

This is the story your CFO, CPO, and CEO need to hear—not how many tickets you closed last sprint.

Engineering deserves to be understood. SEI makes it possible.

Efficiency is the New Growth

Let’s be honest: we’re no longer in a “hire at all costs” era. Efficiency is the new growth. The mandate is clear:

- Do more with less.

- Move faster with smaller teams.

- Prove impact, not just activity.

And that’s not a burden—it’s an opportunity.

With Harness SEI, leaders can finally quantify engineering capacity, align work with outcomes, and invest where it matters most. You can see which teams are stretched too thin, where tech debt is slowing you down, and which initiatives are driving measurable business value.

This isn’t about pushing harder. It’s about working smarter, leading sharper, and delivering more strategically.

Protect Creativity. Automate the Repetition.

Great engineering happens when teams have clarity, focus, and space to build. But too often, they’re stuck in the weeds—fighting fires, filling out status reports, and guessing what matters.

With SEI, that changes.

- No more asking devs for their weekly updates—you already have the data.

- No more chasing sprint reports—they’re live and self-serve.

- No more guessing which efforts are paying off—you can prove it.

This frees up energy for real engineering. It protects time for hackathons, R&D spikes, creative sprints—the things that move the business forward and keep developers fulfilled.

Because in a world full of AI and automation, the one thing we can’t afford to lose is human creativity.

SEI helps you protect it—by getting rid of everything that wastes it.

A Better Developer Experience Starts with Better Leadership

Burnout doesn’t start with bad code. It starts with bad leaders.

When developers don’t know where their work is going, why it matters, or what success looks like, morale suffers. When they’re forced to do status updates instead of shipping, they disengage. When PRs sit for days, they lose momentum.

SEI enables developers to see how their work connects to outcomes. It enables faster feedback, less friction, and clearer focus.

And for leaders? It means fewer surprises, better retention, and more meaningful 1:1s.

The Future of Engineering Leadership Starts Now

The best engineering leaders of the next decade won’t just be great technologists; they’ll also be clear communicators, business strategists, and defenders of engineering best practices.

They’ll lead with data, empathy, and decisiveness.

They’ll connect effort to impact.

They’ll stop guessing. And they’ll lead better because of it.

If you're ready to lead in this new era, Harness SEI is your competitive advantage.

The Executive Playbook: Communicating Engineering Metrics for Maximum Business Impact

Introduction:

For too long, engineering has been seen as a black box—an opaque function that takes in business requirements and delivers software without clear visibility into the process. But in today’s data-driven, business-first world, engineering leaders must do more than execute; they must influence, align, and communicate with executive peers to drive business outcomes.

CTOs, VPs of Engineering, and other technical leaders who can effectively translate engineering metrics into business impact gain a seat at the strategic table. Instead of reacting to business requests, they help shape company priorities, resource allocation, and long-term growth strategies.

But here’s the challenge: Traditional engineering metrics don’t resonate with executives. Story points, commit counts, and deployment logs mean little to a CFO, CMO, or CEO. To gain influence, engineering leaders need to frame their work in business terms—think predictability, customer impact, cost efficiency, and revenue acceleration.

That’s where Harness Software Engineering Insights (SEI) comes in. SEI transforms engineering metrics into clear, actionable insights that bridge the gap between technical execution and business strategy. This blog will show you how to use SEI to speak the language of executives, drive cross-functional alignment, and elevate engineering’s strategic role in your organization.

Understanding Executive Priorities: Tailoring Metrics to Your Audience

Before presenting engineering metrics, it’s critical to understand what matters to your executive peers. Different leaders prioritize different business drivers, and aligning your communication style accordingly makes your insights more relevant and impactful.

How Different Executives Think About Metrics

| Executive | Key Priorities | How Engineering Metrics Apply |
|---|---|---|
| CEO (Chief Executive Officer) | Revenue growth, competitive differentiation, innovation | Engineering’s impact on faster time-to-market, scalability, and business alignment |
| CFO (Chief Financial Officer) | Cost efficiency, budget predictability, ROI | Engineering capacity, cost of technical debt, and efficiency improvements |
| CRO (Chief Revenue Officer) | Sales velocity, customer retention, revenue expansion | Feature delivery timelines, system reliability, customer-impacting defects |
| CPO (Chief Product Officer) | Product roadmap execution, user experience, feature adoption | Lead Time for Change, deployment frequency, engineering capacity for innovation |
| CMO (Chief Marketing Officer) | Digital transformation, campaign execution, website/app performance | Site reliability, system uptime, infrastructure scalability, release predictability |

🔹 Takeaway: Before presenting engineering data, frame it in terms of the business goals that resonate with each executive stakeholder.

Choosing the Right Metrics: Moving from Engineering-Centric to Business-Focused

Many engineering leaders fall into the trap of reporting on vanity metrics—like total commits, number of deployments, or story points completed—without connecting them to business outcomes.

The key is choosing the right metrics that executives care about. Harness SEI helps track engineering performance across three core areas:

- Efficiency → How quickly and predictably does engineering deliver value?

- Productivity → How effectively are engineering resources utilized?

- Business Alignment → How well does engineering effort map to company priorities?

Let’s explore which SEI metrics best support each area.

Key Engineering Metrics for Executive Communication

1. On-Time Delivery → Engineering Predictability Matters

- Why It Matters: Executives need confidence in engineering’s ability to deliver on time and as planned to align sales, marketing, and customer expectations.

- SEI Advantage: Track commit-to-done ratio, sprint hygiene, and release cadence to demonstrate predictable execution.

🎯 How to Communicate It: “Over the past quarter, engineering has improved on-time delivery from 67% to 85%, reducing last-minute delays and improving cross-team alignment.”

2. Engineering Capacity → Balancing Innovation & Maintenance

- Why It Matters: Business leaders must understand how much of engineering’s effort is spent on innovation vs. sustaining work.

- SEI Advantage: SEI’s Business Alignment Dashboard helps track engineering investments in:

  - KTLO (Keep the Lights On) – Maintenance and bug fixes

  - Build New Stuff – Feature development and innovation

  - Improve Existing Stuff – Enhancements and refactoring

🎯 How to Communicate It: “Currently, 54% of engineering work is dedicated to new feature development, while 32% is spent on maintenance and 14% on technical debt reduction.”
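To see where percentages like these come from, here is a minimal sketch that turns categorized work items into allocation shares. It assumes that each item is tagged with one of the three investment categories above and carries an effort value (story points, hours, or a ticket count). It also assumes that "maintenance" maps to KTLO and "technical debt reduction" maps to Improve Existing Stuff. The function name and sample data are hypothetical and do not represent SEI's API.

```python
from collections import defaultdict

# The three investment categories used by the Business Alignment example.
CATEGORIES = ("KTLO", "Build New Stuff", "Improve Existing Stuff")


def allocation_shares(items):
    """Return each category's share of total effort as a percentage.

    `items` is an iterable of (category, effort) pairs. Effort might be story
    points, hours, or a ticket count; choosing the unit is a policy decision.
    """
    totals = defaultdict(float)
    for category, effort in items:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        totals[category] += effort
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {c: 0.0 for c in CATEGORIES}
    return {c: 100.0 * totals[c] / grand_total for c in CATEGORIES}


if __name__ == "__main__":
    # Hypothetical sprint data, in story points.
    sample = [("Build New Stuff", 27), ("KTLO", 16), ("Improve Existing Stuff", 7)]
    for category, share in allocation_shares(sample).items():
        print(f"{category}: {share:.0f}%")
```

With the hypothetical sample, the shares come out to 54%, 32%, and 14%, which matches the split in the example statement above. Weighting by story points rather than ticket count is one possible choice. It will shift the numbers if maintenance tickets tend to be small.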

3. Lead Time for Change & Deployment Frequency → Measuring Agility & Business Impact

- Why It Matters: Faster feature delivery leads to quicker customer adoption and revenue growth.

- SEI Advantage:

  - Lead Time for Change → Measures how quickly engineering can deliver value from ideation to production.

  - Deployment Frequency → Indicates the speed and efficiency of software releases.

🎯 How to Communicate It: “We’ve reduced Lead Time for Change from 14 days to 9 days, improving our ability to respond to market demands faster.”

4. Ramp Time for Engineers → Setting Realistic Hiring Expectations

- Why It Matters: Hiring new engineers is only part of scaling; onboarding efficiency determines how quickly they contribute.

- SEI Advantage: SEI tracks coding activity, PR contributions, and review cycles to measure how long it takes new hires to become productive.

🎯 How to Communicate It: “New engineers ramp up to full productivity in 6 weeks on average, down from 8 weeks last year.”

How SEI Helps Engineering Leaders Communicate Impact

Harness SEI provides efficiency, productivity and alignment dashboards that make engineering metrics clear, visual, and actionable for executives.

1. Executive-Friendly Reports

SEI’s DORA, Sprint Insights, and Business Alignment Dashboards provide high-level summaries while allowing leaders to drill into details when needed.

2. Proactive Risk & Opportunity Insights

Rather than waiting for executives to ask, SEI highlights risks upfront (e.g., increasing cycle time, declining deployment frequency) and identifies bottlenecks.

3. Data Storytelling

Numbers alone don’t drive action; framing metrics as stories does. SEI allows engineering leaders to present data in a way that connects to business goals and influences decisions.

Best Practices for Cross-Functional Communication

- Set a Regular Reporting Cadence: Weekly syncs for tactical updates, quarterly reports for strategy alignment.

- Use Data Storytelling: Turn raw data into compelling narratives that explain what happened, why it matters, and what actions to take.

- Be Transparent About Risks: Use SEI insights to identify bottlenecks and propose solutions before they become executive concerns.

Conclusion: Elevate Engineering’s Role with SEI

Engineering is no longer just about writing code—it’s about driving business value. By using Harness SEI to track and communicate on-time delivery, engineering capacity, deployment frequency, and business alignment, engineering leaders can:

✅ Influence executive decisions by aligning engineering work with company priorities.

✅ Improve collaboration across teams by providing visibility into engineering efforts.

✅ Proactively drive impact instead of reacting to business requests.

Ready to communicate engineering’s impact more effectively? Start leveraging SEI today to gain visibility, efficiency, and alignment across your organization.

👉 Learn more about Harness SEI here.

8 Essential Questions to Boost Developer Productivity

Developer productivity has become a critical factor in today's fast-paced software development world. Organizations constantly seek methods to enhance productivity, improve engineering efficiency, and align their development teams with strategic business goals. But navigating the complexities of developer productivity isn't always straightforward.

In this blog, we’ll hear from Adeeb Valiulla, Director of Engineering Excellence at Harness, as we answer some of the most pressing questions on developer productivity to help you optimize your teams and processes effectively.

1. What is Developer Productivity?

Developer productivity refers to the efficiency and effectiveness with which software developers deliver high-quality software solutions. It encompasses the speed and quality of coding, reliability of deployments, the ability to quickly recover from failures, and alignment of development efforts with strategic business goals. High developer productivity means achieving more impactful outcomes with fewer resources, enabling organizations to stay competitive and agile in rapidly evolving markets.

2. Why Is Developer Productivity Important?

Developer productivity directly impacts an organization's ability to deliver software quickly, reliably, and with high quality. High productivity enhances agility, reduces costs, accelerates feature delivery, and ultimately drives customer satisfaction and competitive advantage. Improving productivity not only benefits the business but also increases developer satisfaction by removing bottlenecks and empowering teams.

Real-World Example from __LINK_1__

“In the hardware technology industry, a well-known global hardware company implemented an engineering metrics program under Harness’s and my guidance, which significantly boosted developer productivity. Their PR cycle time improved dramatically from nearly 3 days to under an hour, greatly enhancing delivery speed and agility.”

3. Can Software Developer Productivity Really Be Measured?

Yes, software developer productivity can be effectively measured. While measuring productivity isn't always simple due to the complexity of software development, several key metrics have emerged as valuable indicators:

- Lead Time for Changes: How quickly code moves from commit to production.

- Deployment Frequency: How often code is deployed to production.

- Mean Time to Restore (MTTR): How quickly service is restored after an incident.

- Change Failure Rate: The percentage of deployments that cause a failure in production, a measure of deployment stability and reliability.

- Commit to Done Ratio: The share of planned (committed) work that is successfully completed.

These metrics, when applied carefully and contextually, provide actionable insights into developer productivity.
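The following minimal sketch shows how the four DORA metrics above can be computed from raw deployment records. The `Deployment` record and `dora_summary` function are hypothetical, and this is not how SEI calculates them. To keep the sketch short, it measures restore time from the failing deployment rather than from incident detection, and it reports the median lead time. Commit to Done Ratio needs sprint data instead, so it is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool                              # did it cause a production failure?
    restored_time: Optional[datetime] = None  # when service recovered, if it failed


def dora_summary(deploys, window_days):
    """Compute simplified DORA-style metrics for deployments in one window."""
    if not deploys or window_days <= 0:
        raise ValueError("need deployments and a positive window")
    lead_times = [d.deploy_time - d.commit_time for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Simplification: restore time is measured from the failing deployment,
    # not from when the incident was detected.
    restore_times = [d.restored_time - d.deploy_time
                     for d in failures if d.restored_time is not None]
    return {
        "deployment_frequency_per_day": len(deploys) / window_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr": (sum(restore_times, timedelta()) / len(restore_times)
                 if restore_times else None),
    }
```

The median is used for lead time because a few long-lived branches can skew the mean. Whether to use the mean or the median is a judgment call, and it is worth stating explicitly when reporting.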

Real-World Example from __LINK_2__

“In the Gaming Industry, Harness’ holistic approach to productivity, which emphasizes consistent developer engagement and effective scope management, enabled a gaming company to manage scope creep and improve their weekly coding days significantly. This strengthened their development workflow and productivity.”

4. Can Generative AI (GenAI) Improve Developer Productivity?

Generative AI certainly has the potential to improve developer productivity, but the jury is still out on whether it delivers significant net improvements. GenAI clearly helps developers write code faster by automating repetitive coding tasks, enhancing code reviews, predicting potential errors, and accelerating problem-solving. The vision is that AI-powered tools will help developers write cleaner, more reliable code faster, freeing them to focus on strategic, high-value tasks.

However, the time saved by using GenAI is not guaranteed to translate into a net productivity gain, because GenAI also brings new challenges: learning to prompt effectively, time spent understanding and fixing the code it produces, and potential system and software delivery lifecycle (SDLC) bottlenecks as a larger volume of new code must be handled, tested, and deployed.

Tools such as Harness Software Engineering Insights (SEI) and AI Productivity Insights (AIPI) can help measure how, where, and with whom AI is having an impact (both positive and potentially negative), so that you can increase the likelihood that GenAI will improve your developer productivity.

Additionally, most GenAI developer tooling has focused on AI coding assistants. However, coding accounts for only 30-40% of the work needed to deliver software updates and enhancements; the rest happens in the pipeline and the other SDLC stages mentioned above. That leaves 60-70% of the overall process that GenAI is not yet helping with. The Harness AI-Native Software Delivery Platform provides many AI agents that help automate about 40% of this non-coding portion of the SDLC.

5. How Do You Measure Developer Productivity?

Measuring developer productivity involves:

- Defining clear and relevant metrics (like DORA metrics).

- Utilizing productivity platforms, such as Harness Software Engineering Insights (SEI), that integrate with your SDLC toolset so you can configure and analyze these metrics.

- Regularly reviewing dashboards for insights and making data-driven adjustments to improve processes and workflows.

When measuring developer productivity, focus on outcome-based metrics rather than activity counts. DORA metrics (deployment frequency, lead time, change failure rate, and recovery time) provide valuable insights into team performance and delivery efficiency. Complement these with contextual data like PR cycle times, coding days per week, and the ratio of building versus waiting time.

Harness SEI implements dashboards that visualize these metrics by role, enabling managers to identify bottlenecks, engineers to track personal progress, and executives to monitor overall delivery health. To learn more, read our blog on Persona-Based Metrics.

Remember that measurement should drive improvement, not punishment—create a psychologically safe environment where data informs positive change rather than triggering defensive behavior.

6. How Do You Improve Developer Productivity?

Improving developer productivity requires a multi-faceted approach that addresses both technical and organizational constraints. Start by eliminating common friction points: reduce build times through better CI/CD pipelines, implement robust code review processes that prevent bottlenecks, and adopt standardized development environments that minimize "it works on my machine" issues. Investment in developer tooling often yields outsized returns.

Improving developer productivity requires:

- Process Optimization: Streamline workflows and eliminate unnecessary steps.

- Tooling Enhancements: Adopt modern tools and automate repetitive tasks.

- Culture and Collaboration: Foster collaborative practices, knowledge sharing, and peer review.

- Focus on Developer Experience: Reduce cognitive load and distractions, enabling deeper, uninterrupted work.

Creating focused work environments is equally crucial. Research shows that developers need uninterrupted blocks of at least 2-3 hours to reach flow state—the mental zone where complex problem-solving happens most efficiently. Consider implementing "no-meeting days" or core collaboration hours to protect deep work time. Google's approach of 20% innovation time and Atlassian's "ShipIt Days" demonstrate how structured creative periods can boost both productivity and engagement.
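One way to check whether a calendar actually leaves room for deep work is to list the meeting-free gaps that meet a minimum length. The minimal sketch below uses a 2-hour minimum, which is the low end of the range quoted above. The `focus_blocks` function and the sample meetings are hypothetical.

```python
from datetime import datetime, timedelta


def focus_blocks(day_start, day_end, meetings, min_block=timedelta(hours=2)):
    """Return the meeting-free gaps in a workday that last at least `min_block`.

    `meetings` is a list of (start, end) datetimes; overlapping or unsorted
    meetings are handled by sorting and tracking the latest end time seen.
    """
    blocks = []
    cursor = day_start
    for start, end in sorted(meetings):
        if start - cursor >= min_block:
            blocks.append((cursor, start))
        cursor = max(cursor, end)
    if day_end - cursor >= min_block:
        blocks.append((cursor, day_end))
    return blocks


if __name__ == "__main__":
    day = datetime(2024, 1, 8)
    meetings = [
        (day.replace(hour=10), day.replace(hour=10, minute=30)),
        (day.replace(hour=11), day.replace(hour=12)),
        (day.replace(hour=15, minute=30), day.replace(hour=16)),
    ]
    for start, end in focus_blocks(day.replace(hour=9), day.replace(hour=17), meetings):
        print(f"{start:%H:%M}-{end:%H:%M}")
```

For this sample 9:00 to 17:00 day, the only qualifying block is 12:00 to 15:30. Three short meetings are enough to fragment the morning and late afternoon.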

Finally, regularly audit and reduce technical debt; Etsy's practice of dedicating 20% of engineering resources to infrastructure improvements ensures their codebase remains maintainable as it grows. The most productive engineering cultures view developer experience as a product itself—one that requires continuous investment and refinement.

Real-World Example from __LINK_8__

“In the cybersecurity sector, teams following Harness’ Engineering Metrics Program consistently averaged over 4.5 coding days per week, demonstrating high developer engagement and productivity.”

7. How Do You Measure Developer Productivity in Agile?

In Agile environments, a deeper analysis of key metrics provides valuable insights into developer productivity:

Sprint Velocity

Sprint Velocity serves as more than just a workload counter—it's a team's productivity fingerprint. High-performing teams focus less on increasing raw velocity and more on velocity stability, which indicates predictable delivery. By tracking velocity variance across sprints (aiming for less than 20% fluctuation), teams can identify external factors disrupting productivity. Leading organizations complement this with complexity-adjusted velocity, weighting story points based on technical challenge to reveal where teams excel or struggle with certain types of work.
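The 20% fluctuation target can be checked with the coefficient of variation (standard deviation divided by mean) across recent sprints. Treating "fluctuation" as the coefficient of variation is an assumption on our part; sprint-over-sprint percentage change is another reasonable reading. The function name is hypothetical.

```python
from statistics import mean, pstdev


def velocity_stability(velocities, threshold=0.20):
    """Return (coefficient_of_variation, is_stable) for a series of sprint velocities.

    Uses the population standard deviation of the sprints supplied.
    """
    if len(velocities) < 2:
        raise ValueError("need at least two sprints")
    avg = mean(velocities)
    if avg == 0:
        raise ValueError("mean velocity is zero")
    cv = pstdev(velocities) / avg
    return cv, cv < threshold


if __name__ == "__main__":
    print(velocity_stability([30, 32, 28, 30]))  # steady team
    print(velocity_stability([20, 40, 20, 40]))  # same average, erratic delivery
```

Both sample teams average 30 points per sprint. Only the first stays under the 20% threshold, which is the distinction that raw velocity hides.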

Sprint Burndown Charts

Sprint Burndown Charts reveal productivity patterns beyond simple progress tracking. Teams should analyze the chart's shape—a consistently flat line followed by steep drops indicates batched work and potential bottlenecks, while a jagged but steady decline suggests healthier continuous delivery. Advanced teams overlay their burndown with blocker indicators, clearly marking when and why progress stalled, creating accountability for removing impediments quickly.
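The "flat line, then steep drops" shape can be quantified rather than eyeballed. This minimal sketch takes the remaining work at the end of each sprint day. It reports the longest run of days with no progress and the share of completed work that was burned in the final third of the sprint. Both heuristics and the function name are our own illustration, not a standard definition. Days on which scope was added count as zero burn.

```python
def burndown_profile(remaining):
    """Summarize a sprint burndown given remaining work at the end of each day.

    Returns (longest_flat_streak, late_burn_share): the longest run of
    consecutive days with no progress, and the fraction of all completed work
    that was burned in the final third of the sprint.
    """
    if len(remaining) < 2:
        raise ValueError("need at least two data points")
    # Work burned each day; scope increases are clamped to zero burn.
    burned = [max(prev - cur, 0) for prev, cur in zip(remaining, remaining[1:])]
    longest = streak = 0
    for b in burned:
        streak = streak + 1 if b == 0 else 0
        longest = max(longest, streak)
    total = sum(burned)
    cutoff = len(burned) - len(burned) // 3
    late_share = sum(burned[cutoff:]) / total if total else 0.0
    return longest, late_share


if __name__ == "__main__":
    print(burndown_profile([40, 40, 40, 40, 40, 40, 40, 30, 10, 0]))  # batched
    print(burndown_profile([40, 36, 31, 27, 22, 18, 13, 9, 4, 0]))    # steady
```

The batched sprint shows six flat days and burns all of its work in the final third. The steady sprint has no flat days and burns about a third of its work in that period.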

Commit to Done Ratio

Commit to Done Ratio offers insights into planning accuracy and execution capability. The most productive teams maintain ratios above 80% while avoiding artificial padding of estimates. By categorizing incomplete work (technical obstacles, scope changes, or estimation errors), teams can systematically address root causes rather than symptoms. Some organizations track this metric over multiple sprints to identify trends and measure the effectiveness of process improvements.
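A minimal sketch of the ratio plus the categorization of incomplete work described above is shown below. It assumes that each committed item records whether it was finished and, if not, a reason label. The labels and the function name are hypothetical. It counts items. Weighting by story points is a common alternative.

```python
from collections import Counter


def commit_to_done(items):
    """Return (ratio, reasons) for one sprint.

    `items` is a list of dicts with a "done" flag and, for unfinished items,
    an optional "reason" such as "technical", "scope_change", or "estimation".
    """
    if not items:
        raise ValueError("no committed items")
    done = sum(1 for item in items if item["done"])
    reasons = Counter(item.get("reason", "unspecified")
                      for item in items if not item["done"])
    return done / len(items), reasons


if __name__ == "__main__":
    sprint = [{"done": True}] * 8 + [
        {"done": False, "reason": "scope_change"},
        {"done": False, "reason": "estimation"},
    ]
    ratio, reasons = commit_to_done(sprint)
    print(f"{ratio:.0%}", dict(reasons))
```

Tracking the `reasons` counter across several sprints shows whether misses come mostly from estimation, scope changes, or technical obstacles. Each of these calls for a different fix.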

PR Cycle Time

PR Cycle Time deserves granular analysis, as code review often becomes a hidden productivity drain. Break this metric into component parts—time to first review, rounds of feedback, and time to final merge—to pinpoint specific improvement areas. Top-performing teams establish service-level objectives for each stage (e.g., initial reviews within 4 hours), supported by automated notifications and team norms. This detailed approach turns PR management from a black box into a well-optimized workflow with predictable throughput.
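The stage breakdown above can be sketched as a small function over PR timestamps. The `PullRequest` record is hypothetical; in practice these timestamps would come from your source control system. The 4-hour first-review SLO is the example value from the paragraph.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PullRequest:
    opened: datetime
    first_review: datetime
    merged: datetime
    review_rounds: int  # number of feedback / re-review cycles before merge


def pr_stage_breakdown(pr, first_review_slo=timedelta(hours=4)):
    """Split one PR's cycle time into stages and check the first-review SLO."""
    time_to_first_review = pr.first_review - pr.opened
    return {
        "time_to_first_review": time_to_first_review,
        "review_rounds": pr.review_rounds,
        "first_review_to_merge": pr.merged - pr.first_review,
        "total_cycle_time": pr.merged - pr.opened,
        "first_review_slo_met": time_to_first_review <= first_review_slo,
    }


if __name__ == "__main__":
    opened = datetime(2024, 3, 4, 9, 0)
    pr = PullRequest(opened, opened + timedelta(hours=6),
                     opened + timedelta(hours=25), review_rounds=2)
    for stage, value in pr_stage_breakdown(pr).items():
        print(stage, value)
```

In this sample, the PR misses the first-review SLO by two hours, but most of its 25-hour cycle time occurs after the first review. That points to feedback rounds, not reviewer availability, as the larger drain.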

8. How Do You Track Developer Productivity Using Harness SEI?

Harness SEI provides robust tracking of developer productivity by:

- Integrating with your Issue Management, Source Code Management, CI and CD tools: Providing seamless data integration and comprehensive tracking.

- Creating visibility into your efficiency: Leveraging DORA and Sprint Insights to measure and enhance engineering efficiency.

- Providing productivity insights: Utilizing Dev Insights and Personalized Development Metrics to pinpoint productivity opportunities.

- Ensuring alignment: Offering Business Alignment metrics to ensure your team’s efforts are strategically aligned.

- Actionable Insights: Identifying bottlenecks, enabling proactive adjustments, and delivering targeted recommendations for continuous improvement.

- Implementing an Engineering Metrics Program: Creating a structured approach to consistently measure and optimize engineering performance.

- Creating Persona-Based Metrics: Customizing insights and dashboards tailored specifically to different roles for maximum impact.

Harness SEI empowers teams to enhance productivity by clearly visualizing critical productivity metrics.

Harness' View On What Improves Developer Productivity

Adeeb emphasizes:

Improving developer productivity requires a holistic and human-centric approach. It's not merely about tools and metrics but fundamentally about creating an environment where developers can consistently deliver high-quality output without unnecessary friction.

According to Adeeb, the key factors include:

- Empowering Autonomy and Ownership: Developers must feel a sense of ownership over their work, which empowers them to make decisions and contribute creatively.

- Fostering Psychological Safety: Creating a culture where team members can openly communicate, experiment, and learn from failures without fear.

- Balancing KTLO and Innovation: Effectively balancing "Keep the Lights On" (KTLO) tasks with new feature development to ensure continuous innovation and technical stability.

- Effective Tooling and Automation: Providing the right tools that automate mundane tasks, reducing cognitive load, and allowing developers to focus on critical, high-value tasks.

- Continuous Learning and Growth: Encouraging ongoing professional development and learning opportunities to maintain engagement and adapt to technological advancements.

- Clear Alignment and Communication: Ensuring clear communication of business goals and alignment between development efforts and strategic business outcomes.

Harness' approach advocates for an integrated strategy that aligns technology, processes, and culture, emphasizing developer well-being as central to sustainable productivity improvements.

Boost Your Team's Productivity Today

Harnessing the right insights and strategies can transform your software development processes, driving efficiency, innovation, and growth. Ready to elevate your developer productivity to the next level? Discover the power of Harness Software Engineering Insights (SEI) and start achieving measurable improvements today.


Learn more: The causes of developer downtime and how to address them

Top 7 Cloud Cost Reporting Strategies Every FinOps Team Should Know

Cloud cost management is crucial for organizations seeking to optimize their cloud spending while achieving maximum return on investment. With the rapid growth of cloud services, managing costs has become increasingly complex, and data teams often struggle to track and analyze spending effectively. This complexity makes it essential for organizations to implement effective cost reporting processes that can provide visibility into cloud expenses and enable informed decision-making.

Importance of Cloud Cost Reporting

Cloud cost reporting is critical for tracking, analyzing, and controlling cloud expenditures to ensure that the investment in cloud services aligns with business goals. Here’s why cloud cost reporting is essential and how it supports better decision-making, cost control, and overall financial management.

- Enhancing Financial Visibility

Cloud cost reporting gives businesses a clear view of where and how they are spending their resources across various cloud services. Without a detailed report, costs can quickly spiral out of control, especially in complex, multi-cloud environments.

- Improving Budgeting and Forecasting

A well-documented report on cloud expenses enables accurate budgeting and forecasting. Data teams, for example, can predict future cloud spending based on past usage trends and seasonal demand variations. Budgeting becomes more strategic when teams can see not only the current spending but also how those costs might change based on usage patterns.
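The simplest form of trend-based forecasting fits a straight line to past monthly spend and extends it forward. The sketch below uses ordinary least squares. It captures the trend but deliberately ignores the seasonal variation mentioned above, which requires more history and a seasonal model. The function name and figures are illustrative.

```python
def linear_trend_forecast(monthly_costs, months_ahead=1):
    """Forecast spend by fitting a least-squares straight line to past months.

    Month indices 0..n-1 are the x values; returns forecasts for the next
    `months_ahead` months. Captures trend only, not seasonality.
    """
    n = len(monthly_costs)
    if n < 2:
        raise ValueError("need at least two months of history")
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(monthly_costs) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, monthly_costs))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return [intercept + slope * (n - 1 + k) for k in range(1, months_ahead + 1)]


if __name__ == "__main__":
    # Hypothetical monthly spend in thousands of dollars.
    print(linear_trend_forecast([100, 110, 120, 130], months_ahead=2))
```

For spend that grows by a steady 10 per month, the fit extends the line to 140 and 150. Real cost histories are noisier, so the forecast is best presented alongside its past error.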

- Supporting Cost Optimization Efforts

Cloud cost reports often highlight areas where resources are underutilized or unnecessarily duplicated. By reviewing these reports, organizations can identify optimization opportunities, such as rightsizing resources, terminating idle instances, or shifting workloads to more cost-effective options.
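A first pass at finding idle or underutilized instances can be as simple as bucketing them by average CPU utilization. The sketch below assumes that you already have per-instance utilization and cost data. The 2% and 20% thresholds are illustrative assumptions, not recommendations. CPU alone is also a simplification, because memory-bound or network-bound workloads can look idle on CPU.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    avg_cpu_percent: float  # average CPU utilization over the lookback window
    monthly_cost: float


def classify_instances(instances, idle_below=2.0, underutilized_below=20.0):
    """Bucket instances by average CPU and total the monthly cost of idle ones.

    Returns (report, idle_monthly_cost), where report maps "idle",
    "underutilized", and "ok" to lists of instance names.
    """
    report = {"idle": [], "underutilized": [], "ok": []}
    idle_cost = 0.0
    for inst in instances:
        if inst.avg_cpu_percent < idle_below:
            report["idle"].append(inst.name)
            idle_cost += inst.monthly_cost
        elif inst.avg_cpu_percent < underutilized_below:
            report["underutilized"].append(inst.name)
        else:
            report["ok"].append(inst.name)
    return report, idle_cost
```

The idle total represents an upper bound on savings from termination. Underutilized instances are candidates for rightsizing instead.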

- Enabling Accountability Across Teams

A breakdown of cloud expenses across departments and projects can promote accountability. With cost allocation tags and perspectives, teams gain ownership of their cloud spending, leading to more mindful consumption of resources. When departments have transparency in their spending, they are more likely to take proactive measures to stay within budget.

- Facilitating Decision-Making and Strategic Planning

Detailed cloud cost reporting helps stakeholders make data-driven decisions on resource allocation, tool investment, and scaling strategies. When cloud costs are accurately reported, organizations can align their technology spend with business goals and adjust their cloud strategy based on financial performance and expected returns.

Best Practices for Cloud Cost Reporting

- Define Clear Objectives and KPIs

Define specific goals for cloud cost reporting, like reducing spend or improving transparency, and set measurable Key Performance Indicators (KPIs) that align with these objectives.

- Use Cost Allocation and Tagging Standards

Establish and enforce a consistent tagging strategy to label cloud resources by meaningful attributes (e.g., project, department, environment) to improve cost tracking. Consistent tagging allows for accurate cost allocation, accountability, and budgeting across teams, while grouping resources using Cost Categories further enhances reporting precision by organizing expenses according to logical divisions like business units or departments.
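The following minimal sketch shows how tag-based allocation and a cost-category style grouping work on raw billing data. The line items, the `team` and `env` tag keys, and the `BUSINESS_UNIT` mapping are all hypothetical. Real billing exports carry many more fields. Untagged spend gets its own bucket so that gaps in the tagging strategy stay visible in reports instead of disappearing.

```python
from collections import defaultdict

# Hypothetical billing line items; real exports contain many more fields.
line_items = [
    {"resource": "i-web-1", "cost": 120.0, "tags": {"team": "checkout", "env": "prod"}},
    {"resource": "i-web-2", "cost": 80.0, "tags": {"team": "checkout", "env": "dev"}},
    {"resource": "db-main", "cost": 300.0, "tags": {"team": "payments", "env": "prod"}},
    {"resource": "vol-orphan", "cost": 25.0, "tags": {}},
]

# Hypothetical cost category: maps team tags to business units.
BUSINESS_UNIT = {"checkout": "Retail", "payments": "Finance"}


def allocate(items: list[dict], tag_key: str) -> dict[str, float]:
    """Sum cost per value of `tag_key`; resources missing the tag go to 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for item in items:
        totals[item["tags"].get(tag_key, "untagged")] += item["cost"]
    return dict(totals)


def by_business_unit(items: list[dict]) -> dict[str, float]:
    """Roll team-level costs up into business units; unknown teams are 'Unallocated'."""
    totals: dict[str, float] = defaultdict(float)
    for item in items:
        team = item["tags"].get("team")
        totals[BUSINESS_UNIT.get(team, "Unallocated")] += item["cost"]
    return dict(totals)


print(allocate(line_items, "team"))
# {'checkout': 200.0, 'payments': 300.0, 'untagged': 25.0}
print(by_business_unit(line_items))
# {'Retail': 200.0, 'Finance': 300.0, 'Unallocated': 25.0}
```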

- Implement Role-Based Perspectives for Cost Visibility

Customize cloud cost reports for different roles by configuring perspectives that present relevant information to finance, engineering, and executive teams. Perspectives allow you to break down costs by team, project, or service, giving stakeholders a clear view of their impact on cloud spend.

- Regularly Review and Optimize Reports

Analyze cloud cost trends to identify savings opportunities and ensure reports remain accurate and relevant over time. This includes periodically reviewing data sources and tagging practices, adjusting reporting metrics to reflect evolving business needs, and validating that each report’s data is current. Routine review of reports supports informed decision-making and sustained cost optimization.

- Maintain Real-Time Cost Visibility Through Dashboards

Use custom dashboards to monitor key metrics and cloud cost trends in real time, helping teams stay informed and make timely adjustments. Role-specific dashboards reduce information overload by presenting only relevant data to each team, while visualizing recurring patterns, such as weekly cost spikes, reveals cyclical and seasonal spending trends.

- Use Anomaly Detection to Prevent Overspending

Establish a baseline cost expectation for each department or service to help detect anomalies, using tools like Harness CCM that compare historical spending with current costs to flag unusual expenses. When anomalies occur, investigate root causes promptly and take corrective actions. Anomaly detection minimizes overspending by identifying issues like waste or misconfigurations before they escalate.
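The baseline idea can be sketched with a simple trailing-window check. This is a plain z-score style rule chosen for illustration, not the model Harness CCM uses. The window length, the threshold, and the 5% floor on the standard deviation are hypothetical tuning choices. The floor keeps a perfectly flat baseline from flagging every tiny fluctuation.

```python
from statistics import mean, stdev


def find_anomalies(
    daily_costs: list[float], window: int = 7, threshold: float = 3.0
) -> list[tuple[int, float]]:
    """Flag days whose cost exceeds the trailing-window baseline.

    A day is anomalous if its cost is more than `threshold` standard deviations
    above the mean of the previous `window` days. Parameters are hypothetical.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    anomalies = []
    for i in range(window, len(daily_costs)):
        baseline = daily_costs[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        # Floor sigma at 5% of the mean so a flat baseline is not over-sensitive.
        limit = mu + threshold * max(sigma, 0.05 * mu)
        if daily_costs[i] > limit:
            anomalies.append((i, daily_costs[i]))
    return anomalies


# Hypothetical daily spend with one spike on day 8.
daily = [100, 102, 98, 101, 99, 100, 103, 100, 250, 101]
print(find_anomalies(daily))  # [(8, 250)]
```

In this sketch a flagged spike stays in the baseline for the following days, which temporarily widens the threshold. A production system would usually exclude confirmed anomalies from the baseline.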

- Monitor Resource Utilization and Rightsize as Needed

Regularly assess resource utilization to identify idle or underused assets that could be decommissioned or downsized, and apply rightsizing based on current workload demands to avoid unnecessary costs. For workloads that aren’t time-sensitive, consider scheduling usage during off-peak hours to capitalize on lower-cost periods. Proactive monitoring and rightsizing can significantly reduce wasted cloud spend.
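Utilization-based rightsizing can be expressed as a small rule set. The following minimal sketch classifies instances by average CPU utilization over a review period. The size ladder, the 5% idle threshold, and the 40% underuse threshold are hypothetical assumptions. Real recommendation engines also weigh memory, network, and peak usage.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical size ladder, smallest to largest.
SIZES = ["small", "medium", "large", "xlarge"]
IDLE_CPU = 5.0        # % average CPU below which a resource is treated as idle (assumed)
UNDERUSED_CPU = 40.0  # % average CPU below which one size smaller is suggested (assumed)


@dataclass
class Instance:
    name: str
    size: str
    cpu_samples: list[float]  # CPU utilization percentages over the review period


def recommend(inst: Instance) -> str:
    """Return a rightsizing action based on average CPU utilization."""
    avg = mean(inst.cpu_samples)
    if avg < IDLE_CPU:
        return "decommission"
    idx = SIZES.index(inst.size)
    if avg < UNDERUSED_CPU and idx > 0:
        return f"downsize to {SIZES[idx - 1]}"
    return "keep"


fleet = [
    Instance("batch-1", "large", [2.0, 1.5, 3.0]),
    Instance("api-1", "xlarge", [20.0, 25.0, 30.0]),
    Instance("db-1", "large", [70.0, 85.0, 60.0]),
]
for inst in fleet:
    print(inst.name, recommend(inst))
```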

Harness Cloud Cost Management (CCM) for Effective Cloud Cost Reporting

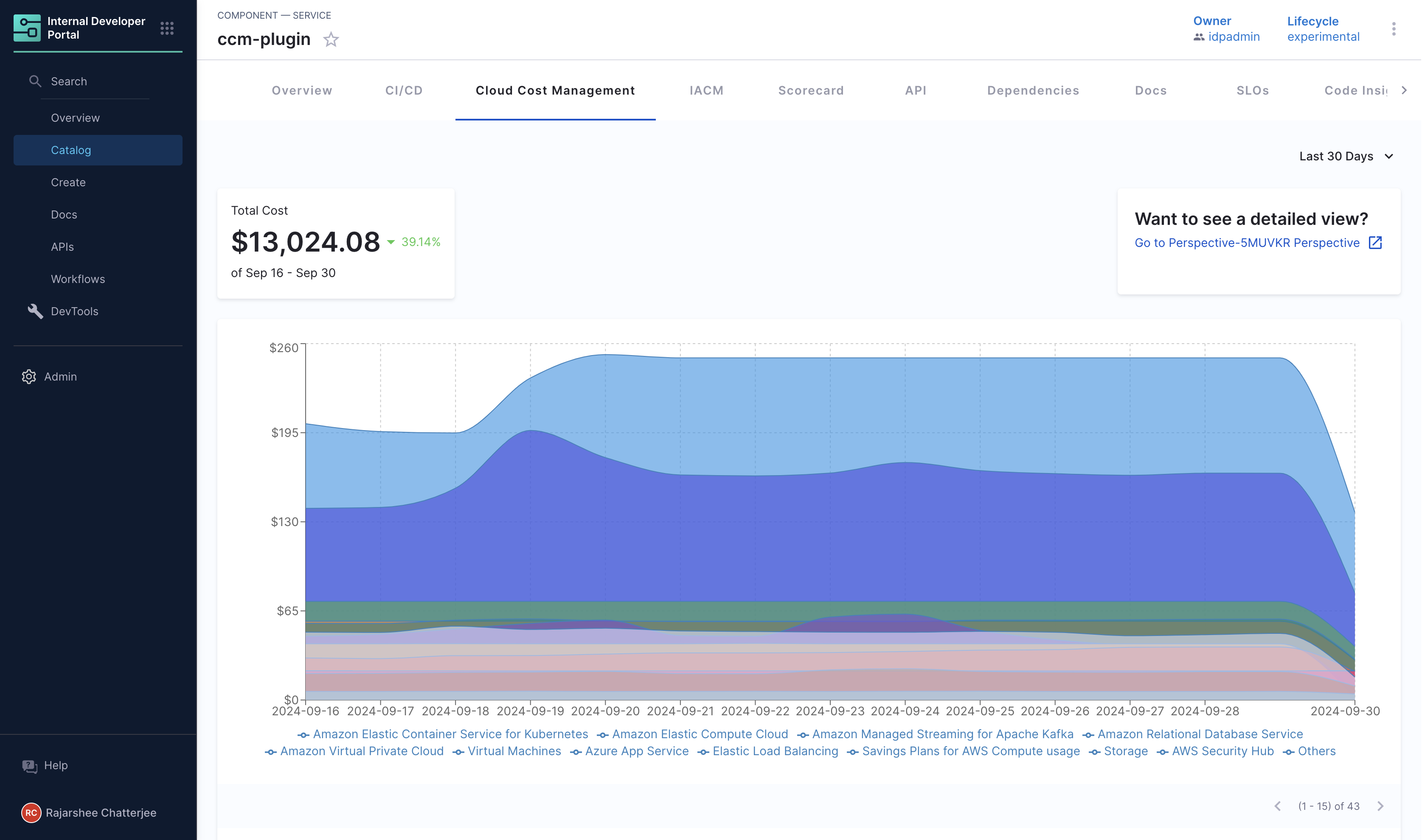

Harness Cloud Cost Management (CCM) offers comprehensive reporting tools designed to help businesses gain visibility and control over their cloud expenses. Several CCM components contribute to its reporting capabilities, making it easier to track, analyze, and optimize cloud costs across various platforms.

The anomaly detection feature in CCM helps organizations proactively monitor and manage cloud expenses by identifying instances of abnormally high costs.

Perspectives allow users to organize cloud resources in ways that align with specific business needs, such as by department, project, or region.

CCM's dashboards provide an interactive platform for visualizing and analyzing cloud cost data. Users can create custom dashboards to monitor various metrics relevant to their business, aiding in data-driven decision-making.

The Cost Categories feature in CCM enables users to organize and allocate costs effectively. By grouping expenses by business units, projects, or departments, users can gain a detailed view of where money is being spent. This feature is ideal for organizations that need to allocate cloud costs accurately across various internal groups or external clients.

Learn more about Cloud Cost Management by Harness, or book a demo today.

Understanding cloud cost automation and its key benefits

What is Cloud Cost Automation?

Cloud cost automation refers to the use of automated tools and processes to manage and optimize cloud spending. It involves implementing technologies that automatically analyze billing data, track resource utilization, and manage cloud resources in real time. By automating tasks such as resource provisioning, scaling, and monitoring, organizations can efficiently control their cloud costs without manual intervention.

Cloud cost optimization can be achieved using cloud cost management tools. These tools track and categorize all cloud-related expenses, attributing them to the respective teams responsible for their consumption. This promotes accountability, encouraging teams to use resources judiciously while discouraging wasteful practices.

Ultimately, by implementing effective cloud cost management strategies and leveraging appropriate tools, organizations can achieve greater financial efficiency and align their cloud spending with business objectives and key results (OKRs). This proactive approach not only safeguards profit margins but also positions organizations for sustainable growth in a dynamic cloud landscape.

The Benefits of Using Cloud Cost Automation Tools for Cloud Cost Management

Utilizing external tools for cloud cost management brings a range of significant advantages that enhance financial efficiency and strategic alignment for organizations leveraging cloud services. Here are some of the key benefits:

- Accurate Forecasting and Budgeting: By analyzing historical data and usage patterns, these tools can predict future costs and help avoid unexpected surges in spending that could disrupt cash flow.

- Empowering Engineering Teams: With access to detailed cost analytics, engineering teams can see the financial impact of their work. This visibility encourages accountability and informed decision-making.

- Insight into Profitability: External tools allow organizations to identify their least profitable technologies, customers, and projects within the cloud. This insight supports data-driven decisions about where to invest and where to cut back.

- Optimizing Resource Utilization: Cloud cost management tools help organizations assess the effectiveness of load balancing, autoscaling, capacity reservation, and volume discounts. This analysis allows companies to make informed decisions about which services to use for specific use cases.

What to Consider When Choosing a Cloud Cost Management Tool

Selecting the right cloud cost management tool is essential for optimizing your cloud spending and ensuring operational efficiency. Here are the key factors to consider:

- Cost Visibility: A robust cloud cost management tool should provide clear and transparent visibility into your cloud costs. It should break down expenses by service, team, project, or other relevant metrics, allowing you to pinpoint where your money is being spent.

- Cost Optimization Recommendations: Look for a tool that not only tracks costs but also provides actionable recommendations for optimizing spending. This may include insights on rightsizing resources (adjusting resource allocations based on usage patterns), utilizing Reserved Instances, or identifying idle resources that can be decommissioned or downsized. Such recommendations can significantly improve your cost efficiency.

- Real-Time Monitoring and Alerts: Real-time monitoring capabilities allow you to track your cloud spending as it occurs. Choose a tool that offers alerts to notify you of unexpected cost spikes or anomalies. This enables you to take immediate corrective action, ensuring you stay within budget and avoid unnecessary overspending.

- Integration with Multiple Cloud Providers: Your cloud cost management tool should seamlessly integrate with all the cloud providers your organization uses, whether it’s AWS, Microsoft Azure, Google Cloud, or others. This integration ensures a unified view of costs across different cloud services, simplifying management and reporting processes.

- Budgeting and Forecasting: Effective cloud cost management involves setting budgets and forecasting future spending. The tool should facilitate budget creation, allowing you to track projected versus actual costs. This capability helps you manage financial resources more effectively and make informed decisions based on accurate projections.

- Scalability: Ensure the cloud cost management tool can scale with your organization. It should handle increased cloud usage as your business grows and be able to support additional cloud providers. A scalable tool will provide long-term value and adaptability as your cloud strategy evolves.
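Tracking projected versus actual spend against budget thresholds can be sketched with a simple month-to-date run rate. This is a minimal illustration only. It assumes linear spending across the month and counts the current day as elapsed. The function name `budget_status` and the 50/80/100% thresholds are hypothetical. The sketch reports actual crossings first and projected crossings otherwise, so teams get warned before a limit is actually reached.

```python
import calendar
from datetime import date


def budget_status(
    budget: float,
    spent_to_date: float,
    today: date,
    thresholds: tuple[float, ...] = (0.5, 0.8, 1.0),
) -> tuple[float, list[str]]:
    """Project month-end spend from the run rate and list threshold alerts.

    Assumes spend accrues linearly and that `today.day` days have elapsed.
    """
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    projected = spent_to_date / today.day * days_in_month
    alerts = []
    for t in thresholds:
        if spent_to_date >= t * budget:
            alerts.append(f"actual spend crossed {t:.0%} of budget")
        elif projected >= t * budget:
            alerts.append(f"projected spend will cross {t:.0%} of budget")
    return projected, alerts


# Hypothetical: $6,000 spent by June 15 against a $10,000 monthly budget.
projected, alerts = budget_status(10_000, 6_000, date(2024, 6, 15))
print(projected)  # 12000.0
for alert in alerts:
    print(alert)
```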

The Best Cloud Cost Management Tools

- Harness CCM

Harness Cloud Cost Management is designed to empower engineers and DevOps teams with detailed, real-time visibility into cloud costs, and it provides comprehensive tools to optimize cloud spending. It allows you to group resources based on business needs for improved cost tracking and classify expenses through cost categories for better allocation. Features like anomaly detection automatically identify unexpected cost spikes, while customizable dashboards offer a clear visualization of spending.

The platform also provides recommendations to optimize resource usage and reduce costs, with AutoStopping preventing unnecessary expenses by shutting down idle resources. Additional tools like the Cluster Orchestrator for AWS EKS (beta) and Commitment Orchestrator help manage Kubernetes clusters and maximize savings from reserved instances. Asset governance ensures compliance with resource utilization rules, and budgets help track spending by setting thresholds and receiving alerts when limits are approached.

Each feature emphasizes CCM’s focus on making cloud cost management accessible and actionable for engineers and DevOps teams.

- AWS Cloud Financial Management Tools

AWS offers tools like consolidated billing, budgeting, and pricing optimization to help users control cloud costs. AWS Cost Explorer provides detailed cost analysis, while AWS Budgets lets users set spending limits with alerts. Cost Allocation Tags allow for transparent cost assignment to projects or departments. AWS Reserved Instances offer discounted rates in exchange for one- or three-year usage commitments, and Cost Anomaly Detection uses machine learning to flag unusual cost spikes for quick resolution.

- Azure Cost Management + Billing

Azure’s Cost Management + Billing helps businesses optimize cloud spend by providing visibility and accountability. Users can monitor expenses, set budgets, and receive cost optimization suggestions through the Azure portal. Key features include granular cost analysis, AI-driven anomaly detection, and reserved instance optimization for discounts. Azure’s integration with other tools ensures streamlined resource management.

- Google Cloud Cost Management

Google Cloud provides cost management tools through its Cloud Console, offering insights into spending via Cloud Billing Reports and Cost Tables. Users can set budgets, receive alerts, and get cost-saving recommendations. Detailed reports break down spending by projects or resources, while committed use discounts help make costs more predictable. Integration with other Google Cloud tools ensures effective cost control and resource management.

- Spot by NetApp

Spot by NetApp (formerly Spot.io) is a CloudOps platform that automates the deployment and operation of cloud infrastructure, focusing on cost efficiency and reliability. By leveraging machine learning, Spot continuously analyzes cloud resource usage to identify cost-saving opportunities and automatically scale workloads across various cloud providers. While Spot excels in automating cloud cost control, it does have some limitations, such as the absence of resource scheduling guidelines and real-time pricing details.

- Flexera Cloud Cost Management

Flexera Cloud Cost Management is ideal for teams seeking enhanced visibility across multi-cloud environments. It offers essential cost management features like cost analysis, reporting, and forecasting, while also supporting cost allocation by cost center and team. The platform provides automatic budget alerts and delivers insights into both private and public cloud environments, helping users track and optimize cloud spend effectively.

- Zesty

Zesty’s cloud cost management technology allows users to optimize costs by adjusting cloud usage in real time. Initially focused on storage optimization, Zesty has expanded to include commitment discount optimization through its Zesty Commitment Manager, available exclusively for AWS users. This feature automates the buying and selling of AWS Reserved Instances (RIs) based on real-time application needs. Additionally, Zesty Disk optimizes storage costs by dynamically resizing disk capacity to align with actual usage, ensuring efficient resource utilization.

- Turbonomic