Simplify Feature Flag Management with Harness FME and OpenFeature

Feature flags are table stakes for modern software development. They allow teams to ship features safely, test new functionality, and iterate quickly, all without re-deploying their applications. As teams grow and ship across multiple services, environments, and languages, consistently managing feature flags becomes a significant challenge.

Harness Feature Management & Experimentation (FME) continues its investment in OpenFeature, building on our early support and adoption of the CNCF standard for feature flagging since 2022. OpenFeature provides a single, vendor-agnostic API that allows developers to interact with multiple feature management providers while maintaining consistent flag behavior.

With OpenFeature, you can standardize flag behavior across services and applications, and integrate feature flags across multiple languages and SDKs, including Node.js, Python, Java, .NET, Android, iOS, Angular, React, and Web.

Why OpenFeature matters today

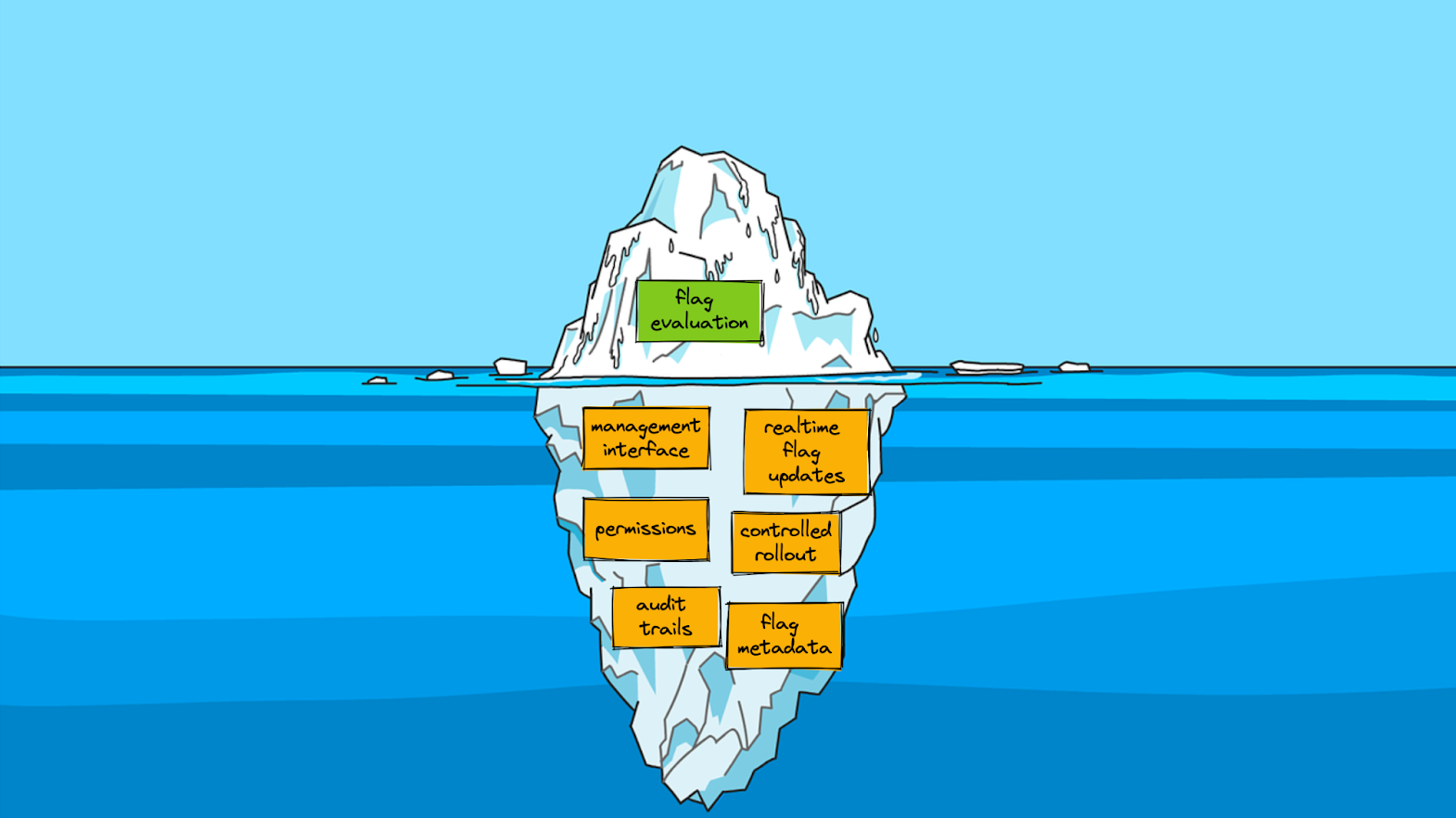

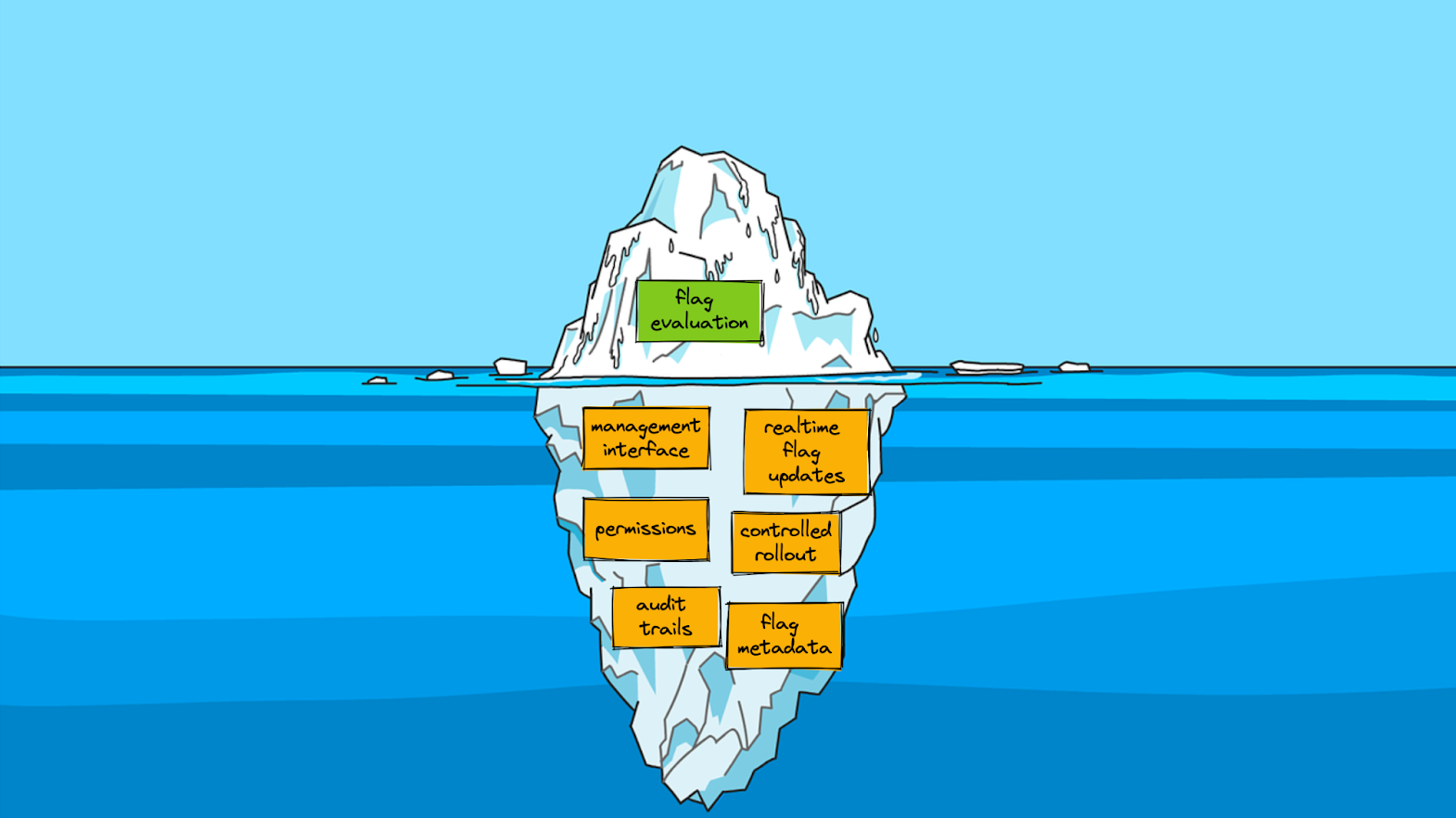

Feature flagging may appear simple on the surface; you check a boolean, push up a branch, and move on. But as Pete Hodgson describes in his blog post about OpenFeature:

When I talk to people about adopting feature flags, I often describe feature flag management as a bit of an iceberg. On the surface, feature flagging seems really simple… However, once you get into it, there’s a fair bit of complexity lurking under the surface.

At scale, feature management is more than toggling booleans; it's about auditing configurations, controlling incremental rollouts, ensuring governance and operational best practices, tracking events, and integrating with analytics systems. Once teams hit those hidden layers of complexity, a standardized approach is no longer optional, and OpenFeature provides a standard interface for consistent execution across SDKs and providers.

This need for standardization isn’t new. In fact, Harness FME (previously known as Split.io) was an early supporter of OpenFeature because teams were already running into the limits of proprietary, SDK-specific flag implementations. From a blog post about OpenFeature published in 2022:

While feature flags alone are very powerful, organizations that use flagging at scale quickly learn that additional functionality is needed for a proper, long-term feature management approach.

That 2022 post highlighted challenges that are now commonplace in most organizations: maintaining several SDKs across services, inconsistent flag definitions between teams, and friction in integrating feature flags with analytics, monitoring, and CI/CD systems.

What’s changed since then isn’t the problem; it’s the urgency. Teams are now shipping faster, across more languages and environments, with higher expectations around governance, experimentation, and observability. OpenFeature is a solution that enables teams to meet those expectations without increasing complexity.

Integrate OpenFeature with Harness FME

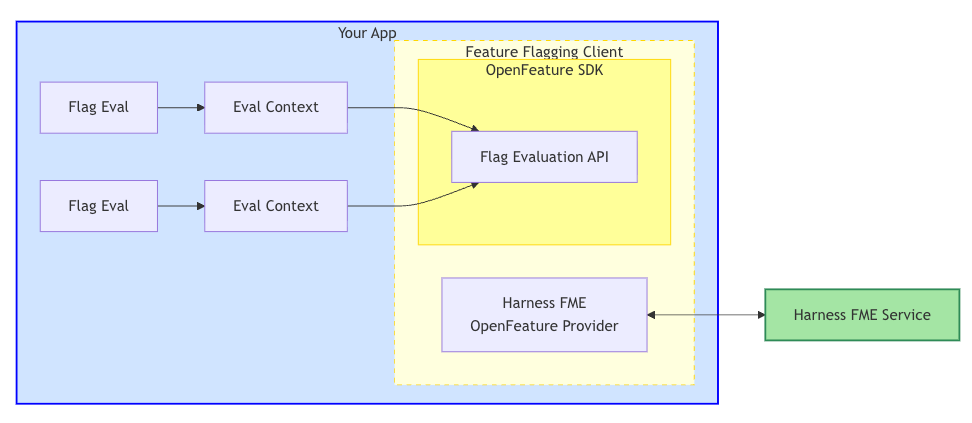

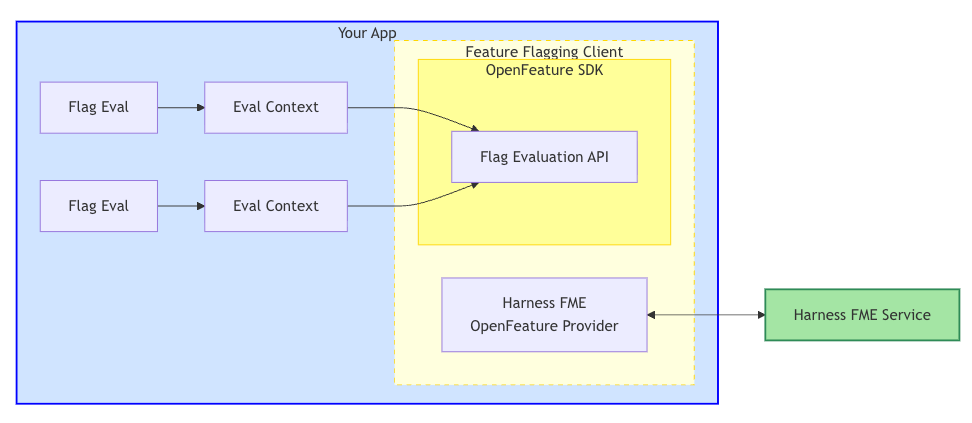

Feature flagging with OpenFeature provides your team with a consistent API to evaluate flags across environments and SDKs. With Harness FME, you can plug OpenFeature directly into your applications to standardize flag evaluations, simplify rollouts, and track feature impact, all from your existing workflow.

The Harness FME OpenFeature Provider wraps the Harness FME SDK, bridging the OpenFeature SDK with the Harness FME service. The provider maps OpenFeature's interface to the FME SDK, which handles communication with Harness services to evaluate feature flags and retrieve configuration updates.
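To make that wrapping relationship concrete, here is a minimal, self-contained TypeScript model of the provider pattern. It deliberately does not use the real OpenFeature SDK or the Harness FME provider: `FlagProvider`, `FakeVendorSdk`, `FakeVendorProvider`, and `FlagClient` are hypothetical names invented for this sketch. It only shows the roles: application code calls a vendor-agnostic client, a provider translates each call into a vendor SDK call, and a failed evaluation falls back to the caller's default value, which mirrors how OpenFeature clients return the supplied default when evaluation fails.

```typescript
// Hypothetical model of the provider pattern. These types are NOT the
// OpenFeature SDK or the Harness FME provider; they only illustrate roles.

type FlagContext = { targetingKey?: string; [attribute: string]: unknown };

// Vendor-agnostic contract that application code depends on.
interface FlagProvider {
  resolveString(flagKey: string, defaultValue: string, ctx: FlagContext): string;
}

// Stand-in for a vendor SDK (the role the FME SDK plays): it owns the flag
// rules and returns a treatment for a given user key.
class FakeVendorSdk {
  private rules: Map<string, (userKey: string) => string>;

  constructor(rules: Map<string, (userKey: string) => string>) {
    this.rules = rules;
  }

  getTreatment(userKey: string, flagKey: string): string {
    const rule = this.rules.get(flagKey);
    if (!rule) throw new Error(`unknown flag: ${flagKey}`);
    return rule(userKey);
  }
}

// The provider adapts the vendor SDK to the vendor-agnostic contract.
class FakeVendorProvider implements FlagProvider {
  private sdk: FakeVendorSdk;

  constructor(sdk: FakeVendorSdk) {
    this.sdk = sdk;
  }

  resolveString(flagKey: string, defaultValue: string, ctx: FlagContext): string {
    if (!ctx.targetingKey) return defaultValue; // nothing to target
    try {
      return this.sdk.getTreatment(ctx.targetingKey, flagKey);
    } catch {
      return defaultValue; // fall back rather than fail the caller
    }
  }
}

// Application-facing client: call sites never touch the vendor SDK directly.
class FlagClient {
  private provider: FlagProvider;

  constructor(provider: FlagProvider) {
    this.provider = provider;
  }

  getStringValue(flagKey: string, defaultValue: string, ctx: FlagContext = {}): string {
    return this.provider.resolveString(flagKey, defaultValue, ctx);
  }
}

const rules = new Map<string, (userKey: string) => string>([
  ["new_checkout", (userKey) => (userKey.startsWith("beta-") ? "on" : "off")],
]);
const client = new FlagClient(new FakeVendorProvider(new FakeVendorSdk(rules)));

console.log(client.getStringValue("new_checkout", "off", { targetingKey: "beta-42" })); // prints on
console.log(client.getStringValue("new_checkout", "off", { targetingKey: "user-7" })); // prints off
console.log(client.getStringValue("missing_flag", "control", { targetingKey: "beta-42" })); // prints control
```

Swapping vendors then means registering a different provider; the `getStringValue` call sites stay the same, which is the property OpenFeature standardizes.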

In the following example, we’ll use the Harness FME Node.js OpenFeature Provider to evaluate and track feature flags in a sample application.

Prerequisites

Before you begin, ensure you have the following requirements:

- A valid Harness FME SDK key for your project

- Node.js 14.x+

- Access to npm or yarn to install dependencies

Setup

- Install the Node.js OpenFeature provider and dependencies.

- Initialize and register the provider with OpenFeature using your Harness FME SDK key.

- Evaluate feature flags with context. Target specific users, accounts, or segments by passing an evaluation context.

- If you reuse the same targeting key frequently, set the context once at the client or API level instead of passing it on every evaluation.

- Optionally, track events such as user actions or conversions to measure flag impact. Event tracking links user behavior directly to your feature flags, helping you understand the real-world impact of each rollout.
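Since these steps are about layering evaluation context and recording events, here is a small, self-contained TypeScript model of those two ideas. It is not the OpenFeature Node.js SDK: `ContextAwareClient`, `mergedContext`, and `track` are hypothetical names, and the real SDK's methods and signatures should be taken from its documentation. What it does mirror is the OpenFeature specification's merge rule, where context set globally (at the API level) is overridden by client-level context, which is in turn overridden by context passed on an individual call. The spec also defines a transaction level, which this sketch omits.

```typescript
// Hypothetical model of layered evaluation context plus event tracking.
// Not the OpenFeature SDK API; it only mimics the merge order
// (global/API < client < invocation, later levels overriding earlier ones).

type ContextValue = string | number | boolean;
type EvalContext = Record<string, ContextValue>;

interface TrackedEvent {
  eventName: string;
  targetingKey: string;
  value?: number;
}

class ContextAwareClient {
  readonly events: TrackedEvent[] = [];
  private globalContext: EvalContext;
  private clientContext: EvalContext = {};

  constructor(globalContext: EvalContext) {
    this.globalContext = globalContext;
  }

  // Set once, e.g. when the same targeting key is reused for many evaluations.
  setContext(ctx: EvalContext): void {
    this.clientContext = ctx;
  }

  // Rightmost spread wins: invocation overrides client, client overrides global.
  mergedContext(invocation: EvalContext = {}): EvalContext {
    return { ...this.globalContext, ...this.clientContext, ...invocation };
  }

  // Records an event against the merged targeting key. Events without a user
  // can't be tied back to a flag evaluation, so they are skipped.
  track(eventName: string, invocation: EvalContext = {}, value?: number): boolean {
    const key = this.mergedContext(invocation)["targetingKey"];
    if (typeof key !== "string") return false;
    this.events.push(
      value === undefined ? { eventName, targetingKey: key } : { eventName, targetingKey: key, value },
    );
    return true;
  }
}

const ffClient = new ContextAwareClient({ region: "eu", plan: "free" });
ffClient.setContext({ targetingKey: "user-123", plan: "pro" });

const merged = ffClient.mergedContext({ plan: "enterprise" });
console.log(merged.plan, merged.targetingKey, merged.region); // enterprise user-123 eu

ffClient.track("checkout_completed", {}, 49.99);
console.log(ffClient.events.length); // 1
```

Setting the targeting key once at the client level keeps individual evaluations short, while per-call context can still override any attribute for a single request.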

With the provider registered and your evaluation context configured, your Node.js service can now evaluate flags, track events, and access flag metadata through OpenFeature without needing custom clients or SDK rewrites. From here, you can add additional flags, expand your targeting attributes, configure rollout rules in Harness FME, and feed event data directly into your experimentation workflows.

Start using Harness FME OpenFeature providers today

Feature management at scale is a common operational challenge. Much like the feature flagging iceberg where the simple on/off switch is just the visible tip, most of the real work happens underneath the surface: consistent evaluation logic, targeting, auditing, event tracking, and rollout safety. Harness FME and OpenFeature help teams manage these hidden operational complexities in a unified, predictable way.

Looking ahead, we’re extending support to additional server-side providers such as Go and Ruby, continuing to broaden OpenFeature’s reach across your entire stack.

To learn more about supported providers and how teams use OpenFeature with Harness FME in practice, see the Harness FME OpenFeature documentation. If you’re brand new to Harness FME, sign up for a free trial today.

Make Data-Driven Decisions with Warehouse Native Experimentation

Product and experimentation teams need confidence in their data when making high-impact product decisions. Today, experiment results often require copying behavioral data into external systems, which creates delays, security risks, and black-box calculations that are difficult to trust or validate.

Warehouse Native Experimentation keeps experiment data directly in your data warehouse, enabling you to analyze results with full transparency and governance control.

With Warehouse Native Experimentation, you can:

- Run experiments without exporting data

- Use transparent SQL logic that you control

- Maintain alignment with internal data models

- Accelerate experimentation without depending on streaming data pipelines

Why Warehouse Native Experimentation matters today

Product velocity has become a competitive differentiator, but experimentation often lags behind. AI-accelerated development means teams are shipping code faster than ever, while maintaining confidence in data-driven decisions is becoming increasingly challenging.

Modern teams face mounting pressure to move faster while cutting operational costs, reducing risk when launching high-impact features, maintaining strict data compliance and governance, and aligning product decisions with reliable, shared business metrics.

Executives are recognizing that sustainable velocity requires trustworthy insights. According to the 2025 State of AI in Software Engineering report, 81% of engineering leaders surveyed agreed that:

“Purpose-built platforms that automate the end-to-end SDLC will be far more valuable than solutions that target just one specific task in the future.”

At the same time, investments in data warehouses such as Snowflake and Amazon Redshift have increased. These platforms have become the trusted source of truth for customer behavior, financial reporting, and operational metrics.

This shift creates a new expectation where experiments must run where data already lives, results must be fully transparent to data stakeholders, and insights must be trustworthy from the get-go.

Trust and speed are now critical to business success, and Warehouse Native Experimentation enables teams to scale experimentation without relying on streaming data pipelines, vendor lock-in, or black-box calculations.

Experiment where your data lives

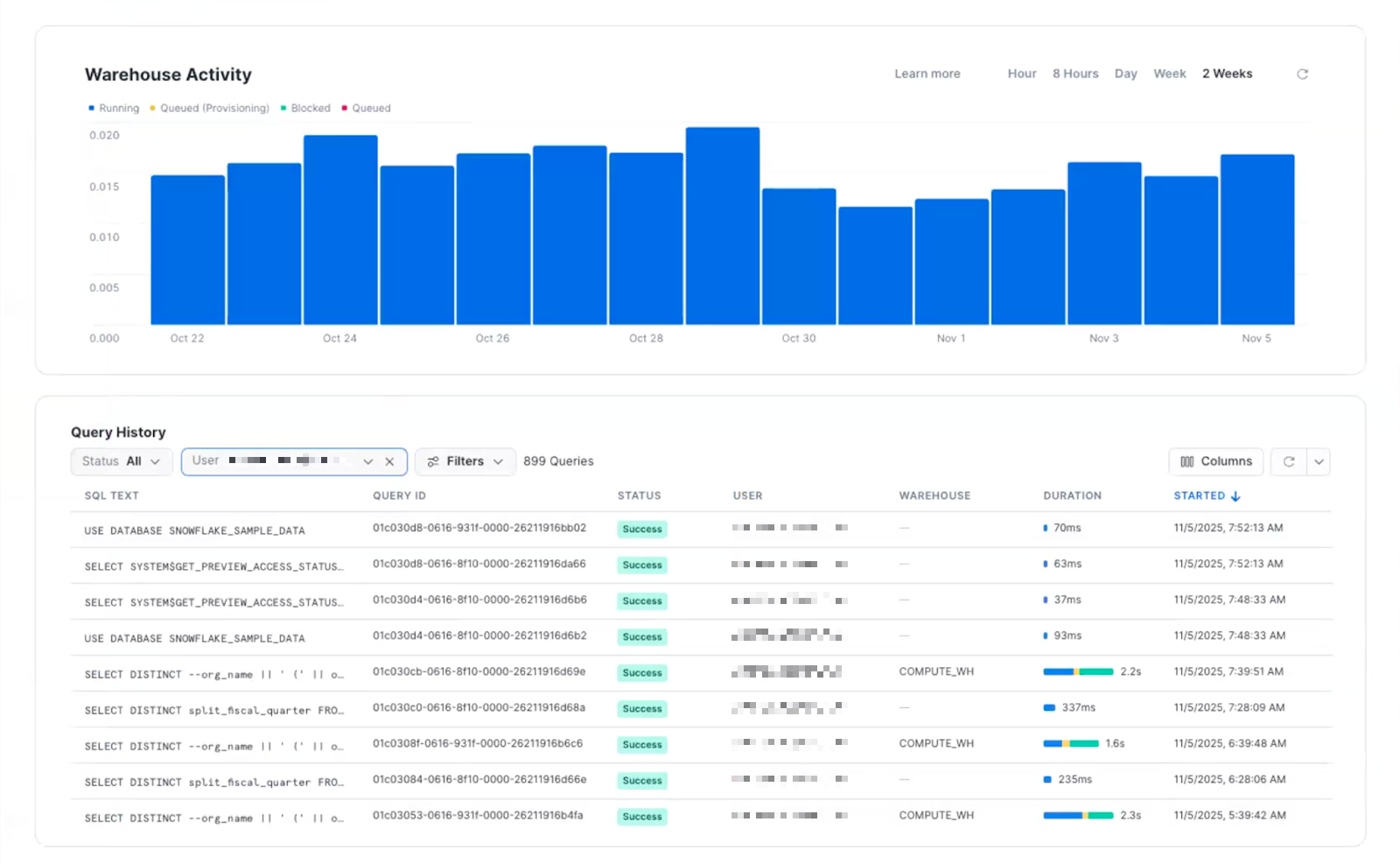

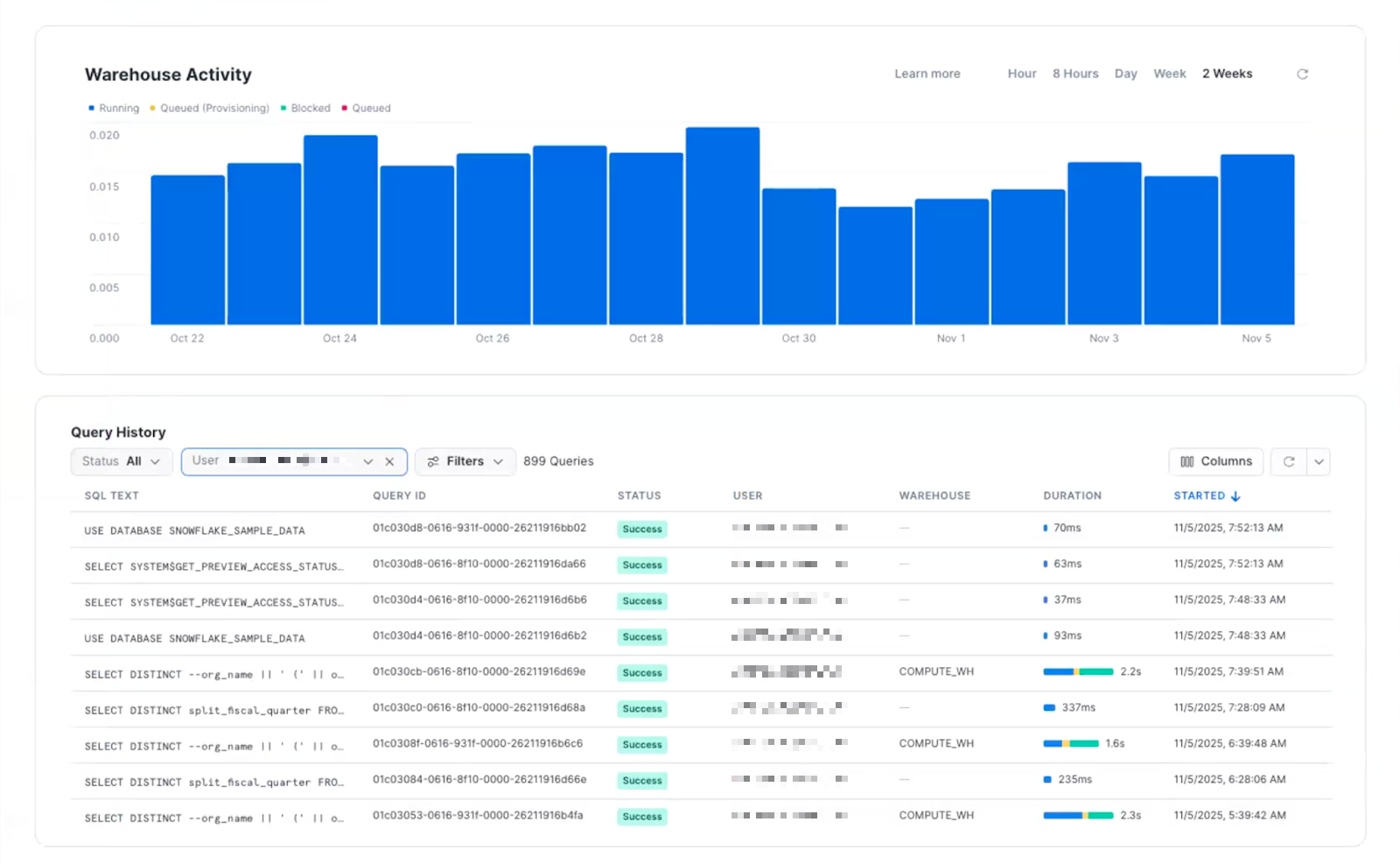

Warehouse Native Experimentation integrates with Snowflake and Amazon Redshift, allowing you to analyze assignments and events within your data warehouse.

Because all queries run inside your warehouse, you benefit from full visibility into data schemas and transformation logic, higher trust in experiment outcomes, and the ability to validate, troubleshoot, and customize queries.

When Warehouse Native experiment results are generated from the same source of truth for your organization, decision-making becomes faster and more confident.

Create metrics that reflect your business

Metrics define success, and Warehouse Native Experimentation enables teams to define them using data that already adheres to internal governance rules. You can build metrics using existing warehouse tables, reuse them across multiple experiments, and include guardrail metrics (such as latency, revenue, or stability) to ensure consistency and accuracy. As experimentation needs evolve, metrics evolve with them, without duplicate data definitions.

Experiments generate value when success metrics represent business reality. By codifying business logic into metrics, you can monitor the performance of what matters to your business, such as checkout conversion based on purchase events, average page load time as a performance guardrail, and revenue per user associated with e-commerce goals.
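To show how those three example metrics might be codified, here is a TypeScript sketch that computes them per treatment from assignment rows and event rows. In Warehouse Native Experimentation this logic lives in SQL that runs inside your warehouse; the row shapes (`AssignmentRow`, `EventRow`), the event names `purchase` and `page_load`, and `computeMetrics` are hypothetical, and the sample rows are made up.

```typescript
// Hypothetical row shapes; real column names come from your warehouse tables.
interface AssignmentRow {
  userId: string;
  treatment: string;
}

interface EventRow {
  userId: string;
  eventType: string; // e.g. "purchase" or "page_load" (illustrative names)
  value: number;     // order amount for purchases, milliseconds for page loads
}

interface TreatmentMetrics {
  users: number;
  checkoutConversion: number;   // share of exposed users with at least one purchase
  avgPageLoadMs: number | null; // guardrail: mean page_load value, null if none
  revenuePerUser: number;       // total purchase value divided by exposed users
}

function computeMetrics(assignments: AssignmentRow[], events: EventRow[]): Map<string, TreatmentMetrics> {
  const treatmentOf = new Map<string, string>();
  for (const a of assignments) treatmentOf.set(a.userId, a.treatment);

  type Acc = { users: Set<string>; buyers: Set<string>; revenue: number; loadSum: number; loadCount: number };
  const accs = new Map<string, Acc>();
  for (const [userId, treatment] of treatmentOf) {
    let acc = accs.get(treatment);
    if (!acc) {
      acc = { users: new Set<string>(), buyers: new Set<string>(), revenue: 0, loadSum: 0, loadCount: 0 };
      accs.set(treatment, acc);
    }
    acc.users.add(userId);
  }

  for (const e of events) {
    const treatment = treatmentOf.get(e.userId);
    if (treatment === undefined) continue; // ignore users who were never assigned
    const acc = accs.get(treatment)!;
    if (e.eventType === "purchase") {
      acc.buyers.add(e.userId);
      acc.revenue += e.value;
    } else if (e.eventType === "page_load") {
      acc.loadSum += e.value;
      acc.loadCount += 1;
    }
  }

  const result = new Map<string, TreatmentMetrics>();
  for (const [treatment, acc] of accs) {
    result.set(treatment, {
      users: acc.users.size,
      checkoutConversion: acc.buyers.size / acc.users.size,
      avgPageLoadMs: acc.loadCount > 0 ? acc.loadSum / acc.loadCount : null,
      revenuePerUser: acc.revenue / acc.users.size,
    });
  }
  return result;
}

const assignments: AssignmentRow[] = [
  { userId: "u1", treatment: "on" },
  { userId: "u2", treatment: "on" },
  { userId: "u3", treatment: "off" },
  { userId: "u4", treatment: "off" },
];
const events: EventRow[] = [
  { userId: "u1", eventType: "purchase", value: 40 },
  { userId: "u1", eventType: "page_load", value: 800 },
  { userId: "u2", eventType: "page_load", value: 1200 },
  { userId: "u3", eventType: "purchase", value: 20 },
  { userId: "u9", eventType: "purchase", value: 999 }, // never assigned, so ignored
];

const metrics = computeMetrics(assignments, events);
console.log(metrics.get("on"));  // users 2, conversion 0.5, avgPageLoadMs 1000, revenuePerUser 20
console.log(metrics.get("off")); // users 2, conversion 0.5, avgPageLoadMs null, revenuePerUser 10
```

Dividing by exposed users (not by buyers) keeps conversion and revenue per user comparable across treatments, and reusing one definition across experiments avoids the duplicate metric logic the paragraph above warns about.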

Understand experiment impact with transparent results

Once you've defined your metrics, Warehouse Native Experimentation automatically computes results, either on a daily recalculation or on manual refresh, and provides clear statistical significance indicators.

Because every result is generated with SQL that you can view in your data warehouse, teams can validate transformations, debug anomalies, and collaborate with data stakeholders. When everyone, from product to data science, can inspect the results, everyone trusts the decision.
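This post doesn't specify which statistical method sits behind Harness FME's significance indicators, so the following is only an illustration of what such an indicator summarizes. It swaps in a classical two-sided two-proportion z-test, a common choice for conversion-style metrics, using the Abramowitz and Stegun polynomial approximation of the normal CDF. The function names and the counts are hypothetical.

```typescript
// Standard normal CDF built on the Abramowitz & Stegun 7.1.26 approximation
// of erf (absolute error below roughly 1.5e-7), adequate for an illustration.
function normalCdf(x: number): number {
  const z = Math.abs(x) / Math.SQRT2;
  const t = 1 / (1 + 0.3275911 * z);
  const poly =
    t * (0.254829592 + t * (-0.284496736 + t * (1.421413741 + t * (-1.453152027 + t * 1.061405429))));
  const erf = 1 - poly * Math.exp(-z * z);
  return x >= 0 ? 0.5 * (1 + erf) : 0.5 * (1 - erf);
}

interface SignificanceResult {
  lift: number;    // absolute difference in conversion rate (treatment - control)
  z: number;
  pValue: number;  // two-sided
  significant: boolean;
}

// Two-sided two-proportion z-test using the pooled conversion rate.
function twoProportionZTest(
  controlConversions: number,
  controlUsers: number,
  treatmentConversions: number,
  treatmentUsers: number,
  alpha = 0.05,
): SignificanceResult {
  const pControl = controlConversions / controlUsers;
  const pTreatment = treatmentConversions / treatmentUsers;
  const pooled = (controlConversions + treatmentConversions) / (controlUsers + treatmentUsers);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / controlUsers + 1 / treatmentUsers));
  const lift = pTreatment - pControl;
  if (se === 0) return { lift, z: 0, pValue: 1, significant: false }; // no variance to test
  const z = lift / se;
  const pValue = 2 * (1 - normalCdf(Math.abs(z)));
  return { lift, z, pValue, significant: pValue < alpha };
}

// Made-up counts: 100/1000 conversions in control, 130/1000 in treatment.
const result = twoProportionZTest(100, 1000, 130, 1000);
console.log(result); // z ≈ 2.10, two-sided p ≈ 0.035, significant at alpha = 0.05
```

A significance indicator condenses exactly this kind of comparison into a single signal; being able to inspect the underlying SQL is what lets data stakeholders check how the rates and variances were derived.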

Set up Warehouse Native Experimentation

Warehouse Native Experimentation requires connecting your data warehouse and ensuring your experiment and event data are ready for analysis. Warehouse Native Experimentation does not require streaming or ingestion; Harness FME reads directly from assignment and metric source tables.

To get started:

- Connect your data warehouse to Harness FME. Warehouse Native Experimentation requires the ability to read behavioral event and assignment tables, write results into a dedicated Harness schema, and run scheduled query jobs.

- Prepare your data model. In your data warehouse, assignment source tables track who was exposed to which variant, ensuring that users are correctly mapped to treatments and environments. Metric source tables, on the other hand, contain event-level data used in metric definitions, ensuring that analyses are grounded in a consistent, verifiable reality.

- Configure sources in Harness FME. Assignment sources define the exposure table structure and mappings, while metric sources define the event structure and metadata context. This ensures experiment analysis aligns with your warehouse schemas.

- Define metrics and create experiments. Once your data warehouse is connected, you can add key metrics and guardrail metrics, run experiments, and view the latest results in Harness FME.
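Preparing the data model largely means making sure exposures and events can be joined cleanly. The sketch below is written in TypeScript rather than the SQL you would actually run in your warehouse, and shows three checks that are common in experiment pipelines: keep a single environment, collapse repeated exposures to each user's first one while dropping users seen in more than one treatment, and count only events that happened at or after exposure. These rules are assumptions chosen for illustration, not a description of how Harness FME processes your tables, and every type and field name is hypothetical.

```typescript
// Hypothetical row shapes; your real columns are mapped in Harness FME sources.
interface ExposureRow {
  userKey: string;
  treatment: string;
  environment: string;
  exposedAt: number; // epoch milliseconds
}

interface EventRecord {
  userKey: string;
  eventType: string;
  occurredAt: number; // epoch milliseconds
}

interface PreparedData {
  firstExposure: Map<string, ExposureRow>; // one row per analyzable user
  conflictingUsers: Set<string>;           // users seen in more than one treatment
  attributedEvents: EventRecord[];         // events at or after the user's first exposure
}

function prepareExperimentData(
  exposures: ExposureRow[],
  eventRows: EventRecord[],
  environment: string,
): PreparedData {
  const firstExposure = new Map<string, ExposureRow>();
  const conflictingUsers = new Set<string>();

  for (const row of exposures) {
    if (row.environment !== environment) continue; // analyze one environment at a time
    const seen = firstExposure.get(row.userKey);
    if (seen === undefined) {
      firstExposure.set(row.userKey, row);
      continue;
    }
    if (seen.treatment !== row.treatment) conflictingUsers.add(row.userKey);
    if (row.exposedAt < seen.exposedAt) firstExposure.set(row.userKey, row); // keep earliest
  }

  // A user mapped to several treatments can't be attributed to either one.
  for (const userKey of conflictingUsers) firstExposure.delete(userKey);

  const attributedEvents = eventRows.filter((e) => {
    const exposure = firstExposure.get(e.userKey);
    return exposure !== undefined && e.occurredAt >= exposure.exposedAt;
  });

  return { firstExposure, conflictingUsers, attributedEvents };
}

const exposures: ExposureRow[] = [
  { userKey: "a", treatment: "on", environment: "production", exposedAt: 100 },
  { userKey: "a", treatment: "on", environment: "production", exposedAt: 50 },
  { userKey: "b", treatment: "off", environment: "production", exposedAt: 100 },
  { userKey: "b", treatment: "on", environment: "production", exposedAt: 120 },
  { userKey: "c", treatment: "off", environment: "staging", exposedAt: 10 },
];
const eventRows: EventRecord[] = [
  { userKey: "a", eventType: "purchase", occurredAt: 40 },  // before exposure: dropped
  { userKey: "a", eventType: "purchase", occurredAt: 60 },  // kept
  { userKey: "b", eventType: "purchase", occurredAt: 130 }, // conflicting user: dropped
  { userKey: "c", eventType: "purchase", occurredAt: 20 },  // other environment: dropped
];

const prepared = prepareExperimentData(exposures, eventRows, "production");
console.log(prepared.firstExposure.size, prepared.attributedEvents.length); // 1 1
```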

From setting up Warehouse Native Experimentation to accessing your first Warehouse Native experiment result, organizations can efficiently move from raw data to validated insights, without building data pipelines.

Start running Warehouse Native experiments today

Warehouse Native Experimentation is ideal for organizations that already capture behavioral data in their warehouse, want to experiment without exporting data, and value transparency, governance, and flexibility in metrics.

Whether you're optimizing checkout or testing a new onboarding experience, Warehouse Native Experimentation enables you to make informed decisions, powered by the data sources your business already trusts.

Looking ahead, Harness FME will extend these workflows toward a shift-left approach, bringing experimentation closer to the release process with data checks in CI/CD pipelines, Harness RBAC permissioning, and policy-as-code governance. This alignment ensures product, experimentation, and engineering teams can release faster while maintaining confidence and compliance in every change.

To start running experiments in a supported data warehouse, see the Warehouse Native Experimentation documentation. If you're brand new to Harness FME, sign up for a free trial today.

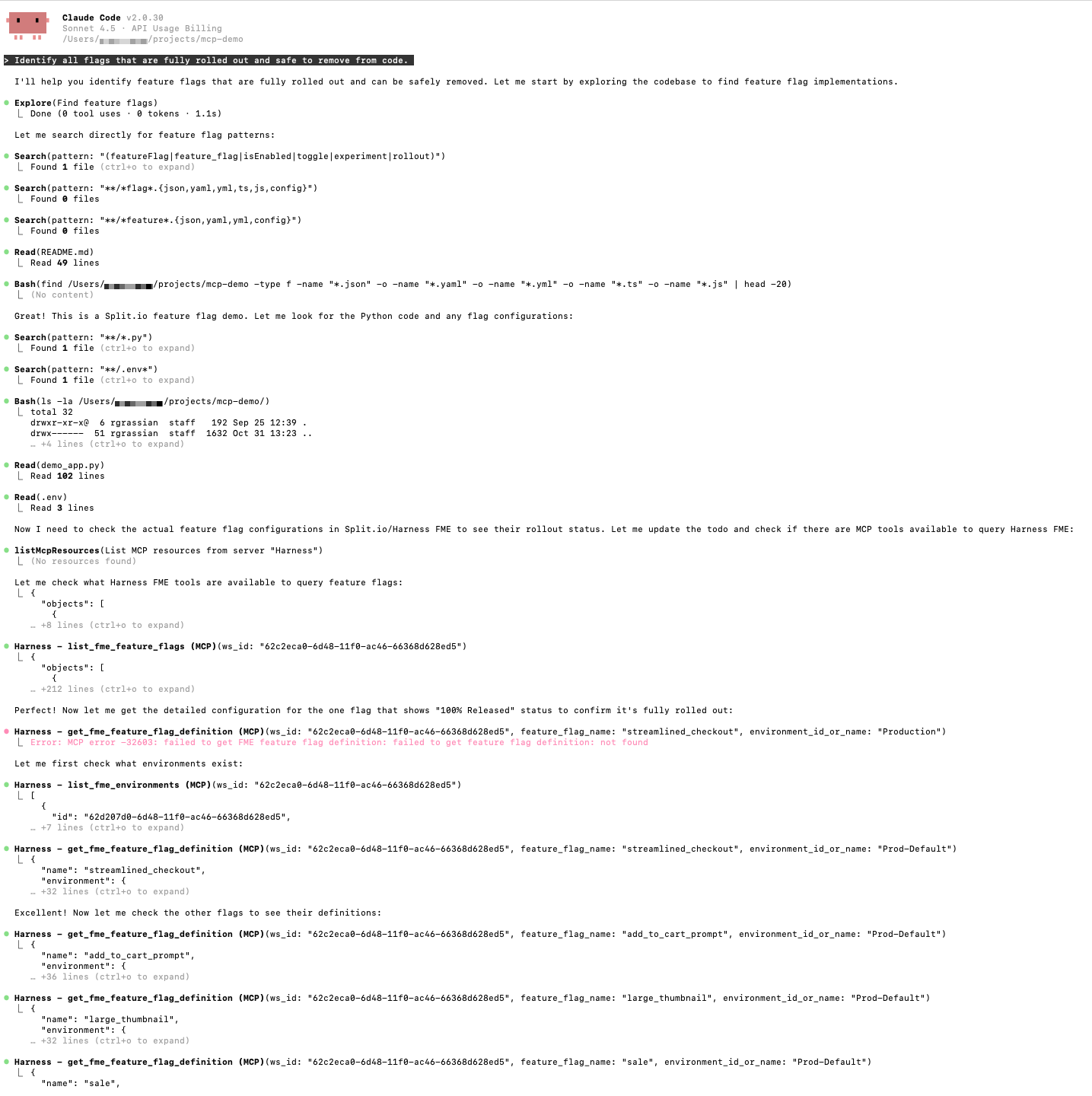

Managing feature flags can be complex, especially across multiple projects and environments. Teams often need to navigate dashboards, APIs, and documentation to understand which flags exist, their configurations, and where they are deployed. What if you could handle these tasks using simple natural language prompts directly within your AI-powered IDE?

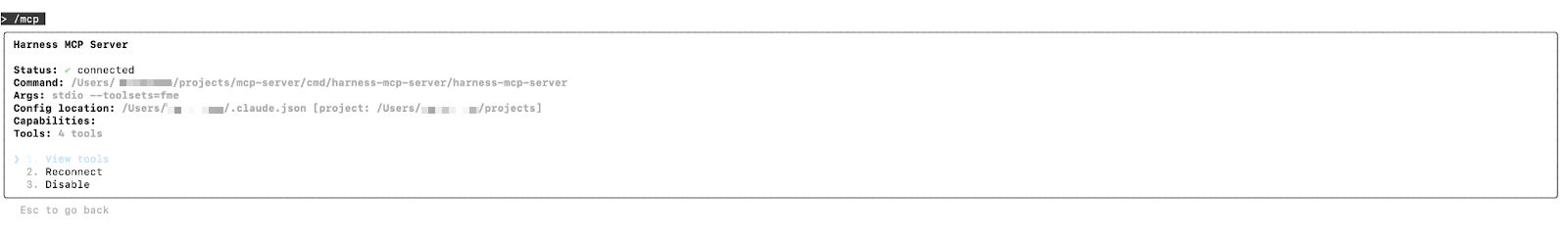

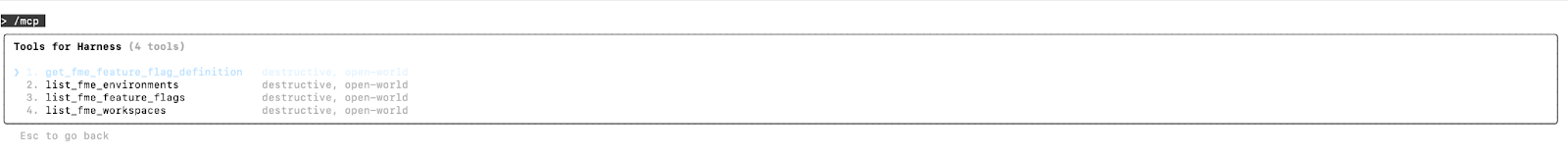

Harness Model Context Protocol (MCP) tools make this possible. By integrating with Claude Code, Windsurf, Cursor, or VS Code, developers and product managers can discover projects, list feature flags, and inspect flag definitions, all without leaving their development environment.

With any of these AI-powered IDE agents, you can query your feature management data in natural language. The MCP tools retrieve your projects and flags and return structured outputs that the agent interprets to answer questions accurately and make recommendations for release planning.

With these agents, non-technical stakeholders can query and understand feature flags without deep technical expertise. This approach reduces context switching, lowers the learning curve, and enables teams to make faster, data-driven decisions about feature management and rollout.

According to Harness and LeadDev’s survey of 500 engineering leaders in 2024:

82% of teams that are successful with feature management actively monitor system performance and user behavior at the feature level, and 78% prioritize risk mitigation and optimization when releasing new features.

Harness MCP tools help teams address these priorities by enabling developers and release engineers to audit, compare, and inspect feature flags across projects and environments in real time, aligning with industry best practices for governance, risk mitigation, and operational visibility.

Simplifying Feature Management Workflows

Traditional feature flag management practices can present several challenges:

- Complexity: Understanding flag configurations and environment setups can be time-consuming.

- Context Switching: Teams frequently shift between dashboards, APIs, and documentation.

- Governance and Consistency: Ensuring flags are correctly configured across environments requires manual auditing.

Harness MCP tools address these pain points by providing a conversational interface for interacting with your FME data, democratizing access to feature management insights across teams.
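The governance challenge above (manual auditing across environments) largely comes down to diffing flag definitions. As a sketch of what a prompt that compares a flag between staging and production asks the agent to do, here is a TypeScript function that compares two simplified definitions. The `FlagDefinition` shape, including its `killed`, `defaultTreatment`, `rules`, and `allocation` fields, is hypothetical and is not the schema returned by the Harness MCP tools or FME APIs.

```typescript
// Hypothetical, simplified flag definition for one environment.
interface FlagDefinition {
  killed: boolean;                    // kill switch engaged
  defaultTreatment: string;
  rules: string[];                    // targeting rules, flattened to strings
  allocation: Record<string, number>; // treatment -> percentage of traffic
}

// Returns a readable list of differences (empty when the definitions match).
function diffFlag(left: FlagDefinition, right: FlagDefinition): string[] {
  const diffs: string[] = [];
  if (left.killed !== right.killed) diffs.push(`killed: ${left.killed} vs ${right.killed}`);
  if (left.defaultTreatment !== right.defaultTreatment) {
    diffs.push(`defaultTreatment: ${left.defaultTreatment} vs ${right.defaultTreatment}`);
  }
  if (JSON.stringify(left.rules) !== JSON.stringify(right.rules)) diffs.push("targeting rules differ");

  const treatments = new Set(Object.keys(left.allocation).concat(Object.keys(right.allocation)));
  for (const t of Array.from(treatments).sort()) {
    const l = left.allocation[t] ?? 0;
    const r = right.allocation[t] ?? 0;
    if (l !== r) diffs.push(`allocation[${t}]: ${l}% vs ${r}%`);
  }
  return diffs;
}

const staging: FlagDefinition = {
  killed: false,
  defaultTreatment: "off",
  rules: ["segment:beta_users -> on"],
  allocation: { on: 100, off: 0 },
};
const production: FlagDefinition = {
  killed: false,
  defaultTreatment: "off",
  rules: ["segment:beta_users -> on"],
  allocation: { on: 10, off: 90 },
};

for (const line of diffFlag(staging, production)) console.log(line);
// allocation[off]: 0% vs 90%
// allocation[on]: 100% vs 10%
```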

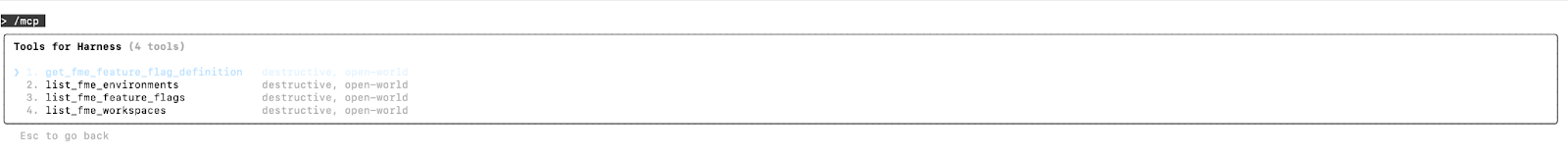

How MCP Tools Work for Harness FME

The FME MCP integration supports several capabilities, including discovering projects, listing feature flags, and inspecting flag definitions.

You can also generate quick summaries of flag configurations or compare flag settings across environments directly in Claude Code using natural language prompts.
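Under the hood, the IDE agent and the Harness MCP server exchange JSON-RPC 2.0 messages defined by the Model Context Protocol; with the `stdio` argument used in the configuration later in this post, those messages travel one per line over the server process's standard input and output. You never write this code yourself (the IDE does), but a sketch of a `tools/call` round trip makes the "structured output" idea concrete. The message shapes follow the MCP specification; the tool name `list_feature_flags` and its `project` argument are hypothetical placeholders, so use a `tools/list` request or your IDE's MCP panel to see the tools the server actually exposes.

```typescript
// Message shapes follow the MCP specification (JSON-RPC 2.0). The tool name
// and its arguments used below are HYPOTHETICAL placeholders.

interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

interface ToolCallResponse {
  jsonrpc: "2.0";
  id: number;
  result?: { content: Array<{ type: string; text?: string }>; isError?: boolean };
  error?: { code: number; message: string };
}

// Serializes a tools/call request as one newline-terminated line, which is how
// the stdio transport frames messages (JSON.stringify escapes inner newlines).
function buildToolCall(id: number, toolName: string, args: Record<string, unknown>): string {
  const request: ToolCallRequest = {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
  return JSON.stringify(request) + "\n";
}

// Pulls the text blocks out of a tools/call response, surfacing errors.
function extractToolText(line: string): string {
  const response = JSON.parse(line) as ToolCallResponse;
  if (response.error) throw new Error(`JSON-RPC error ${response.error.code}: ${response.error.message}`);
  if (!response.result) throw new Error("response has neither result nor error");
  if (response.result.isError) throw new Error("tool reported an error");
  return response.result.content
    .filter((block) => block.type === "text" && typeof block.text === "string")
    .map((block) => block.text as string)
    .join("\n");
}

const requestLine = buildToolCall(1, "list_feature_flags", { project: "checkout-service" }); // hypothetical tool
// A made-up response, shaped the way an MCP server returns tool output.
const responseLine = JSON.stringify({
  jsonrpc: "2.0",
  id: 1,
  result: { content: [{ type: "text", text: "enable_new_checkout\nenable_checkout_flow" }] },
});

console.log(requestLine.trim());
console.log(extractToolText(responseLine));
```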

Some example prompts to get you started include the following:

"List all feature flags in the `checkout-service` project."

"Describe the rollout strategy and targeting rules for `enable_new_checkout`."

"Compare the `enable_checkout_flow` flag between staging and production."

"Show me all active flags in the `payment-service` project."

“Show me all environments defined for the `checkout-service` project.”

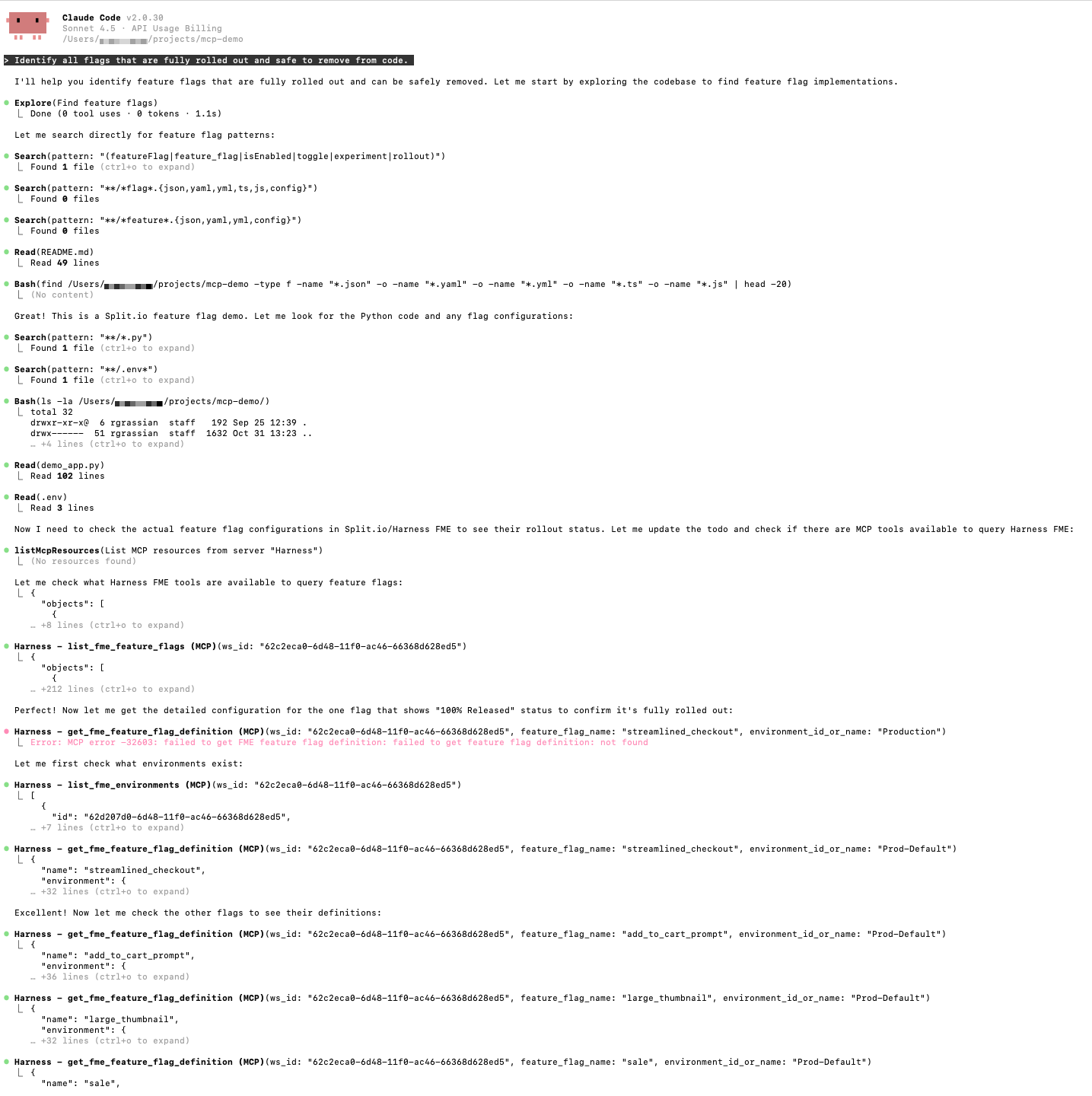

“Identify all flags that are fully rolled out and safe to remove from code.”

These prompts produce actionable insights in Claude Code (or your IDE of choice).
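Prompts like the last one need a working definition of "fully rolled out." One reasonable heuristic, and it is only an assumption for this sketch rather than a rule Harness FME applies, is that in every environment the flag is not killed, has no targeting rules, and sends 100% of traffic to the same treatment. Here it is in TypeScript over a hypothetical `FlagEnvState` shape.

```typescript
// Hypothetical summary of a flag's state in one environment.
interface FlagEnvState {
  environment: string;
  killed: boolean;
  ruleCount: number;                  // number of targeting rules
  allocation: Record<string, number>; // treatment -> percentage of traffic
}

// Returns the single treatment a flag serves everywhere, or null if it is not
// fully rolled out under this sketch's heuristic.
function fullyRolledOutTreatment(states: FlagEnvState[]): string | null {
  let winner: string | null = null;
  for (const state of states) {
    if (state.killed || state.ruleCount > 0) return null;
    const full = Object.keys(state.allocation).filter((t) => state.allocation[t] === 100);
    if (full.length !== 1) return null;
    if (winner !== null && winner !== full[0]) return null;
    winner = full[0];
  }
  return winner; // null when there are no environments at all
}

// Maps each removable flag to the treatment its code path should keep.
function removableFlags(flags: Record<string, FlagEnvState[]>): Record<string, string> {
  const removable: Record<string, string> = {};
  for (const flagName of Object.keys(flags)) {
    const treatment = fullyRolledOutTreatment(flags[flagName]);
    if (treatment !== null) removable[flagName] = treatment;
  }
  return removable;
}

const flags: Record<string, FlagEnvState[]> = {
  enable_new_checkout: [
    { environment: "staging", killed: false, ruleCount: 0, allocation: { on: 100, off: 0 } },
    { environment: "production", killed: false, ruleCount: 0, allocation: { on: 100, off: 0 } },
  ],
  enable_checkout_flow: [
    { environment: "staging", killed: false, ruleCount: 0, allocation: { on: 100, off: 0 } },
    { environment: "production", killed: false, ruleCount: 1, allocation: { on: 50, off: 50 } },
  ],
};

console.log(JSON.stringify(removableFlags(flags))); // {"enable_new_checkout":"on"}
```

Even when a flag passes a check like this, it is worth confirming it is not still tied to a running experiment before removing it from code.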

Getting Started

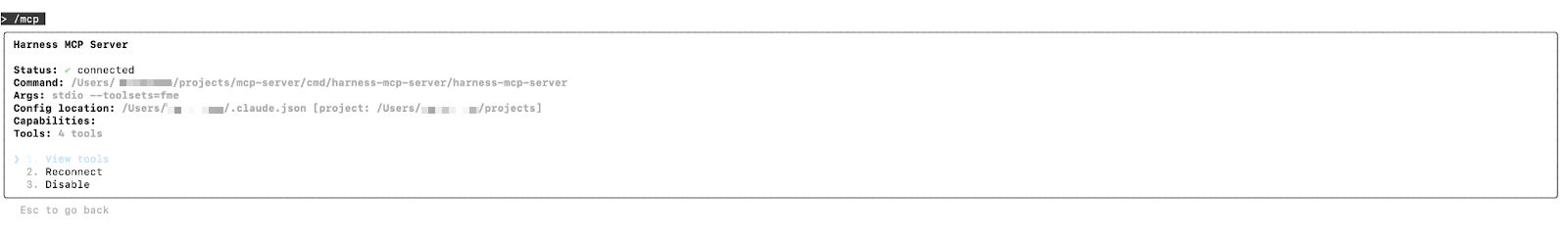

To start using Harness MCP tools for FME, ensure you have access to Claude Code and the Harness platform with FME enabled. Then, interact with the tools via natural language prompts to discover projects, explore flags, and inspect flag configurations.

Installation & Configuration

Harness MCP tools transform feature management into a conversational, AI-assisted workflow, making it easier to audit and manage your feature flags consistently across environments.

Prerequisites

- Go version 1.23 or later

- Claude Code (paid version) or another MCP-compatible AI tool

- Access to the Harness Platform with Feature Management & Experimentation (FME) enabled

- A Harness API key for authentication

Build the MCP Server Binary

- Clone the Harness MCP Server GitHub repository.

- Build the binary from source.

- Copy the binary to a directory accessible by Claude Code.

Configure Claude Code

- Open your Claude Code configuration file at `~/.claude.json`. If it doesn't exist already, you can create it.

- Add the Harness FME MCP server configuration:

```json
{
  ...
  "mcpServers": {
    "harness": {
      "command": "/path/to/harness-mcp-server",
      "args": [
        "stdio",
        "--toolsets=fme"
      ],
      "env": {
        "HARNESS_API_KEY": "your-api-key-here",
        "HARNESS_DEFAULT_ORG_ID": "your-org-id",
        "HARNESS_DEFAULT_PROJECT_ID": "your-project-id",
        "HARNESS_BASE_URL": "https://your-harness-instance.harness.io"
      }
    }
  }
}
```

- Save the file and restart Claude Code for the changes to take effect.
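If your Claude configuration file already holds other settings, the safest edit is a merge that leaves every existing key alone. Here is a small TypeScript sketch of that merge as a pure function; `withHarnessServer`, `McpServerEntry`, and the `theme` key in the sample are hypothetical, and reading and writing the file (for example with Node's `fs.readFileSync` and `fs.writeFileSync` plus `JSON.parse` and `JSON.stringify`) is left out so the logic stays easy to check.

```typescript
// Hypothetical helper: returns a copy of a parsed configuration object with a
// "harness" entry under mcpServers, preserving every other key.
type JsonObject = { [key: string]: unknown };

interface McpServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

function isJsonObject(value: unknown): value is JsonObject {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

function withHarnessServer(config: JsonObject, entry: McpServerEntry): JsonObject {
  const existing = config["mcpServers"];
  const servers: JsonObject = isJsonObject(existing) ? { ...existing } : {};
  servers["harness"] = entry; // adds or replaces only this server
  return { ...config, mcpServers: servers };
}

const before: JsonObject = {
  theme: "dark", // placeholder for whatever settings the file already holds
  mcpServers: { other: { command: "/usr/local/bin/other-server", args: [] } },
};

const after = withHarnessServer(before, {
  command: "/path/to/harness-mcp-server",
  args: ["stdio", "--toolsets=fme"],
  env: {
    HARNESS_API_KEY: "your-api-key-here",
    HARNESS_DEFAULT_ORG_ID: "your-org-id",
    HARNESS_DEFAULT_PROJECT_ID: "your-project-id",
    HARNESS_BASE_URL: "https://your-harness-instance.harness.io",
  },
});

console.log(JSON.stringify(after, null, 2));
```

Copying `mcpServers` before adding the entry means the original object is never mutated, so a failed write leaves your in-memory copy of the old configuration intact.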

To configure additional MCP-compatible AI tools like Windsurf, Cursor, or VS Code, see the Harness MCP Server documentation, which includes detailed setup instructions for all supported platforms.

Verify Installation

- Open Claude Code (or the AI tool that you configured).

- Navigate to the Tools/MCP section.

- Verify Harness tools are available.

What’s Next

Feature management at scale is a common operational challenge. With Harness MCP tools and AI-powered IDEs, teams can already discover, inspect, and summarize flag configurations conversationally, reducing context switching and speeding up audits.

Looking ahead, this workflow will extend toward a DevOps-focused approach, where developers and release engineers can prompt tools like Claude Code to identify inconsistencies or misconfigurations in feature flags across environments and take action to address them.

By embedding these capabilities directly into the development workflow, feature management becomes more operational and code-aware, enabling teams to maintain governance and reliability in real time.

For more information about the Harness MCP Server, see the Harness MCP Server documentation and the GitHub repository. If you’re brand new to Harness FME, sign up for a free trial today.


Harness FME Fast and Furious

Over the past six months, we have been hard at work building an integrated experience to take full advantage of the new platform made available after the Split.io merger with Harness. We have shipped a unified Harness UI for migrated Split customers, added enterprise-grade controls for experiments and rollouts, and doubled down on AI to help teams see impact faster and act with confidence. Highlights include OpenFeature providers, Warehouse Native Experimentation (beta), AI Experiment Summaries, rule-based segments, SDK fallback treatments, dimensional analysis support, and new FME MCP tools that connect your flags to AI-assisted IDEs.

And our efforts are being noticed. Just last month, Forrester released the 2025 Forrester Wave™ for Continuous Delivery & Release Automation, in which Harness was ranked as a Leader, in part due to our platform approach spanning CI/CD and FME. That approach helps us uniquely solve some of the most challenging problems facing DevOps teams today.

A more integrated experience: from Split UI to Harness UI

This year we completed the front-end migration path that moves customers from app.split.io to app.harness.io, giving teams a consistent, modern experience across the Harness platform with no developer code changes required. Day-to-day user flows remain familiar, while admins gain Harness-native RBAC, SSO, and API management with personal access token and service account token support.

What this means for you:

- No more switching UIs for customers who use FME and other Harness products.

- No SDK or proxy changes required for production apps. Your flag evaluations continue as before.

- Harness RBAC and SSO now govern FME access. Migrations include clear before and after guides for roles, groups, and SCIM.

- Admin API parity with a documented before and after map and examples for the few endpoints that moved.

For admins, the quick confidence checklist, logging steps, and side-by-side screens make the switch straightforward. FME Settings routes you into the standard Harness RBAC screens for long-term consistency where appropriate.

Built for AI-driven workloads

Two themes shaped our AI investments: explainability and in-flow assist.

- Explainable measurement: AI Summaries help teams move faster from "what happened?" to "what should we do?", without forcing deep dives into raw statistics.

- AI in the developer loop: FME MCP tools help developers inspect, compare, and adjust flag states without context switching. This shortens the loop between finding an issue and safely changing a treatment.

- Data where you need it: Warehouse Native Experimentation runs analyses where your data already lives, improving transparency and aligning with the way modern AI and analytics teams operate.

To learn more, watch this video!

Warehouse Native Experimentation (beta)

Warehouse Native Experimentation lets you run analyses directly in your own data warehouse using your assignment and event data for more transparent, flexible measurement. We are pleased to announce that this feature is now available in beta. Customers can request access through their account team and read more about it in our docs.

What else is new in Harness FME

As you can see from all the new features below, we have been running hard and we are accelerating into the turn as we head toward the end of the year. We take pride in the partnerships we have with our customers. As we listen to your concerns, our engineering teams are working hard to implement the features you need to be successful.

October 2025

- FME MCP tools connect feature flags and experiments to AI-assisted IDEs such as Claude Code, Windsurf, Cursor, and VS Code. Explore flags, compare flag definitions, and audit rules conversationally to speed up release workflows.

- OpenFeature providers for Android, iOS, Web, Java, Python, .NET, Node.js, React, and Angular help standardize evaluations and reduce lock-in across services and teams.

- Harness Proxy centralizes and secures outgoing SDK traffic with support for OAuth and mTLS, easing egress-control needs at scale. It also works with other Harness products like Database DevOps.

- Owners as metadata clarifies accountability while edit privileges remain governed by RBAC.

September 2025

- SDK fallback treatments help you avoid unexpected control treatments by offering a centralized, simple, and scalable way to configure fallbacks across your SDKs and applications.

- Experiment entry event filter keeps analysis clean by including only users who actually hit the experiment entry point.

July 2025

- Dimensional analysis on experiments reveals effects by browser, device, region, and more so you can catch segment-level regressions early.

- AI Summarize on experiments and metrics gives fast, accurate summaries for non-technical stakeholders and busy teams.

June 2025

- Rule-based segments target users dynamically using attribute conditions, reducing static list maintenance and making targeting rules easier to reuse.

- Experiment tags improve searchability and at-scale organization.

- Client-side cache controls for Browser, iOS, and Android SDKs let you tune rollout cache expiration and initialization behavior.

Foundation laid earlier in 2025

- Experiments Dashboard for easier setup and multi-treatment analysis.

- Release Agent, rebranded from Switch, adds follow-up Q&A on metric summaries with admin-controlled AI settings.

As always, you can find details on all our new features by reading our release notes.

What customers can expect next

We are excited to add more value for our customers by continuing to integrate Split with Harness to achieve the best of both worlds. Harness CI/CD customers can expect familiar, proven methodologies such as pipelines, RBAC, SSO support, and more to show up in FME. To see the full roadmap and get a sneak peek at what is coming, reach out to your account representative to schedule a call.

Get started

- Attending AWS re:Invent? Come see us at booth #731 and get a live demo.

- Already migrated from Split? Sign in at app.harness.io. If you are an admin, review the RBAC and SSO guides to validate group mappings and SCIM.

- New to Harness FME? Explore the product page and datasheet, then try FME in your environment.

Want the full details? Read the latest FME release notes for all features, dates, and docs.

Check out the Feature Management & Experimentation Summit

Read a comparison of Harness FME with Unleash

When Cloud Providers Have an Outage, Your Feature Flags Shouldn’t

Over the past few weeks, the software industry has experienced multiple cloud outages that have caused widespread disruptions across hundreds of applications and services. When systems went down, the difference between chaos and continuity came down to architecture. In feature management, reliability is not a nice-to-have; it is designed in. When an outage occurs, it’s often not the failure itself that defines the customer experience, but how the system is designed to respond.

During the event, Harness Feature Management & Experimentation (FME) maintained 100% flag-delivery uptime across all regions, with no redeploys, no configuration changes, and no missed evaluations. This wasn't luck. FME was built from the ground up with fault tolerance and continuity in mind. From automatic fallback mechanisms to distributed decision engines and managed streaming infrastructure, every layer of our architecture is designed to keep feature flag delivery resilient, even in the face of unexpected events.

Automatic Fallback: Zero-Touch Continuity

One of the most important architectural principles in FME is graceful degradation: even when one service experiences disruption, the system continues to function. Our SDKs are designed to automatically fall back to polling if there is any issue connecting to the streaming service. This means developers and operators never have to manually intervene or redeploy code during an outage. The fallback happens instantly, preserving continuity and minimizing operational burden. In contrast, many legacy systems on the market rely on manual configuration changes to fall back to polling and restore flag delivery, an approach that adds risk and friction exactly when teams can least afford it.
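To make the streaming-to-polling fallback concrete, here is a minimal Python sketch of the control flow. It is not Harness FME SDK code: the `open_stream` and `poll` callables, the `StreamUnavailable` exception, and the `sync_flags` name are all hypothetical stand-ins for an SDK's internal streaming connection and polling fetch.

```python
import time
from typing import Callable, Dict, Iterator

# Hypothetical snapshot type: flag name -> treatment.
FlagSnapshot = Dict[str, str]


class StreamUnavailable(Exception):
    """Raised by the (hypothetical) streaming connection when it cannot connect or drops."""


def sync_flags(
    open_stream: Callable[[], Iterator[FlagSnapshot]],
    poll: Callable[[], FlagSnapshot],
    cache: FlagSnapshot,
    poll_interval_s: float = 30.0,
    max_polls: int = 1,
) -> str:
    """Keep `cache` current, preferring streaming and degrading to polling.

    Returns the mode that was last used: "streaming" or "polling".
    """
    try:
        for update in open_stream():
            cache.update(update)  # apply pushed changes as soon as they arrive
        return "streaming"
    except StreamUnavailable:
        # No operator action or redeploy: switch to periodic polling automatically.
        for i in range(max_polls):
            cache.update(poll())
            if i + 1 < max_polls:
                time.sleep(poll_interval_s)
        return "polling"
```

A production SDK would also keep retrying the stream in the background and switch back once it recovers; the sketch only shows the degradation path, which is the part that removes the need for manual intervention.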

Resilient Client-Side Decisioning: Real Evaluations, Not Stale Caches

Client-side SDKs are often the first point of impact during a network disruption. In many architectures, these SDKs can serve only cached flag values when connectivity issues arise, leaving new users or sessions without the ability to evaluate flags. Harness FME takes a different approach. Each client SDK functions as a self-contained decision engine, capable of evaluating flag rules locally and automatically switching to polling when needed. Combined with local caching and retrieval from CDN edge locations, this design ensures that even during service interruptions, both existing and new users continue to receive flag evaluations without delay or degradation.
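To illustrate what "evaluating flag rules locally" means, here is a minimal Python sketch of a percentage rollout decided entirely in process, with no network call. The rule format, the `bucket` and `evaluate` names, and the use of SHA-256 are assumptions for illustration; Harness FME SDKs use their own hashing and rule engine.

```python
import hashlib
from typing import List, Tuple

# Hypothetical rule format: ordered (treatment, percentage) pairs summing to 100.
Allocation = List[Tuple[str, int]]


def bucket(user_key: str, flag_name: str, seed: str = "") -> int:
    """Map a user deterministically to a bucket in [0, 99].

    SHA-256 is used only for illustration; it is not the hash FME SDKs use.
    """
    digest = hashlib.sha256(f"{seed}:{flag_name}:{user_key}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def evaluate(user_key: str, flag_name: str, allocation: Allocation,
             default: str = "control") -> str:
    """Evaluate a percentage rollout locally from cached rules."""
    b = bucket(user_key, flag_name)
    upper = 0
    for treatment, pct in allocation:
        upper += pct
        if b < upper:
            return treatment
    return default  # allocation summed to less than 100: the rest get the default
```

Because the bucket depends only on the user key and flag name, a new session for a new user can be evaluated from cached rules alone, and the same user always lands in the same treatment.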

Distributed Streaming Architecture: Built for Continuous Availability

Harness FME’s distributed streaming architecture is engineered for global reach and high availability. If a region or node experiences issues, traffic automatically reroutes to healthy endpoints. Combined with instant SDK fallback to polling, this ensures uninterrupted flag delivery and real-time responsiveness, regardless of the scale of disruption. During the recent outages, as users of our own feature flags, we served each customer their targeted experience with no disruptions.

Separation of Control Plane and Delivery Plane: Reliability at the Core

Even with strong backend continuity, user experience matters. Both the web console and APIs are engineered for graceful degradation. During transient internet instability, a subset of users may experience slowdowns, challenges accessing the web console, or issues posting back flag evaluation records; however, feature flag delivery and evaluation remain unaffected. This separation of control plane and delivery plane ensures that UI performance issues never impact your SDK evaluations and customer traffic. It is a key architectural decision that protects live customer experiences even in volatile network conditions.

The Outcome: Reliability as a Competitive Advantage

Reliability isn’t just about surviving outages - it’s about designing for them. Building for resilience requires intentional architectural choices such as automatic fallback mechanisms, self-sufficient SDKs, and isolation between control and delivery planes. That’s why, at Harness, we are using these opportunities to learn while following best practices to continuously improve our products, minimize the impact of outages on our customers, and deliver uninterrupted feature management at a global scale. It’s not about avoiding every failure; that’s virtually impossible. However, it's essential to ensure that when failure does happen, your product continues to work for your customers.

If you’re brand new to Harness FME, get a demo here or sign up for a free trial today.

DB Performance Testing with Harness FME


Databases have been crucial to web applications since their beginning, serving as the core storage for all functional aspects. They manage user identities, profiles, activities, and application-specific data, acting as the authoritative source of truth. Without databases, the interconnected information driving functionality and personalized experiences would not exist. Their integrity, performance, and scalability are vital for application success, and their strategic importance grows with increasing data complexity. In this article we are going to show you how you can leverage feature flags to compare different databases.

Let's say you want to test and compare two different databases against one another. A common use case is comparing the performance of two of the most popular open source databases: MariaDB and PostgreSQL.


Let's think about how we want to do this. We want to compare our users' experience across these two databases. In this example we will run a 50/50 experiment. In a production environment, you most likely already use one database and would roll out the other to a very small percentage of traffic, such as 90/10 (or even 95/5), to reduce the blast radius of potential issues.

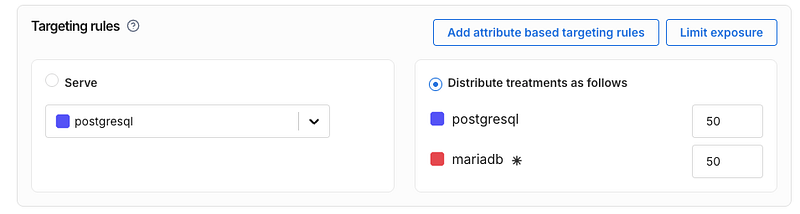

To run this experiment, let's first create a Harness FME feature flag that distributes users 50/50 between MariaDB and PostgreSQL.

For this experiment we need a reasonable amount of sample data in the database. Here we will simply load the same data into both databases. In production, you'd want to build something like a read replica using a CDC (change data capture) tool so that your experimental database matches your production data.

Our code will generate 100,000 rows for this table and load them into both databases before the experiment. That is small enough not to slow queries down on its own, but large enough to reveal differences between the database technologies. The table also covers three data types: text (varchar), numbers, and timestamps.
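The exact schema lives in the screenshot and the linked repository, so the following is only a sketch of how such rows could be generated. The column layout (id, text, numeric amount, timestamp) and the `generate_rows` name are hypothetical; the fixed seed makes both databases receive identical data.

```python
import random
import string
from datetime import datetime, timedelta
from typing import List, Tuple

# Hypothetical row layout: (id, varchar text, numeric value, timestamp).
Row = Tuple[int, str, float, datetime]


def generate_rows(n: int = 100_000, seed: int = 42) -> List[Row]:
    """Build n synthetic rows covering text, number, and timestamp types."""
    rng = random.Random(seed)  # fixed seed so both databases get identical data
    start = datetime(2024, 1, 1)
    rows: List[Row] = []
    for i in range(1, n + 1):
        name = "".join(rng.choices(string.ascii_lowercase, k=12))
        amount = round(rng.uniform(0, 1000), 2)
        created_at = start + timedelta(seconds=rng.randrange(365 * 24 * 3600))
        rows.append((i, name, amount, created_at))
    return rows
```

The same list can then be passed to each driver's DB-API `executemany` with an `INSERT` statement, so any difference in query time comes from the engine rather than the data.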

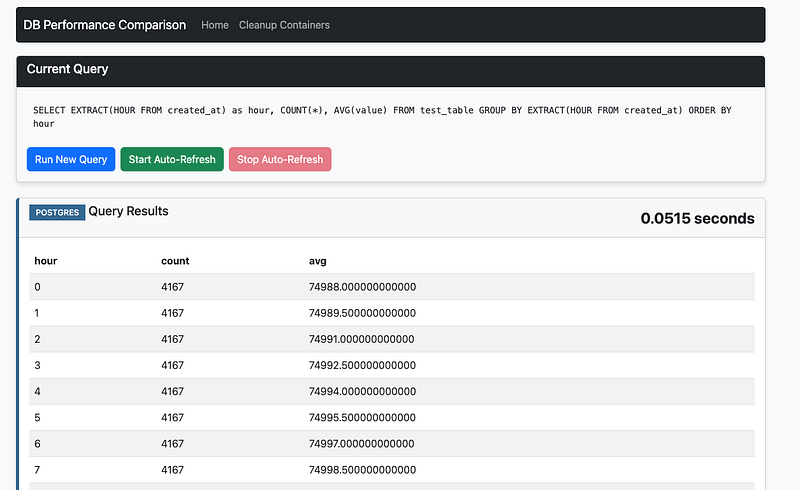

Now let’s make a basic app that simulates making our queries. Using Python we will make an app that executes queries from a list and displays the result.

Below you can see the basic architecture of our design. We will run MariaDB and Postgres on Docker and the application code will connect to both, using the Harness FME feature flag to determine which one to use for the request.

The sample queries we used can be seen below. We are using 5 queries with a variety of SQL keywords. We include joins, limits, ordering, functions, and grouping.

We use the Harness FME SDK to make the decision for each user ID. It determines whether the incoming user gets the Postgres or MariaDB treatment through the SDK's get_treatment method, based on the rules we defined in the Harness FME console above.

Afterwards, within the application, we run the query and then track the query_execution event using the SDK's track method.

See below for some key parts of our Python based app.

This code will initialize our Split (Harness FME) client for the SDK.

We will generate a sample user ID, simply an integer from 1 to 10,000.

Now we need to determine whether our user will use Postgres or MariaDB. We also do some defensive programming here to fall back to a default if the treatment is neither postgres nor mariadb.
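The original snippets for these two steps are not reproduced here, so the following is a sketch under stated assumptions. The `random_user_id` and `choose_database` names and the choice of MariaDB as the fallback are hypothetical. The defensive check matters because the SDK returns the `control` treatment when it cannot evaluate a flag, for example before it is ready.

```python
import random
from typing import Optional

VALID_TREATMENTS = {"postgres", "mariadb"}
DEFAULT_TREATMENT = "mariadb"  # hypothetical fallback database


def random_user_id(rng: random.Random) -> str:
    """Sample user ID: an integer from 1 to 10,000, used as the SDK key."""
    return str(rng.randint(1, 10_000))  # randint is inclusive on both ends


def choose_database(treatment: Optional[str]) -> str:
    """Map a treatment to a database, defaulting on anything unexpected.

    Covers "control", empty values, and any treatment name not in the experiment.
    """
    normalized = (treatment or "").strip().lower()
    return normalized if normalized in VALID_TREATMENTS else DEFAULT_TREATMENT
```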

Now let's run the query and track the query_execution event. In the app you can select the query you want to run; if you don't, it will run one of the five sample queries at random.

The db_manager class maintains the connections to the databases and tracks the execution time for each query. Here we can see it using Python's time module to measure how long the query took. The object that db_manager returns includes this value.
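A db_manager along these lines could look like the sketch below. The `DBManager` and `QueryResult` names are hypothetical, and `time.perf_counter` is our choice of clock (monotonic and high resolution), not necessarily what the original app uses. It relies only on the DB-API 2.0 cursor methods shared by the common Postgres and MariaDB drivers; the test runs it against an in-memory SQLite database as a stand-in.

```python
import time
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class QueryResult:
    rows: List[tuple]
    execution_time_ms: float


class DBManager:
    """Hypothetical db_manager: holds one DB-API connection per engine."""

    def __init__(self, connections: Dict[str, Any]):
        self.connections = connections  # e.g. {"postgres": pg_conn, "mariadb": maria_conn}

    def run_query(self, engine: str, sql: str) -> QueryResult:
        cur = self.connections[engine].cursor()
        start = time.perf_counter()
        cur.execute(sql)
        rows = cur.fetchall()  # include fetch time so every engine is measured alike
        elapsed_ms = (time.perf_counter() - start) * 1000
        cur.close()
        return QueryResult(rows=rows, execution_time_ms=elapsed_ms)
```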

Tracking the event lets us see which database was faster for our users. The Harness FME SDK's track method accepts both a value and properties. In this case we supply the query execution time as the value and the query that ran as a property of the event, which can be used later for filtering and, as we will see, dimensional analysis.
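Putting the decision and the tracking together, a request handler could look like this sketch. In the Split/Harness FME Python SDK the client comes from `get_factory(sdk_key).client()`, and the positional order `get_treatment(key, flag_name)` and `track(key, traffic_type, event_type, value, properties)` matches that SDK. Everything else here is assumed: the `FlagClient` protocol, the `handle_request` name, the `run_query` callable returning milliseconds, and the `"query"` property key. The protocol lets us exercise the flow with a stub instead of a live SDK.

```python
from typing import Any, Callable, Dict, Optional, Protocol

FLAG_NAME = "db_performance_comparison"
EVENT_TYPE = "query_execution"
TRAFFIC_TYPE = "user"


class FlagClient(Protocol):
    """The two SDK client methods used here (positional shape of the Python SDK)."""

    def get_treatment(self, key: str, feature_flag_name: str) -> str: ...

    def track(self, key: str, traffic_type: str, event_type: str,
              value: Optional[float] = None,
              properties: Optional[Dict[str, Any]] = None) -> bool: ...


def handle_request(client: FlagClient, user_id: str, sql: str,
                   run_query: Callable[[str, str], float]) -> str:
    """Pick a database via the flag, run the query, and record its latency."""
    treatment = client.get_treatment(user_id, FLAG_NAME)
    engine = treatment if treatment in ("postgres", "mariadb") else "mariadb"
    elapsed_ms = run_query(engine, sql)  # hypothetical helper returning milliseconds
    # value = execution time (what the metric averages);
    # properties = the query text, used for filtering and dimensional analysis.
    client.track(user_id, TRAFFIC_TYPE, EVENT_TYPE, elapsed_ms, {"query": sql})
    return engine
```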

You can see a screenshot of what the app looks like below. A simple Bootstrap-themed frontend handles the display.


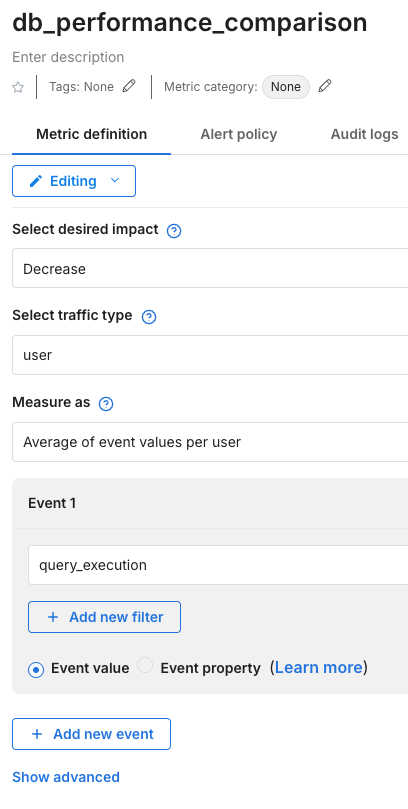

The last step here is that we need to build a metric to do the comparison.

Here we built a metric called db_performance_comparison. In this metric we set the desired impact: we want the query time to decrease. The traffic type is user.


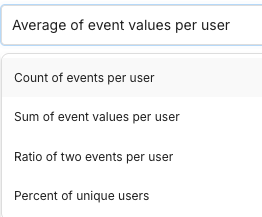

One of the most important questions is what we select for the Measure as option. There are a few options here, as shown below.


We want to compare across users, and are interested in faster average query execution times, so we select Average of event values per user. Count, sum, ratio, and percent don’t make sense here.
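To show why "average of event values per user" differs from a plain average over all events, here is a minimal Python sketch of the arithmetic: each user's query times are averaged first, then those per-user averages are averaged within each treatment. The event tuple shape and function names are hypothetical, and this is only the point estimate; Harness FME's statistical engine additionally computes significance, which the sketch omits.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# Hypothetical event shape: (user_id, treatment, query_time_ms).
Event = Tuple[str, str, float]


def per_user_averages(events: Iterable[Event]) -> Dict[str, Dict[str, float]]:
    """Average each user's event values: {treatment: {user_id: avg_ms}}."""
    values = defaultdict(list)
    for user_id, treatment, ms in events:
        values[(treatment, user_id)].append(ms)
    out: Dict[str, Dict[str, float]] = defaultdict(dict)
    for (treatment, user_id), ms_list in values.items():
        out[treatment][user_id] = mean(ms_list)
    return dict(out)


def relative_change(events: Iterable[Event], baseline: str, candidate: str) -> float:
    """Relative change of the mean per-user average (-0.84 means an 84% drop)."""
    avgs = per_user_averages(events)
    base = mean(avgs[baseline].values())
    cand = mean(avgs[candidate].values())
    return (cand - base) / base
```

Averaging per user first means that a user who happens to fire many queries counts once, rather than dominating the comparison.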

Lastly, we are measuring the query_execution event.

We added this metric as a key metric for our db_performance_comparison feature flag.


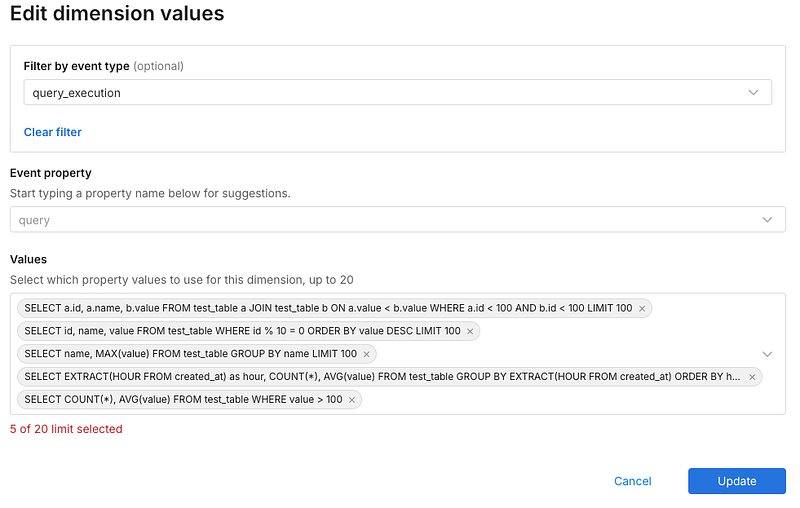

One additional thing we will want to do is set up dimensional analysis, like we mentioned above. Dimensional analysis will let us drill down into the individual queries to see which one(s) were more or less performant on each database. We can have up to 20 values in here. If we’ve already been sending events they can simply be selected as we keep track of them internally — otherwise, we will input our queries here.
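Conceptually, dimensional analysis splits the same comparison by an event property, here the `query` property sent with each track call. The sketch below is a simplified illustration with hypothetical names: it compares plain per-query means and skips the per-user averaging and statistics that Harness FME applies.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# Hypothetical event shape: (treatment, query_text, query_time_ms);
# query_text comes from the event's "query" property.
DimEvent = Tuple[str, str, float]


def breakdown_by_query(events: Iterable[DimEvent], baseline: str,
                       candidate: str) -> Dict[str, float]:
    """Relative change in mean query time per query (negative = candidate faster)."""
    groups = defaultdict(lambda: defaultdict(list))
    for treatment, query, ms in events:
        groups[query][treatment].append(ms)
    result: Dict[str, float] = {}
    for query, by_treatment in groups.items():
        # Only compare queries that were observed under both treatments.
        if baseline in by_treatment and candidate in by_treatment:
            base = mean(by_treatment[baseline])
            result[query] = (mean(by_treatment[candidate]) - base) / base
    return result
```

A result such as `{"Q2": 0.56}` would correspond to a query that is 56% slower on the candidate engine, which is the kind of per-query signal the overall average can hide.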


Now that we have our dimensions, our metric, and our application set to use our feature flag, we can now send traffic to the application.

For this example, I’ve created a load testing script that uses Selenium to load up my application. This will send enough traffic so that I’ll be able to get significance on my db_performance_comparison metric.

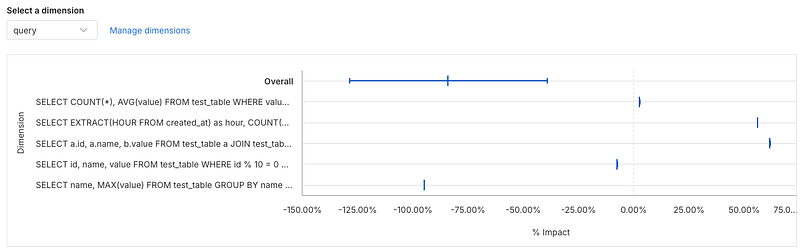

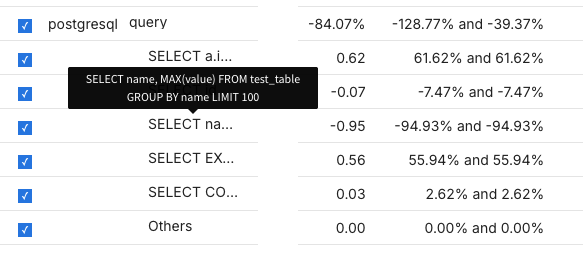

The results were interesting. On the metrics impact screen, we can see that Postgres resulted in an 84% drop in query time.

Even more, if we drill down to the dimensional analysis for the metric, we can see which queries were faster and which were actually slower using Postgres.

So some queries were faster and some were slower, but the faster queries were MUCH faster. This allows you to pinpoint the performance you would get by changing database engines.

You can also see the statistics in a table below; the query with the most significant speedup was the one that used grouping and limits.

However, the query that used a join was much slower in Postgres. It is the query that starts with SELECT a.i...; because it is a self-join, the table alias is a. The query that uses EXTRACT (an SQL date function) was also nearly 56% slower.

Conclusion

In summary, running experiments on backend infrastructure such as databases with Harness FME can yield significant insights and performance improvements. As demonstrated, testing MariaDB against PostgreSQL revealed an 84% drop in query time with Postgres. Furthermore, dimensional analysis let us identify which queries benefited most, specifically those involving grouping and limits, and which queries were slower. For example, an application that relies heavily on join-based queries or SQL date functions like EXTRACT might find that MariaDB is faster than Postgres for its workload, in which case a migration would not make sense. This level of detailed performance data enables you to make informed decisions about your database engine and infrastructure, leading to better optimization, efficiency, and ultimately, user experience. Harness FME provides a robust platform for conducting such experiments and extracting actionable insights.

The full code for our experiment lives here: https://github.com/Split-Community/DB-Speed-Test

Streamline feature management with Harness MCP and Claude Code

Managing feature flags can be complex, especially across multiple projects and environments. Teams often need to navigate dashboards, APIs, and documentation to understand which flags exist, their configurations, and where they are deployed. What if you could handle these tasks using simple natural language prompts directly within your AI-powered IDE?

Harness Model Context Protocol (MCP) tools make this possible. By integrating with Claude Code, Windsurf, Cursor, or VS Code, developers and product managers can discover projects, list feature flags, and inspect flag definitions, all without leaving their development environment.

Using one of many AI-powered IDE agents, you can query your feature management data in natural language. The MCP tools retrieve your projects and flags and return structured outputs that the agent interprets to answer questions accurately and make recommendations for release planning.

With these agents, non-technical stakeholders can query and understand feature flags without deeper technical expertise. This approach reduces context switching, lowers the learning curve, and enables teams to make faster, data-driven decisions about feature management and rollout.

According to Harness and LeadDev’s survey of 500 engineering leaders in 2024:

82% of teams that are successful with feature management actively monitor system performance and user behavior at the feature level, and 78% prioritize risk mitigation and optimization when releasing new features.

Harness MCP tools help teams address these priorities by enabling developers and release engineers to audit, compare, and inspect feature flags across projects and environments in real time, aligning with industry best practices for governance, risk mitigation, and operational visibility.

Simplifying Feature Management Workflows

Traditional feature flag management practices can present several challenges:

- Complexity: Understanding flag configurations and environment setups can be time-consuming.

- Context Switching: Teams frequently shift between dashboards, APIs, and documentation.

- Governance and Consistency: Ensuring flags are correctly configured across environments requires manual auditing.

Harness MCP tools address these pain points by providing a conversational interface for interacting with your FME data, democratizing access to feature management insights across teams.

How MCP Tools Work for Harness FME

The FME MCP integration supports several capabilities:

You can also generate quick summaries of flag configurations or compare flag settings across environments directly in Claude Code using natural language prompts.

Some example prompts to get you started include the following:

"List all feature flags in the `checkout-service` project."

"Describe the rollout strategy and targeting rules for `enable_new_checkout`."

"Compare the `enable_checkout_flow` flag between staging and production."

"Show me all active flags in the `payment-service` project."

"Show me all environments defined for the `checkout-service` project."

"Identify all flags that are fully rolled out and safe to remove from code."

These prompts produce actionable insights in Claude Code (or your IDE of choice).

Getting Started

To start using Harness MCP tools for FME, ensure you have access to Claude Code and the Harness platform with FME enabled. Then, interact with the tools via natural language prompts to discover projects, explore flags, and inspect flag configurations.

Installation & Configuration

Harness MCP tools transform feature management into a conversational, AI-assisted workflow, making it easier to audit and manage your feature flags consistently across environments.

Prerequisites

- Go version 1.23 or later

- Claude Code (paid version) or another MCP-compatible AI tool

- Access to the Harness Platform with Feature Management & Experimentation (FME) enabled

- A Harness API key for authentication

Build the MCP Server Binary

- Clone the Harness MCP Server GitHub repository.

- Build the binary from source.

- Copy the binary to a directory accessible by Claude Code.

Configure Claude Code

- Open your Claude configuration file at `~/.claude.json`. If it doesn't exist yet, you can create it.

- Add the Harness FME MCP server configuration:

```json
{
  ...
  "mcpServers": {
    "harness": {
      "command": "/path/to/harness-mcp-server",
      "args": [
        "stdio",
        "--toolsets=fme"
      ],
      "env": {
        "HARNESS_API_KEY": "your-api-key-here",
        "HARNESS_DEFAULT_ORG_ID": "your-org-id",
        "HARNESS_DEFAULT_PROJECT_ID": "your-project-id",
        "HARNESS_BASE_URL": "https://your-harness-instance.harness.io"
      }
    }
  }
}
```

- Save the file and restart Claude Code for the changes to take effect.
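If you prefer not to hand-edit the JSON, a small script can merge the `harness` entry into an existing configuration while leaving every other setting untouched. This is a hypothetical helper, not part of the Harness MCP Server; the server entry it writes mirrors the configuration above, and you pass in the path of your Claude configuration file.

```python
import json
from pathlib import Path
from typing import Dict


def add_harness_mcp_server(config_path: Path, binary: str, env: Dict[str, str]) -> dict:
    """Add or replace the "harness" MCP server entry, keeping all other settings."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    servers = config.setdefault("mcpServers", {})
    servers["harness"] = {
        "command": binary,
        "args": ["stdio", "--toolsets=fme"],
        "env": dict(env),
    }
    config_path.write_text(json.dumps(config, indent=2))
    return config
```

The file ends up containing your API key, so keep its permissions restricted to your user.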

To configure additional MCP-compatible AI tools like Windsurf, Cursor, or VS Code, see the Harness MCP Server documentation, which includes detailed setup instructions for all supported platforms.

Verify Installation

- Open Claude Code (or the AI tool that you configured).

- Navigate to the Tools/MCP section.

- Verify Harness tools are available.

What’s Next

Feature management at scale is a common operational challenge. With Harness MCP tools and AI-powered IDEs, teams can already discover, inspect, and summarize flag configurations conversationally, reducing context switching and speeding up audits.

Looking ahead, this workflow extends toward a DevOps-focused approach, where developers and release engineers can prompt tools like Claude Code to identify inconsistencies or misconfigurations in feature flags across environments and take action to address them.

By embedding these capabilities directly into the development workflow, feature management becomes more operational and code-aware, enabling teams to maintain governance and reliability in real time.

For more information about the Harness MCP Server, see the Harness MCP Server documentation and the GitHub repository. If you’re brand new to Harness FME, sign up for a free trial today.

Split Embraces OpenFeature

Driving Feature Flag Standardization with OpenFeature

Split is excited to announce participation in OpenFeature, an initiative led by Dynatrace and recently submitted to the Cloud Native Computing Foundation (CNCF) for consideration as a sandbox program.

As part of an effort to define a new open standard for feature flag management, this project brings together an industry consortium of top leaders. Together, we aim to provide a vendor-neutral approach to integrating with feature flagging and management solutions. By defining a standard API and SDK for feature flagging, OpenFeature is meant to reduce the friction commonly experienced today, with the end goal of helping all development teams achieve reliable release cycles at scale and, ultimately, move toward a progressive delivery model.

At Split, we believe this effort is a strong signal that feature flagging is truly going “mainstream” and will be the standard best practice across all industries in the near future.

What Is Feature Flagging?

Feature flagging is a simple, yet powerful technique that can be used for a range of purposes to improve the entire software development lifecycle. Other common terms include things like “feature toggle” or “feature gate.” Despite sometimes going by different names, the basic concept underlying feature flags is the same:

A feature flag is a mechanism that allows you to decouple a feature release from a deployment and choose between different code paths in your system at runtime.

Because feature flags enable software development and delivery teams to turn functionality on and off at runtime without deploying new code, feature management has become a mission-critical component for delivering cloud-native applications. In fact, feature management supports a range of practices rooted in achieving continuous delivery, and it is especially key for progressive delivery’s goal of limiting blast radius by learning early.

Think about all the use cases. Feature flags allow you to run controlled rollouts, automate kill switches, A/B test in production, implement entitlements, manage large-scale architectural migrations, and more. More fundamentally, feature flags enable trunk-based development, which eliminates the need to maintain multiple long-lived feature branches within your source code, simplifying and accelerating release cycles.

Where Does Split Come in?

While feature flags alone are very powerful, organizations that use flagging at scale quickly learn that additional functionality is needed for a proper, long-term feature management approach. This requires functionality like a management interface, the ability to perform controlled rollouts, automated scheduling, permissions and audit trails, integration into analytics systems, and more. For companies who want to start feature flagging at scale, and eventually move towards a true progressive delivery model, this is where companies like Split come into the mix.

Split offers full support for progressive delivery. We provide not only sophisticated targeting for controlled rollouts but also flag-aware monitoring to protect your KPIs for every release, as well as feature-level experimentation to optimize for impact. We also invite you to learn more about our enterprise readiness, API-first approach, and leading integration ecosystem.

So, Why Is OpenFeature Needed?

Feature flag tools like Split each use their own proprietary SDKs, with frameworks, definitions, and data/event types unique to their platform. Vendors across the feature management landscape differ in how they define, document, and integrate feature flags with third-party solutions, and these differences can cause issues.

For one, every vendor ends up maintaining a library of feature flagging SDKs across various tech stacks. That is a lot of effort, and it is duplicated by each feature management solution. Additionally, while feature management solutions are widely accepted as essential in modern software delivery, these differences make the barrier to entry seem too high for some teams. Standardizing feature management will let organizations worry less about integrating across their tech stack, so they can just get started using feature flags!

Ultimately, we see OpenFeature as an important opportunity to promote good software practices through developing a vendor-neutral approach and building greater feature flag awareness.

Introducing OpenFeature

Created to support a robust feature flag ecosystem using cloud-native technologies, OpenFeature is a collective effort across multiple vendors and verticals. The mission of OpenFeature is to improve the software development lifecycle, no matter the size of the project, by standardizing feature flagging for developers.

By defining a standard API and providing a common SDK, OpenFeature will provide a language-agnostic, vendor-neutral standard for feature flagging. This provides flexibility for organizations, and their application integrators, to choose the solutions that best fit their current requirements while avoiding code-level lock-in.

Feature management solutions, like Split, will implement “providers” which integrate into the OpenFeature SDK, allowing users to rely on a single, standard API for flag evaluation across every tech stack. Ultimately, the hope is that this standardization will provide the confidence for more development teams to get started with feature flagging.
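The provider idea can be illustrated with a deliberately simplified Python sketch: application code depends on one client API, and a vendor-specific provider plugs in behind it. The `FlagProvider`, `InMemoryProvider`, and `FlagClient` classes and their method names are hypothetical and are not the OpenFeature SDK's actual API; the real SDKs and provider interfaces are documented at https://openfeature.dev.

```python
from typing import Dict, Protocol


class FlagProvider(Protocol):
    """What a vendor implements (hypothetical, simplified provider interface)."""

    def resolve_string(self, flag_key: str, default: str, user_key: str) -> str: ...


class InMemoryProvider:
    """Toy provider backed by a dict; a vendor provider would call its own SDK here."""

    def __init__(self, flags: Dict[str, Dict[str, str]]):
        self.flags = flags  # {flag_key: {user_key: value}}

    def resolve_string(self, flag_key: str, default: str, user_key: str) -> str:
        return self.flags.get(flag_key, {}).get(user_key, default)


class FlagClient:
    """The single API that application code calls, regardless of vendor."""

    def __init__(self, provider: FlagProvider):
        self._provider = provider

    def set_provider(self, provider: FlagProvider) -> None:
        self._provider = provider  # swap vendors without touching call sites

    def get_string(self, flag_key: str, default: str, user_key: str) -> str:
        try:
            return self._provider.resolve_string(flag_key, default, user_key)
        except Exception:
            return default  # an evaluation error never breaks the caller
```

Because call sites only see `FlagClient`, switching from one vendor's provider to another is a one-line `set_provider` change, which is the code-level lock-in OpenFeature aims to remove.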

Final Thoughts

“OpenFeature is a timely initiative to promote a standardized implementation of feature flags. Time and again we’ve seen companies reinventing the wheel and hand-rolling their feature flags. At Split, we believe that every feature should be behind a feature flag, and that feature flags are best when paired with data. OpenFeature support for OpenTelemetry is a great step in the right direction,” said Pato Echagüe, Split CTO and sitting member of the OpenFeature consortium.

We are confident in the power of feature flagging and know that the future of software delivery will be done progressively using feature management solutions, like Split. Our hope is that OpenFeature provides a win for both development teams as well as vendors, including feature management tools and 3rd party solutions across the tech stack. Most importantly, this initiative will continue to push forward the concept of feature flagging as a standard best practice for all modern software delivery.

To learn more about OpenFeature, we invite you to visit: https://openfeature.dev.

Get Split Certified

Split Arcade includes product explainer videos, clickable product tutorials, manipulatable code examples, and interactive challenges.

Switch It On With Split

The Split Feature Data Platform™ gives you the confidence to move fast without breaking things. Set up feature flags and safely deploy to production, controlling who sees which features and when. Connect every flag to contextual data, so you can know if your features are making things better or worse and act without hesitation. Effortlessly conduct feature experiments like A/B tests without slowing down. Whether you’re looking to increase your releases, to decrease your MTTR, or to ignite your dev team without burning them out, Split is both a feature management platform and a partnership to revolutionize the way work gets done. Switch on a free account today, schedule a demo, or contact us with further questions.

The benefits of streaming architecture for feature flags

Delivering feature flags with lightning speed and reliability has always been one of our top priorities at Split. We’ve continuously improved our architecture as we’ve served more and more traffic over the past few years (we served half a trillion flags last month!). To support this growth, we have relied on a stable and simple polling architecture to propagate all feature flag changes to our SDKs.

At the same time, we’ve maintained our focus on honoring one of our company values, “Every Customer”. We listen to customer feedback and weigh it during each of our quarterly prioritization sessions. Over the course of those sessions, we recognized that propagating changes to SDKs immediately was important to many customers, so we decided to invest in a real-time streaming architecture.

Our Approach to Streaming Architecture

Early this year, we began work on a new streaming architecture that broadcasts feature flag changes immediately. We plan to make it the default once it is fully rolled out over the next two months.

For this streaming architecture, we chose Server-Sent Events (SSE from now on) as the preferred mechanism. SSE allows a server to push data asynchronously to a client (which may itself be a server) once a connection is established. SSE runs over standard HTTP(S), and it has an advantage over some other protocols: browsers expose a standard JavaScript client API for it, EventSource, which is part of the HTML5 standard and implemented in most modern browsers.
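The SSE wire format itself is plain text. As an illustration, here is a minimal Python parser for a complete `text/event-stream` payload that follows the basic HTML5 rules. It handles only the generic format, not the content of Split's own messages, and `parse_sse` and `SSEEvent` are hypothetical names. In the browser, EventSource does this parsing (plus reconnection) for you.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SSEEvent:
    event: str
    data: str
    id: Optional[str] = None


def parse_sse(stream_text: str) -> List[SSEEvent]:
    """Parse a complete text/event-stream payload into events.

    Handles 'field: value' lines, ':' comment lines (often used as
    keep-alives), and the blank line that dispatches an event.
    """
    events: List[SSEEvent] = []
    event_type, data_lines = "", []
    last_event_id: Optional[str] = None  # per the spec, the last ID persists
    # splitlines() also splits on a few Unicode separators the spec does not;
    # acceptable for a sketch.
    for line in stream_text.splitlines():
        if line == "":
            # Blank line: dispatch the buffered event only if it carried data.
            if data_lines:
                events.append(SSEEvent(event_type or "message", "\n".join(data_lines), last_event_id))
            event_type, data_lines = "", []
            continue
        if line.startswith(":"):
            continue  # comment / keep-alive line
        field, sep, value = line.partition(":")
        if sep and value.startswith(" "):
            value = value[1:]  # strip one leading space after the colon
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
        elif field == "id":
            last_event_id = value
        # Other fields (e.g. 'retry') are ignored in this sketch.
    # An event not terminated by a blank line is incomplete and is discarded.
    return events
```

Multiple `data:` lines in one event are joined with newlines, and keep-alive comments never produce events, which matches the small keep-alive traffic an open SSE connection carries.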

While real-time streaming using SSE will be the default going forward, customers will still be able to choose polling through the SDK configuration.

Streaming Architecture Performance

Running a benchmark to measure latencies over the Internet is always tricky and controversial because network conditions vary widely. For that reason, describing the testing scenario is a key part of any such benchmark.

We created several testing scenarios that measured the latency from the moment a feature flag (split) change was made until:

- The push notification arrived

- The last piece of the message payload was received

We then ran this test several times from different locations to see how latency varies from one place to another.

In all those scenarios, the push notifications arrived within a few hundred milliseconds, and the full message containing all the feature flag changes consistently arrived in under a second. This last measurement includes the time until the last byte of the payload arrives.
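Each of these measurements reduces to two subtractions per run. Below is a minimal Python sketch, assuming each run records three timestamps from synchronized clocks (skew between the machine making the change and the receiving client would distort the result). The `Run` and `summarize` names are hypothetical, and no measured data is included.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class Run:
    """Timestamps (seconds since the epoch) captured for one benchmark run."""
    change_made: float
    push_arrived: float
    payload_complete: float  # last byte of the message payload received


def summarize(runs: List[Run]) -> Dict[str, float]:
    """Compute push and full-payload latencies, in milliseconds."""
    push_ms = [(r.push_arrived - r.change_made) * 1000 for r in runs]
    full_ms = [(r.payload_complete - r.change_made) * 1000 for r in runs]
    return {
        "push_median_ms": median(push_ms),
        "push_max_ms": max(push_ms),
        "full_median_ms": median(full_ms),
        # The max is what a claim like "consistently under a second" must bound.
        "full_max_ms": max(full_ms),
    }
```

Running the same summary per test location makes it easy to compare how latency varies from one place to another.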

As we move toward general availability of this functionality, we’ll keep running these benchmarks, including from new locations, so we can continue tuning the system for performance and latency. So far we are pleased with the results, and we look forward to rolling it out to everyone soon.

Choosing When Streaming or Polling Is Best for You

Both streaming and polling are reliable, high-performance ways to serve splits (feature flags) to your apps.

We are making streaming the default mode because it offers:

- Immediate propagation of flag changes.

- Reduced network traffic, because the server sends data only when there is something to send (aside from a small amount of keep-alive traffic).

- Native browser support for SSE, including built-in handling of reconnections.

If the SDK detects any issues with the streaming service, it falls back to polling.
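The fallback behavior can be pictured as a small state machine. In this Python sketch, `SyncManager`, `stream_healthy`, and `poll_once` are hypothetical, injected for illustration; they are not Split SDK APIs. Retrying streaming after a cool-down is also an assumption of the sketch, not documented SDK behavior.

```python
import time
from typing import Callable, Optional


class SyncManager:
    """Prefer streaming; fall back to polling when the stream is unhealthy."""

    def __init__(
        self,
        stream_healthy: Callable[[], bool],
        poll_once: Callable[[], None],
        retry_stream_after_s: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._stream_healthy = stream_healthy
        self._poll_once = poll_once
        self._retry_after = retry_stream_after_s
        self._clock = clock
        self._failed_at: Optional[float] = None
        self.mode = "streaming"

    def tick(self) -> str:
        """Run one sync step and return the mode that delivered updates."""
        now = self._clock()
        # After a cool-down, try streaming again instead of polling forever.
        if (
            self.mode == "polling"
            and self._failed_at is not None
            and now - self._failed_at >= self._retry_after
        ):
            self.mode = "streaming"
        if self.mode == "streaming":
            try:
                healthy = self._stream_healthy()
            except Exception:
                healthy = False  # treat errors like an unhealthy stream
            if healthy:
                return "streaming"
            self.mode = "polling"
            self._failed_at = now
        # Polling fallback: fetch the latest flag data over plain HTTP.
        self._poll_once()
        return "polling"
```

Injecting the clock keeps the logic testable without real waiting.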

In some cases, polling is preferable. In polling mode, rather than reacting to a push message, the client asks the server for new data at a user-defined interval. The benefits of a polling approach include:

- Easier to scale, stateless, and less memory-demanding, because each connection is treated as an independent request.

- More tolerant of unreliable connectivity environments, such as mobile networks.

- Avoids security concerns around keeping connections open through firewalls.
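The polling loop can be sketched in a few lines of Python. `fetch` is a hypothetical call that returns the server's latest version number plus any changes newer than the version the client already has. `iterations` bounds the loop only so the example terminates; a real poller runs until shut down. Because every request carries the version the client already holds, the server needs no per-connection state.

```python
import time
from typing import Callable, Optional, Tuple


def poll_for_changes(
    fetch: Callable[[int], Tuple[int, Optional[dict]]],
    apply: Callable[[dict], None],
    interval_s: float,
    iterations: int,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Ask the server for new data every `interval_s` seconds.

    Returns the last version applied (-1 if nothing was ever applied).
    """
    version = -1
    for i in range(iterations):
        latest, changes = fetch(version)  # independent, stateless request
        if changes is not None and latest > version:
            apply(changes)
            version = latest
        if i < iterations - 1:
            sleep(interval_s)  # the user-defined polling interval
    return version
```

The interval is the trade-off knob: shorter intervals propagate changes sooner at the cost of more requests, most of which return nothing new.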

Streaming Architecture and an Exciting Future for Split

We are excited about the capabilities this new streaming architecture brings to the delivery of feature flag changes. We’re rolling it out in stages starting in early May. If you are interested in early access to this functionality, contact your Split account manager or email support at support@split.io to join the beta.

To learn about other upcoming features and be the first to see all our content, we’d love to have you follow us on Twitter!

Overcoming Experimentation Obstacles in B2B

Determine the Optimal Traffic Type

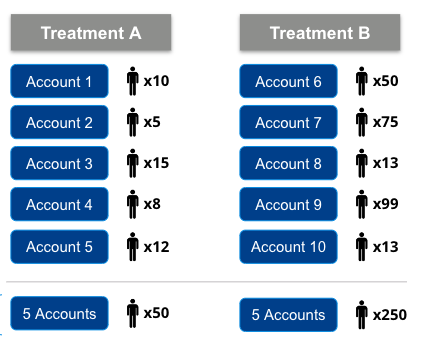

Consider the advantages and disadvantages of using a tenant (e.g., account-based) traffic type versus a conventional user traffic type for each experiment. Unless it is crucial to provide a consistent experience for all users within a specific account, opt for a user traffic type to facilitate experimentation and measurement. Because each account usually contains multiple users, this will significantly increase your sample size, unlocking greater potential for insights and analysis.

Important to note: In Split, the traffic type for an experiment can be decided on a case-by-case basis, depending on the feature change, the test’s success metrics, and the sample size needed.
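The traffic type decides which key is hashed into a treatment. The Python sketch below illustrates this with deterministic bucketing: keying on the account ID gives every user in an account the same treatment, while keying on the user ID randomizes each user independently. SHA-256 is a stand-in chosen for illustration, not the hashing algorithm Split uses, and `bucket`, `treatment`, and `assign_users` are hypothetical helpers.

```python
import hashlib
from typing import Dict


def bucket(key: str, salt: str) -> int:
    """Deterministically map a key to a bucket in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def treatment(key: str, salt: str, on_percent: int = 50) -> str:
    # The same key and salt always land in the same bucket.
    return "on" if bucket(key, salt) < on_percent else "off"


def assign_users(users: Dict[str, str], salt: str, traffic_type: str) -> Dict[str, str]:
    """Assign treatments; `users` maps user_id -> account_id.

    traffic_type 'user' hashes the user ID; 'account' hashes the account ID,
    so all users of one account share a treatment.
    """
    result = {}
    for user_id, account_id in users.items():
        key = user_id if traffic_type == "user" else account_id
        result[user_id] = treatment(key, salt)
    return result
```

With account keys, the number of independent randomization units is the number of accounts, not the number of users, which is why a user traffic type yields a larger sample.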

Even if using a tenant traffic type is the only logical choice for your experiment, there are strategies you can employ to increase the likelihood of a successful (i.e., statistically significant) test.

Make a Plan for Lower Sample Sizes

Utilize the 10 Tips for Running Experiments With Low Traffic guide. You can thank us later!

Normalize Data for Tenant (Account) Traffic Types

Split uses its deterministic hashing algorithm to ensure that a 50/50 experiment divides tenants according to those percentages, and its Sample Ratio Mismatch calculator checks that the division holds. However, neither accounts for the fact that some tenants have more users than others.

This can result in an unbalanced user allocation across treatments, as shown below, using “Accounts” as the tenant type.

The following tips can be applied to normalize the data when users aren’t balanced across tenants:

- Utilize a “percent of” metric type: Use this metric type when the event you want to measure only needs to occur once per tenant to be considered a success (e.g., percent of accounts that upgraded plans). Because each tenant counts once, this normalizes the data without requiring users to be balanced across treatments.

- Utilize a “ratio of two events” metric type: Use this metric type when measuring total engagement volume with a feature. This lets you control the numerator and denominator to equalize the metric data.

A reminder: The numerator is set to the event you want to count (e.g., number of clicks to “download desktop app”). The denominator is set to an event that occurs leading up to the numerator event (e.g., number of impressions or screen views where the user is prompted to “download desktop app”). The denominator can also be a generic event that tracks the number of users who saw the treatment.
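Split computes these metric types for you; the Python sketch below only illustrates the arithmetic behind them. It assumes a hypothetical flattened event record of `(account_id, treatment, event_type)`, and the function and event names are illustrative. The percent-of metric counts each account at most once, so a large account weighs the same as a small one, while the ratio metric divides numerator events by denominator events within each treatment.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical flattened record: (account_id, treatment, event_type)
Event = Tuple[str, str, str]


def percent_of_accounts(events: Iterable[Event], exposed: Dict[str, str], success_event: str) -> Dict[str, float]:
    """Percent of accounts in each treatment with at least one `success_event`.

    `exposed` maps account_id -> treatment for every account in the experiment.
    """
    successes = defaultdict(set)
    for account_id, treatment, event_type in events:
        if event_type == success_event:
            successes[treatment].add(account_id)  # a set: each account counts once
    totals = defaultdict(int)
    for treatment in exposed.values():
        totals[treatment] += 1
    return {t: 100.0 * len(successes[t]) / n for t, n in totals.items()}


def ratio_of_events(events: Iterable[Event], numerator: str, denominator: str) -> Dict[str, float]:
    """Numerator events per denominator event in each treatment."""
    counts = defaultdict(lambda: [0, 0])
    for _account_id, treatment, event_type in events:
        if event_type == numerator:
            counts[treatment][0] += 1
        elif event_type == denominator:
            counts[treatment][1] += 1
    return {t: num / den for t, (num, den) in counts.items() if den > 0}
```

For the “download desktop app” example, the numerator would be the click event and the denominator the prompt-view event.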

If you follow these steps, you should be able to overcome most obstacles when running a B2B experiment. And remember: Split offers the unique flexibility to run experiments based on the traffic type that suits your needs. Learn more here.

Serverless Applications Powered by Split Feature Flags

The concept of serverless computing (https://en.wikipedia.org/wiki/Serverless_computing), also called Functions as a Service (FaaS), is fast becoming a trend in software development. This blog post highlights steps and best practices for integrating Split feature flags into a serverless environment.

A quick look into Serverless Architecture

Serverless architectures enable you to add custom logic to other provider services, or to break up your system (or just part of it) into a set of event-driven, stateless functions. Each function executes on a certain trigger, performs some processing, and acts on the result: sending it to the next function in the pipeline, returning it as the result of a request, or storing it in a database. One interesting use case for FaaS is image processing, where you may need to validate data before storing it in a database, retrieve assets from an S3 bucket, and so on.
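As a sketch of one such event-driven, stateless function, here is a minimal handler for the image-processing case, written in Python (Lambda also supports Python with a `handler(event, context)` signature; this post itself uses Node.js). It reads the bucket name, object key, and size from the S3 event notification record layout and sorts uploads into accepted and rejected lists. The allowed extensions and the 10 MiB limit are hypothetical validation rules.

```python
from typing import Any, Dict, List

ALLOWED_EXTENSIONS = (".jpg", ".jpeg", ".png")  # hypothetical rule
MAX_BYTES = 10 * 1024 * 1024  # hypothetical 10 MiB limit


def validate(record: Dict[str, Any]) -> bool:
    """Check one S3 notification record against the upload rules."""
    key = record["s3"]["object"]["key"].lower()
    size = record["s3"]["object"].get("size", 0)
    return key.endswith(ALLOWED_EXTENSIONS) and 0 < size <= MAX_BYTES


def handler(event: Dict[str, Any], context: Any) -> Dict[str, List[str]]:
    """Stateless: everything needed arrives in `event`; nothing is kept between calls."""
    accepted: List[str] = []
    rejected: List[str] = []
    for record in event.get("Records", []):
        location = f'{record["s3"]["bucket"]["name"]}/{record["s3"]["object"]["key"]}'
        (accepted if validate(record) else rejected).append(location)
    # A real pipeline would now pass `accepted` to the next function or store it.
    return {"accepted": accepted, "rejected": rejected}
```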

Some advantages of this architecture include:

- Lower costs: Pay only for what you run and stop paying for idle servers. With the pay-per-use model, costs are proportional to the execution time of the requests actually made.

- Low maintenance: The infrastructure provider takes care of everything required to run and scale the code on demand with high availability, eliminating the need to pre-plan and pre-provision servers to host these functions.

- Easier to deploy: Just upload new function code and configure a trigger to have it up and running.

- Faster prototyping: Using third-party APIs for authentication, social features, tracking, etc. minimizes development time, so you can have a prototype up and running within minutes.
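To make the pay-per-use point concrete: AWS Lambda, for example, bills per request and per GB-second of compute (execution time multiplied by configured memory). Ignoring free-tier allowances, a rough cost model looks like the formula below. The prices are placeholders to take from the provider's current price list, not figures from this post.

```latex
C \approx N \, p_{\text{req}} + N \, d \, m \, p_{\text{GB-s}}
```

Here N is the number of invocations, d the average billed duration in seconds, m the configured memory in GB, and p_req and p_GB-s the per-request and per-GB-second prices. Idle time never appears in the formula, which is exactly the "no idle servers" advantage.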

The main providers of serverless platforms include Amazon (AWS Lambda), Google (Cloud Functions), and Microsoft (Azure Functions). Whichever provider you choose, you can still reap the benefits of feature flagging without managing your own servers.

In this blog post, we’ll focus on AWS Lambda with functions written in JavaScript running on Node.js. There are several ways to interact with Split feature flags in a serverless application; this post highlights one of them.

Externalizing state

Because Lambda functions are stateless, the flag data they use has to live outside them. If we are using Lambda functions on Amazon AWS, the best approach is to use ElastiCache (Redis flavor) as an external in-memory data store. It holds the feature rules that the Split SDKs running in the Lambda functions use to evaluate feature flags.

One way to achieve this is to set up the Split Synchronizer, a background service created to synchronize Split information for multiple SDKs onto an external cache, Redis. To learn more about Split Synchronizer, check out our recent blog post.

On the SDK side, the Split Node SDK has a built-in Redis integration that it uses to communicate with the Redis ElastiCache cluster. The diagram below illustrates the setup:
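The division of labor can be sketched in Python with any Redis-like store: a synchronizer role writes flag definitions into the cache, and the stateless function reads them on each invocation. This is not the Split SDK or Synchronizer. The `demo.flag.` key prefix, the JSON rule format (`enabled`, `allowlist`), and the function names are all hypothetical, chosen only to show why the state lives outside the function.

```python
import json
from typing import Callable, Dict, Optional, Protocol

KEY_PREFIX = "demo.flag."  # hypothetical key layout, not Split's Redis schema


class KeyValueStore(Protocol):
    """The subset of a Redis client used here (redis-py's Redis has get/set)."""

    def get(self, name: str) -> Optional[bytes]: ...

    def set(self, name: str, value: str) -> object: ...


def sync_once(fetch_definitions: Callable[[], Dict[str, dict]], store: KeyValueStore) -> int:
    """Synchronizer role: copy flag definitions from the flag service into the cache."""
    definitions = fetch_definitions()
    for name, definition in definitions.items():
        store.set(f"{KEY_PREFIX}{name}", json.dumps(definition))
    return len(definitions)


def is_on(store: KeyValueStore, flag: str, user_key: str, default: bool = False) -> bool:
    """Function role: read the rule from the cache on each invocation."""
    raw = store.get(f"{KEY_PREFIX}{flag}")
    if raw is None:
        return default  # flag not synchronized yet
    rule = json.loads(raw)  # json.loads accepts bytes
    if user_key in rule.get("allowlist", []):
        return True
    return bool(rule.get("enabled", default))
```

In a real function you would typically create the Redis client (for example, a redis-py `redis.Redis` pointed at the cluster endpoint) once, outside the handler, so warm invocations reuse the connection.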

Step 1: Preparing the ElastiCache cluster

Start by going to the ElastiCache console and creating a cluster within the same VPC that you’ll run the Lambda functions in. Make sure to select Redis as the engine.
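The same cluster can be created programmatically. This Python sketch wraps boto3's ElastiCache `create_cache_cluster` call. The cluster ID, subnet group, security groups, and node type are placeholders you would choose. The cluster lands in your Lambda VPC through the cache subnet group, so that group must contain subnets from that VPC.

```python
from typing import Any, Dict, List


def create_redis_cluster(
    elasticache_client: Any,
    cluster_id: str,
    subnet_group: str,
    security_group_ids: List[str],
    node_type: str = "cache.t3.micro",
) -> Dict[str, Any]:
    """Create a single-node Redis cluster for the flag cache.

    `subnet_group` must be a cache subnet group in the Lambda functions' VPC.
    """
    return elasticache_client.create_cache_cluster(
        CacheClusterId=cluster_id,
        Engine="redis",  # the console's "Redis" engine choice
        CacheNodeType=node_type,
        NumCacheNodes=1,
        CacheSubnetGroupName=subnet_group,
        SecurityGroupIds=security_group_ids,
    )
```

Pass `boto3.client("elasticache")` as the first argument once AWS credentials are configured; the security groups must allow the Lambda functions to reach the Redis port.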

Step 2: Run Split Synchronizer as a Docker Container Using ECS

The next step is to deploy the Split Synchronizer on ECS (in synchronizer mode) using the existing Split Synchronizer Docker image. Refer to this guide on how to deploy Docker containers.

Now, from the EC2 Container Service (ECS) console, create an ECS cluster within the same VPC as before. Next, go to the Task Definitions page and create the task definition that the service will use. This is where you specify the Docker image repository and any required environment variables.
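If you prefer to script this step, the Python sketch below registers an equivalent task definition with boto3's ECS `register_task_definition` call. The client is passed in (for example `boto3.client("ecs")`) so the function can be exercised with a stub. The Docker image name and the environment variable names are deliberately left as parameters: take them from the Split Synchronizer docs, not from this sketch. The family name, container name, and memory size are arbitrary defaults.

```python
from typing import Any, Dict


def register_synchronizer_task(
    ecs_client: Any,
    image: str,
    env: Dict[str, str],
    family: str = "split-synchronizer",
    memory_mib: int = 512,
) -> Dict[str, Any]:
    """Register an ECS task definition for the Synchronizer container."""
    container = {
        "name": "split-synchronizer",
        "image": image,  # "organization/image" on Docker Hub
        "memory": memory_mib,
        "essential": True,
        # ECS expects environment variables as a list of name/value pairs.
        "environment": [{"name": k, "value": v} for k, v in sorted(env.items())],
    }
    return ecs_client.register_task_definition(family=family, containerDefinitions=[container])
```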

As images on Docker Hub are available by default, specify the organization/image:

And environment variables (specifics can be found on the Split Synchronizer docs):