Featured Blogs

.png)

Google's GKE Autopilot provides fully managed Kubernetes without the operational overhead of node management, security patches, or capacity planning. However, running chaos engineering experiments on Autopilot has been challenging due to its security restrictions.

We've solved that problem.

Why This Matters

Chaos engineering helps you identify issues before they impact your users. The approach involves intentionally introducing controlled failures to understand how your system responds. Think of it as a fire drill for your infrastructure.

GKE Autopilot secures clusters by restricting many permissions, which is excellent for security. However, this made running chaos experiments difficult. You couldn't simply deploy Harness Chaos Engineering and begin testing.

That changes today.

What Changed

We collaborated with Google to add Harness Chaos Engineering to GKE Autopilot's official allowlist. This integration enables Harness to run chaos experiments while operating entirely within Autopilot's security boundaries.

No workarounds required. Just chaos engineering that works as expected.

How to Set It Up

1. Apply the Allowlist

First, you need to tell GKE Autopilot that Harness chaos workloads are okay to run. Copy this command:

kubectl apply -f - <<'EOF'

apiVersion: auto.gke.io/v1

kind: AllowlistSynchronizer

metadata:

name: harness-chaos-allowlist-synchronizer

spec:

allowlistPaths:

- Harness/allowlists/chaos/v1.62/*

- Harness/allowlists/service-discovery/v0.42/*

EOF

Then wait for it to be ready:

kubectl wait --for=condition=Ready allowlistsynchronizer/harness-chaos-allowlist-synchronizer --timeout=60s

That's it for the cluster configuration.

2. Enable Autopilot Mode in Harness

Next, configure Harness to work with GKE Autopilot. You have several options:

If you're setting up chaos for the first time, just use the 1-click chaos setup and toggle on "Use static name for configmap and secret" during setup.

If you already have infrastructure configured, go to Chaos Engineering > Environments, find your infrastructure, and enable that same toggle.

You can also set this up when creating a new discovery agent, or update an existing one in Project Settings > Discovery.

What You Can Test

You can run most of the chaos experiments you'd expect:

The integration supports a comprehensive range of chaos experiments:

Resource stress: Pod CPU Hog, Pod Memory Hog, Pod IO Stress, Disk Fill. These experiments help you understand how your pods behave under resource constraints.

Network chaos: Pod Network Latency, Pod Network Loss, Pod Network Corruption, Pod Network Duplication, Pod Network Partition, Pod Network Rate Limit. Production networks experience imperfections, and your application needs to handle them gracefully.

DNS problems: Pod DNS Error to disrupt resolution, Pod DNS Spoof to redirect traffic.

HTTP faults: Pod HTTP Latency, Pod HTTP Modify Body, Pod HTTP Modify Header, Pod HTTP Reset Peer, Pod HTTP Status Code. These experiments test how your APIs respond to unexpected behavior.

API-level chaos: Pod API Block, Pod API Latency, Pod API Modify Body, Pod API Modify Header, Pod API Status Code. Good for testing service mesh and gateway behavior.

File system chaos: Pod IO Attribute Override, Pod IO Error, Pod IO Latency, Pod IO Mistake. These experiments reveal how your application handles storage issues.

Container lifecycle: Container Kill and Pod Delete to test recovery. Pod Autoscaler to see if scaling works under pressure.

JVM chaos if you're running Java: Pod JVM CPU Stress, Pod JVM Method Exception, Pod JVM Method Latency, Pod JVM Modify Return, Pod JVM Trigger GC.

Database chaos for Java apps: Pod JVM SQL Exception, Pod JVM SQL Latency, Pod JVM Mongo Exception, Pod JVM Mongo Latency, Pod JVM Solace Exception, Pod JVM Solace Latency.

Cache problems: Redis Cache Expire, Redis Cache Limit, Redis Cache Penetration.

Time manipulation: Time Chaos to introduce controlled time offsets.

What This Means for You

If you're running GKE Autopilot and want to implement chaos engineering with Harness, you can now do both without compromise. There's no need to choose between Google's managed experience and resilience testing.

For teams new to chaos engineering, Autopilot provides an ideal starting point. The managed environment reduces infrastructure complexity, allowing you to focus on understanding application behavior under stress.

Getting Started

Start with a simple CPU stress test. Select a non-critical pod and run a low-intensity Pod CPU Hog experiment in Harness. Observe the results: Does your application degrade gracefully? Do your alerts trigger as expected? Does it recover when the experiment completes?

Start small, understand your system's behavior, then explore more complex scenarios.

You can configure Service Discovery to visualize your services in Application Maps, add probes to validate resilience during experiments, and progressively explore more sophisticated fault injection scenarios.

Check out the documentation for the complete setup guide and all supported experiments.

The goal of chaos engineering isn't to break things. It's to understand what breaks before it impacts your users.

Recent Blogs

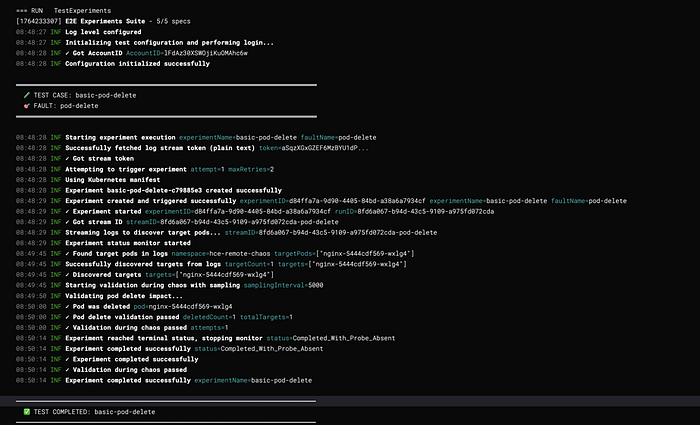

Theory to Turbulence: Building a Developer-Friendly E2E Testing Framework for Chaos Platform

As an enterprise chaos engineering platform vendor, validating chaos faults is not optional — it’s foundational. Every fault we ship must behave predictably, fail safely, and produce measurable impact across real-world environments.

When we began building our end-to-end (E2E) testing framework, we quickly ran into a familiar problem: the barrier to entry was painfully high.

Running even a single test required a long and fragile setup process:

- Installing multiple dependencies by hand

- Configuring a maze of environment variables

- Writing YAML-based chaos experiments manually

- Debugging cryptic validation failures

- Only then… executing the first test

This approach slowed feedback loops, discouraged adoption, and made iterative testing expensive — exactly the opposite of what chaos engineering should enable.

The Solution: A Simplified Chaos Fault Validation Framework

To solve this, we built a comprehensive yet developer-friendly E2E testing framework for chaos fault validation. The goal was simple: reduce setup friction without sacrificing control or correctness.

The result is a framework that offers:

- An API-driven execution model instead of manual YAML wiring

- Real-time log streaming for faster debugging and observability

- Intelligent target discovery to eliminate repetitive configuration

- Dual-phase validation to verify both fault injection and system impact

What previously took 30 minutes (or more) to set up and run can now be executed in under 5 minutes — consistently and at scale.

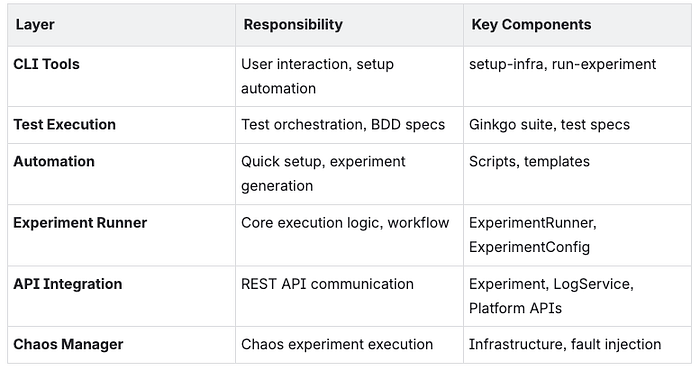

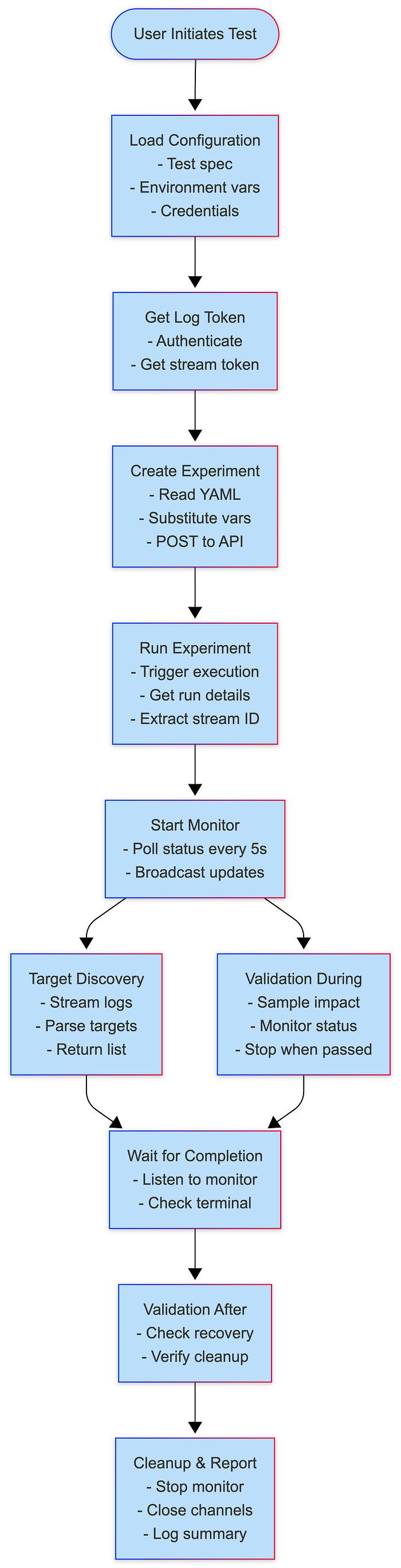

System Architecture

High-Level Architecture

Layer Responsibilities

Core Components

1. Experiment Runner

Purpose: Orchestrates the complete chaos experiment lifecycle from creation to validation.

Key Responsibilities:

- Experiment creation with variable substitution

- Log streaming and target discovery

- Concurrent validation management

- Status monitoring and completion detection

- Error handling and retry logic

Architecture Pattern: Template Method + Observer

type ExperimentRunner struct {

identifiers utils.Identifiers

config ExperimentConfig

}

type ExperimentConfig struct {

Name string

FaultName string

ExperimentYAML string

InfraID string

InfraType string

TargetNamespace string

TargetLabel string

TargetKind string

FaultEnv map[string]string

Timeout time.Duration

SkipTargetDiscovery bool

ValidationDuringChaos ValidationFunc

ValidationAfterChaos ValidationFunc

SamplingInterval time.Duration

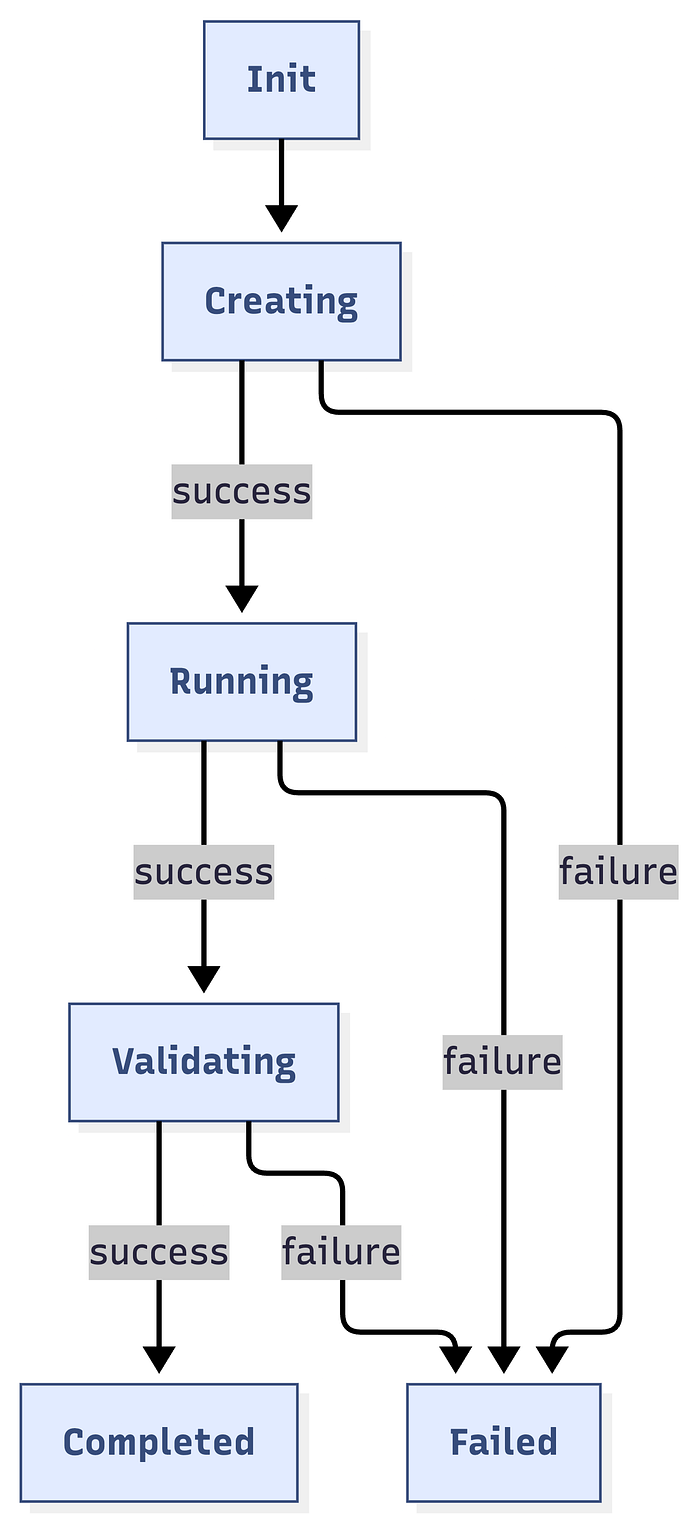

}Execution Flow:

Run() →

1. getLogToken()

2. triggerExperimentWithRetry()

3. Start experimentMonitor

4. extractStreamID()

5. getTargetsFromLogs()

6. runValidationDuringChaos() [parallel]

7. waitForCompletion()

8. Validate ValidationAfterChaos2. Experiment Monitor

Purpose: Centralized experiment status tracking with publish-subscribe pattern.

Architecture Pattern: Observer Pattern

type experimentMonitor struct {

experimentID string

runResp *experiments.ExperimentRunResponse

identifiers utils.Identifiers

stopChan chan bool

statusChan chan string

subscribers []chan string

}Key Methods:

start(): Begin monitoring (go-routine)subscribe(): Create subscriber channelbroadcast(status): Notify all subscribersstop(): Signal monitoring to stop

Benefits:

- 80% reduction in API calls

- 92% faster failure detection

- Single source of truth

- Easy to add new consumers

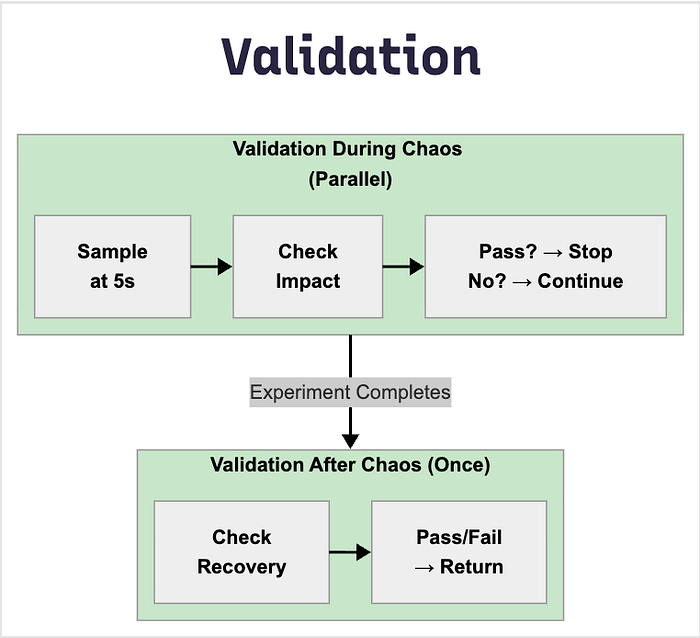

3. Validation Framework

Purpose: Dual-phase validation system for concrete chaos impact verification.

ValidationDuringChaos

- Runs in parallel during experiment

- Continuous sampling at configurable intervals

- Stops when validation passes

- Use case: Verify active fault impact

ValidationAfterChaos

- Runs once after experiment completes

- Single execution for final state

- Use case: Verify recovery and cleanup

Function Signature:

type ValidationFunc func(targets []string, namespace string) (bool, error)

// Returns: (passed bool, error)Sample Validation Categories:

Experiment Execution Engine

Execution Phases

Phase 1: Setup

├─ Load configuration

├─ Authenticate with API

└─ Validate environment

Phase 2: Preparation

├─ Get log stream token

├─ Resolve experiment YAML path

├─ Substitute template variables

└─ Create experiment via API

Phase 3: Execution

├─ Trigger experiment run

├─ Start status monitor

├─ Extract stream ID

└─ Discover targets from logs

Phase 4: Validation (Concurrent)

├─ Validation During Chaos (parallel)

│ ├─ Sample at intervals

│ ├─ Check fault impact

│ └─ Stop when passed/completed

└─ Wait for completion

Phase 5: Post-Validation

├─ Validation After Chaos

├─ Check recovery

└─ Final assertions

Phase 6: Cleanup

├─ Stop monitor

├─ Close channels

└─ Log resultsState Machine

Concurrency Model

Main Thread:

├─ Create experiment

├─ Start monitor goroutine

├─ Start target discovery goroutine

├─ Start validation goroutine [if provided]

└─ Wait for completion

Monitor Goroutine:

├─ Poll status every 5s

├─ Broadcast to subscribers

└─ Stop on terminal status

Target Discovery Goroutine:

├─ Subscribe to monitor

├─ Poll for targets every 5s

├─ Listen for failures

└─ Return when found or failed

Validation Goroutine:

├─ Subscribe to monitor

├─ Run validation at intervals

├─ Listen for completion

└─ Stop when passed or completedAPI Integration Layer

API Client Architecture

Variable Substitution System

Template Format: {{ VARIABLE_NAME }}

Built-in Variables:

INFRA_NAMESPACE // Infrastructure namespace

FAULT_INFRA_ID // Infrastructure ID (without env prefix)

EXPERIMENT_INFRA_ID // Full infrastructure ID (env/infra)

TARGET_WORKLOAD_KIND // deployment, statefulset, daemonset

TARGET_WORKLOAD_NAMESPACE // Target namespace

TARGET_WORKLOAD_NAMES // Specific workload names (or empty)

TARGET_WORKLOAD_LABELS // Label selector

EXPERIMENT_NAME // Experiment name

FAULT_NAME // Fault type

TOTAL_CHAOS_DURATION // Duration in seconds

CHAOS_INTERVAL // Interval between chaos actions

ADDITIONAL_ENV_VARS // Fault-specific environment variablesCustom Variables: Passed via FaultEnv map in ExperimentConfig.

Validation Framework

Architecture

Validation Categories

1. Resource Validators

ValidatePodCPUStress(targets, namespace) (bool, error)

ValidatePodMemoryStress(targets, namespace) (bool, error)

ValidateDiskFill(targets, namespace) (bool, error)

ValidateIOStress(targets, namespace) (bool, error)Detection Logic:

- CPU: Usage > baseline + 30%

- Memory: Usage > baseline + 20%

- Disk: Usage > 80%

- I/O: Read/write operations elevated

2. Network Validators

ValidateNetworkLatency(targets, namespace) (bool, error)

ValidateNetworkLoss(targets, namespace) (bool, error)

ValidateNetworkCorruption(targets, namespace) (bool, error)Detection Methods:

- Ping latency measurements

- Packet loss percentage

- Checksum errors

3. Pod Lifecycle Validators

ValidatePodDelete(targets, namespace) (bool, error)

ValidatePodRestarted(targets, namespace) (bool, error)

ValidatePodsRunning(targets, namespace) (bool, error)Verification:

- Pod age comparison

- Restart count increase

- Ready status check

4. Application Validators

ValidateAPIBlock(targets, namespace) (bool, error)

ValidateAPILatency(targets, namespace) (bool, error)

ValidateAPIStatusCode(targets, namespace) (bool, error)

ValidateFunctionError(targets, namespace) (bool, error)5. Redis Validators

ValidateRedisCacheLimit(targets, namespace) (bool, error)

ValidateRedisCachePenetration(targets, namespace) (bool, error)

ValidateRedisCacheExpire(targets, namespace) (bool, error)Direct Validation: Executes redis-cli INFO in pod, parses metrics

Validation Best Practices

Data Flow & Lifecycle

Complete Experiment Lifecycle

Data Structures Flow

// Input

ExperimentConfig

↓

// API Creation

ExperimentPayload (JSON)

↓

// API Response

ExperimentResponse {ExperimentID, Name}

↓

// Run Request

ExperimentRunRequest {NotifyID}

↓

// Run Response

ExperimentRunResponse {ExperimentRunID, Status, Nodes}

↓

// Log Streaming

StreamToken + StreamID

↓

// Target Discovery

[]string (target pod names)

↓

// Validation

ValidationFunc(targets, namespace) → (bool, error)

↓

// Final Result

Test Pass/Fail with error detailsPerformance & Scalability

Performance Metrics

Concurrent Test Execution

- Each test gets isolated namespace

- Separate experiment instances

- No shared state between tests

- Parallel execution supported

Example Usage of Framework

RunExperiment(ExperimentConfig{

Name: "CPU Stress Test",

FaultName: "pod-cpu-hog",

InfraID: infraID,

ProjectID: projectId,

TargetNamespace: targetNamespace,

TargetLabel: "app=nginx", // Customize based on your test app

TargetKind: "deployment",

FaultEnv: map[string]string{

"CPU_CORES": "1",

"TOTAL_CHAOS_DURATION": "60",

"PODS_AFFECTED_PERC": "100",

"RAMP_TIME": "0",

},

Timeout: timeout,

SamplingInterval: 5 * time.Second, // Check every 5 seconds during chaos

// Verify CPU is stressed during chaos

ValidationDuringChaos: func(targets []string, namespace string) (bool, error) {

clientset, err := faultcommon.GetKubeClient()

if err != nil {

return false, err

}

return validations.ValidatePodCPUStress(clientset, targets, namespace)

},

// Verify pods recovered after chaos

ValidationAfterChaos: func(targets []string, namespace string) (bool,error) {

clientset, err := faultcommon.GetKubeClient()

if err != nil {

return false, err

}

return validations.ValidateTargetAppsHealthy(clientset, targets, namespace)

},

})Knowledge Sharing and Learning

While this framework is proprietary and used internally, we believe in sharing knowledge and best practices. The patterns and approaches we’ve developed can help other teams building similar testing infrastructure:

Key Takeaways for Your Team

Whether you’re building a chaos engineering platform, testing distributed systems, or creating any complex testing infrastructure, these principles apply:

- Measure your baseline — Know how long things take today

- Set ambitious goals — 10x improvements are possible

- Prioritize DX — Developer experience drives adoption

- Automate ruthlessly — Eliminate manual steps

- Share your learnings — Help others avoid the same pitfalls

- Collect user feedback

- Celebrate improvements!

We hope these insights help you build better testing infrastructure for your team!

Questions? Feedback? Ideas? Join Harness community. We’d love to hear about your testing challenges and how you’re solving them!

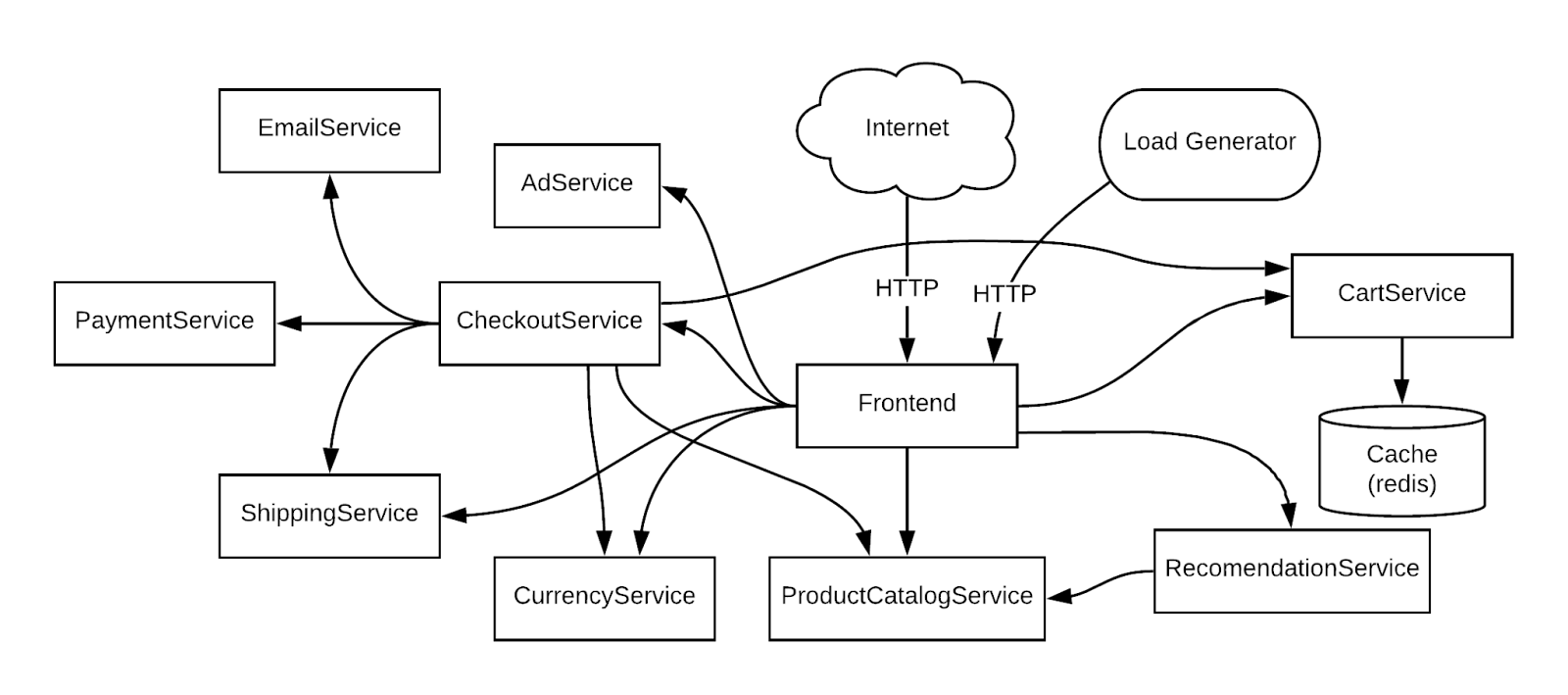

Recommended Experiments for Production Resilience in Harness Chaos Engineering

Building reliable distributed systems isn't just about writing good code. It's about understanding how your systems behave when things go wrong. That's where chaos engineering comes in.

If you've been wondering where to start with chaos experiments or what scenarios matter most for your infrastructure, this guide walks through battle-tested experiments that engineering teams use to validate production resilience.

Why These Experiments Matter

Here's the thing about production failures: they're not just theoretical. Network issues happen. Availability zones go down. Resources get exhausted. The question isn't whether these failures will occur, but whether your system can handle them gracefully when they do.

The experiments we'll cover are based on real-world failure scenarios that teams encounter in production. We've organized them by infrastructure type so you can quickly find what's relevant to your stack.

A quick tip before we dive in: Start with lower blast radius experiments (like pod-level faults) before progressing to higher impact scenarios (like node or zone failures). This gives you confidence in your testing approach and helps you understand your system's behavior patterns.

Understanding Your Infrastructure Needs

Different infrastructure types face different challenges. Here's what we'll cover:

- Kubernetes Experiments

- AWS Experiments

- Azure Experiments

- GCP Experiments

Let's explore each of these in detail.

Kubernetes: The Foundation of Modern Applications

For Kubernetes environments, chaos experiments typically focus on four key areas. Let's walk through each one.

Network Resilience Testing

Network-related failures are among the most common issues in distributed systems. Your application might be perfectly coded, but if it can't handle network degradation, you're setting yourself up for production incidents.

Here are the experiments that matter:

Pod Network Loss tests application resilience to network packet loss at the pod level. This is your first line of defense for understanding how individual components handle network issues.

Node Network Loss simulates network issues affecting entire nodes. This is a node-level experiment that helps you understand how your system behaves when an entire node becomes unreachable.

Pod Network Latency tests application behavior under high latency conditions at the pod level. Latency often reveals performance bottlenecks and timeout configuration issues.

Pod API Block allows you to block specific API endpoints or services at the pod level. This is particularly useful for testing service dependencies and circuit breaker implementations.

Resource Exhaustion Testing

Resource exhaustion is another common failure mode. How does your application behave when CPU or memory becomes constrained? These experiments help you understand whether your resource limits are set correctly and how your application handles resource constraints before they become production problems.

Pod CPU Hog tests application behavior under CPU pressure at the pod level. This helps validate whether your CPU limits are appropriate and how your application degrades under CPU constraints.

Pod Memory Hog validates memory limit handling and out-of-memory (OOM) scenarios at the pod level. Understanding memory behavior prevents unexpected pod restarts in production.

Node CPU Hog tests node-level CPU exhaustion. This experiment reveals how your cluster handles resource pressure when an entire node's CPU is saturated.

Node Memory Hog simulates node memory pressure at the node level. This is critical for understanding how Kubernetes evicts pods and manages memory across your cluster.

Availability Zone Failures

Multi-AZ deployments are great for resilience, but only if they're actually resilient. Zone failure experiments validate that your multi-AZ setup works as expected.

Node Network Loss can simulate complete zone failure when configured with node labels to target specific zones. This is your primary tool for validating zone-level resilience.

Pod Network Loss enables zone-level pod network isolation by targeting pods in specific zones. This gives you more granular control over which applications you test during zone failures.

For detailed zone failure configurations, see the Simulating Zonal Failures section below.

Pod Lifecycle Testing

Pods come and go. That's the nature of Kubernetes. But does your application handle these transitions gracefully? These experiments ensure your application handles the dynamic nature of Kubernetes without dropping requests or losing data.

Pod Delete tests graceful shutdown and restart behavior at the pod level. This is fundamental for validating that your application can handle rolling updates and scaling events.

Container Kill validates container restart policies at the container level. This ensures that individual container failures don't cascade into broader application issues.

Pod Autoscaler tests Horizontal Pod Autoscaler (HPA) behavior under load at the pod level. This validates that your autoscaling configuration responds appropriately to demand changes.

Simulating Zonal Failures

Zonal failures simulate complete availability zone outages, which are critical for validating multi-AZ deployments. Let's look at how to configure these experiments properly.

Node Network Loss for Zonal Failures

The Node Network Loss experiment simulates a complete zone failure by blocking all network traffic to nodes in a specific availability zone.

Key Parameters:

TOTAL_CHAOS_DURATION should be set to 300 seconds (5 minutes) for realistic zone failure testing. This duration gives you enough time to observe failover behavior and recovery processes.

NETWORK_PACKET_LOSS_PERCENTAGE should be set to 100% to achieve complete network isolation, simulating a total zone failure rather than degraded connectivity.

NETWORK_INTERFACE typically uses eth0 as the primary network interface. Verify your cluster's network configuration if you're using a different interface name.

NODES_AFFECTED_PERC should be set to 100 to affect all nodes matching the target label, ensuring complete zone isolation.

NODE_LABEL is critical for targeting specific availability zones. Use topology.kubernetes.io/zone=<zone-name> to select nodes in a particular zone.

Common Zone Labels:

For AWS deployments, use topology.kubernetes.io/zone=us-east-1a (or your specific zone).

For GCP deployments, use topology.kubernetes.io/zone=us-central1-a (or your specific zone).

For Azure deployments, use topology.kubernetes.io/zone=eastus-1 (or your specific zone).

Pod Network Loss for Zonal Failures

The Pod Network Loss experiment provides more granular control by targeting specific applications within a zone. This is useful when you want to test how individual services handle zone failures without affecting your entire infrastructure.

Key Parameters:

TARGET_NAMESPACE specifies the namespace containing your target application. This allows you to isolate experiments to specific environments or teams.

APP_LABEL uses an application label selector (e.g., app=frontend) to target specific applications. This gives you precise control over which services are affected.

TOTAL_CHAOS_DURATION should be set to 300 seconds for realistic zone failure scenarios, matching the duration used in node-level experiments.

NETWORK_PACKET_LOSS_PERCENTAGE should be 100% to simulate complete network isolation for the targeted pods.

PODS_AFFECTED_PERC determines the percentage of pods matching your criteria to affect. Set to 100 for complete zone failure simulation, or lower values for partial failures.

NETWORK_INTERFACE typically uses eth0 as the primary network interface for pod networking.

NODE_LABEL should use topology.kubernetes.io/zone=<zone-name> to target pods running in a specific availability zone.

Network Experiment Best Practices

When running network experiments, there are some important considerations to keep in mind.

General Guidelines

Start Small: Begin with shorter durations (30-60 seconds) and gradually increase as you build confidence in your experiments and understand your system's behavior.

Use Probes: Always configure health probes to validate application behavior during experiments. This gives you objective data about whether your hypothesis was correct.

Monitor Metrics: Track application and infrastructure metrics during experiments. CPU usage, memory consumption, request latency, and error rates are all critical indicators.

Schedule Wisely: Run experiments during maintenance windows or low-traffic periods initially. As you build confidence, you can move to running experiments during normal operations.

Document Results: Keep records of experiment outcomes and system behavior. This creates institutional knowledge and helps track improvements over time.

Pod Network Loss Considerations

One important thing to understand: Pod Network Loss experiments always block egress traffic from the target pods. This is crucial for experiment design. You can configure specific destination hosts or IPs to block, or you can simulate complete network isolation.

Important Parameters:

TARGET_NAMESPACE specifies your target namespace (e.g., production). This ensures experiments run in the correct environment.

APP_LABEL uses an application label selector like app=api-service to target specific applications precisely.

TOTAL_CHAOS_DURATION sets the experiment duration, typically 180 seconds (3 minutes) for most scenarios.

DESTINATION_HOSTS allows you to specify particular services to block using comma-separated hostnames (e.g., database.example.com). Leave empty to block all egress traffic.

DESTINATION_IPS lets you block specific IP addresses using comma-separated values (e.g., 10.0.1.50). This is useful when you know the exact IPs of backend services.

PODS_AFFECTED_PERC determines what percentage of matching pods to affect. Set to 100 to test complete service isolation.

NETWORK_INTERFACE specifies the network interface to target, typically eth0 for standard Kubernetes deployments.

Pod API Block for Egress Traffic

When using Pod API Block, you have fine-grained control. You can block specific API paths, target particular services, and choose whether to block egress or ingress traffic.

Important Parameters for Egress:

TARGET_CONTAINER specifies the container name within the pod that will experience the API block.

TARGET_SERVICE_PORT sets the target service port (e.g., 8080) for the API endpoint you're testing.

TOTAL_CHAOS_DURATION determines experiment duration, typically 180 seconds for API-level testing.

PATH_FILTER allows you to block a specific API path like /api/v1/users, enabling surgical testing of individual endpoints.

DESTINATION_HOSTS specifies target service hostnames using comma-separated values (e.g., api.example.com).

SERVICE_DIRECTION should be set to egress for blocking outbound API calls from the target container.

PODS_AFFECTED_PERC determines the percentage of pods to affect, typically 100 for comprehensive testing.

Pod API Block for Ingress Traffic

For ingress testing, you could block incoming health check requests to see how your monitoring responds.

Important Parameters for Ingress:

TARGET_CONTAINER specifies the container name within the pod that will block incoming requests.

TARGET_SERVICE_PORT sets the port receiving traffic, typically 8080 or your application's serving port.

TOTAL_CHAOS_DURATION determines the experiment duration, usually 180 seconds for health check testing.

PATH_FILTER allows you to block a specific incoming path like /health to test monitoring resilience.

SOURCE_HOSTS specifies source hostnames using comma-separated values (e.g., monitoring.example.com).

SOURCE_IPS lets you target specific source IP addresses using comma-separated values (e.g., 10.0.2.100).

SERVICE_DIRECTION should be set to ingress for blocking incoming requests to the target container.

PODS_AFFECTED_PERC determines the percentage of pods to affect, typically 100 for complete testing.

AWS: Cloud Infrastructure Resilience

AWS infrastructure brings its own set of failure modes. Here's what matters most for AWS workloads.

Recommended AWS Experiments

EC2 Stop simulates EC2 instance failure with high impact. This tests your application's ability to handle sudden instance termination and validates auto-scaling group behavior.

EBS Loss tests application behavior on volume detachment with high impact. This is critical for applications with persistent storage requirements.

ALB AZ Down simulates load balancer AZ failure with medium impact. This validates that your multi-AZ load balancer configuration works as expected.

RDS Reboot tests database failover with high impact. This ensures your database layer can handle planned and unplanned reboots.

Important: AWS experiments require proper IAM permissions. See AWS Fault Permissions for details.

EC2 Stop by ID

The EC2 Stop by ID experiment stops EC2 instances to test application resilience to instance failures and validate failover capabilities.

Key Parameters:

EC2_INSTANCE_ID accepts a comma-separated list of target EC2 instance IDs. You can target a single instance or multiple instances simultaneously.

REGION specifies the AWS region name of the target instances (e.g., us-east-1). All instances in a single experiment must be in the same region.

TOTAL_CHAOS_DURATION is typically set to 30 seconds, which is long enough to trigger failover mechanisms while minimizing impact.

CHAOS_INTERVAL determines the interval between successive instance terminations, typically 30 seconds for sequential failures.

SEQUENCE can be either parallel or serial. Use parallel to stop all instances simultaneously, or serial to stop them one at a time.

MANAGED_NODEGROUP should be set to disable for standard EC2 instances, or enable for self-managed node groups in EKS.

EBS Loss by ID

The EBS Loss by ID experiment detaches EBS volumes to test application behavior when storage becomes unavailable.

Key Parameters:

EBS_VOLUME_ID accepts a comma-separated list of EBS volume IDs to detach. Choose volumes that are critical to your application's operation.

REGION specifies the region name for the target volumes (e.g., us-east-1). Ensure volumes and instances are in the same region.

TOTAL_CHAOS_DURATION is typically 30 seconds, giving you enough time to observe storage failure behavior without extended downtime.

CHAOS_INTERVAL sets the interval between attachment and detachment cycles, usually 30 seconds.

SEQUENCE determines whether volumes are detached in parallel or serial order. Parallel tests simultaneous storage failures.

ALB AZ Down

The ALB AZ Down experiment detaches availability zones from Application Load Balancer to test multi-AZ resilience.

Key Parameters:

LOAD_BALANCER_ARN specifies the target load balancer ARN. You can find this in your AWS console or CLI.

ZONES accepts comma-separated zones to detach (e.g., us-east-1a). Choose zones strategically to test failover behavior.

REGION specifies the region name for the target ALB (e.g., us-east-1).

TOTAL_CHAOS_DURATION is typically 30 seconds for ALB experiments, sufficient to test traffic redistribution.

CHAOS_INTERVAL determines the interval between detachment and attachment cycles, usually 30 seconds.

SEQUENCE can be parallel or serial for detaching multiple zones.

Note: A minimum of two AZs must remain attached to the ALB after chaos injection.

RDS Instance Reboot

The RDS Instance Reboot experiment reboots RDS instances to test database failover and application recovery.

Key Parameters:

CLUSTER_NAME specifies the name of the target RDS cluster. This is required for cluster-level operations.

RDS_INSTANCE_IDENTIFIER sets the name of the target RDS instance within the cluster.

REGION specifies the region name for the target RDS (e.g., us-east-1).

TOTAL_CHAOS_DURATION is typically 30 seconds for the chaos duration, though the actual reboot may take longer.

INSTANCE_AFFECTED_PERC determines the percentage of RDS instances to target. Set to 0 to target exactly 1 instance.

SEQUENCE can be parallel or serial for rebooting multiple instances.

Azure: Testing Your Azure Workloads

For Azure deployments, focus on these key experiments to validate resilience to Azure-specific failures and service disruptions.

Recommended Azure Experiments

Azure Instance Stop simulates VM failure with high impact. This validates that your Azure-based applications can handle unexpected VM termination.

Azure Disk Loss tests disk detachment scenarios with high impact. This is essential for applications with persistent storage on Azure.

Azure Web App Stop validates App Service resilience with medium impact. This tests your PaaS-based applications' ability to handle service disruptions.

Azure Instance Stop

The Azure Instance Stop experiment powers off Azure VM instances to test application resilience to unexpected VM failures.

Key Parameters:

AZURE_INSTANCE_NAMES specifies the name of target Azure instances. For AKS clusters, use the Scale Set name, not the node name from the AKS node pool.

RESOURCE_GROUP sets the name of the resource group containing the target instance. This is required for Azure resource identification.

SCALE_SET should be set to disable for standalone VMs, or enable if the instance is part of a Virtual Machine Scale Set.

TOTAL_CHAOS_DURATION is typically 30 seconds, providing enough time to observe failover without extended disruption.

CHAOS_INTERVAL determines the interval between successive instance power-offs, usually 30 seconds.

SEQUENCE can be parallel or serial for stopping multiple instances.

Tip: For AKS nodes, use the Scale Set instance name from Azure, not the node name from AKS node pool.

GCP: Google Cloud Platform Resilience

For GCP workloads, these experiments validate compute and storage resilience.

Recommended GCP Experiments

GCP VM Instance Stop simulates compute instance failure with high impact. This tests your GCP-based applications' resilience to unexpected instance termination.

GCP VM Disk Loss tests persistent disk detachment with high impact. This validates how your applications handle storage failures on GCP.

GCP VM Instance Stop

The GCP VM Instance Stop experiment powers off GCP VM instances to test application resilience to unexpected instance failures.

Key Parameters:

GCP_PROJECT_ID specifies the ID of the GCP project containing the VM instances. This is required for resource identification.

VM_INSTANCE_NAMES accepts a comma-separated list of target VM instance names within the project.

ZONES specifies the zones of target instances in the same order as instance names. Each instance needs its corresponding zone.

TOTAL_CHAOS_DURATION is typically 30 seconds, sufficient for testing instance failure scenarios.

CHAOS_INTERVAL determines the interval between successive instance terminations, usually 30 seconds.

MANAGED_INSTANCE_GROUP should be set to disable for standalone VMs, or enable if instances are part of a managed instance group.

SEQUENCE can be parallel or serial for stopping multiple instances.

Required IAM Permissions:

Your service account needs compute.instances.get to retrieve instance information, compute.instances.stop to power off instances, and compute.instances.start to restore instances after the experiment.

Experiment Design Best Practices

Now that we've covered the experiments, let's talk about how to run them effectively.

1. Define Clear Hypotheses

Before running any experiment, define what you expect to happen. For example: "When 50% of pods lose network connectivity, the application should continue serving requests with increased latency but no errors."

This clarity helps you know what to measure and when something unexpected happens.

2. Use Resilience Probes

Always configure probes to validate your hypothesis:

HTTP Probes monitor application endpoints to verify they're responding correctly during chaos.

Command Probes check system state by running commands and validating output.

Prometheus Probes validate metrics thresholds to ensure performance stays within acceptable bounds.

Learn more about Resilience Probes.

3. Gradual Blast Radius Increase

Follow this progression:

Single Pod/Container experiments test individual component resilience. Start here to understand how your smallest units behave.

Multiple Pods validate load balancing and failover at the service level. This ensures traffic distributes correctly.

Node Level tests infrastructure resilience by affecting entire nodes. This reveals cluster-level behaviors.

Zone Level validates multi-AZ deployments by simulating complete zone failures. This is your ultimate resilience test.

4. Schedule Regular Experiments

Make chaos engineering a continuous practice:

Weekly: Run low-impact experiments like pod delete and network latency. These keep your team sharp and validate recent changes.

Monthly: Execute medium-impact experiments including node failures and resource exhaustion. These catch configuration drift.

Quarterly: Conduct high-impact scenarios like zone failures and major service disruptions. These validate your disaster recovery plans.

Use GameDays to organize team chaos engineering events.

5. Monitor and Alert

Ensure proper observability during experiments:

Configure alerts for critical metrics before running experiments. You want to know immediately if something goes wrong.

Monitor application logs in real-time during experiments. Logs often reveal issues before metrics do.

Track infrastructure metrics including CPU, memory, and network utilization. These help you understand resource consumption patterns.

Use Chaos Dashboard for visualization and real-time monitoring of your experiments.

Getting Started

The best way to get started with chaos engineering is to pick one experiment that addresses your biggest concern. Are you worried about network reliability? Start with Pod Network Loss. Concerned about failover? Try Pod Delete or EC2 Stop.

Run the experiment in a test environment first. Observe what happens. Refine your hypothesis. Then gradually move toward production environments as you build confidence.

Here are some helpful resources to continue your chaos engineering journey:

- Create Your First Experiment

- Configure Resilience Probes

- Set Up GameDays

- Integrate with CI/CD

- Explore All Kubernetes Faults

Remember, chaos engineering isn't about breaking things for the sake of breaking them. It's about understanding your system's behavior under stress so you can build more resilient applications. Start small, learn continuously, and gradually expand your chaos engineering practice.

What failure scenarios keep you up at night? Those are probably the best experiments to start with.

Making Your Business Resilient Against Cloudflare Like Outages

In the fast-paced digital world, a single point of failure can ripple across the globe, halting operations and frustrating millions. On November 18, 2025, that's exactly what happened when Cloudflare—a backbone for internet infrastructure—experienced a major outage. Sites like X (formerly Twitter), ChatGPT, and countless businesses relying on Cloudflare's CDN, DNS, and security services ground to a halt, serving 5xx errors and leaving users staring at blank screens. If your business depends on cloud services, this event is a stark reminder: resilience isn't optional; it's essential.

As sponsors of the Chaos Engineering tool LitmusChaos and as providers of resilience testing solutions from Harness, we've seen firsthand how proactive testing can turn potential disasters into minor blips. In this post, we'll break down what went wrong, the ripple effects on businesses, proven strategies to bounce back stronger, and why tools like ours are game-changers. Let's dive in.

What Happened During The Cloudflare Outage?

The outage kicked off around 11:20 UTC on November 18, with a surge in 5xx errors hitting a "huge portion of the internet." Cloudflare's internal systems degraded due to a configuration or database schema mismatch during a software rollout, triggering panic in shared mutable state initialization. This wasn't a cyberattack but a classic case of human error amplified by scale—think of it as deploying a patch that accidentally locks the front door while everyone's inside.

Affected services spanned the board: the Cloudflare Dashboard saw intermittent login failures, Access and WARP clients reported elevated error rates (with WARP temporarily disabled in London during fixes), and application services like DNS resolution and content delivery faltered globally. High-profile casualties included X, where thousands of users couldn't load feeds, and OpenAI's ChatGPT, which became unreachable for many. The disruption lasted about eight hours, with full resolution by 19:28 UTC after deploying a rollback and monitoring fixes.

Cloudflare's transparency in their post-mortem is commendable, but the event underscores how even giants aren't immune. For businesses, it was a costly lesson in third party dependency and not having enough confidence on the service being resilient.

How Can Your Business Be affected?

You may be depending on service providers like Cloudflare for handling DNS, DDoS protection, and edge caching. When they hiccup, the fallout is immediate and far-reaching:

- Revenue Loss: Online retailers like Shopify stores or Amazon affiliates saw carts abandoned mid-checkout. A single hour of downtime can cost mid-sized e-commerce businesses $10,000–$100,000 in lost sales, per industry benchmarks.

- User Experience Degradation: Streaming services buffered endlessly, social platforms froze, and collaboration tools like Slack integrations failed, eroding trust. Frustrated users churn—studies show a 7% drop in conversions per second of delay.

- Operational Chaos: DevOps teams scrambled with alerts firing, while customer support lines lit up. For global firms, the staggered impact across time zones meant 24/7 firefighting.

- Long-Term Hits: SEO rankings dip from crawl errors, and compliance headaches arise if SLAs are breached. In regulated sectors like finance or healthcare, this could trigger audits or fines.

This outage hit during peak hours for Europe and the Americas, amplifying the pain for businesses already stretched thin post-pandemic. It's a reminder: your uptime is only as strong as your weakest link.

Recommended Resilience Architecture For Your Business Services

Staying resilient doesn't require reinventing the wheel—just smart layering. Here are five battle-tested practices, each with a quick how-to:

1. Multi-Provider Redundancy: Don't put all eggs in one basket. Route traffic through alternatives like Akamai or Fastly for failover. Tip: Use anycast DNS to auto-switch providers in under 60 seconds.

2. Aggressive Caching and Edge Computing: Pre-load static assets at the edge to survive backend blips. Tip: Implement immutable caching with TTLs of 24+ hours for non-volatile content.

3. Robust Monitoring and Alerting: Tools like Datadog, Dynatrace or Prometheus can detect anomalies early. Tip: Set up synthetic monitors that simulate user journeys, alerting on >1% error rates.

4. Graceful Degradation and Offline Modes: Design apps to work partially offline—queue actions for retry. Tip: Use service workers in PWAs to cache critical paths.

These aren't silver bullets, but combined, they can cut recovery time from hours to minutes.

Cloudflare also must be doing everything that is possible to stay resilient. However, small failures either in the infrastructure, or applications or third party dependencies are inevitable. Your services must continue to stay resilient against potential failures. How? The answer lies in verifying as frequently as possible that your business services are resilient and if not, keep making corrections.

Why Is Regular Resilience Testing Non-Negotiable?

Outages like Cloudflare's expose the "unknown unknowns"—flaws that only surface under stress. Regular testing flips the script: instead of reactive firefighting, you're proactive architects.

Even though you have architected and implemented the good practices for resilience, there are lot of variables which can change your resiliency assumptions.

- Code changes are deployed and software is updated on your application or underlying infrastructure clusters.

- Configurations/behavior of the underlying infrastructure is updated. E.g: One of the services is moved from one VM to another VM with a lower configuration.

- New dependent services are introduced.

Unless you have enough resilience testing coverage with every change, you always will have unknown unknowns. With known unknowns, you at least have a tested mechanism on how to respond and recovery quickly.

Harness Chaos Engineering Strengthens Your Resilience Posture

- Network Latency and Packet Loss: Mimic DNS resolution delays or edge routing fails. Probe how your app handles 500ms+ lags—perfect for testing failover to secondary CDNs.

- Service Outage Simulation: "Kill" external dependencies like API calls to Cloudflare services. Use resilience probes to verify if your system auto-retries or degrades gracefully.

- Resource Contention Faults: Stress CPU/memory to echo overload from traffic spikes. ChaosGuard ensures experiments stay within guardrails, preventing cascade failures.

- Pod/Node Terminations (for K8s Users): Randomly evict resources to test scaling. Integrate with GitOps for automated rollbacks if thresholds breach.

- File or Disk size increases: Increase the files size, fill the underly disks or fill the database tables with fillers. Use resilience probes to verify if other services are functional. In the case of the Cloudflare outage, the root cause on their side appears to be increased file size or database changes, which might have been averted with regular resilience testing practice.

These aren't one-offs; run them in steady-state probes for baseline metrics, then blast radius tests for full-system validation. With AI-driven insights, Harness flags weak spots pre-outage—like over-reliance on a single provider—and suggests fixes. Early adopters report 30% uptime gains and halved incident severity.

Harness Chaos Engineering provides hundreds of ready to use fault templates to create required faulty scenarios and integrations with your APM systems to verify the resilience of your business services. The created chaos experiments are easy to add to either your deployment piplelines like Harness CD, GitLab, GitHub actions or to your GameDays.

Ready To Outage-Proof Your Business?

The Cloudflare outage was a global gut-check, but it's also an opportunity. By auditing dependencies today and layering in resilience practices—capped with tools like Harness—you'll sleep better knowing your services can weather the storm.

What's your first step? Audit your Cloudflare integrations or spin up a quick chaos experiment. Head to our Chaos Engineering page to learn more or sign up for our free tier with all the features that only limits the number of chaos experiments you can run in a month.

If you wish to learn more about resilience testing practices using Harness, this article will help.

Are you ready to outage-proof your business? Let's build a more unbreakable internet together, one test at a time.

Automating Chaos Engineering with Terraform

Infrastructure as Code (IaC) has revolutionized how we manage and provision infrastructure. But what about chaos engineering? Can you automate the setup of your chaos experiments the same way you provision your infrastructure?

The answer is yes. In this guide, I'll walk you through how to integrate Harness Chaos Engineering into your infrastructure using Terraform, making it easier to maintain resilient systems at scale.

Why Automate Chaos Engineering?

Before diving into the technical details, let's talk about why this matters.

Managing chaos engineering manually across multiple environments is time-consuming and error-prone. You need to set up infrastructures, configure service discovery, manage security policies, and maintain consistency across dev, staging, and production environments.

With Terraform, you can:

- Version control your entire chaos engineering setup

- Replicate configurations across environments reliably

- Integrate chaos engineering into your existing IaC workflows

- Collaborate with your team using familiar tools

What You Can Automate

The Harness Terraform provider lets you automate several key aspects of chaos engineering:

Infrastructure Setup - Enable chaos engineering on your existing Kubernetes clusters or provision new ones with chaos capabilities built in.

Service Discovery - Automatically detect services that can be targeted for chaos experiments, eliminating manual configuration.

Image Registries - Configure custom image registries for your chaos experiment workloads, giving you control over where container images are pulled from.

Security Governance - Define and enforce policies that control when and how chaos experiments can run, particularly important for production environments.

ChaosHub Management - Manage repositories of reusable chaos experiments, probes, and actions at the organization or project level.

Getting Started

Before you begin, make sure you have:

- Terraform installed and configured

- The Harness Terraform provider set up (see the official documentation)

- A Kubernetes infrastructure where you want to enable chaos engineering

Currently, the Harness Terraform provider for chaos engineering supports Kubernetes infrastructures.

Building Your Configuration

Let's walk through the key resources you'll need.

Setting Up Common Configuration

Start by defining common variables that will be used across all your resources:

locals {

org_id = var.org_identifier != null ? var.org_identifier : harness_platform_organization.this[0].id

project_id = var.project_identifier != null ? var.project_identifier : (

var.org_identifier != null ? "${var.org_identifier}_${replace(lower(var.project_name), " ", "_")}" :

"${harness_platform_organization.this[0].id}_${replace(lower(var.project_name), " ", "_")}"

)

common_tags = merge(

var.tags,

{

"module" = "harness-chaos-engineering"

}

)

tags_set = [for k, v in local.common_tags : "${k}=${v}"]

}

This approach keeps your configuration DRY and makes it easy to reference organization and project identifiers throughout your setup.

Creating Organization and Project

If you don't have an existing organization or project, Terraform can create them:

resource "harness_platform_organization" "this" {

count = var.org_identifier == null ? 1 : 0

identifier = replace(lower(var.org_name), " ", "_")

name = var.org_name

description = "Organization for Chaos Engineering"

tags = local.tags_set

}

resource "harness_platform_project" "this" {

depends_on = [harness_platform_organization.this]

count = var.project_identifier == null ? 1 : 0

org_id = local.org_id

identifier = local.project_id

name = var.project_name

color = var.project_color

description = "Project for Chaos Engineering"

tags = local.tags_set

}

Setting Up Kubernetes Connector

Connect your Kubernetes cluster to Harness:

resource "harness_platform_connector_kubernetes" "this" {

depends_on = [harness_platform_project.this]

identifier = var.k8s_connector_name

name = var.k8s_connector_name

org_id = local.org_id

project_id = local.project_id

inherit_from_delegate {

delegate_selectors = var.delegate_selectors

}

tags = local.tags_set

}

Creating Environment and Infrastructure

Set up your environment and infrastructure definition:

resource "harness_platform_environment" "this" {

depends_on = [

harness_platform_project.this,

harness_platform_connector_kubernetes.this

]

identifier = var.environment_identifier

name = var.environment_name

org_id = local.org_id

project_id = local.project_id

type = "PreProduction"

tags = local.tags_set

}

resource "harness_platform_infrastructure" "this" {

depends_on = [

harness_platform_environment.this,

harness_platform_connector_kubernetes.this

]

identifier = var.infrastructure_identifier

name = var.infrastructure_name

org_id = local.org_id

project_id = local.project_id

env_id = harness_platform_environment.this.id

deployment_type = var.deployment_type

type = "KubernetesDirect"

yaml = <<-EOT

infrastructureDefinition:

name: ${var.infrastructure_name}

identifier: ${var.infrastructure_identifier}

orgIdentifier: ${local.org_id}

projectIdentifier: ${local.project_id}

environmentRef: ${harness_platform_environment.this.id}

type: KubernetesDirect

deploymentType: ${var.deployment_type}

allowSimultaneousDeployments: false

spec:

connectorRef: ${var.k8s_connector_name}

namespace: ${var.namespace}

releaseName: release-${var.infrastructure_identifier}

EOT

tags = local.tags_set

}

Enabling Chaos Infrastructure

Now enable chaos engineering capabilities on your infrastructure:

resource "harness_chaos_infrastructure_v2" "this" {

depends_on = [harness_platform_infrastructure.this]

org_id = local.org_id

project_id = local.project_id

environment_id = harness_platform_environment.this.id

infra_id = harness_platform_infrastructure.this.id

name = var.chaos_infra_name

description = var.chaos_infra_description

namespace = var.chaos_infra_namespace

infra_type = var.chaos_infra_type

ai_enabled = var.chaos_ai_enabled

insecure_skip_verify = var.chaos_insecure_skip_verify

service_account = var.service_account_name

tags = local.tags_set

}

Automating Service Discovery

Service discovery eliminates the need to manually register services for chaos experiments:

resource "harness_service_discovery_agent" "this" {

depends_on = [harness_chaos_infrastructure_v2.this]

name = var.service_discovery_agent_name

org_identifier = local.org_id

project_identifier = local.project_id

environment_identifier = harness_platform_environment.this.id

infra_identifier = harness_platform_infrastructure.this.id

installation_type = var.sd_installation_type

config {

kubernetes {

namespace = var.sd_namespace

}

}

}

Once deployed, the agent will automatically detect services running in your cluster, making them available for chaos experiments.

Configuring Custom Image Registries

For organizations that use private registries or have specific image sourcing requirements, you can configure custom image registries at both organization and project levels:

resource "harness_chaos_image_registry" "org_level" {

depends_on = [harness_platform_organization.this]

count = var.setup_custom_registry ? 1 : 0

org_id = local.org_id

registry_server = var.registry_server

registry_account = var.registry_account

is_default = var.is_default_registry

is_override_allowed = var.is_override_allowed

is_private = var.is_private_registry

secret_name = var.registry_secret_name != "" ? var.registry_secret_name : null

use_custom_images = var.use_custom_images

dynamic "custom_images" {

for_each = var.use_custom_images ? [1] : []

content {

log_watcher = var.log_watcher_image != "" ? var.log_watcher_image : null

ddcr = var.ddcr_image != "" ? var.ddcr_image : null

ddcr_lib = var.ddcr_lib_image != "" ? var.ddcr_lib_image : null

ddcr_fault = var.ddcr_fault_image != "" ? var.ddcr_fault_image : null

}

}

}

resource "harness_chaos_image_registry" "project_level" {

depends_on = [harness_chaos_image_registry.org_level]

count = var.setup_custom_registry ? 1 : 0

org_id = local.org_id

project_id = local.project_id

registry_server = var.registry_server

registry_account = var.registry_account

is_default = var.is_default_registry

is_override_allowed = var.is_override_allowed

is_private = var.is_private_registry

secret_name = var.registry_secret_name != "" ? var.registry_secret_name : null

use_custom_images = var.use_custom_images

dynamic "custom_images" {

for_each = var.use_custom_images ? [1] : []

content {

log_watcher = var.log_watcher_image != "" ? var.log_watcher_image : null

ddcr = var.ddcr_image != "" ? var.ddcr_image : null

ddcr_lib = var.ddcr_lib_image != "" ? var.ddcr_lib_image : null

ddcr_fault = var.ddcr_fault_image != "" ? var.ddcr_fault_image : null

}

}

}

Setting Up Git Connector for ChaosHub

To manage your chaos experiments in Git repositories, first create a Git connector:

resource "harness_platform_connector_git" "chaos_hub" {

depends_on = [

harness_platform_organization.this,

harness_platform_project.this

]

count = var.create_git_connector ? 1 : 0

identifier = replace(lower(var.git_connector_name), " ", "-")

name = var.git_connector_name

description = "Git connector for Chaos Hub"

org_id = local.org_id

project_id = local.project_id

url = var.git_connector_url

connection_type = "Account"

dynamic "credentials" {

for_each = var.git_connector_ssh_key != "" ? [1] : []

content {

ssh {

ssh_key_ref = var.git_connector_ssh_key

}

}

}

dynamic "credentials" {

for_each = var.git_connector_ssh_key == "" ? [1] : []

content {

http {

username = var.git_connector_username != "" ? var.git_connector_username : null

password_ref = var.git_connector_password != "" ? var.git_connector_password : null

dynamic "github_app" {

for_each = var.github_app_id != "" ? [1] : []

content {

application_id = var.github_app_id

installation_id = var.github_installation_id

private_key_ref = var.github_private_key_ref

}

}

}

}

}

validation_repo = var.git_connector_validation_repo

tags = merge(

{ for k, v in var.chaos_hub_tags : k => v },

{

"managed_by" = "terraform"

"purpose" = "chaos-hub-git-connector"

}

)

}

This connector supports multiple authentication methods including SSH keys, HTTP credentials, and GitHub Apps, making it flexible for different Git hosting providers.

Managing ChaosHubs

ChaosHubs let you create libraries of reusable chaos experiments:

resource "harness_chaos_hub" "this" {

depends_on = [harness_platform_connector_git.chaos_hub]

count = var.create_chaos_hub ? 1 : 0

org_id = local.org_id

project_id = local.project_id

name = var.chaos_hub_name

description = var.chaos_hub_description

connector_id = var.create_git_connector ? one(harness_platform_connector_git.chaos_hub[*].id) : var.chaos_hub_connector_id

repo_branch = var.chaos_hub_repo_branch

repo_name = var.chaos_hub_repo_name

is_default = var.chaos_hub_is_default

connector_scope = var.chaos_hub_connector_scope

tags = var.chaos_hub_tags

lifecycle {

ignore_changes = [tags]

}

}

The configuration intelligently uses either a newly created Git connector or an existing one based on your variables, providing flexibility in how you manage your infrastructure.

Implementing Security Governance

This is where things get interesting. Chaos Guard lets you define rules that control chaos experiment execution.

First, create conditions that define what you want to control:

resource "harness_chaos_security_governance_condition" "this" {

depends_on = [

harness_platform_environment.this,

harness_platform_infrastructure.this,

harness_chaos_infrastructure_v2.this,

]

name = var.security_governance_condition_name

description = "Condition to block destructive experiments"

org_id = local.org_id

project_id = local.project_id

infra_type = var.security_governance_condition_infra_type

fault_spec {

operator = var.security_governance_condition_operator

dynamic "faults" {

for_each = var.security_governance_condition_faults

content {

fault_type = faults.value.fault_type

name = faults.value.name

}

}

}

dynamic "k8s_spec" {

for_each = var.security_governance_condition_infra_type == "KubernetesV2" ? [1] : []

content {

infra_spec {

operator = var.security_governance_condition_infra_operator

infra_ids = ["${harness_platform_environment.this.id}/${harness_chaos_infrastructure_v2.this.id}"]

}

dynamic "application_spec" {

for_each = var.security_governance_condition_application_spec != null ? [1] : []

content {

operator = var.security_governance_condition_application_spec.operator

dynamic "workloads" {

for_each = var.security_governance_condition_application_spec.workloads

content {

namespace = workloads.value.namespace

kind = workloads.value.kind

}

}

}

}

dynamic "chaos_service_account_spec" {

for_each = var.security_governance_condition_service_account_spec != null ? [1] : []

content {

operator = var.security_governance_condition_service_account_spec.operator

service_accounts = var.security_governance_condition_service_account_spec.service_accounts

}

}

}

}

dynamic "machine_spec" {

for_each = contains(["Windows", "Linux"], var.security_governance_condition_infra_type) ? [1] : []

content {

infra_spec {

operator = var.security_governance_condition_infra_operator

infra_ids = var.security_governance_condition_infra_ids

}

}

}

lifecycle {

ignore_changes = [name]

}

tags = [

for k, v in merge(

local.common_tags,

{

"platform" = lower(var.security_governance_condition_infra_type)

}

) : "${k}=${v}"

]

}

This configuration supports multiple infrastructure types including Kubernetes, Windows, and Linux, with specific specifications for each platform type.

Then, create rules that apply these conditions with specific actions:

resource "harness_chaos_security_governance_rule" "this" {

depends_on = [harness_chaos_security_governance_condition.this]

name = var.security_governance_rule_name

description = var.security_governance_rule_description

org_id = local.org_id

project_id = local.project_id

is_enabled = var.security_governance_rule_is_enabled

condition_ids = [harness_chaos_security_governance_condition.this.id]

user_group_ids = var.security_governance_rule_user_group_ids

dynamic "time_windows" {

for_each = var.security_governance_rule_time_windows

content {

time_zone = time_windows.value.time_zone

start_time = time_windows.value.start_time

duration = time_windows.value.duration

dynamic "recurrence" {

for_each = time_windows.value.recurrence != null ? [time_windows.value.recurrence] : []

content {

type = recurrence.value.type

until = recurrence.value.until

}

}

}

}

lifecycle {

ignore_changes = [name]

}

tags = [

for k, v in merge(

local.common_tags,

{

"platform" = lower(var.security_governance_condition_infra_type)

}

) : "${k}=${v}"

]

}

This setup ensures that certain types of chaos experiments require approval or are blocked entirely in production environments, giving you confidence to enable chaos engineering without fear of accidental damage. You can also configure time windows for when experiments are allowed to run.

What Happens After Deployment

Once you've applied your Terraform configuration:

- Your service discovery agent starts detecting applications in your configured environments automatically

- Your security governance rules are active, controlling how chaos experiments can be executed

- Your custom ChaosHubs are synchronized and available for use

- Custom image registries are configured if you're using private registries

At this point, you can use the Harness UI to create and configure specific chaos experiments, then execute them against your discovered services. The infrastructure and governance layer is handled by Terraform, while the experiment design remains flexible and can be adjusted through the UI.

Putting It All Together

Here's a practical example of what a complete module structure might look like:

module "chaos_engineering" {

source = "./modules/chaos-engineering"

# Organization and Project

org_identifier = "my-org"

project_identifier = "production"

# Infrastructure

environment_id = "prod-k8s"

infrastructure_id = "k8s-cluster-01"

namespace = "default"

# Chaos Infrastructure

chaos_infra_name = "prod-chaos-infra"

chaos_infra_namespace = "harness-chaos"

chaos_ai_enabled = true

# Service Discovery

service_discovery_agent_name = "prod-service-discovery"

sd_namespace = "harness-delegate-ng"

# Custom Registry (optional)

setup_custom_registry = true

registry_server = "my-registry.io"

registry_account = "chaos-experiments"

is_private_registry = true

# Git Connector for ChaosHub

create_git_connector = true

git_connector_name = "chaos-experiments-git"

git_connector_url = "https://github.com/myorg/chaos-experiments"

git_connector_username = "myuser"

git_connector_password = "account.github_token"

# ChaosHub

create_chaos_hub = true

chaos_hub_name = "production-experiments"

chaos_hub_repo_branch = "main"

chaos_hub_repo_name = "chaos-experiments"

# Security Governance

security_governance_condition_name = "block-destructive-faults"

security_governance_condition_faults = [

{

fault_type = "pod-delete"

name = "pod-delete"

}

]

security_governance_rule_name = "production-safety-rule"

security_governance_rule_user_group_ids = ["platform-team"]

security_governance_rule_is_enabled = true

# Tags

tags = {

environment = "production"

managed_by = "terraform"

team = "platform"

}

}

Best Practices

As you build out your chaos engineering automation, keep these practices in mind:

Start with non-production environments - Test your Terraform configurations and governance rules in development or staging before rolling out to production.

Use separate state files - Maintain separate Terraform state files for different environments to prevent accidental cross-environment changes.

Version your chaos experiments - Store experiment definitions in Git repositories and reference them through ChaosHubs for better collaboration and change tracking.

Leverage conditional resource creation - Use count parameters to optionally create resources like custom registries or Git connectors based on your needs.

Implement proper authentication - Use Harness secrets management for storing sensitive credentials like registry passwords and Git authentication tokens.

Review governance rules regularly - As your understanding of system resilience grows, update your governance conditions and rules to reflect new insights.

Use time windows strategically - Configure governance rules with time windows to allow experiments only during business hours or maintenance windows.

Tag everything - Proper tagging helps with cost tracking, resource management, and understanding relationships between resources.

Combine with CI/CD - Integrate your chaos engineering Terraform configurations into your CI/CD pipelines for fully automated infrastructure deployment.

Moving Forward

Automating chaos engineering with Terraform removes friction from adopting resilience testing practices. You can now treat your chaos engineering setup like any other infrastructure component, with version control, code review, and automated deployment.

The key is starting small. Pick one environment, set up the basic infrastructure and service discovery, then gradually add governance rules and custom experiments as you learn what works for your systems.

For more details on specific resources and configuration options, check out the Harness Terraform Provider documentation.

What aspects of chaos engineering do you think would benefit most from automation in your organization?

Important Links:

New to Harness Chaos Engineering? Signup here.

Trying to find the documentation for Chaos Engineering? Go here: Chaos Engineering

Learn more: What is Terraform

.png)

.png)

Running Chaos Engineering on GKE Autopilot Just Got Easier

Google's GKE Autopilot provides fully managed Kubernetes without the operational overhead of node management, security patches, or capacity planning. However, running chaos engineering experiments on Autopilot has been challenging due to its security restrictions.

We've solved that problem.

Why This Matters

Chaos engineering helps you identify issues before they impact your users. The approach involves intentionally introducing controlled failures to understand how your system responds. Think of it as a fire drill for your infrastructure.

GKE Autopilot secures clusters by restricting many permissions, which is excellent for security. However, this made running chaos experiments difficult. You couldn't simply deploy Harness Chaos Engineering and begin testing.

That changes today.

What Changed

We collaborated with Google to add Harness Chaos Engineering to GKE Autopilot's official allowlist. This integration enables Harness to run chaos experiments while operating entirely within Autopilot's security boundaries.

No workarounds required. Just chaos engineering that works as expected.

How to Set It Up

1. Apply the Allowlist

First, you need to tell GKE Autopilot that Harness chaos workloads are okay to run. Copy this command:

kubectl apply -f - <<'EOF'

apiVersion: auto.gke.io/v1

kind: AllowlistSynchronizer

metadata:

name: harness-chaos-allowlist-synchronizer

spec:

allowlistPaths:

- Harness/allowlists/chaos/v1.62/*

- Harness/allowlists/service-discovery/v0.42/*

EOF

Then wait for it to be ready:

kubectl wait --for=condition=Ready allowlistsynchronizer/harness-chaos-allowlist-synchronizer --timeout=60s

That's it for the cluster configuration.

2. Enable Autopilot Mode in Harness

Next, configure Harness to work with GKE Autopilot. You have several options:

If you're setting up chaos for the first time, just use the 1-click chaos setup and toggle on "Use static name for configmap and secret" during setup.

If you already have infrastructure configured, go to Chaos Engineering > Environments, find your infrastructure, and enable that same toggle.

You can also set this up when creating a new discovery agent, or update an existing one in Project Settings > Discovery.

What You Can Test

You can run most of the chaos experiments you'd expect:

The integration supports a comprehensive range of chaos experiments:

Resource stress: Pod CPU Hog, Pod Memory Hog, Pod IO Stress, Disk Fill. These experiments help you understand how your pods behave under resource constraints.

Network chaos: Pod Network Latency, Pod Network Loss, Pod Network Corruption, Pod Network Duplication, Pod Network Partition, Pod Network Rate Limit. Production networks experience imperfections, and your application needs to handle them gracefully.

DNS problems: Pod DNS Error to disrupt resolution, Pod DNS Spoof to redirect traffic.

HTTP faults: Pod HTTP Latency, Pod HTTP Modify Body, Pod HTTP Modify Header, Pod HTTP Reset Peer, Pod HTTP Status Code. These experiments test how your APIs respond to unexpected behavior.

API-level chaos: Pod API Block, Pod API Latency, Pod API Modify Body, Pod API Modify Header, Pod API Status Code. Good for testing service mesh and gateway behavior.

File system chaos: Pod IO Attribute Override, Pod IO Error, Pod IO Latency, Pod IO Mistake. These experiments reveal how your application handles storage issues.

Container lifecycle: Container Kill and Pod Delete to test recovery. Pod Autoscaler to see if scaling works under pressure.

JVM chaos if you're running Java: Pod JVM CPU Stress, Pod JVM Method Exception, Pod JVM Method Latency, Pod JVM Modify Return, Pod JVM Trigger GC.

Database chaos for Java apps: Pod JVM SQL Exception, Pod JVM SQL Latency, Pod JVM Mongo Exception, Pod JVM Mongo Latency, Pod JVM Solace Exception, Pod JVM Solace Latency.

Cache problems: Redis Cache Expire, Redis Cache Limit, Redis Cache Penetration.

Time manipulation: Time Chaos to introduce controlled time offsets.

What This Means for You

If you're running GKE Autopilot and want to implement chaos engineering with Harness, you can now do both without compromise. There's no need to choose between Google's managed experience and resilience testing.

For teams new to chaos engineering, Autopilot provides an ideal starting point. The managed environment reduces infrastructure complexity, allowing you to focus on understanding application behavior under stress.

Getting Started

Start with a simple CPU stress test. Select a non-critical pod and run a low-intensity Pod CPU Hog experiment in Harness. Observe the results: Does your application degrade gracefully? Do your alerts trigger as expected? Does it recover when the experiment completes?

Start small, understand your system's behavior, then explore more complex scenarios.

You can configure Service Discovery to visualize your services in Application Maps, add probes to validate resilience during experiments, and progressively explore more sophisticated fault injection scenarios.

Check out the documentation for the complete setup guide and all supported experiments.

The goal of chaos engineering isn't to break things. It's to understand what breaks before it impacts your users.

.png)

.png)

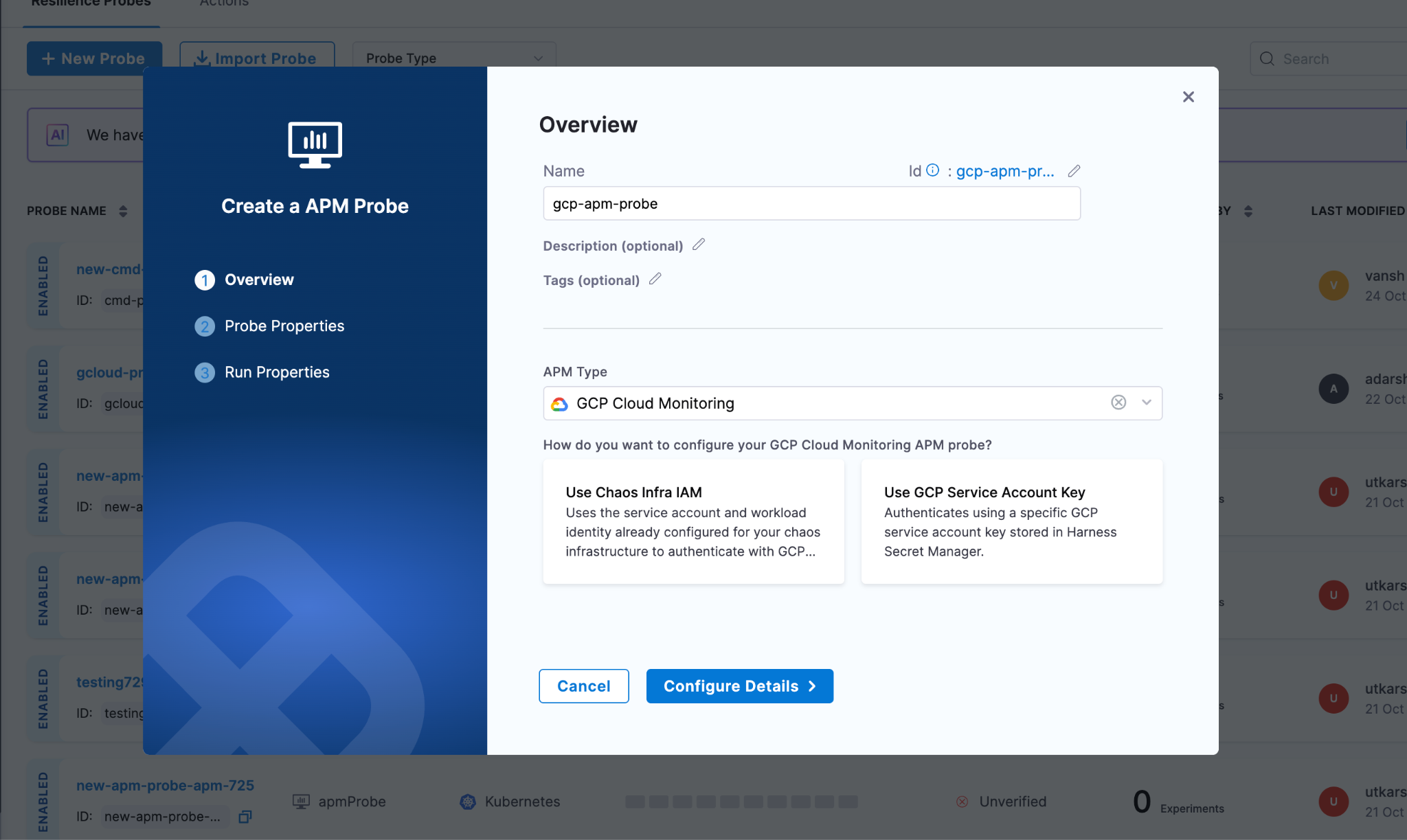

Validating chaos experiments with GCP Cloud Monitoring probes

Running infrastructure on Google Cloud Platform means you're already collecting metrics through Cloud Monitoring. But here's the question: when you deliberately break things during chaos experiments, how do you know if your systems actually stayed healthy?

The GCP Cloud Monitoring probe in Harness Chaos Engineering answers this by letting you query your existing GCP metrics using PromQL and automatically validate them against your SLOs. No manual dashboard watching, no guessing whether that CPU spike was acceptable. Just automated, pass/fail validation of whether your infrastructure held up during controlled chaos.

The Challenge with GCP Infrastructure Testing

Here's a common scenario: you run a chaos experiment that kills pods in your GKE cluster. You watch your GCP Console, see some metrics fluctuate, and everything seems fine. But was it actually fine? Did CPU stay under 80%? Did memory pressure trigger any OOM kills? Did disk I/O queues grow beyond acceptable levels?

Without objective measurement, you're relying on gut feel. GCP Cloud Monitoring probes solve this by turning your existing monitoring into automated test assertions for chaos experiments.

The beauty is that you're already collecting these metrics. GCP Cloud Monitoring tracks everything from compute instance performance to Cloud Run request latency. These probes simply tap into that data stream during chaos experiments and validate it against your defined thresholds.

What You'll Need

Before configuring a GCP Cloud Monitoring probe, ensure you have:

- An active GCP account with Cloud Monitoring enabled for your project

- Network access to the GCP Cloud Monitoring API from your Kubernetes execution plane