Featured Blogs

When you toggle a feature flag, you're changing your application's behavior, sometimes in subtle ways that are hard to detect through logs or metrics alone. By adding feature flag attributes directly to spans, you can make these changes observable at the trace level. This lets you correlate performance, errors, or unusual behavior with the exact flag treatment a user received.

In practice, adding feature flag attributes to your spans allows faster debugging, clearer insights, and more confidence when rolling out flags in production. As teams ship code faster than ever, often with the help of AI, feature flags have become a primary tactic for controlling risk in production. However, when something goes wrong, it’s not enough to know that a request was slow or errored; you need to know which feature flag configuration caused the issue.

Without surfacing feature flag context in traces, teams are left to guess which rollout, experiment, or configuration change affected the behavior. Adding feature flag treatments directly to spans closes this gap by making flag-driven behavior observable, debuggable, and auditable in real time.

Enhancing Observability with Feature Flags and OpenTelemetry

If you’re already using OpenTelemetry, you may want to understand how to surface feature flag behavior in your traces. This article walks you through one approach to achieving this: manually enriching spans with feature flag attributes, allowing you to query traces based on specific flag states.

While this isn’t a native Harness FME integration, you can apply a simple pattern in your own applications to improve observability:

- Identify the spans in your code where feature flag behavior impacts execution. This could be a request handler, a background job, or any logical unit of work.

- Start a span (or use an existing one) for that unit of work using your OpenTelemetry tracer.

- Retrieve the relevant feature flag treatments for the context (for example, a user ID or session).

- Add each flag treatment as a span attribute so your traces can capture the state of feature flags during execution.

- Use these attributes in your observability platform (e.g., Honeycomb) to filter or query traces by flag state.

This approach requires adding feature flag treatments as span attributes in your application code. Feature flags are not automatically exported to OpenTelemetry in Harness FME.
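The steps above can be sketched in a few lines. This is a minimal illustration in Python rather than the article's Java. It assumes only that your span object exposes an OpenTelemetry-style `set_attribute(key, value)` method. `RecordingSpan` and `annotate_span` are hypothetical names. The `split.` prefix matches the attribute name used in the Honeycomb query later in this article.

```python
from typing import Dict, Protocol


class SpanLike(Protocol):
    """Anything with an OpenTelemetry-style set_attribute(key, value) method."""

    def set_attribute(self, key: str, value: str) -> None: ...


class RecordingSpan:
    """Hypothetical stand-in for a real span: it only records attributes."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.attributes: Dict[str, str] = {}

    def set_attribute(self, key: str, value: str) -> None:
        self.attributes[key] = value


def annotate_span(span: SpanLike, treatments: Dict[str, str], prefix: str = "split.") -> None:
    """Record each flag treatment as a span attribute, e.g. split.next_step = "on"."""
    for flag, treatment in treatments.items():
        span.set_attribute(f"{prefix}{flag}", treatment)


# In a real service, the treatments would come from the FME SDK for the current user.
span = RecordingSpan("echo")
annotate_span(span, {"next_step": "on", "new_onboarding": "off"})
print(span.attributes)  # {'split.next_step': 'on', 'split.new_onboarding': 'off'}
```

The OpenTelemetry Python API's span objects (for example, the one yielded by `tracer.start_as_current_span(...)`) also provide `set_attribute`, so the same helper should apply to them unchanged.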

For this demonstration, we will use Honeycomb’s Java Agent and a small sample application (a threaded echo server) to show how feature flag treatments can be added to spans for improved visibility. While this example uses Java, this pattern is language-agnostic and can be applied in any application that supports OpenTelemetry. The same steps apply to web services, background jobs, or any application logic where you want to track the impact of feature flags.

Prerequisites

Before you begin, make sure you have the following:

- Java installed (v11 or later)

- A working local development environment

- Basic familiarity with Java sockets and threads

- Permission to bind to local ports (the sample server listens on port 5009)

Setup

Follow these instructions to prepare your workspace for running the sample threaded echo server:

- Create a working directory for your project by running the following command: `mkdir threaded-echo-server && cd threaded-echo-server`

- Add your Java source files (for example, `ThreadedEchoServer.java` and `ClientHandler.java`).

- Compile the server by running the following command: `javac ThreadedEchoServer.java`

- Run the server with `java ThreadedEchoServer`

How the Threaded Echo Server works

To illustrate this approach, we’ll use a small Java example: a threaded socket server that listens on port 5009 and echoes back whatever text the client sends.

The example below introduces a simple Java-based Threaded Echo Server. This server acts as our testbed for adding flag-aware span instrumentation.

When the feature flag next_step is on, the server sleeps for two seconds. The sleep is wrapped with a span named "next_step" / "span2". When the flag is off, the server executes the normal doSomeWork behavior without the added wait time.

This produces the visible difference in performance shown by OpenTelemetry in the chart below. With the flag turned on, the spans appear in your Honeycomb trace.

In this trace, the client sends four words. Each word shows nearly two seconds of processing time, which is the exact duration introduced by the feature flag.

With the flag turned off, the resulting trace shows the normal, faster echo processing flow:

The feature flag impacts the trace in two ways:

- A new nested span appears, named after the feature flag. The green bars in each trace show how the flag creates explicit instrumented regions within a single client session.

- The two seconds of artificial latency per word make these spans easy to identify.
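The flag-gated branch described above can be sketched as follows. This is a minimal Python sketch, not the article's Java server. `Tracer`, `handle_word`, and `do_some_work` are hypothetical stand-ins, with the last one mirroring the `doSomeWork` behavior. The delay is a parameter so the sketch can run quickly, whereas the demo uses two seconds.

```python
import time
from contextlib import contextmanager
from typing import Iterator, List, Tuple


class Tracer:
    """Hypothetical minimal tracer: records (span name, duration in seconds)."""

    def __init__(self) -> None:
        self.finished: List[Tuple[str, float]] = []

    @contextmanager
    def span(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            self.finished.append((name, time.perf_counter() - start))


def do_some_work(word: str) -> str:
    # Normal echo behavior: return the text unchanged.
    return word


def handle_word(tracer: Tracer, word: str, next_step: str, delay: float = 2.0) -> str:
    with tracer.span("echo"):
        if next_step == "on":
            # Flag on: the artificial delay gets its own nested span named after the flag.
            with tracer.span("next_step"):
                time.sleep(delay)
        return do_some_work(word)


tracer = Tracer()
handle_word(tracer, "hello", next_step="on", delay=0.05)
handle_word(tracer, "world", next_step="off", delay=0.05)
print([name for name, _ in tracer.finished])  # ['next_step', 'echo', 'echo']
```

Inner spans finish before their parents, so the nested `next_step` span is recorded ahead of its enclosing `echo` span.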

Adding Feature Flag Treatments to Spans

So far, we’ve seen that feature flags can create additional spans in a trace. We can take this a step further: making the flags themselves queryable by adding their treatments as attributes to the top-level span. This lets you filter and analyze traces based on flag behavior.

The example below shows how the server evaluates its feature flags and attaches each treatment to the root echo span.

The program evaluates three feature flags: next_step, multivariant_demo, and new_onboarding. Using Harness FME, all flags are evaluated up front and stored in a flag2treatments map. Any dynamic changes to a flag during execution are ignored for the remainder of the program's run; however, there are ways to handle this in more advanced scenarios.

For this example, caching the treatments is fine, and each treatment is also added as a span attribute. By including the flag “impression” in the span, you can query traces to see which sessions were affected by a particular flag or treatment. This makes it easier to isolate and analyze trace behavior driven by specific feature flags.
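To illustrate the up-front evaluation and caching described above, here is a hedged Python sketch. `CountingEvaluator` is a hypothetical stand-in for the FME client. The multivariant treatment name `v2` is made up. Only the three flag names and the `flag2treatments` map come from the article.

```python
from typing import Dict, List

FLAGS: List[str] = ["next_step", "multivariant_demo", "new_onboarding"]


class CountingEvaluator:
    """Hypothetical stand-in for the FME client; counts evaluation calls."""

    def __init__(self, rules: Dict[str, str]) -> None:
        self.rules = rules
        self.calls = 0

    def get_treatment(self, key: str, flag: str) -> str:
        self.calls += 1
        # Mimics the FME SDKs, which return "control" when a flag cannot be evaluated.
        return self.rules.get(flag, "control")


def evaluate_up_front(evaluator: CountingEvaluator, key: str) -> Dict[str, str]:
    """Evaluate every flag once and cache the results for the whole run."""
    return {flag: evaluator.get_treatment(key, flag) for flag in FLAGS}


evaluator = CountingEvaluator({"next_step": "on", "multivariant_demo": "v2"})
flag2treatments = evaluate_up_front(evaluator, "user-42")
evaluator.rules["next_step"] = "off"  # a change made mid-run is not picked up
root_span_attributes = {f"split.{flag}": t for flag, t in flag2treatments.items()}
print(root_span_attributes)
print(evaluator.calls)  # 3
```

The trade-off is the one the article names: one evaluation per flag keeps the hot path cheap, but any flag change during the run stays invisible until the map is rebuilt.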

In Honeycomb, you can count traces by feature flag “impression”: set COUNT in the Visualize section and add split.next_step = on in the Where section (combining multiple conditions with AND).
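To make the semantics of that query concrete, here is a rough Python equivalent of COUNT with ANDed Where conditions over exported span attributes. It is not Honeycomb's query engine or API. The span dictionaries are hypothetical sample data.

```python
from typing import Dict, List


def count_where(spans: List[Dict[str, str]], conditions: Dict[str, str]) -> int:
    """Count spans whose attributes match every condition (conditions are ANDed)."""
    return sum(
        1
        for attrs in spans
        if all(attrs.get(key) == value for key, value in conditions.items())
    )


exported = [
    {"name": "echo", "split.next_step": "on", "split.new_onboarding": "off"},
    {"name": "echo", "split.next_step": "off", "split.new_onboarding": "off"},
    {"name": "echo", "split.next_step": "on", "split.new_onboarding": "on"},
]
print(count_where(exported, {"split.next_step": "on"}))  # 2
print(count_where(exported, {"split.next_step": "on", "split.new_onboarding": "on"}))  # 1
```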

Next Steps for Feature Flag Observability

Feature flags aren’t ideal candidates for bytecode instrumentation. The challenge here isn’t in the SDK itself, but rather in determining what behavior you want to observe when a flag is toggled on or off.

Looking ahead, one possible approach is to treat spans as proxies for flags: a span could represent a flag, allowing you to enable or disable entire sections of live application code by identifying the associated spans. While conceptually powerful, this approach can be complex and may not scale well, depending on the number of spans your application uses.

In the short term, a simpler pattern works well: manually wrap feature flag changes with a span and add the flag treatments as span attributes. This provides you with visibility, powered by OpenTelemetry, into how feature flags impact your application's behavior, enabling better traceability and faster debugging.

To get started with feature flags, see the Harness FME Feature Management documentation. If you’re brand new to Harness FME, sign up for a free trial today.

Recent Blogs


Harness FME Fast and Furious

Over the past six months, we have been hard at work building an integrated experience to take full advantage of the new platform made available after the Split.io merger with Harness. We have shipped a unified Harness UI for migrated Split customers, added enterprise-grade controls for experiments and rollouts, and doubled down on AI to help teams see impact faster and act with confidence. Highlights include OpenFeature providers, Warehouse Native Experimentation (beta), AI Experiment Summaries, rule-based segments, SDK fallback treatments, dimensional analysis support, and new FME MCP tools that connect your flags to AI-assisted IDEs.

And our efforts are being noticed. Just last month, Forrester released the 2025 Forrester Wave™ for Continuous Delivery & Release Automation, in which Harness was ranked as a leader, in part due to our platform approach spanning CI/CD and FME. This helps us uniquely solve some of the most challenging problems facing DevOps teams today.

A more integrated experience: from Split UI to Harness UI

This year we completed the front-end migration path that moves customers from app.split.io to app.harness.io, giving teams a consistent, modern experience across the Harness platform with no developer code changes required. Day-to-day user flows remain familiar, while admins gain Harness-native RBAC, SSO, and API management with personal access token and service account token support.

What this means for you:

- No more switching UIs for customers who use FME and other Harness products

- No SDK or proxy changes required for production apps. Your flag evaluations continue as before.

- Harness RBAC and SSO now govern FME access. Migrations include clear before and after guides for roles, groups, and SCIM.

- Admin API parity with a documented before and after map and examples for the few endpoints that moved.

For admins, the quick confidence checklist, logging steps, and side-by-side screens make the switch straightforward. FME Settings routes you into the standard Harness RBAC screens for long-term consistency where appropriate.

Built for AI-driven workloads

Two themes shaped our AI investments: explainability and in-flow assist.

- Explainable measurement: AI Summaries help teams move faster from “what happened?” to “what should we do?” without forcing deep dives into raw statistics.

- AI in the developer loop: FME MCP tools help developers inspect, compare, and adjust flag states without context switching. This shortens the loop between finding an issue and safely changing a treatment.

- Data where you need it: Warehouse Native Experimentation runs analyses where your data already lives, improving transparency and aligning with the way modern AI and analytics teams operate.

To learn more, watch this video!

Warehouse Native Experimentation (beta)

Warehouse Native Experimentation lets you run analyses directly in your own data warehouse using your assignment and event data for more transparent, flexible measurement. We are pleased to announce that this feature is now available in beta. Customers can request access through their account team and read more about it in our docs.

What else is new in Harness FME

As you can see from all the new features below, we have been running hard and we are accelerating into the turn as we head toward the end of the year. We take pride in the partnerships we have with our customers. As we listen to your concerns, our engineering teams are working hard to implement the features you need to be successful.

October 2025

- FME MCP tools connect feature flags and experiments to AI-assisted IDEs such as Claude Code, Windsurf, Cursor, and VS Code. Explore flags, compare flag definitions, and audit rules conversationally to speed up release workflows.

- OpenFeature providers for Android, iOS, Web, Java, Python, .NET, Node.js, React, and Angular help standardize evaluations and reduce lock-in across services and teams.

- Harness Proxy centralizes and secures outgoing SDK traffic with support for OAuth and mTLS, easing egress-control needs at scale. It also works with other Harness products like Database DevOps.

- Owners as metadata clarifies accountability while edit privileges remain governed by RBAC.

September 2025

- SDK fallback treatments help you avoid unexpected control treatments by offering a centralized, simple, and scalable way to define fallbacks across your SDKs and applications.

- Experiment entry event filter keeps analysis clean by including only users who actually hit the experiment entry point.

July 2025

- Dimensional analysis on experiments reveals effects by browser, device, region, and more so you can catch segment-level regressions early.

- AI Summarize on experiments and metrics gives fast, accurate summaries for non-technical stakeholders and busy teams.

June 2025

- Rule-based segments target users dynamically using attribute conditions, reducing static list maintenance and making targeting rules easier to reuse.

- Experiment tags improve searchability and at-scale organization.

- Client-side cache controls for Browser, iOS, and Android SDKs let you tune rollout cache expiration and initialization behavior.

Foundation laid earlier in 2025

- Experiments Dashboard for easier setup and multi-treatment analysis.

- Release Agent, rebranded from Switch, adds follow-up Q&A on metric summaries with admin-controlled AI settings.

As always, you can find details on all our new features by reading our release notes.

What customers can expect next

We are excited to add more value for our customers by continuing to integrate Split with Harness to achieve the best of both worlds. Harness CI/CD customers can expect familiar and proven methodologies to show up in FME, like pipelines, RBAC, SSO support, and more. To see the full roadmap and get a sneak peek at what is coming, reach out to us to schedule a call with your account representative.

Get started

- Attending AWS re:Invent? Come see us at booth #731 and get a live demo.

- Already migrated from Split? Sign in at app.harness.io. If you are an admin, review the RBAC and SSO guides to validate group mappings and SCIM.

- New to Harness FME? Explore the product page and datasheet, then try FME in your environment.

Want the full details? Read the latest FME release notes for all features, dates, and docs.

Check out the Feature Management & Experimentation Summit

Read comparison of Harness FME with Unleash

Intent-Driven Assertions are Redefining How We Test Software

Picture this: your QA team just rolled out a comprehensive new test suite: polished, precise, and built to catch every bug. Yet soon after, half the tests fail. Not because the code is broken, but because the design team shifted a button slightly. And even when the tests pass, users still find issues in production. A familiar story?

End-to-end testing was meant to bridge that gap. It's how teams verify that complete user workflows actually work the way users expect them to. It's testing from the user's perspective: can they log in, complete a transaction, and see their data?

The Real Problem Isn't Maintenance. It's Misplaced Focus.

Maintaining traditional UI tests often feels endless. Hard-coded selectors break with every UI tweak, which happens nearly every sprint. A clean, well-structured test suite quickly turns into a maintenance marathon. Then come the flaky tests: scripts that fail because a button isn’t visible yet or an overlay momentarily blocks it. The application might work perfectly, yet the test still fails, creating unpredictable false alarms and eroding trust in test results.

The real issue lies in what’s being validated. Conventional assertions often focus on technical details, like whether a div.class-name-xy exists or a CSS selector returns a value, rather than confirming that the user experience actually works.

The problem with this approach is that it tests how something is implemented, not whether it works for the user. As a result, a test might pass even when the actual experience is broken, giving teams a false sense of confidence and wasting valuable debugging time.

Some common solutions attempt to bridge that gap. Teams experiment with smarter locators, dynamic waits, self-healing scripts, or visual validation tools to reduce flakiness. Others lean on behavior-driven frameworks such as Cucumber, SpecFlow, or Gauge to describe tests in plain, human-readable language. These approaches make progress, but they still rely on predefined selectors and rigid code structures that don’t always adapt when the UI or business logic changes.

What’s really needed is a shift in perspective: one that focuses on intent rather than implementation. Testing should understand what you’re trying to validate, not just how the test is written.

That’s exactly where Harness builds on these foundations. By combining AI understanding with intent-driven, natural language assertions, it goes beyond behavior-driven testing, actually turning human intent directly into executable validation.

What Are Intent-Driven Natural Language Assertions?

Harness AI Test Automation reimagines testing from the ground up. Instead of writing brittle scripts tied to UI selectors, it allows testers to describe what they actually want to verify, in plain, human language.

Think of it as moving from technical validation to intent validation. Rather than writing code to confirm whether a button exists, you can simply ask:

- “Did the login succeed?” or

- “Is the latest transaction a deposit?”

Behind the scenes, Harness AI interprets these statements dynamically, understanding both the context and the intent of the test. It evaluates the live state of the application to ensure assertions reflect real business logic, not just surface-level UI details.

This shift is more than a technical improvement; it’s a cultural one. It democratizes testing, empowering anyone on the team, from developers to product managers, to contribute meaningful, resilient checks. The result is faster test creation, easier maintenance, and validations that truly align with what users care about: a working, seamless experience.

Harness describes this as "Intent-based Testing", where tests express what matters rather than how to check it, enabling developers and QA teams to focus on outcomes, not implementation details.

How Harness AI Test Automation Solves Traditional Testing Issues

Traditional automation for end-to-end testing/UI testing often breaks when UIs change, leading to high maintenance overhead and flaky results. Playwright, Selenium, or Cypress scripts frequently fail because they depend on exact element paths or hardcoded data, which makes CI/CD pipelines brittle.

Industry statistics reveal that 70-80% of organizations still rely heavily on manual testing methods, creating significant bottlenecks in otherwise automated DevOps toolchains (Source).

Harness AI Test Automation addresses these issues by leveraging AI-powered assertions that dynamically adapt to the live page or API context. Benefits include:

- Reduced flakiness: Tests automatically handle UI changes without manual intervention

- Lower maintenance costs: AI-generated selectors eliminate constant rewriting of selectors or brittle logic

- Focus on business logic: Teams concentrate on verifying user-centric outcomes rather than technical details

- Faster and No-Code test creation: Organizations report 10x faster test creation and the ability to cut test creation time by up to 90%

Organizations using AI Test Automation see up to 70% less maintenance effort and significant improvements in release velocity.

How Harness AI Test Understands and Validates Your Intent

Harness uses large language models (LLMs) optimized for testing contexts. The AI:

- Understands Your Intent: The AI parses your natural language assertion to grasp what you're trying to verify, for example, “Did the login succeed?" or “Is the button visible after submission?"

- Analyzes Real Application Context: It evaluates the live state of your application by analyzing the HTML DOM and the rendered viewport. This provides the AI with a comprehensive understanding of the app's current behavior, structure, and visual presentation.

- Maintains Context History: It keeps a record of previous steps and results, so the AI can use historical context when validating new assertions.

- Learns from Past Runs: Outputs from prior test executions are stored and referenced, allowing future assertions to become more accurate and context-aware over time.

- Provides Detailed Reasoning: Instead of just marking a test as “pass” or “fail,” the AI explains why, offering insights backed by both visual and structural evidence.

Together, these layers of intelligence make Harness AI Assertions not just smarter but contextually aware, giving you a more human-like and reliable testing experience every time you run your pipeline.

This context-aware approach identifies subtle bugs that are often missed by traditional tests and reduces the risks associated with AI “hallucinations.” Hybrid verification techniques cross-check outputs against real-time data, ensuring reliability.

For example, when testing a dynamic transaction table, an assertion like “Verify the latest transaction is a deposit over $500” will succeed even if the table order changes or new rows are added. Harness adapts automatically without requiring code changes.

Harness Blog on AI Test Automation.

Crucially, we are not asking the AI to generate code once (although for some math questions it might) and then never consult it again. Instead, we ask the AI the question, with the context of the webpage, every time you run the test.

Whether it succeeds or fails, the assertion also returns its reasoning for the result:

How Teams Use Harness AI Assertions

Organizations across fintech, SaaS, and e-commerce are using Harness AI to simplify complex testing scenarios:

- Financial services: Validating transaction tables and workflows with natural language assertions.

- SaaS platforms: Checking onboarding flows and dynamic permission rules.

- E-commerce: Confirming discount logic and inventory updates dynamically.

- Healthcare: Transforming test creation from days to minutes.

Even less-technical users can author and maintain robust tests. Auto-suggested assertions and natural language prompts accelerate collaboration across QA, developers, and product teams.

You can also perform assertions based on parameters.

An early adopter reported that after integrating Harness AI Assertions, release verification time dropped by more than 50%, freeing QA teams to focus on higher-value work (DevOpsDigest coverage).

Transforming QA with Harness AI: Faster, Smarter, Reliable

Harness AI Test Automation empowers teams to move faster with confidence. Key benefits include:

- Faster test creation: Write robust assertions in minutes rather than hours.

- Reduced test maintenance: Fewer broken scripts and less manual debugging.

- Improved collaboration: Align developers, testers, and product managers around shared intent.

- Future-ready QA: Supports modern DevOps practices and continuous delivery pipelines.

Harness AI Test Automation turns traditional QA challenges into opportunities for smarter, more reliable automation, enabling organizations to release software faster while maintaining high quality.

Harness AI is to test what intelligent assistants are to coding: it allows humans to focus on strategy, intent, and value, while the AI handles repetitive validation (Harness AI Test Automation).

Harness AI Test Automation represents a paradigm shift in testing. By combining intent-driven natural language assertions, AI-powered context awareness, and self-adapting validation, it empowers teams to deliver reliable software faster and with less friction.

If you want to simplify maintenance while improving test reliability, contact us to learn more about how intent-driven, natural-language assertions can transform your testing experience.

DB Performance Testing with Harness FME


Databases have been crucial to web applications since their beginning, serving as the core storage for all functional aspects. They manage user identities, profiles, activities, and application-specific data, acting as the authoritative source of truth. Without databases, the interconnected information driving functionality and personalized experiences would not exist. Their integrity, performance, and scalability are vital for application success, and their strategic importance grows with increasing data complexity. In this article we are going to show you how you can leverage feature flags to compare different databases.

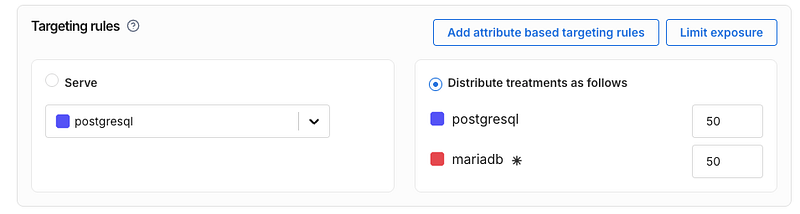

Let’s say you want to test and compare two different databases against one another. A common use case could be comparing the performance of two of the most popular open source databases: MariaDB and PostgreSQL.

MariaDB and PostgreSQL logos

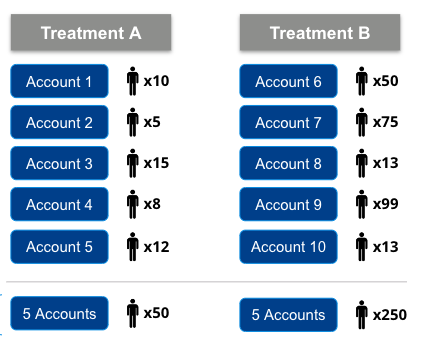

Let’s think about how we want to do this. We want to compare our users' experience with these two databases. In this example, we will run a 50/50 experiment. In a real production test, you would most likely already be using one database and would roll the other out to a small percentage of users, such as 90/10 (or even 95/5), to reduce the blast radius of potential issues.

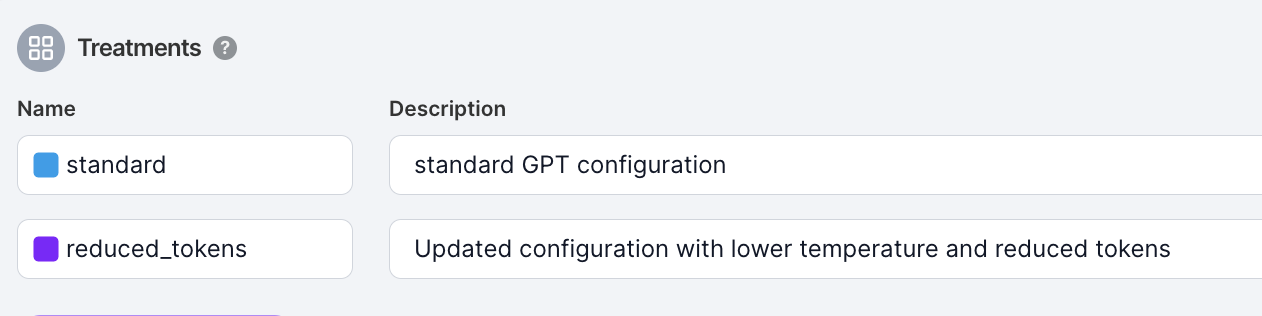

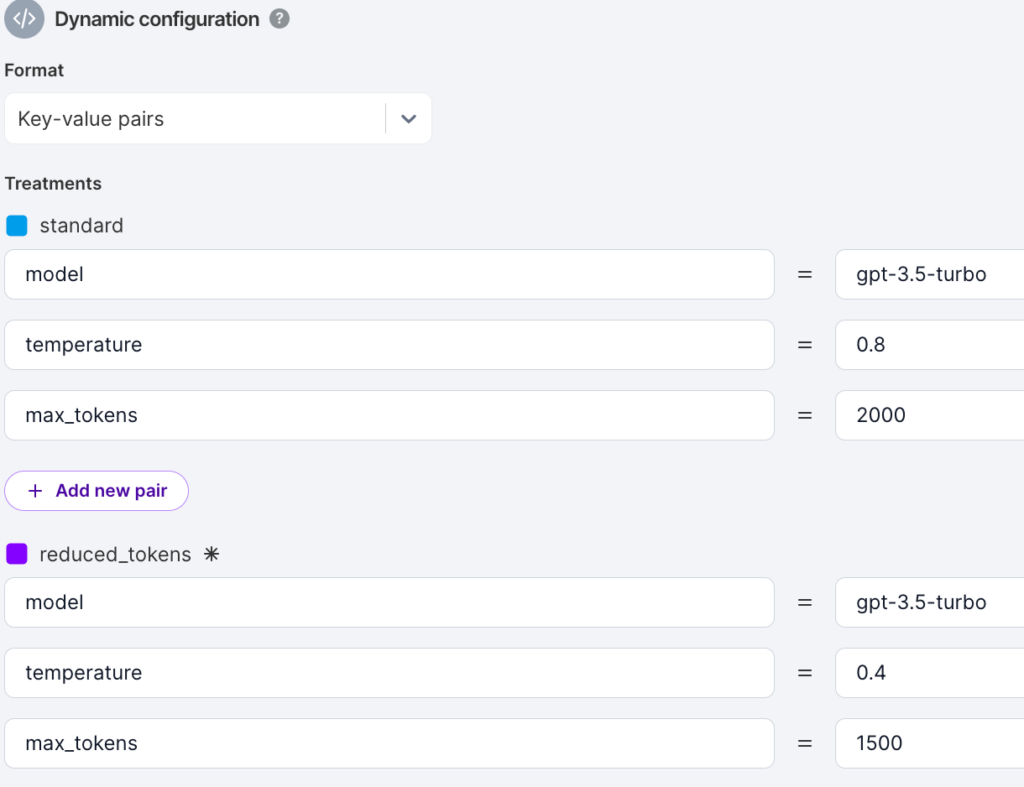

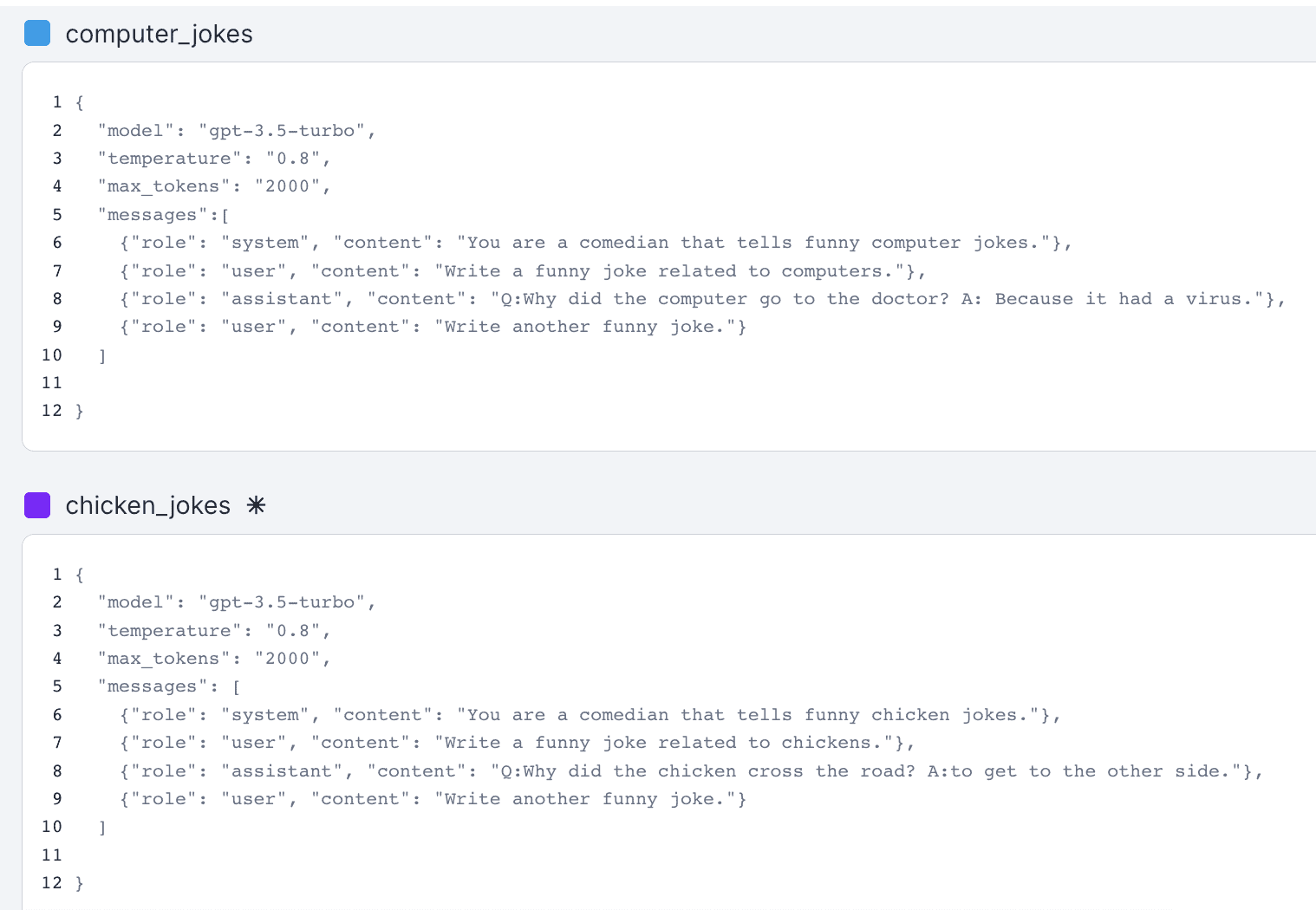

To run this experiment, first let’s create a Harness FME feature flag that distributes users 50/50 between MariaDB and PostgreSQL.
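For intuition about how a percentage split can be both random-looking and stable per user, here is a hedged sketch of deterministic, hash-based bucketing. This is not how Harness FME hashes keys internally, and the treatment names `mariadb`/`postgres` and the use of the flag name as a salt are assumptions. It only illustrates the 50/50 and 90/10 allocations discussed above.

```python
import hashlib
from typing import List, Tuple

Allocation = List[Tuple[str, int]]  # (treatment, percent); percents must sum to 100


def bucket(user_id: str, salt: str) -> int:
    """Map a user ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def assign(user_id: str, allocation: Allocation, salt: str = "db_performance_comparison") -> str:
    if sum(percent for _, percent in allocation) != 100:
        raise ValueError("allocation percentages must sum to 100")
    b = bucket(user_id, salt)
    cumulative = 0
    for treatment, percent in allocation:
        cumulative += percent
        if b < cumulative:
            return treatment
    raise AssertionError("unreachable when percentages sum to 100")


fifty_fifty: Allocation = [("mariadb", 50), ("postgres", 50)]
cautious: Allocation = [("mariadb", 90), ("postgres", 10)]  # smaller blast radius
users = [str(i) for i in range(1, 10_001)]
print(sum(assign(u, fifty_fifty) == "postgres" for u in users))
print(sum(assign(u, cautious) == "postgres" for u in users))
```

Because the same user always lands in the same bucket, ramping from 90/10 to 50/50 in this sketch keeps every user who was already on Postgres on Postgres.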

For this experiment, we need a reasonable amount of sample data in each database. In this sample experiment, we simply load the same data into both databases. In production, you’d want to build something like a read replica using a CDC (change data capture) tool so that your experimental database matches your production data.

Our code generates 100,000 rows for this data table and loads them into both databases before the experiment. That is small enough to avoid causing query-speed problems on its own, but large enough to reveal differences between the database technologies. The table also has three different data types: text (varchar), numbers, and timestamps.
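Here is a hedged sketch of generating and loading such a table. The table name `sample_data`, its columns, and the seed are hypothetical, since the article's schema is only shown as an image. In-memory SQLite databases stand in for the MariaDB and Postgres containers so the sketch runs with the standard library alone.

```python
import random
import sqlite3
from datetime import datetime, timedelta
from typing import List, Tuple

Row = Tuple[int, str, int, str]
ALPHABET = "abcdefghijklmnopqrstuvwxyz"


def generate_rows(n: int, seed: int = 7) -> List[Row]:
    """Deterministic sample rows mixing text, numbers, and timestamps."""
    rng = random.Random(seed)
    start = datetime(2024, 1, 1)
    rows: List[Row] = []
    for i in range(1, n + 1):
        name = "".join(rng.choices(ALPHABET, k=8))                # text (varchar)
        amount = rng.randint(0, 10_000)                            # number
        offset = timedelta(seconds=rng.randint(0, 365 * 86_400))
        created_at = (start + offset).isoformat(sep=" ")           # timestamp
        rows.append((i, name, amount, created_at))
    return rows


def load(conn: sqlite3.Connection, rows: List[Row]) -> None:
    conn.execute(
        "CREATE TABLE sample_data (id INTEGER PRIMARY KEY, name VARCHAR(32), "
        "amount INTEGER, created_at TIMESTAMP)"
    )
    conn.executemany("INSERT INTO sample_data VALUES (?, ?, ?, ?)", rows)
    conn.commit()


rows = generate_rows(100_000)
# In-memory SQLite databases stand in for the MariaDB and Postgres containers.
databases = {name: sqlite3.connect(":memory:") for name in ("mariadb", "postgres")}
for conn in databases.values():
    load(conn, rows)
```

Generating the rows once and loading the identical list into both targets keeps the comparison fair. With real drivers, the placeholder style depends on the driver (psycopg, for example, uses `%s`).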

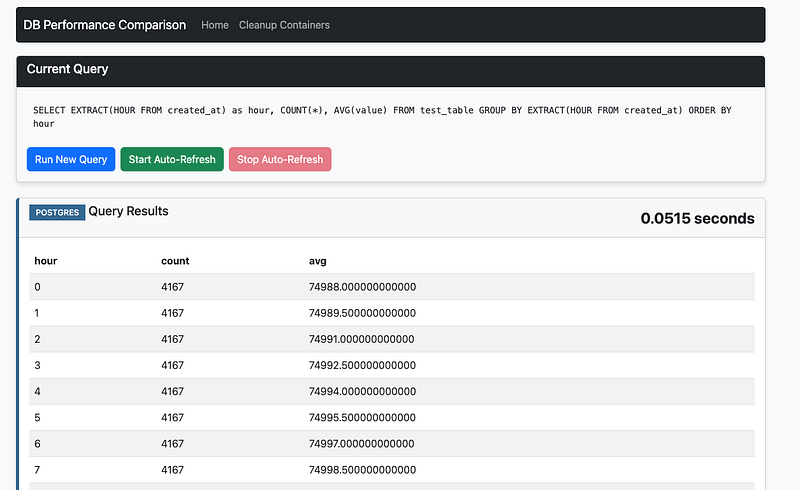

Now let’s make a basic app that simulates making our queries. Using Python we will make an app that executes queries from a list and displays the result.

Below you can see the basic architecture of our design. We will run MariaDB and Postgres on Docker and the application code will connect to both, using the Harness FME feature flag to determine which one to use for the request.

The sample queries we used can be seen below. We are using 5 queries with a variety of SQL keywords. We include joins, limits, ordering, functions, and grouping.

We use the Harness FME SDK to make the assignment decision for each user ID. The SDK's get_treatment method determines whether the incoming user gets the Postgres or the MariaDB treatment, based on the rules we defined in the Harness FME console above.

Afterwards, the application runs the query and tracks the query_execution event using the SDK’s track method.

See below for some key parts of our Python based app.

This code will initialize our Split (Harness FME) client for the SDK.

We generate a sample user ID, which is simply an integer from 1 to 10,000.

Next, we need to determine whether our user will use Postgres or MariaDB. We also program defensively here, making sure there is a default if the treatment is neither postgres nor mariadb.
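Here is a minimal sketch of those two steps. `sample_user_id`, `choose_database`, and the choice of `mariadb` as the fallback are hypothetical. The fallback matters in practice because an FME SDK returns the `control` treatment when it cannot evaluate a flag.

```python
import random
from typing import Optional

VALID_DATABASES = {"postgres", "mariadb"}
DEFAULT_DATABASE = "mariadb"  # hypothetical fallback; use whichever database is your baseline


def sample_user_id(rng: random.Random) -> str:
    """A sample user ID: an integer from 1 to 10,000, used as a string key."""
    return str(rng.randint(1, 10_000))


def choose_database(treatment: Optional[str], default: str = DEFAULT_DATABASE) -> str:
    """Map a treatment to a database, falling back for anything unexpected."""
    normalized = (treatment or "").strip().lower()
    return normalized if normalized in VALID_DATABASES else default


rng = random.Random(1)
user_id = sample_user_id(rng)
print(choose_database("postgres"), choose_database("control"), choose_database(None))
# postgres mariadb mariadb
```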

Now let’s run the query and track the query_execution event. From the app, you can select the query you want to run; if you don’t, it runs one of the five sample queries at random.

The db_manager class maintains the connections to the databases and tracks the query execution time. Here it uses Python’s time module to measure how long the query took, and the object that db_manager returns includes this value.
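Here is a hedged sketch of what such a class might look like. `DBManager`, `QueryResult`, and `run_query` are hypothetical names, not the code from the article's repository. In-memory SQLite connections stand in for MariaDB and Postgres.

```python
import sqlite3
import time
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class QueryResult:
    database: str
    rows: List[Tuple]
    execution_time_ms: float


class DBManager:
    """Hypothetical sketch: holds one connection per database and times each query."""

    def __init__(self, connections: Dict[str, sqlite3.Connection]) -> None:
        self.connections = connections

    def run_query(self, database: str, sql: str) -> QueryResult:
        conn = self.connections[database]
        start = time.perf_counter()
        rows = conn.execute(sql).fetchall()  # fetch everything so timing covers the full result
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        return QueryResult(database, rows, elapsed_ms)


# In-memory SQLite stands in for the real MariaDB and Postgres connections.
conns = {name: sqlite3.connect(":memory:") for name in ("mariadb", "postgres")}
for conn in conns.values():
    conn.execute("CREATE TABLE t (x INTEGER)")
    conn.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(100)])
manager = DBManager(conns)
result = manager.run_query("postgres", "SELECT COUNT(*) FROM t")
print(result.rows, result.execution_time_ms >= 0)  # [(100,)] True
```

The sketch uses `time.perf_counter` because it is a monotonic, high-resolution clock intended for measuring intervals.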

Tracking the event lets us see which database was faster for our users. The signature of the Harness FME SDK’s track method includes both a value and properties. In this case, we supply the query execution time as the value and the query that ran as a property of the event, which can be used later for filtering and, as we will see, dimensional analysis.
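Here is a minimal sketch of that tracking call. The argument order follows the FME Python SDK's `track(key, traffic_type, event_type, value, properties)` as we understand it; check the SDK reference before relying on it. `RecordingClient` is a hypothetical stand-in so the sketch runs without the SDK. The property name `query` is an assumption.

```python
from typing import Any, Dict, List, Optional


class RecordingClient:
    """Hypothetical stand-in that mirrors the argument order of the SDK's track call."""

    def __init__(self) -> None:
        self.events: List[Dict[str, Any]] = []

    def track(self, key: str, traffic_type: str, event_type: str,
              value: Optional[float] = None,
              properties: Optional[Dict[str, Any]] = None) -> bool:
        self.events.append({"key": key, "traffic_type": traffic_type,
                            "event_type": event_type, "value": value,
                            "properties": properties or {}})
        return True


def track_query(client: RecordingClient, user_id: str, sql: str, execution_time_ms: float) -> bool:
    # The value carries the execution time; the query text goes into properties
    # so it can be used for filtering and dimensional analysis.
    return client.track(user_id, "user", "query_execution", execution_time_ms, {"query": sql})


client = RecordingClient()
track_query(client, "4821", "SELECT COUNT(*) FROM sample_data", 12.5)
print(client.events[0]["value"], client.events[0]["properties"])
```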

You can see a screenshot of the app below. A simple Bootstrap-themed frontend handles the display.

app screenshot

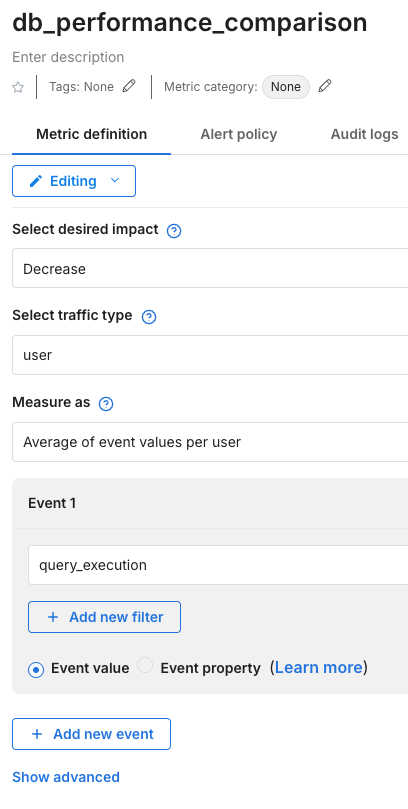

The last step here is that we need to build a metric to do the comparison.

Here we built a metric called db_performance_comparison. In this metric, we set the desired impact: we want the query time to decrease. The traffic type is user.

Metric configuration

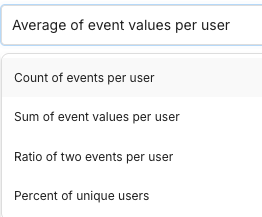

One of the most important choices is what to select for the Measure as option. There are several options, as shown below:

Measure as options

We want to compare across users and are interested in faster average query execution times, so we select Average of event values per user. Count, sum, ratio, and percent don’t make sense here, because they describe how many events occurred or in what proportion, not how fast each query ran.

Lastly, we are measuring the query_execution event.
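Harness FME computes this metric and its statistics itself. The sketch below only illustrates what "average of event values per user" means, assuming each user's event values are averaged first and those per-user averages are then averaged within each treatment. The event tuples are made-up sample data.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

Event = Tuple[str, str, float]  # (user_id, treatment, query_execution value in ms)


def average_per_user(events: List[Event]) -> Dict[str, float]:
    """Average each user's event values, then average those per-user means by treatment."""
    per_user: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for user, treatment, value in events:
        per_user[(user, treatment)].append(value)
    by_treatment: Dict[str, List[float]] = defaultdict(list)
    for (_, treatment), values in per_user.items():
        by_treatment[treatment].append(mean(values))
    return {treatment: mean(means) for treatment, means in by_treatment.items()}


def relative_change(treatment_mean: float, baseline_mean: float) -> float:
    return (treatment_mean - baseline_mean) / baseline_mean


events = [
    ("u1", "postgres", 10.0), ("u1", "postgres", 20.0), ("u2", "postgres", 30.0),
    ("u3", "mariadb", 40.0), ("u3", "mariadb", 60.0), ("u4", "mariadb", 100.0),
]
means = average_per_user(events)
print(means)  # {'postgres': 22.5, 'mariadb': 75.0}
print(relative_change(means["postgres"], means["mariadb"]))
```

Averaging per user first gives each user equal weight, so a user who ran many queries does not dominate the comparison.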

We added this metric as a key metric for our db_performance_comparison feature flag.

Selection of our metric as a key metric

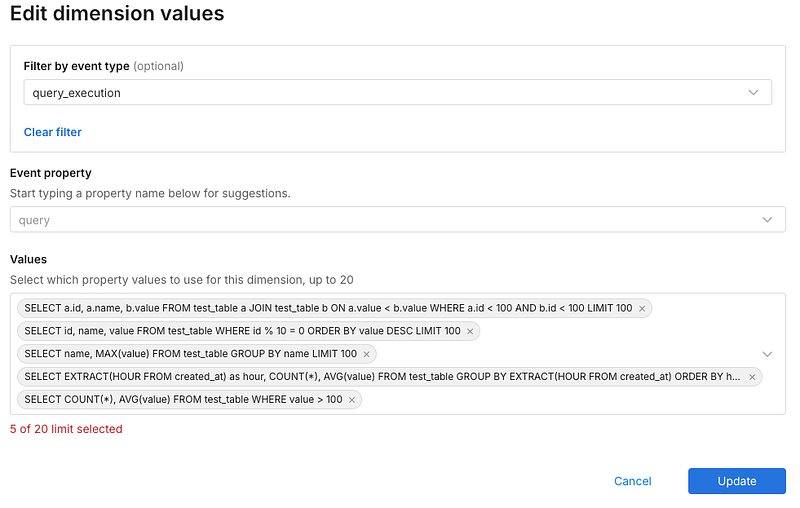

One additional thing we want to do is set up dimensional analysis, as mentioned above. Dimensional analysis lets us drill down into individual queries to see which ones were more or less performant on each database. We can have up to 20 values here. If we’ve already been sending events, the values can simply be selected, since they are tracked internally; otherwise, we enter our queries here.

selection of values for dimensional analysis
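To show the idea behind slicing a metric by an event property, here is a simplified Python sketch. It pools events per query instead of averaging per user, so it is not FME's dimensional analysis or its statistics. The query labels and timings are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

Event = Tuple[str, str, float]  # (treatment, query property, execution time in ms)


def per_query_change(events: List[Event], treatment: str, baseline: str) -> Dict[str, float]:
    """Relative change in mean execution time for each query property.

    Negative values mean the treatment was faster than the baseline.
    """
    values: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for t, query, value in events:
        values[(t, query)].append(value)
    changes: Dict[str, float] = {}
    for query in sorted({q for _, q, _ in events}):
        # Only compare queries that ran under both treatments.
        if (treatment, query) in values and (baseline, query) in values:
            new = mean(values[(treatment, query)])
            old = mean(values[(baseline, query)])
            changes[query] = (new - old) / old
    return changes


sample = [
    ("postgres", "group_query", 5.0), ("postgres", "group_query", 5.0),
    ("mariadb", "group_query", 50.0),
    ("postgres", "join_query", 30.0), ("mariadb", "join_query", 20.0),
]
print(per_query_change(sample, "postgres", "mariadb"))
```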

Now that we have our dimensions, our metric, and our application set to use our feature flag, we can now send traffic to the application.

For this example, I’ve created a load testing script that uses Selenium to load up my application. This will send enough traffic so that I’ll be able to get significance on my db_performance_comparison metric.

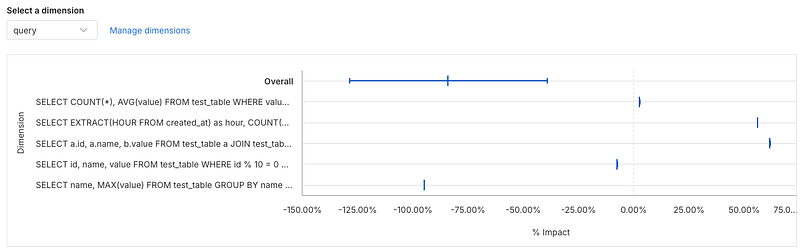

The results were interesting: on the metrics impact screen, we can see that Postgres resulted in an 84% drop in query time.

Even more, if we drill down to the dimensional analysis for the metric, we can see which queries were faster and which were actually slower using Postgres.

So some queries were faster and some were slower, but the faster queries were MUCH faster. This allows you to pinpoint the performance you would get by changing database engines.

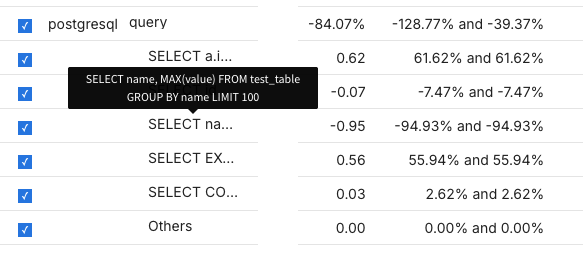

You can also see the statistics in the table below. The query with the most significant speedup appears to be the one that used grouping and limits.

However, the query that used a join was much slower in Postgres. It is the query that starts with SELECT a.i... (because it is a self-join, the table alias is a). The query that uses EXTRACT (an SQL date function) is also nearly 56% slower.

Conclusion

In summary, running experiments on backend infrastructure like databases with Harness FME can yield significant insights and performance improvements. As demonstrated, testing MariaDB against PostgreSQL revealed an 84% drop in query time with Postgres. Furthermore, dimensional analysis let us identify the specific queries that benefited most (those involving grouping and limits) as well as the queries that were slower. This level of detailed performance data enables you to make informed decisions about your database engine and infrastructure, leading to better optimization, efficiency, and, ultimately, user experience. Harness FME provides a robust platform for conducting such experiments and extracting actionable insights. For example, if an application relied heavily on join-based queries or SQL date functions like EXTRACT, the experiment might show that MariaDB is faster than Postgres, in which case a migration would not make sense.

The full code for our experiment lives here: https://github.com/Split-Community/DB-Speed-Test

AI Agents vs Real-World Web Tasks: Harness Leads the Way in Enterprise Test Automation


Written by Deba Chatterjee, Gurashish Brar, Shubham Agarwal, and Surya Vemuri

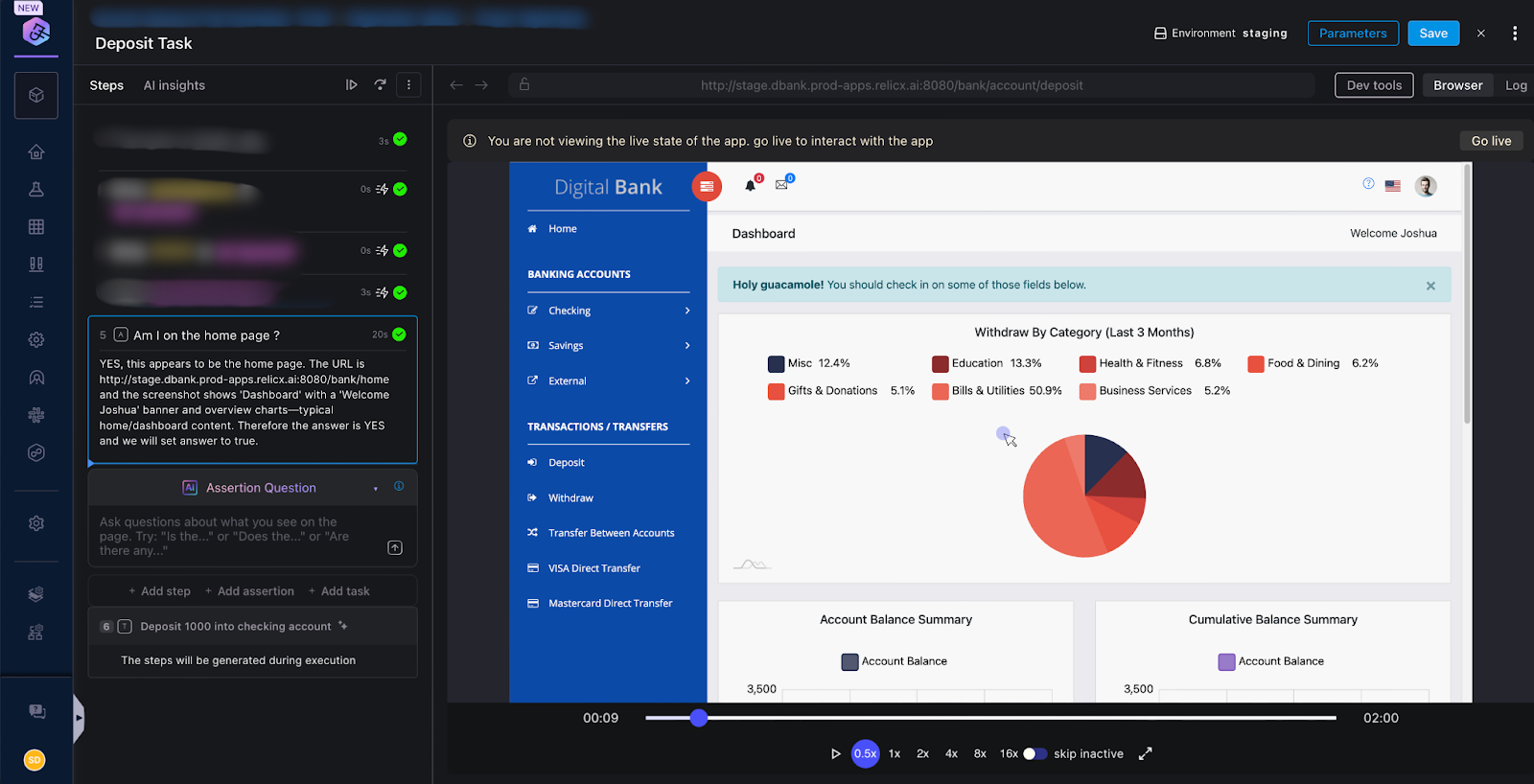

Can an AI agent test your enterprise banking workflow without human help? We found out. AI-powered test automation will be the de facto method for engineering teams to validate applications. Following our previous work exploring AI operations on the web and test automation capabilities, we expand our evaluation to include agents from the leading model providers to execute web tasks. In this latest benchmark, we evaluate how well top AI agents, including OpenAI Operator and Anthropic Computer Use, perform real-world enterprise scenarios. From banking applications to audit trail log navigation, we tested 22 tasks inspired by our customers and users.

Building on Previous Research

Our journey began with introducing a framework to benchmark AI-powered web automation solutions. We followed up with a direct comparison between our AI Test Automation and browser-use. This latest evaluation extends our research by incorporating additional enterprise-focused tasks inspired by the demands of today’s B2B applications.

The B2B Challenge

Business applications present unique challenges for agents performing tasks through web browser interactions. They feature complex workflows, specialized interfaces, and strict security requirements. Testing these applications demands precision, adaptability, and repeatability — the ability to navigate intricate UIs while maintaining consistent results across test runs.

To properly evaluate each agent, we expanded our original test suite with three additional tasks:

- A banking application workflow requiring precise transaction handling, specifically depositing funds into a checking account

- Navigation of a business application to view audit logs filtered by date

- Interacting with a messaging application and validating the conversation in the history

These additions brought the total test suite to 22 distinct tasks varying in complexity and domain specificity.

Comprehensive Evaluation Results

User tasks and Agent results

The four solutions performed very differently, especially on complex tasks. Our AI Test Automation led with an 86% success rate, followed by browser-use at 64%, while OpenAI Operator and Anthropic Computer Use achieved 45% and 41% success rates, respectively.

Performance diverges most on tasks that interact with complex artifacts such as calendars, information-rich tables, and chat interfaces.
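The snippet below is a quick consistency check on the reported success rates. The article does not state its scoring scheme, so this assumes each of the 22 tasks is scored once as pass or fail for each solution. Under that assumption, each reported percentage corresponds to a whole number of passed tasks. These pass counts are inferred from the rounded percentages and do not come from the raw results.

```python
TOTAL_TASKS = 22

# Pass counts inferred from the published, rounded success rates (assumption).
inferred_passes = {
    "Harness AI Test Automation": 19,
    "browser-use": 14,
    "OpenAI Operator": 10,
    "Anthropic Computer Use": 9,
}


def success_rate(passed: int, total: int = TOTAL_TASKS) -> int:
    """Return the success rate as a whole-number percentage (round half up)."""
    return int(passed * 100 / total + 0.5)


for agent, passed in inferred_passes.items():
    print(f"{agent}: {passed}/{TOTAL_TASKS} = {success_rate(passed)}%")
```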

Additional Web Automation Tasks

As in our previous research, each agent executed its tasks in popular browsers, namely Firefox and Chrome. Although OpenAI Operator required some user interaction, no manual help or intervention was provided beyond the evaluation task itself.

Banking Application Navigation

The first additional task involves banking. The instructions include logging into a demo banking application, depositing $350 into a checking account, and verifying the transaction. Each solution must navigate the site without prior knowledge of the interface.

Our AI Test Automation completed the workflow, correctly selecting the family checking account and verifying that the $350 deposit appeared in the transaction history. Browser-use struggled with account selection and failed to complete the deposit action. Both Anthropic Computer Use and OpenAI Operator encountered login issues. Neither solution progressed past the initial authentication step.

Audit Trail Navigation

Finding audit trail records in a table full of data is a common enterprise requirement. We challenged each solution to navigate Harness’s Audit Trail interface to locate two-day-old entries. The AI Test Automation solution navigated to the Audit Logs and paged through the table to identify the two-day-old entries. Browser-use reached the audit log UI but failed to paginate to the requested records. Anthropic Computer Use did not scroll far enough to find the Audit Trail tile; its default browser resolution is a limiting factor. OpenAI Operator found the two-day-old audit logs.

This task demonstrates that handling information-rich tables remains challenging for browser automation tools.

Messaging Application Interaction

The third additional task involves a messaging application. The intent is to initiate a conversation with a bot and verify the conversation in a history table. This task incorporates browser interaction and verification logic.

The AI Test Automation solution completed the chat interaction and correctly verified that the conversation appeared in the history. Browser-use also completed this task. Anthropic Computer Use, on the other hand, was unable to start a conversation. OpenAI Operator initiated the conversation but never sent a message, so no new conversation appeared in the history.

This task reveals varying levels of sophistication in executing multi-step workflows with validation.

What Makes Solutions Perform Differently?

Several factors contribute to the performance differences observed:

Specialized Architecture: Harness AI Test Automation leverages multiple agents designed for software testing use cases. Each agent has varying levels of responsibility, from planning to handling special components like calendars and data-intensive tables.

Enterprise Focus: Harness AI Test Automation is designed with enterprise use cases in mind, and those use cases bring their own requirements. A sample of these requirements includes:

- security

- repeatability for CI/CD integration

- precision

- ability to interact with an API

- uncommon interfaces that are not generally accessible via web crawling, hence not available for training

Task Complexity: Browser-use, Anthropic Computer Use, and OpenAI Operator complete many tasks, but as complexity increases, the performance gap widens significantly.

Why Harness Outperforms

- Custom agents for calendars, rich tables

- API-driven validation where UI alone is insufficient

- Secure handling of login and secrets

Conclusion

Our evaluation demonstrates that while all four solutions handle basic web tasks, the performance diverges when faced with more complex tasks and web UI elements. In such a fast-moving environment, we will continue to evolve our solution to execute more use cases. We will stay committed to tracking performance across emerging solutions and sharing insights with the developer community.

Promising enhancements to the product include self-diagnosis and tighter CI/CD integrations. Intent-based software testing is easier to write, more adaptable to updates, and easier to maintain than classic solutions. We will keep addressing the unique challenges of enterprise testing, empowering development teams to deliver high-quality software confidently. After all, we’re obsessed with empowering developers to do what they love: ship great software.

AI-Powered Resilience Testing with Harness MCP Server and Windsurf

The complexity of modern distributed systems demands proactive resilience testing, yet traditional chaos engineering often presents a steep learning curve that can slow adoption across teams. What if you could perform chaos experiments using simple, natural language conversations directly within your development environment?

The integration of Harness Chaos Engineering with Windsurf through the Model Context Protocol (MCP) makes this vision a reality. This powerful combination enables DevOps, QA, and SRE teams to discover, execute, and analyze chaos experiments without deep vendor-specific knowledge, accelerating your organization's journey toward building a resilience testing culture.

Simplifying Chaos Engineering

Chaos engineering has proven its value in identifying system weaknesses before they impact production. However, traditional implementations face common challenges:

Technical Complexity: Setting up experiments requires deep understanding of fault injection mechanisms, blast radius calculations, and monitoring configurations.

Learning Curve: Teams need extensive training on vendor-specific tools and chaos engineering principles before becoming productive.

Context Switching: Engineers constantly move between documentation, experiment configuration interfaces, and result analysis tools.

Skill Scaling: Organizations struggle to democratize chaos engineering beyond specialized reliability teams.

The Harness MCP integration changes this landscape by bringing chaos engineering capabilities directly into your AI-powered development workflow.

Understanding Harness Chaos Engineering MCP Tools

The Harness Chaos Engineering MCP server provides six specialized tools that cover the complete chaos engineering lifecycle:

Core Experiment Tools

chaos_experiments_list: Discover all available chaos experiments in your project. Perfect for understanding your resilience testing capabilities and finding experiments relevant to specific services.

chaos_experiment_describe: Get details about any experiment, including its purpose, target infrastructure, expected impact, and success criteria.

chaos_experiment_run: Execute chaos experiments with intelligent parameter detection and automatic configuration, removing the complexity of manual setup.

chaos_experiment_run_result: Retrieve detailed results including resilience scores, performance impact analysis, and actionable recommendations for improvement.

Advanced Monitoring Tools

chaos_probes_list: Discover all available monitoring probes that validate system health during experiments, giving you visibility into your monitoring capabilities.

chaos_probe_describe: Get detailed information about specific probes, including their validation criteria, monitoring setup, and configuration parameters.

Setting Up Harness MCP Server with Windsurf

Prerequisites

Before beginning the setup, ensure you have:

- Windsurf IDE installed

- Harness Platform access with Chaos Engineering enabled

- Harness API key with appropriate permissions

- Go 1.23+ (to build from source)

Step 1: Build the Harness MCP Server Binary

There are multiple installation options, so choose the one that best fits your environment. This guide covers building from source.

Building from Source

For advanced users who prefer building from source:

- Clone the Repository:

```shell
git clone https://github.com/harness/mcp-server
cd mcp-server
```

- Build the Binary:

```shell
go build -o cmd/harness-mcp-server/harness-mcp-server ./cmd/harness-mcp-server
```

Step 2: Configure the Harness MCP Server in Windsurf

- Navigate to your Windsurf Settings, click Cascade, then click Manage MCPs.

- Click View raw config to open your mcp_config.json file.

- Add the following configuration to the file:

```json
{
  "mcpServers": {
    "harness": {
      "command": "/path/to/harness-mcp-server",
      "args": ["stdio"],
      "env": {
        "HARNESS_API_KEY": "your-api-key-here",
        "HARNESS_DEFAULT_ORG_ID": "your-org-id",
        "HARNESS_DEFAULT_PROJECT_ID": "your-project-id",
        "HARNESS_BASE_URL": "https://app.harness.io"
      }
    }
  }
}
```

Step 3: Add the Path of your Binary and Harness Credentials

Gather the following information, substitute it for the placeholders, and save the mcp_config.json file.

- Command: Path to your built harness-mcp-server binary

- API Key: Generate from your Harness account settings (Profile > My API Keys)

- Organization ID: Found in your Harness URL or organization settings

- Project ID: The project containing your chaos experiments

- Base URL: Your Harness instance URL (typically https://app.harness.io)
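Before restarting Windsurf, it can help to check that no placeholder values remain. The sketch below does that check. It assumes the file follows the structure shown in Step 2, and it looks for the placeholder strings from that snippet. The script and its function names are hypothetical helpers, not part of the Harness tooling.

```python
import json
import os

# Placeholder values copied from the example configuration in Step 2.
PLACEHOLDERS = {
    "/path/to/harness-mcp-server",
    "your-api-key-here",
    "your-org-id",
    "your-project-id",
}
REQUIRED_ENV = (
    "HARNESS_API_KEY",
    "HARNESS_DEFAULT_ORG_ID",
    "HARNESS_DEFAULT_PROJECT_ID",
    "HARNESS_BASE_URL",
)


def check_harness_entry(config: dict) -> list[str]:
    """Return a list of problems found in the "harness" MCP server entry."""
    server = config.get("mcpServers", {}).get("harness")
    if server is None:
        return ["missing mcpServers.harness entry"]

    problems = []
    command = server.get("command", "")
    if command in PLACEHOLDERS:
        problems.append("command still points at the placeholder path")
    elif not os.path.isfile(command):
        problems.append(f"binary not found: {command}")

    env = server.get("env", {})
    for key in REQUIRED_ENV:
        value = env.get(key)
        if not value:
            problems.append(f"{key} is not set")
        elif value in PLACEHOLDERS:
            problems.append(f"{key} still has its placeholder value")
    return problems


def check_config_file(path: str) -> list[str]:
    """Load mcp_config.json from disk and check its harness entry."""
    with open(path, encoding="utf-8") as fh:
        return check_harness_entry(json.load(fh))
```

Calling `check_config_file` with the path to your own mcp_config.json should return an empty list once every placeholder has been replaced and the binary exists at the configured path.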

Step 4: Verify Installation

- Restart Windsurf: Close and reopen Windsurf to load the new configuration

- Go back to Manage MCPs; you should see a list of available tools.

- Test Connection: Try a simple prompt like:

"List all chaos experiments available in my project"

If successful, you should see chaos-related tools with the "chaos" prefix and receive a response with your experiment list.
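Windsurf talks to the server over stdio using the Model Context Protocol. MCP exchanges JSON-RPC 2.0 messages, and the stdio transport sends one JSON object per line. If the tool list does not appear, one rough way to test the binary outside the IDE is to perform the handshake yourself. The sketch below is a debugging aid, not an official Harness client. It assumes the following:

- the binary path and environment variables from Step 3;
- the MCP protocol version string `2024-11-05`;
- a server that writes only protocol messages to stdout and answers each request in order.

It also ignores pagination of the tool list.

```python
import json
import os
import subprocess
from typing import Optional


def rpc(msg_id: int, method: str, params: Optional[dict] = None) -> str:
    """Encode one JSON-RPC 2.0 request as a newline-terminated line."""
    msg = {"jsonrpc": "2.0", "id": msg_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg) + "\n"


def chaos_tools(tools: list[dict]) -> list[str]:
    """Keep only tool names that carry the chaos_ prefix."""
    return sorted(t["name"] for t in tools if t.get("name", "").startswith("chaos_"))


def list_chaos_tools(binary: str, env: dict[str, str]) -> list[str]:
    """Start the server in stdio mode, run the MCP handshake, and list chaos tools."""
    proc = subprocess.Popen(
        [binary, "stdio"],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
        env={**os.environ, **env},
    )
    try:
        # 1. Send the initialize request and read the server's reply.
        proc.stdin.write(rpc(1, "initialize", {
            "protocolVersion": "2024-11-05",
            "capabilities": {},
            "clientInfo": {"name": "mcp-smoke-test", "version": "0.1"},
        }))
        proc.stdin.flush()
        proc.stdout.readline()
        # 2. Signal that initialization is complete (a notification carries no id).
        proc.stdin.write(json.dumps({"jsonrpc": "2.0", "method": "notifications/initialized"}) + "\n")
        # 3. Request the tool catalog and keep the chaos tools.
        proc.stdin.write(rpc(2, "tools/list"))
        proc.stdin.flush()
        reply = json.loads(proc.stdout.readline())
        return chaos_tools(reply["result"]["tools"])
    finally:
        proc.terminate()
        proc.wait(timeout=5)
```

If `list_chaos_tools` returns names such as `chaos_experiments_list`, the binary and credentials are working, and any remaining problem lies in the Windsurf configuration.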

AI-Powered Chaos Engineering in Action

With your setup complete, let's explore how to leverage these tools effectively through natural language interactions.

Discovery and Learning Phase

Service-Specific Exploration:

"I am interested in catalog service resilience. Can you tell me what chaos experiments are available?"

Expected Output: Filtered list of experiments targeting your catalog service, categorized by fault type (network, compute, storage).

Deep-Dive Analysis:

"Describe briefly what the pod deletion experiment does and what services it targets"

Expected Output: Technical details about the experiment, including fault injection mechanism, expected impact, target selection criteria, and success metrics.

Understanding Resilience Metrics:

"Describe the resilience score calculation details for the network latency experiment"

Expected Output: Detailed explanation of scoring methodology, performance thresholds, and interpretation guidelines.

Experiment Execution Phase

Targeted Experiment Execution:

"Can you run the pod deletion experiment on my payment service?"

Expected Output: Automatic parameter detection, experiment configuration, execution initiation, and real-time monitoring setup.

Structured Overview Creation:

"Can you list the network chaos experiments and the corresponding services targeted? Tabulate if possible."

Expected Output: Well-organized table showing experiment names, target services, fault types, and current status.

Monitoring Probe Discovery:

"Show me all available chaos probes and describe how they work"

Expected Output: Complete catalog of available probes with their monitoring capabilities, validation criteria, and configuration details.

Analysis and Reporting Phase

Result Interpretation:

"Summarise the result of the database connection timeout experiment"

Expected Output: Comprehensive analysis including performance impact, resilience score, business implications, and specific recommendations for improvement.

Probe Configuration Details:

"Describe the HTTP probe used in the catalog service experiment"

Expected Output: Detailed probe configuration, validation criteria, success/failure thresholds, and monitoring setup instructions.

Comprehensive Resilience Assessment:

"Scan the experiments that were run against the payment service in the last week and summarise the resilience posture for me"

Expected Output: Executive-level resilience report with trend analysis, critical findings, and actionable improvement recommendations.

The Road Ahead

The convergence of AI and chaos engineering represents more than a technological advancement; it's a fundamental shift toward more accessible and intelligent resilience testing. By embracing this approach with Harness and Windsurf, you're not just testing your systems' resilience; you're building the foundation for reliable, battle-tested applications that can withstand the unexpected challenges of production environments.

Start your AI-powered chaos engineering journey today and discover how natural language can transform the way your organization approaches system reliability.

Resilience Testing using Harness

In today's fast-paced digital landscape, ensuring the reliability and resilience of your systems is more critical than ever. Downtime can lead to significant business losses, eroded customer trust, and operational headaches. That's where Harness Chaos Engineering comes in—a powerful module within the Harness platform designed to help teams proactively test and strengthen their infrastructure. In this blog post, we'll dive into what Harness Chaos Engineering is, how it works, its key features, and how you can leverage it to build more robust systems.

What is Harness Chaos Engineering?

Harness Chaos Engineering is a dedicated module on the Harness platform that enables efficient resilience testing. It's trusted by a wide range of teams, including developers, QA engineers, performance testing specialists, and Site Reliability Engineers (SREs). By simulating real-world failures in a controlled environment, it helps uncover hidden weaknesses in your systems and identifies potential risks that could impact your business.

At its core, resilience testing involves running chaos experiments. These experiments inject faults deliberately and measure how well your system holds up. Harness uses resilience probes to verify the expected state of the system during these tests, culminating in a resilience score ranging from 0 to 100. This score quantifies how effectively your system withstands injected failures.
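This article does not give Harness's exact scoring formula. As an illustration only, the sketch below assumes a weighted average similar to the one described in the LitmusChaos documentation. Each fault carries a weight, and its contribution is the percentage of its probes that passed. The fault names, weights, and probe counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FaultResult:
    name: str
    weight: int  # relative importance assigned to the fault (assumption)
    probes_passed: int
    probes_total: int

    def probe_success_pct(self) -> float:
        """Percentage of this fault's probes that passed (0-100).

        A fault with no probes is treated as unvalidated and scores 0.
        """
        if self.probes_total == 0:
            return 0.0
        return 100.0 * self.probes_passed / self.probes_total


def resilience_score(results: list[FaultResult]) -> float:
    """Weighted average of probe success percentages, on a 0-100 scale."""
    total_weight = sum(r.weight for r in results)
    if total_weight == 0:
        return 0.0
    weighted = sum(r.weight * r.probe_success_pct() for r in results)
    return weighted / total_weight


# Hypothetical experiment with two faults (illustrative values only).
results = [
    FaultResult("pod-delete", weight=10, probes_passed=2, probes_total=2),
    FaultResult("network-latency", weight=5, probes_passed=1, probes_total=2),
]
print(round(resilience_score(results), 1))  # 83.3
```

Weighting lets a failure in a critical fault pull the score down more than a failure in a minor one.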

But Harness goes beyond resilience scoring: it also provides resilience test coverage metrics. Together, these form what's known as your system's resilience posture. This actionable insight empowers businesses to prioritize improvements and enhance overall service reliability.

Comprehensive Capabilities for End-to-End Resilience Testing

Harness Chaos Engineering is equipped with everything you need for thorough, end-to-end resilience testing. Here's a breakdown of its standout features:

- Extensive Chaos Fault Library: Access over 200 out-of-the-box chaos faults through the enterprise Chaos Hub. These cover a broad spectrum of environments, including major cloud platforms, Linux and Windows systems, Kubernetes, Pivotal Cloud Foundry (PCF), and application runtimes like JVM.

- Automated Resilience Probes: Measure resilience scores effortlessly through integrations with popular monitoring tools and services. Connect seamlessly with Kubernetes, Prometheus, Dynatrace, Datadog, New Relic, Splunk, and various cloud provider monitoring solutions to automate assessments.

- ChaosGuard for Governance: Maintain control over chaos experiments with robust governance features. Define policies on who can run specific types of experiments, on which systems, and during designated time windows—ensuring safe and compliant testing.

- GameDay Portal: SREs can easily orchestrate GameDays in production environments using the built-in portal. This facilitates collaborative, real-time exercises to prepare teams for actual incidents.

- AI Reliability Agent: Harness incorporates AI to supercharge your chaos engineering efforts. Get intelligent recommendations for creating new experiments, optimizing existing ones, and troubleshooting probe failures.
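To make the governance idea concrete, the sketch below shows a hypothetical policy check in the spirit of ChaosGuard. Each rule names who may run which fault types, against which environments, and within which daily time window. The rule structure and field names are invented for illustration and do not reflect ChaosGuard's actual configuration. For simplicity, windows that cross midnight are not handled.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class Rule:
    # Hypothetical rule shape; not the ChaosGuard schema.
    users: frozenset[str]
    fault_types: frozenset[str]
    environments: frozenset[str]
    window_start: time
    window_end: time


def is_allowed(rules: list[Rule], user: str, fault_type: str,
               environment: str, when: datetime) -> bool:
    """Allow a run only if some rule matches the user, fault, target, and time."""
    now = when.time()
    for rule in rules:
        if (
            user in rule.users
            and fault_type in rule.fault_types
            and environment in rule.environments
            and rule.window_start <= now <= rule.window_end
        ):
            return True
    return False  # deny by default when no rule matches


rules = [
    Rule(frozenset({"sre-alice"}), frozenset({"pod-delete", "network-latency"}),
         frozenset({"staging"}), time(9, 0), time(17, 0)),
]
print(is_allowed(rules, "sre-alice", "pod-delete", "staging", datetime(2025, 1, 6, 10, 30)))  # True
print(is_allowed(rules, "sre-alice", "pod-delete", "production", datetime(2025, 1, 6, 10, 30)))  # False
```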

Once you've created your chaos experiments and organized them into custom Chaos Hubs, the possibilities are endless.

Real-World Use Cases for Harness Chaos Experiments

Harness Chaos Engineering isn't just theoretical—it's built for practical application across your workflows. Here are some key use cases:

- Integration with Deployment Pipelines: Embed chaos experiments directly into tools like Harness Continuous Delivery (CD), GitHub Actions, Jenkins, or GitLab. This ensures resilience is validated as part of your CI/CD process.

- Combining with Load Testing: Run chaos alongside performance tools such as LoadRunner, Gatling, Locust, or JMeter to simulate high-stress scenarios and measure true system behavior under pressure.

- GameDays and Production Testing: Use the GameDay portal to conduct structured exercises in live environments, fostering a culture of preparedness.

- Disaster Recovery (DR) Testing: Validate your DR strategies by injecting faults that mimic outages, ensuring your failover mechanisms work as intended.

These integrations make it simple to incorporate chaos engineering into your existing processes, turning potential vulnerabilities into opportunities for improvement.

Easy Onboarding and Scalability

Getting started with Harness Chaos Engineering is straightforward, and it's designed to scale with your needs. Key features that support seamless adoption and growth include:

- Centralized Chaos Execution Plane (Agentless Chaos): Manage experiments from a single, agentless control plane, simplifying operations across distributed environments.

- Templates and Terraform Support: Reuse proven experiment templates and automate infrastructure with Terraform for faster setup.

- Platform RBACs and Custom Chaos Hubs: Fine-tune access controls with Role-Based Access Control (RBAC) and create tailored Chaos Hubs to organize experiments by team or project.

Whether you're a small team just dipping your toes into chaos engineering or a large enterprise scaling across multiple clouds, Harness makes it efficient and manageable.

Deployment Options: SaaS and On-Premise

Harness Chaos Engineering is flexible in how you deploy it. The SaaS version offers a free plan that includes all core capabilities—even AI-driven features—to help you kickstart your resilience testing journey without upfront costs. For organizations preferring more control, an On-Premise option is available, ensuring compliance with internal security and data policies.

Conclusion: Build Resilient Systems with Harness

In an era where system failures can have cascading effects, Harness Chaos Engineering empowers you to test, measure, and improve resilience proactively. By discovering weaknesses early, you not only mitigate risks but also boost confidence in your infrastructure. Whether through automated probes, AI insights, or integrated workflows, Harness provides the tools to achieve a superior resilience posture.

Ready to get started? Explore the free SaaS plan today and transform how your teams approach reliability. For more details, visit the Harness platform or check out our documentation. Let's engineer chaos—for a more reliable tomorrow!

Learn How to Build a Chaos Lab for Real-World Resilience Testing

Harness launches MCP tools to enhance its AI powered Chaos Engineering Capabilities

Chaos Engineering is a practice for resilience testing: it produces measurable data about the resilience of services or uncovers their weaknesses. Either way, users gain actionable resilience data about their application services that they can use to check compliance and make proactive improvements. The practice has grown in recent years because of extensive digital modernization and the move to cloud-native systems. Successful adoption in an enterprise requires consistently upskilling developers in chaos experimentation and resilience management, which is a challenge in itself.

The growing availability of large language models (LLMs) and associated advances such as AI agents and MCP tools makes it possible to significantly reduce the skills required for efficient resilience testing. Users can run resilience tests successfully with very little knowledge of the vendor tools or the details of the chaos experiments. The MCP tools convert simple natural language prompts into the required product API calls and return the responses, which the LLM then interprets.

Harness has published its MCP server as open source here, and the documentation is available here. In this article, we are announcing the MCP tools for Chaos Engineering on Harness.

Introducing Chaos MCP tools:

The initial set of chaos tools helps end users discover, understand, and plan the orchestration of chaos experiments. The tools are:

- chaos_experiments_list: List all the chaos experiments for a specific project in the account.

- chaos_experiment_describe: Get details of a specific chaos experiment.

- chaos_experiment_run: Run a specific chaos experiment.

- chaos_experiment_run_result: Get the result of a specific chaos experiment run.

These MCP tools will help the user to start and make progress on resilience testing using simple natural language prompts.

Here are some prompts that users can use effectively with the above tools:

- I am interested in catalog service resilience. Can you tell me what chaos experiments are available?

- Describe briefly what a particular chaos experiment does.

- Describe the resilience score calculation details of a specific chaos experiment.

- Can you run a specific experiment for me?

- Can you list the network chaos experiments and the corresponding services targeted? Tabulate if possible.

- Summarise the result of a particular chaos experiment

- Scan the experiments that were run against a particular service in the last week and summarise the resilience posture for me.

An example report generated with Claude Desktop looks like the following:

(Screenshots: example resilience report in Claude Desktop)

How do I set up the Harness MCP Server?

The Harness MCP server can be set up in various ways, and installation instructions are available on the documentation site. The chaos tools are part of the Harness MCP server. Follow the instructions to set up harness-mcp-server in your AI editor or in a local AI desktop application such as Claude Desktop.

How do I get started with resilience testing using Harness MCP tools?

Once the MCP server is set up, use simple natural language prompts as follows:

- First, discover the available chaos experiment capabilities. You can even describe the resilience test scenario you have in mind and check whether your Harness project has suitable chaos experiments.

- Then, understand what each experiment does in detail.

- Then, run a chaos experiment of your choice and observe the resilience reports.

- Generate resilience summaries, brief reports, or detailed reports for a particular service or a set of services.

- Tabulate the results of resilience tests.

Video Tutorial:

In the video below, you can find details on how to configure the Harness MCP server in Claude Desktop and run resilience tests using simple natural language prompts.

Important Links:

New to Harness Chaos Engineering? Sign up here

Trying to find the documentation for Chaos Engineering? Go here: Chaos Engineering

Want to build the Harness MCP server? Go here: GitHub

Want to know how to set up Harness MCP servers with Harness API keys? Go here: Manage API keys


Harness AI Test Automation: End-to-End, AI-Powered Testing for Faster, Smarter DevOps

We’re excited to announce the General Availability (GA) of Harness AI Test Automation, the industry’s first truly AI-native, end-to-end test automation solution that's fully integrated across the entire CI/CD pipeline and built to meet the speed, scale, and resilience demanded by modern DevOps. With AI Test Automation, Harness is transforming the software delivery landscape by eliminating the bottlenecks of manual and brittle testing and empowering teams to deliver quality software faster than ever before.

This launch comes at a critical time. Organizations spend billions of dollars on software quality assurance each year, yet most still face the same core challenges of fragility, speed, and pace. Testing remains a significant obstacle in an otherwise automated DevOps toolchain, with around 70-80% of organizations still reliant on manual testing methods, thus slowing down delivery and introducing risks.

Harness AI Test Automation changes that paradigm, replacing outdated test frameworks with a seamless, AI-powered solution that delivers smarter, faster, and more resilient testing across the SDLC. With this offering, Harness has the industry’s first fully automated software delivery platform, where our customers can code, build, test, and deploy applications seamlessly.

What Makes Harness AI Test Automation Different?

Harness AI Test Automation has a lot of unique capabilities that make high-quality end-to-end testing effortless.

"Traditional testing methods struggled to keep up, it is too manual, fragile, and slow. So we’ve reimagined testing with AI. Intent-based testing brings greater intelligence and adaptability to automation, and it seamlessly integrates into your delivery pipeline." - Sushil Kumar, Head of Business, AI Test Automation

Some of the standout benefits of AI Test Automation are:

Create High-Quality Tests in Minutes: No Code Required

AI Test Automation streamlines the creation of high-quality tests:

- Live Test Authoring: Record tests automatically by simply interacting with your web app. No scripting or coding expertise needed.

- Intent-Based Testing: Author test cases and assertions using natural language prompts. Just type what you want to verify—like “Did the login succeed?”—and let AI handle the rest.

- AI Auto Assertions: Harness AI suggests and auto-generates assertions after each step, pre-verified for seamless functionality. This ensures robust coverage and saves hours of manual work.

- Visual Testing with AI: Validate complex UI elements, including dynamic and canvas-based components, using human-like visual testing. Use natural language to verify visual states, eliminating the need for fragile scripts.

Self-Healing, Adaptive Test Maintenance

AI Test Automation eliminates manual test maintenance while improving test reliability:

- AI-Generated Selectors: Tests adapt automatically to UI and workflow changes, dramatically reducing test flakiness and maintenance by up to 70%.

- Run Stable Tests Across Environments: Smart Selector technology and automatic URL translation ensure tests work everywhere, with no modifications required.

AI Test Automation adapts to the UI changes with its smart selectors

Intelligent, Scalable Test Execution

AI Test Automation boosts efficiency and streamlines testing workflows:

- Intelligent Retries: AI Test Automation distinguishes between transient issues and real bugs, reducing false positives and debugging time.

- Parallel Execution: Effortlessly scale to thousands of tests running in parallel, optimizing validation as your application grows.

- Data-Driven Testing: Parameterized tests dynamically handle data at runtime, enabling flexible and efficient workflows.

Seamless Integration and Enterprise-Grade Security

AI Test Automation enables resilient end-to-end automation:

- Unified with Harness CI/CD: AI Test Automation is natively integrated with Harness pipelines, enabling true end-to-end automation from build to deploy.

- SOC 2 Type 2 Compliance: Enterprise-ready security and compliance, so you can trust your test automation at scale.

- No-Code Simplicity + Advanced Flexibility: Add custom JavaScript or Puppeteer scripts for complex scenarios — get the best of both worlds.

Real Results: Customer Success Stories

At Harness, we use what we build. We’ve adopted AI Test Automation across our own products, achieving 10x faster test creation and enabling visual testing with AI – all fully integrated into our CI/CD pipelines. Since AI can be nondeterministic, and testing AI workflows using traditional test automation tools can be hard, we also use AI Test Automation to test our internal AI workflows.

“With AI Test Automation, I just literally wrote out and wireframed all the test cases, and in a matter of 15–20 minutes, I was able to knock out one test. Using the templating functionality, we were able to come up from a suite of 0 to 55 tests in the span of 2 and a half weeks.” - Rohan Gupta, Principal Product Manager, Harness

Our customers are seeing dramatic results, too. For example, using Harness AI Test Automation, Siemens Healthineers slashed QA bottlenecks and transformed test creation from days to minutes.

Wasimil, a hotel booking and management platform, has reduced its test maintenance time by 50%, allowing it to release twice as frequently as it did when it relied on Playwright.

"With AI Test Automation, we could ship features, not just bug fixes. We don't wanna spend 30 to 40% of our engineering resources on fixing bugs because we can't be proud to ship bug fixes to our customers. Right? And they expect features, not bug fixes."

- Tom, CTO, Wasimil

Watch AI Test Automation in action

The Future Is Now: Join the Testing Revolution

With AI Test Automation, Harness becomes the first platform to offer true, fully automated software delivery, from build → test → deploy, without manual gaps or toolchain silos.

- Create robust, intent-based tests 10x faster using natural language

- Slash maintenance by up to 70% with self-healing, AI-powered tests

- Accelerate release cycles and improve developer experience

- Achieve higher quality, lower costs, and reduced risk—at enterprise scale

Be part of the revolution: start your AI-powered testing journey with Harness today.

Ready to see AI Test Automation in action? Contact us to get started!

Split Embraces OpenFeature

Driving Feature Flag Standardization with OpenFeature

Split is excited to announce its participation in OpenFeature, an initiative led by Dynatrace and recently submitted to the Cloud Native Computing Foundation (CNCF) for consideration as a sandbox project.

As part of an effort to define a new open standard for feature flag management, this project brings together a consortium of industry leaders. Together, we aim to provide a vendor-neutral approach to integrating with feature flagging and management solutions. By defining a standard API and SDK for feature flagging, OpenFeature aims to reduce the friction teams commonly experience today, with the end goal of helping all development teams ramp up reliable release cycles at scale and, ultimately, move toward a progressive delivery model.

At Split, we believe this effort is a strong signal that feature flagging is truly going “mainstream” and will be the standard best practice across all industries in the near future.

What Is Feature Flagging?

Feature flagging is a simple yet powerful technique that can be used for a range of purposes to improve the entire software development lifecycle. It also goes by other names, such as “feature toggle” or “feature gate.” Whatever the name, the basic concept underlying feature flags is the same:

A feature flag is a mechanism that allows you to decouple a feature release from a deployment and choose between different code paths in your system at runtime.
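As a minimal illustration of that definition, the Python sketch below ships two code paths in the same deployment and lets a flag choose between them at runtime. The flag store `FLAGS` and all function names are hypothetical; a real application would read flag state from a feature management service rather than from a module-level dictionary.

```python
from typing import Dict

# Hypothetical in-memory flag store. A real system would fetch this
# configuration from a feature management service at runtime.
FLAGS: Dict[str, bool] = {"new_checkout": False}


def is_enabled(flag_name: str) -> bool:
    """Return the current state of a flag, treating unknown flags as off."""
    return FLAGS.get(flag_name, False)


def legacy_checkout(cart_total: float) -> str:
    return f"legacy checkout: {cart_total:.2f}"


def new_checkout(cart_total: float) -> str:
    return f"new checkout: {cart_total:.2f}"


def checkout(cart_total: float) -> str:
    # Both code paths are deployed together; the flag picks one at runtime.
    if is_enabled("new_checkout"):
        return new_checkout(cart_total)
    return legacy_checkout(cart_total)
```

Setting `FLAGS["new_checkout"] = True` switches every subsequent call to the new path without a redeploy, which is exactly the separation of release from deployment that the definition describes.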

Because feature flags enable software development and delivery teams to turn functionality on and off at runtime without deploying new code, feature management has become a mission-critical component for delivering cloud-native applications. In fact, feature management supports a range of practices rooted in achieving continuous delivery, and it is especially key for progressive delivery’s goal of limiting blast radius by learning early.

Think about all the use cases. Feature flags allow you to run controlled rollouts, automate kill switches, A/B test in production, implement entitlements, manage large-scale architectural migrations, and more. More fundamentally, feature flags enable trunk-based development, which eliminates the need to maintain multiple long-lived feature branches in your source code, simplifying and accelerating release cycles.
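Controlled rollouts and kill switches can be sketched with deterministic bucketing: hash each user into a stable bucket from 0 to 99, and turn the feature on for buckets below the rollout percentage. This is a generic technique, not Split's actual targeting algorithm; the SHA-256 hash, the `bucket` and `treatment` names, and the "on"/"off" treatment strings are assumptions made for illustration.

```python
import hashlib


def bucket(flag_name: str, user_id: str, buckets: int = 100) -> int:
    """Map a (flag, user) pair to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def treatment(flag_name: str, user_id: str, rollout_percent: int,
              killed: bool = False) -> str:
    # Kill switch: when the flag is killed, everyone gets the safe default.
    if killed:
        return "off"
    # Controlled rollout: users whose bucket is below the percentage get "on".
    return "on" if bucket(flag_name, user_id) < rollout_percent else "off"
```

Because the hash is stable, raising the rollout from 10% to 50% keeps every user who already had the feature and only adds new ones, while `killed=True` turns the feature off for everyone at once.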

Where Does Split Come in?

While feature flags alone are very powerful, organizations that use flagging at scale quickly learn that a proper, long-term feature management approach needs more: a management interface, controlled rollouts, automated scheduling, permissions and audit trails, integration with analytics systems, and more. For companies that want to start feature flagging at scale, and eventually move toward a true progressive delivery model, this is where companies like Split come into the mix.

Split offers full support for progressive delivery. We provide sophisticated targeting for controlled rollouts but also flag-aware monitoring to protect your KPIs for every release, as well as feature-level experimentation to optimize for impact. Additionally, we invite you to learn more about our enterprise-readiness, API-first approach, and leading integration ecosystem.

So, Why Is OpenFeature Needed?

Feature flag tools like Split each use their own proprietary SDKs, with frameworks, definitions, and data/event types unique to their platform. Vendors across the feature management landscape differ in how they define, document, and integrate feature flags with third-party solutions, and these differences can cause problems.

For one, every vendor ends up maintaining its own library of feature flagging SDKs across many tech stacks. That is a lot of effort, and it is duplicated by every feature management solution. Additionally, while feature management is widely accepted as essential to modern software delivery, these differences make the barrier to entry seem too high for some teams. Standardizing feature management will let organizations worry less about integrating across their tech stack, so they can simply get started using feature flags!

Ultimately, we see OpenFeature as an important opportunity to promote good software practices through developing a vendor-neutral approach and building greater feature flag awareness.

Introducing OpenFeature

Created to support a robust feature flag ecosystem using cloud-native technologies, OpenFeature is a collective effort across multiple vendors and verticals. The mission of OpenFeature is to improve the software development lifecycle, no matter the size of the project, by standardizing feature flagging for developers.

By defining a standard API and providing a common SDK, OpenFeature will provide a language-agnostic, vendor-neutral standard for feature flagging. This provides flexibility for organizations, and their application integrators, to choose the solutions that best fit their current requirements while avoiding code-level lock-in.

Feature management solutions, like Split, will implement “providers” which integrate into the OpenFeature SDK, allowing users to rely on a single, standard API for flag evaluation across every tech stack. Ultimately, the hope is that this standardization will provide the confidence for more development teams to get started with feature flagging.
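To make the provider idea concrete, here is a minimal sketch of the pattern in Python: the application codes against one client API, and each vendor supplies an adapter behind it. The class and method names (`FlagProvider`, `InMemoryProvider`, `FlagClient`, `get_bool`) are hypothetical and are not the OpenFeature SDK's actual API; see https://openfeature.dev for the real interfaces.

```python
from abc import ABC, abstractmethod
from typing import Dict, Optional


class FlagProvider(ABC):
    """Hypothetical vendor-side adapter interface."""

    @abstractmethod
    def resolve_boolean(self, flag_key: str, default: bool,
                        targeting_key: Optional[str]) -> bool:
        ...


class InMemoryProvider(FlagProvider):
    """Stand-in for a vendor provider, backed by a plain dict."""

    def __init__(self, flags: Dict[str, bool]) -> None:
        self._flags = flags

    def resolve_boolean(self, flag_key: str, default: bool,
                        targeting_key: Optional[str]) -> bool:
        return self._flags.get(flag_key, default)


class FlagClient:
    """Application-facing API that stays the same whichever provider is plugged in."""

    def __init__(self, provider: FlagProvider) -> None:
        self._provider = provider

    def set_provider(self, provider: FlagProvider) -> None:
        self._provider = provider

    def get_bool(self, flag_key: str, default: bool,
                 targeting_key: Optional[str] = None) -> bool:
        try:
            return self._provider.resolve_boolean(flag_key, default, targeting_key)
        except Exception:
            # A failing provider should never break the application.
            return default
```

Switching vendors means calling `set_provider` with a different adapter; the `get_bool` call sites in application code stay unchanged. Falling back to the caller's default on provider errors is a design choice in this sketch that keeps flag evaluation from crashing the application.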

Final Thoughts

“OpenFeature is a timely initiative to promote a standardized implementation of feature flags. Time and again we’ve seen companies reinventing the wheel and hand-rolling their feature flags. At Split, we believe that every feature should be behind a feature flag, and that feature flags are best when paired with data. OpenFeature support for OpenTelemetry is a great step in the right direction,” said Pato Echagüe, Split CTO and sitting member of the OpenFeature consortium.

We are confident in the power of feature flagging and believe that the future of software delivery is progressive, powered by feature management solutions like Split. We hope OpenFeature will be a win for both development teams and vendors, including feature management tools and third-party solutions across the tech stack. Most importantly, this initiative will continue to push feature flagging forward as a standard best practice for all modern software delivery.

To learn more about OpenFeature, we invite you to visit: https://openfeature.dev.

Get Split Certified

Split Arcade includes product explainer videos, clickable product tutorials, manipulatable code examples, and interactive challenges.

Switch It On With Split

The Split Feature Data Platform™ gives you the confidence to move fast without breaking things. Set up feature flags and safely deploy to production, controlling who sees which features and when. Connect every flag to contextual data, so you can know if your features are making things better or worse and act without hesitation. Effortlessly conduct feature experiments like A/B tests without slowing down. Whether you’re looking to increase your releases, to decrease your MTTR, or to ignite your dev team without burning them out, Split is both a feature management platform and partnership to revolutionize the way work gets done. Switch on a free account today, schedule a demo, or contact us for further questions.

The Benefits of Streaming Architecture for Feature Flags

Delivering feature flags with lightning speed and reliability has always been one of our top priorities at Split. We’ve continuously improved our architecture as we’ve served more and more traffic over the past few years; last month alone, we served half a trillion flags. To support this growth, we use a stable and simple polling architecture to propagate all feature flag changes to our SDKs.

At the same time, we’ve stayed focused on honoring one of our company values, “Every Customer.” We listen to customer feedback and weigh it during each of our quarterly prioritization sessions. Over the course of those sessions, we recognized that immediately propagating changes to SDKs was important to many customers, so we decided to invest in a real-time streaming architecture.

Our Approach to Streaming Architecture

Early this year, we began work on a new streaming architecture that broadcasts feature flag changes immediately. We plan to make it the default as we complete its rollout over the next two months.

For this streaming architecture, we chose Server-Sent Events (SSE) as the delivery mechanism. SSE allows a server to push data asynchronously to a client (or a server) once a connection is established. It runs over standard HTTPS, and it has an advantage over other protocols: browsers expose a standard JavaScript client API for it, EventSource, which is part of the HTML5 standard and implemented in most modern browsers.
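Under the hood, an SSE stream is plain text (`text/event-stream`): each event is a group of `field: value` lines terminated by a blank line, and lines starting with `:` are comments, commonly used as keep-alives. Browsers parse this format through `EventSource`; a server-side SDK without that API has to parse it itself. The following is a simplified Python parser that handles only the `event`, `data`, and `id` fields. It is not Split's implementation and assumes nothing about the payloads Split sends.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Optional


@dataclass
class SSEEvent:
    event: str
    data: str
    id: Optional[str] = None


def parse_sse(lines: Iterable[str]) -> Iterator[SSEEvent]:
    """Simplified text/event-stream parser.

    Handles `event`, `data`, and `id` fields, comment lines, and blank-line
    dispatch; ignores `retry` and other details of the full specification.
    """
    event_type = ""
    data_lines: List[str] = []
    last_id: Optional[str] = None
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the buffered event if it carries data.
            if data_lines:
                yield SSEEvent(event_type or "message", "\n".join(data_lines), last_id)
            event_type, data_lines = "", []
            continue
        if line.startswith(":"):
            continue  # comment line, often a keep-alive
        field, sep, value = line.partition(":")
        if sep and value.startswith(" "):
            value = value[1:]  # a single space after the colon is dropped
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
        elif field == "id":
            last_id = value  # persists across events, like EventSource's lastEventId
```

Multiple `data:` lines in one event are joined with newlines, and an event that is not followed by a blank line before the stream ends is discarded, as the HTML standard specifies.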

While real-time streaming using SSE will be the default going forward, customers will still have the option to choose polling by setting the configuration on the SDK side.

Streaming Architecture Performance

Benchmarking latency over the Internet is always tricky and controversial because network conditions vary widely. For that reason, describing the test scenario is a key part of any such benchmark.

We created several test scenarios that measured the latency from the moment a feature flag (split) change was made until:

- The push notification arrived

- The last piece of the message payload was received

We then ran this test several times from different locations to see how latency varies from one place to another.

In all of these scenarios, push notifications arrived within a few hundred milliseconds, and the full message containing all the feature flag changes consistently arrived in under a second. This last measurement includes the time until the last byte of the payload arrives.
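The two measurements are simple differences between timestamps captured for each run. The sketch below assumes hypothetical field names and millisecond timestamps; it only shows the arithmetic and does not reproduce Split's benchmark harness or results.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Run:
    """Timestamps, in milliseconds, captured for one benchmark run."""
    change_made_ms: float
    notification_ms: float
    last_byte_ms: float


def summarize(runs: List[Run]) -> Dict[str, float]:
    if not runs:
        raise ValueError("need at least one run")
    # Time from the flag change to the push notification arriving.
    notif = [r.notification_ms - r.change_made_ms for r in runs]
    # Time from the flag change to the last byte of the payload arriving.
    full = [r.last_byte_ms - r.change_made_ms for r in runs]
    return {
        "max_notification_ms": max(notif),
        "max_full_payload_ms": max(full),
        "mean_full_payload_ms": sum(full) / len(full),
    }
```

Because the change timestamp and the arrival timestamps are usually recorded on different machines, their clocks need to be synchronized; otherwise the computed latencies include clock skew.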

As we march toward general availability of this functionality, we’ll continue to run these benchmarks, including from new locations, so we can keep tuning the systems to achieve acceptable performance and latency. So far, we are pleased with the results, and we look forward to rolling it out to everyone soon.

Choosing When Streaming or Polling Is Best for You

Both streaming and polling offer a reliable, highly performant platform to serve splits to your apps.

Streaming will become the default mode because it offers:

- Immediate propagation of changes made to flags.

- Reduced network traffic, as the server sends data only when there is something to send (aside from a small amount of keep-alive traffic that holds the connection open).

- Native browser support, when using SSE, for sophisticated cases such as automatic reconnection.

If the SDK detects any issues with the streaming service, it falls back to polling.
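That fallback can be sketched as a small mode switch: try streaming first, and if the stream cannot be established or later breaks, start polling. This is a hypothetical illustration of the described behavior, not the Split SDK's internals; `connect_stream` and `poll_once` stand in for real network calls so the logic can run without a server.

```python
from typing import Callable


class SyncManager:
    """Hypothetical sketch: prefer streaming, fall back to polling."""

    def __init__(self, connect_stream: Callable[[], None],
                 poll_once: Callable[[], None]) -> None:
        self._connect_stream = connect_stream
        self._poll_once = poll_once
        self.mode = "stopped"

    def start(self) -> str:
        try:
            self._connect_stream()
            self.mode = "streaming"
        except OSError:
            # Covers ConnectionError and other network failures.
            self.mode = "polling"
        return self.mode

    def on_stream_error(self) -> None:
        # Called when an already-open stream breaks.
        self.mode = "polling"

    def tick(self) -> None:
        # Called on the polling interval; a no-op while streaming is healthy.
        if self.mode == "polling":
            self._poll_once()
```

A production SDK would likely also retry streaming periodically and switch back once the connection recovers; that step is omitted here for brevity.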

In some cases, polling is preferable. Rather than reacting to push messages, a client in polling mode asks the server for new data on a user-defined interval. The benefits of polling include:

- Easier to scale, stateless, and less memory-intensive, because each connection is treated as an independent request.

- More tolerant of unreliable connectivity environments, such as mobile networks.

- Avoids security concerns around keeping connections open through firewalls.
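A polling client can be sketched as a loop that asks the server for changes since the last version it has seen. Because each request carries its own cursor (`since`), the server needs no per-client state, which is what makes polling stateless and easy to scale. The `fetch(since)` signature and integer version numbers are assumptions for illustration; the `iterations` parameter exists only so the loop terminates in a test.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical fetch signature: fetch(since) -> (latest_version, changed_flags)
Fetch = Callable[[int], Tuple[int, Dict[str, str]]]


def poll(fetch: Fetch, interval_s: float, iterations: int,
         sleep: Callable[[float], None] = time.sleep) -> Dict[str, str]:
    flags: Dict[str, str] = {}
    since = -1  # no version seen yet
    for _ in range(iterations):  # a real client would loop until shutdown
        version, changes = fetch(since)
        if version > since:
            # Apply only newer data and advance the cursor.
            flags.update(changes)
            since = version
        sleep(interval_s)  # wait for the user-defined interval
    return flags
```

Injecting `sleep` keeps the loop testable without real delays; in production the default `time.sleep` enforces the polling interval.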

Streaming Architecture and an Exciting Future for Split

We are excited about the capabilities this new streaming architecture will bring to delivering feature flag changes. We’re rolling it out in stages starting in early May. If you are interested in early access to this functionality, contact your Split account manager or email support@split.io to join the beta.

To learn about other upcoming features and be the first to see all our content, we’d love to have you follow us on Twitter!


Overcoming Experimentation Obstacles In B2B

Determine the Optimal Traffic Type