
For the last decade, DevOps has been obsessed with speed – automating CI/CD, testing, infrastructure, and even feature rollout. But one critical layer has been left almost entirely manual: the database. Teams still write SQL scripts by hand, deploy them at midnight, and pray that rollback plans work. At Harness, we think it’s time to fix that.

Today, Harness is launching AI-Powered Database Migration Authoring, a new capability within Harness Database DevOps that brings “vibe coding for databases” to life – where developers can create safe, compliant database migrations simply by describing them in plain language.

This is the next step in Harness’s vision to bring automation to every stage of the software delivery lifecycle, from code to cloud to database.

Database DevOps: One of the Fastest-Growing Modules at Harness

Harness’s Database DevOps offering removes one of the last blockers in modern software delivery: slow, manual database schema changes.

Ask any engineering leader what slows down their release cycles, and the answer will sound familiar: “We can deploy apps fast, but database changes always hold us back.” While CI/CD transformed how applications are released, most teams still manage schema updates through SQL scripts, spreadsheet tracking, and late-stage approvals.

Harness closes this gap by treating database changes like application code. Updates are versioned in Git, validated with policy-as-code, deployed through governed pipelines, and rolled back automatically if needed. A unified dashboard provides full visibility into what is deployed where, enabling teams to compare environments and maintain a comprehensive audit trail.

Harness is the only DevOps platform with a fully integrated, enterprise-grade Database DevOps solution, not a plug-in or point tool. And customers need it! As one of the fastest-growing modules at Harness, Database DevOps delivers value to organizations like Athenahealth.

“Harness gave us a truly out-of-the-box solution with features we couldn’t get from Liquibase Pro or a homegrown approach. We saved months of engineering effort and got more for less – with better governance, orchestration, and visibility.”

— Daniel Gabriel, Senior Software Engineer, Athenahealth

The Market Shift: From Continuous Delivery to “Vibe Coding”

AI has transformed how code is written, but software delivery remains stuck in the past. “Vibe coding” is speeding up creation, yet the systems that move code into production – including testing, security, and database delivery – haven’t kept pace.

In a recent Harness study, 63% of organizations report shipping code faster since adopting AI, but 72% have suffered at least one production incident caused by AI-generated code. The result is the AI Velocity Paradox: faster coding, slower delivery.

But there’s a solution. 83% of leaders agree that AI must extend across the entire SDLC to unlock its full potential. Database DevOps helps to close that gap by extending AI-powered automation and governance to the last mile of DevOps: the database.

Introducing AI-Powered Database Migration Authoring

With AI-Powered Database Migration Authoring, any developer can describe the database change they need in natural language, like –

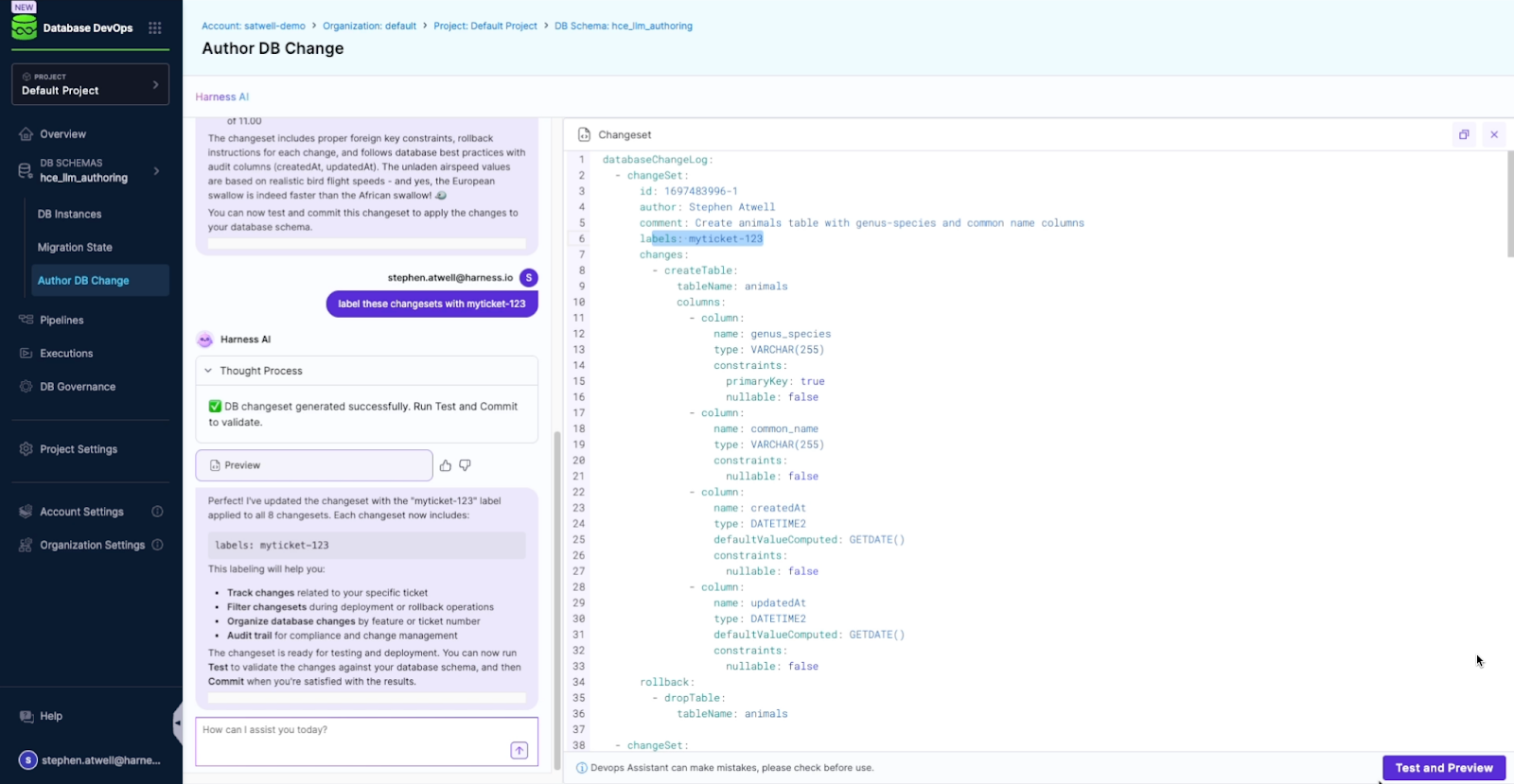

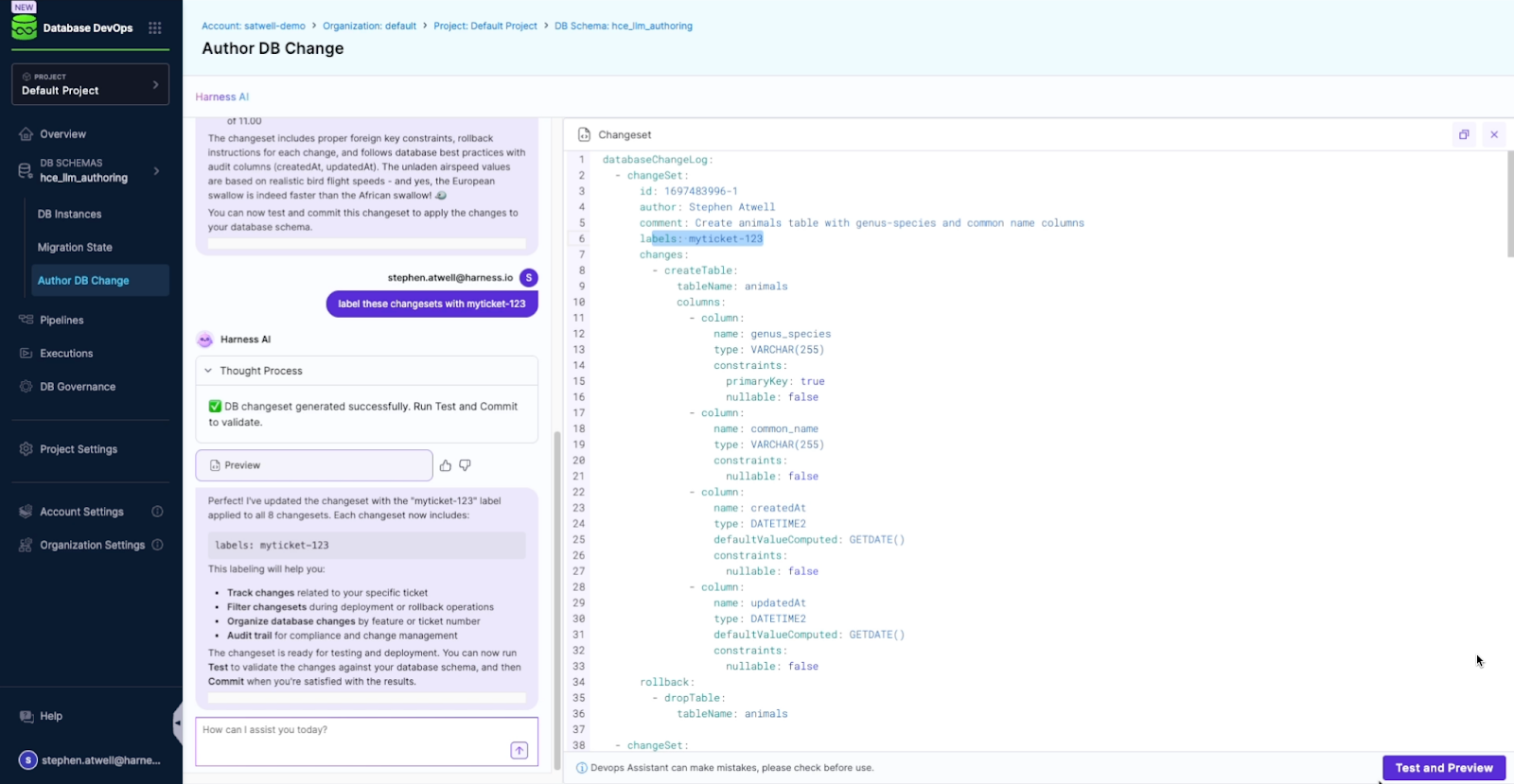

“Create a table named animals with columns for genus_species and common_name. Then add a related table named birds that tracks unladen airspeed and proper name. Add rows for Captain Canary, African swallow, and European swallow.”

– and Harness will generate a compliant, production-ready migration complete with rollback scripts, validation, and Git integration. Capabilities include:

- Analyzing the current schema and policies

- Generating the correct, backward-compatible migration

- Validating the change for safety and compliance

- Committing it to Git for testing through CI/CD

- Creating rollback migrations to ensure complete reversibility
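As a rough illustration of what "backward-compatible" and "validating the change for safety" can mean in practice, here is a minimal Python sketch (not Harness's implementation) that scans a parsed Liquibase changelog for changes that would typically break application code still running against the old schema. The rule set in BREAKING_CHANGE_TYPES and the function name are assumptions for illustration; the dict shape follows Liquibase's YAML changelog format.

```python
# Hypothetical rule set: Liquibase change types that usually break code
# still reading the old schema (an assumption, not Harness's actual rules).
BREAKING_CHANGE_TYPES = {
    "dropTable",
    "dropColumn",
    "renameTable",
    "renameColumn",
    "modifyDataType",
}


def breaking_changes(changesets):
    """Return (changeset id, reason) pairs for changes that are not backward compatible.

    `changesets` mirrors a parsed Liquibase YAML changelog:
    [{"changeSet": {"id": ..., "changes": [{<changeType>: {...}}, ...]}}, ...]
    """
    findings = []
    for entry in changesets:
        changeset = entry["changeSet"]
        for change in changeset.get("changes", []):
            for change_type, params in change.items():
                if change_type in BREAKING_CHANGE_TYPES:
                    findings.append((changeset["id"], change_type))
                elif change_type == "addColumn":
                    # A NOT NULL column without a default breaks inserts
                    # issued by older code that does not know the column.
                    for col in params.get("columns", []):
                        column = col["column"]
                        nullable = column.get("constraints", {}).get("nullable")
                        if nullable is False and "defaultValue" not in column:
                            findings.append((changeset["id"], "addColumn NOT NULL without default"))
    return findings
```

The check only flags such changes; whether a flagged change is then blocked, sent for approval, or split into an expand-and-contract rollout is a policy decision.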

Every migration is versioned, tested, governed, and fully auditable, just like your application code.

Trained on Best Practices, Guided by Your Governance

Harness AI isn’t a generic code assistant. It’s trained on proven database management best practices and guided by your organization’s existing governance rules.

It understands keys, constraints, triggers, backward compatibility, and compliance standards. DBAs retain oversight through policy-as-code and automated approvals, ensuring governance never becomes a bottleneck.

Why It Matters

This is more than an incremental feature – it’s a step toward AI-native DevOps, where systems understand intent, enforce policy, and automate delivery from code to cloud to database.

- For developers, AI removes one of the most frustrating dependencies in the release process.

- For DBAs, policy-as-code and automated rollback keep every change safe and auditable.

- For leaders, this offering turns the database from a bottleneck into an accelerator for innovation.

Harness Database DevOps now combines generative AI, policy-as-code, and CI/CD orchestration into one governed workflow. The result: faster releases, stronger governance, and fewer 2 a.m. rollbacks.

See It in Action

Harness’s AI Database Migration Authoring, like most of Harness AI, is powered by the Software Delivery Knowledge Graph and Harness’s MCP Server. This server knows about your database and your pipelines, and it comes with baked-in best practices to help you rapidly transform your company’s DevOps using AI.

Below is a preview of how Harness AI takes a simple English-language prompt and generates a compliant database migration complete with validation, rollback, and GitOps integration.

The Database Doesn’t Have to Be the Bottleneck

The last mile of DevOps has just caught up.

With Harness Database DevOps and AI-Powered Database Migration Authoring, database delivery becomes automated, governed, and safe – finally a first-class citizen in the CI/CD pipeline.

Learn more about Harness Database DevOps and book a demo to see AI-Powered Database Migration Authoring in action.

Improving Liquibase Developer Experience with Harness Database DevOps Automated Change Generation

This blog discusses how Harness Database DevOps can automate, govern, and deliver database schema changes safely, without requiring developers to author each change by hand.

Introduction

Adopting DevOps best practices for databases is critical for many organizations. With Harness Database DevOps, you can define, test, and govern database schema changes just like application code, automating the entire workflow from authoring to production while ensuring traceability and compliance. But we can do more: we can help your developers write their database changes in the first place.

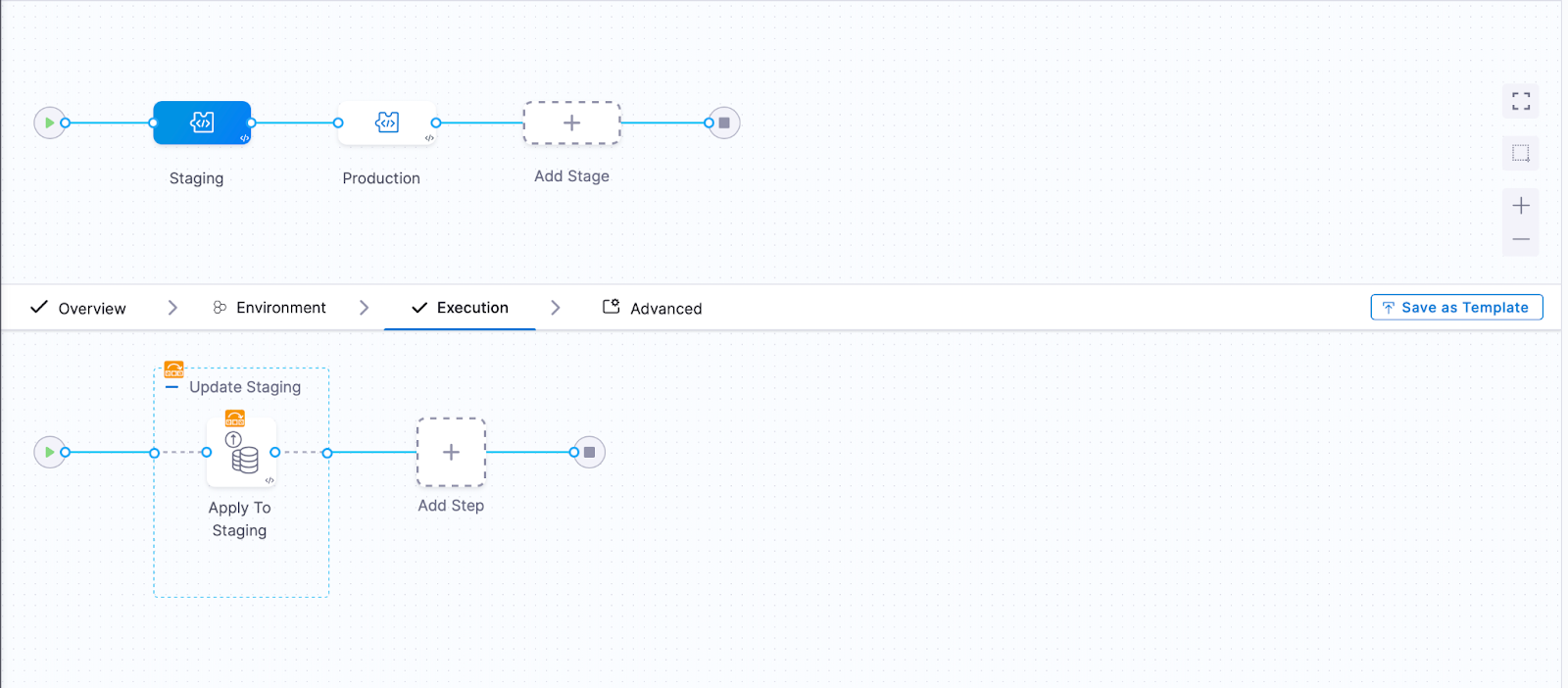

In this blog, we'll walk through a concrete example, using YAML configuration and a real pipeline, to show how Harness empowers teams to:

- Automatically generate database changes using snapshots and diffs

- Enforce governance before changes move beyond the authoring environment

- Ensure consistency across environments via CI/CD using a GitOps workflow

📽️ Companion Demo Video

To see this workflow in action, watch the companion demo:

In the demo, the pipeline captures changes, specifically adding a new column and index, and propagates those changes via Git before triggering further automated CI/CD, including rollback validation and governance.

Development teams adopting Liquibase for database continuous delivery can accelerate and standardize changeset authoring by leveraging Harness Database DevOps. Because developers can generate their changes by diffing database environments, they no longer need to write SQL or YAML by hand. This provides the benefits of state-based and script-based migrations at the same time: developers define the desired state of the database in an authoring environment, and the changes needed to get there are generated automatically.

Automated Authoring: Step-by-Step with Harness Pipelines

1. Sync Development and Authoring Environments

The workflow starts by ensuring that your development and authoring environments are in sync with Git, guaranteeing a clean baseline for change capture. The pipeline ensures the development environment reflects the current Git state before capturing a new authoring snapshot. This allows developers to use their environment of choice, such as database UI tools, for schema design. To do this, we just run the apply step for each environment.

2. Take an Authoring Snapshot

Harness uses the LiquibaseCommand step to snapshot the current schema in the authoring environment:

- step:
    type: LiquibaseCommand
    name: Authoring Schema Snapshot
    identifier: snapshot_authoring
    spec:
      connectorRef: account.harnessImage
      command: snapshot
      resources:
        limits:
          memory: 2Gi
          cpu: "1"
      settings:
        output-file: mySnapshot.json
        snapshot-format: json
      dbSchema: pipeline_authored
      dbInstance: authoring
      excludeChangeLogFile: true
    timeout: 10m
    contextType: Pipeline

3. Generate a Diff Changelog

Next, the pipeline diffs the snapshot against the development database, generating a Liquibase YAML changelog (diff.yaml) that describes all the changes made in the authoring environment. Again, this uses the LiquibaseCommand step:

- step:
    type: LiquibaseCommand
    name: Diff as Changelog
    identifier: diff_dev_as_changelog
    spec:
      connectorRef: account.harnessImage
      command: diff-changelog
      resources:
        limits:
          memory: 2Gi
          cpu: "1"
      settings:
        reference-url: offline:mssql?snapshot=mySnapshot.json
        author: <+pipeline.variables.email>
        label-filter: <+pipeline.variables.ticket_id>
        generate-changeset-created-values: "true"
        generated-changeset-ids-contains-description: "true"
        changelog-file: diff.yaml
      dbSchema: pipeline_authored
      dbInstance: development
      excludeChangeLogFile: true
    timeout: 10m
    when:
      stageStatus: Success
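Conceptually, the diff-changelog step compares the reference (here, the authoring snapshot) with the target database (development) and emits the changes that would bring the target in line with the reference. The minimal Python sketch below shows that idea on a toy schema model of tables, columns, and indexes. It is not how Liquibase implements the diff, it only handles additions, and the Schema class and function names are hypothetical; the change dicts merely mimic the shape of Liquibase's YAML change types (column types omitted).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Schema:
    """Toy schema model (an assumption, far simpler than a Liquibase snapshot)."""
    tables: dict[str, list[str]] = field(default_factory=dict)  # table -> column names
    indexes: dict[str, tuple[str, list[str]]] = field(default_factory=dict)  # index -> (table, columns)


def columns(names):
    # Liquibase YAML nests each column as {"column": {...}}; types are omitted here.
    return [{"column": {"name": n}} for n in names]


def diff_changes(reference: Schema, target: Schema) -> list[dict]:
    """Changes that would make `target` match `reference` (additions only).

    A real diff also covers drops, type changes, constraints, and more.
    """
    changes = []
    for table, cols in reference.tables.items():
        if table not in target.tables:
            changes.append({"createTable": {"tableName": table, "columns": columns(cols)}})
            continue
        missing = [c for c in cols if c not in target.tables[table]]
        if missing:
            changes.append({"addColumn": {"tableName": table, "columns": columns(missing)}})
    for name, (table, cols) in reference.indexes.items():
        if name not in target.indexes:
            changes.append({"createIndex": {"indexName": name, "tableName": table,
                                            "columns": columns(cols)}})
    return changes
```

For an authoring schema that adds a job_title column and an idx_person_job_title index to a person table, the sketch yields an addColumn followed by a createIndex, the same kind of change the demo captures.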

4. Merge Diff Changelog with the Central Changelog Using yq

Because the changelog in Git should include every change ever deployed, the pipeline merges the auto-generated diff.yaml into the main changelog with a Run step that uses yq for structured YAML manipulation. The script also annotates each new changeset with a comment and a ticket-based ID, then prints the merged changelog to the log so the user can review it.

- step:
    type: Run
    name: Output and Merge
    identifier: Output_and_merge
    spec:
      connectorRef: Dockerhub
      image: mikefarah/yq:4.45.4
      shell: Sh
      command: |
        # Optionally annotate changesets
        yq '.databaseChangeLog.[].changeSet.comment = "<+pipeline.variables.comment>" | .databaseChangeLog.[] |= .changeSet.id = "<+pipeline.variables.ticket_id>-"+(path | .[-1])' diff.yaml > diff-comments.yaml
        # Merge new changesets into the main changelog
        yq -i 'load("diff-comments.yaml") as $d2 | .databaseChangeLog += $d2.databaseChangeLog' dbops/ensure_dev_matches_git/changelogs/pipeline-authored/changelog.yml
        # Output the merged changelog (for transparency/logging)
        cat dbops/ensure_dev_matches_git/changelogs/pipeline-authored/changelog.yml
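For readers less familiar with yq, here is a minimal Python sketch of what the two expressions above do, as we read them, applied to already-parsed YAML (plain dicts and lists; reading and writing the files, for example with PyYAML, is left out). The function names annotate and merge are illustrative.

```python
import copy


def annotate(diff_changelog: dict, ticket_id: str, comment: str) -> dict:
    """Like the first yq call: set a comment and a ticket-based id on each changeset."""
    annotated = copy.deepcopy(diff_changelog)  # leave the parsed diff.yaml untouched
    for position, entry in enumerate(annotated["databaseChangeLog"]):
        entry["changeSet"]["comment"] = comment
        # `path | .[-1]` in the yq expression is the entry's 0-based list index.
        entry["changeSet"]["id"] = f"{ticket_id}-{position}"
    return annotated


def merge(main_changelog: dict, annotated: dict) -> dict:
    """Like the second yq call: append the new changesets to the main changelog."""
    merged = copy.deepcopy(main_changelog)
    existing = merged.get("databaseChangeLog") or []  # treat a missing list as empty
    merged["databaseChangeLog"] = existing + annotated["databaseChangeLog"]
    return merged
```

Calling merge(main, annotate(diff, "DB-42", "add job title")) appends the new changesets with ids DB-42-0, DB-42-1, and so on, after the existing ones.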

5. Commit to Git

Once merged, the pipeline commits the updated changelog to your Git repository.

- step:
    type: Run
    name: Commit to Git
    identifier: Commit_to_git
    spec:
      connectorRef: Dockerhub
      image: alpine/git
      shell: Sh
      command: |
        cd dbops/ensure_dev_matches_git
        git config --global user.email "<+pipeline.variables.email>"
        git config --global user.name "Harness Pipeline"
        git add changelogs/pipeline-authored/changelog.yml
        git commit -m "Automated changelog update for ticket <+pipeline.variables.ticket_id>"
        git push

This push kicks off further CI/CD workflows for deployment, rollback testing, and integration validation in the development environment. The Git repository uses a separate branch for each environment, so promoting a change through staging to production is done by merging pull requests.
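The branch-per-environment layout can be pictured as an ordered chain in which each promotion is a pull request from one branch into the next. A minimal Python sketch, assuming hypothetical branch names development, staging, and production (the repository's actual branch names are not given here):

```python
# Hypothetical promotion order; substitute your repository's branch names.
PROMOTION_ORDER = ["development", "staging", "production"]


def promotion_prs(order=PROMOTION_ORDER):
    """(source, target) branch pairs: one pull request per promotion hop."""
    return list(zip(order, order[1:]))


def next_promotion(current_env, order=PROMOTION_ORDER):
    """Pull request that promotes a change currently deployed in `current_env`."""
    index = order.index(current_env)
    if index == len(order) - 1:
        return None  # already in the last environment
    return (order[index], order[index + 1])
```

Because each hop is an ordinary pull request, the approvals and checks configured on the target branch apply before the change reaches that environment.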

Enforcing Database Change Policies

To ensure regulatory and organizational compliance, teams can automatically enforce their policies at deployment time. The demo shows a policy being violated and the change then being fixed to comply. The policy used in the demo, shown below, enforces a naming convention for indexes.

Example: SQL Index Naming Convention Policy

package db_sql

deny[msg] {
    some l
    sql := lower(input.sqlStatements[l])
    regex.match(`(?i)create\s+(NonClustered\s+)?index\s+.*`, sql)
    matches := regex.find_all_string_submatch_n(`(?i)create\s+(NonClustered\s+)?index\s+([^\s]+)\s+ON\s+([^\s(]+)\s?\(\[?([^])]+)+\]?\);`, sql, -1)[0]
    idx_name := matches[2]
    table_name := matches[3]
    column_names := strings.replace_n({" ": "_", ",": "__"}, matches[4])
    expected_index_name := concat("_", ["idx", table_name, column_names])
    idx_name != expected_index_name
    msg := sprintf("Index creation does not follow naming convention.\n SQL: '%s'\n expected index name: '%s'", [sql, expected_index_name])
}

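This policy automatically detects and blocks non-compliant index names in developer-authored SQL. The expected name is idx_<table>_<columns>, where spaces in the column list become _ and commas become __.

In the demo, a policy violation appears if a developer’s generated index (e.g., person_job_title_idx) doesn’t match the convention; for an index on person(job_title), the policy expects idx_person_job_title.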
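To experiment with the convention outside of Harness, the policy's logic can be ported to a few lines of Python. This is a minimal sketch for local testing only (Harness evaluates the Rego policy itself); the function names are illustrative, the ] inside the column character class is escaped for readability, and the message omits the policy's line breaks.

```python
import re

# The policy's CREATE INDEX pattern; IGNORECASE plays the role of Rego's (?i).
CREATE_INDEX = re.compile(
    r"create\s+(NonClustered\s+)?index\s+([^\s]+)\s+ON\s+([^\s(]+)\s?\(\[?([^\])]+)+\]?\);",
    re.IGNORECASE,
)


def expected_index_name(sql: str):
    """Return (actual, expected) index names, or None if `sql` is not a CREATE INDEX."""
    match = CREATE_INDEX.search(sql.lower())
    if match is None:
        return None
    idx_name, table_name, column_list = match.group(2), match.group(3), match.group(4)
    # Same substitutions as the policy: spaces -> "_", commas -> "__".
    column_names = column_list.replace(" ", "_").replace(",", "__")
    return idx_name, "_".join(["idx", table_name, column_names])


def violations(sql_statements):
    """One message per CREATE INDEX statement whose name breaks the convention."""
    messages = []
    for sql in sql_statements:
        names = expected_index_name(sql)
        if names is not None and names[0] != names[1]:
            messages.append(
                "Index creation does not follow naming convention. "
                f"SQL: '{sql.lower()}' expected index name: '{names[1]}'"
            )
    return messages
```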

Conclusion

Harness Database DevOps amplifies Liquibase’s value by automating changelog authoring, merging, Git commits, deployment, and pipeline orchestration, reducing human error, boosting speed, and ensuring every change is audit-ready and policy-compliant. Developers can focus on schema improvements, while automation and policy steps enable safe, scalable delivery.

Ready to modernize your database CI/CD?

Learn more about how you can improve your developer experience for database changes, or contact us to discuss your particular use case.



Open Source Liquibase MongoDB Native Executor by Harness

Harness Database DevOps is introducing an open source native MongoDB executor for Liquibase Community Edition. The goal is simple: make MongoDB workflows easier, fully open, and accessible for teams already relying on Liquibase without forcing them into paid add-ons.

This launch focuses on removing friction for open source users, improving MongoDB success rates, and contributing meaningful functionality back to the community.

Why Does Liquibase MongoDB Support Matter for Open Source Users?

Teams using MongoDB often already maintain scripts, migrations, and operational workflows. However, running them reliably through Liquibase Community Edition has historically required workarounds, limited integrations, or commercial extensions.

This native executor changes that. It allows teams to:

- Run existing MongoDB scripts directly through Liquibase Community Edition.

- Avoid rewriting database workflows just to fit tooling limitations.

- Keep migrations versioned and automated alongside application CI/CD.

- Stay within a fully open source ecosystem.

This is important because MongoDB adoption continues to grow across developer platforms, fintech, eCommerce, and internal tooling. Teams want consistency: application code, infrastructure, and databases should all move through the same automation pipelines. The executor helps bring MongoDB into that standardized DevOps model.

It also reflects a broader philosophy: core database capabilities should not sit behind paywalls when the community depends on them. By open-sourcing the executor, Harness is enabling developers to move faster while keeping the ecosystem transparent and collaborative.

Liquibase MongoDB Native Executor: What It Enables In Community Edition

With the native MongoDB executor:

- Liquibase Community can execute MongoDB scripts natively

- Teams can reuse existing operational scripts

- Database changes become traceable and repeatable

- Migration workflows align with CI/CD practices

This improves the success rate for MongoDB users adopting Liquibase because the workflow becomes familiar rather than forced. Instead of adapting MongoDB to fit the tool, the tool now works with MongoDB.

How To Install The Liquibase MongoDB Extension (Step-By-Step)

1. Download the extension: Getting started is straightforward. The Liquibase MongoDB extension is hosted on the HAR registry and can be downloaded with the command below:

curl -L \
  "https://us-maven.pkg.dev/gar-prod-setup/harness-maven-public/io/harness/liquibase-mongodb-dbops-extension/1.1.0-4.24.0/liquibase-mongodb-dbops-extension-1.1.0-4.24.0.jar" \
  -o liquibase-mongodb-dbops-extension-1.1.0-4.24.0.jar

2. Add the extension to Liquibase: Place the downloaded JAR file in the Liquibase library directory, for example "LIQUIBASE_HOME/lib/".

3. Configure Liquibase: Update the Liquibase configuration to point to the MongoDB connection and changelog files.

4. Run migrations: Run the "liquibase update" command; Liquibase Community will then execute MongoDB scripts using the native executor.
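The download URL in step 1 follows the standard Maven repository layout: repository, then the group ID with dots turned into path segments, then artifact ID, version, and artifactId-version.jar. If you script the installation, a helper like the one below can rebuild that URL from its parts and place the JAR in LIQUIBASE_HOME/lib. This is a minimal Python sketch: the function names are illustrative, LIQUIBASE_HOME is assumed to be set, and whether other versions of the extension are published under the same coordinates is not covered here.

```python
import os
import urllib.request
from pathlib import Path

# Coordinates taken from the download URL in step 1.
REPOSITORY = "https://us-maven.pkg.dev/gar-prod-setup/harness-maven-public"
GROUP_ID = "io.harness"
ARTIFACT_ID = "liquibase-mongodb-dbops-extension"


def jar_url(version, repository=REPOSITORY, group_id=GROUP_ID, artifact_id=ARTIFACT_ID):
    """Build a Maven-layout JAR URL (group ID dots become path segments)."""
    group_path = group_id.replace(".", "/")
    return f"{repository}/{group_path}/{artifact_id}/{version}/{artifact_id}-{version}.jar"


def install(version, liquibase_home=None):
    """Download the JAR into <LIQUIBASE_HOME>/lib (steps 1 and 2 above)."""
    home = Path(liquibase_home or os.environ["LIQUIBASE_HOME"])
    destination = home / "lib" / f"{ARTIFACT_ID}-{version}.jar"
    urllib.request.urlretrieve(jar_url(version), destination)
    return destination
```

For example, install("1.1.0-4.24.0") fetches the same file as the curl command in step 1.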

Generating MongoDB Changelogs From A Running Database

Migration adoption often stalls when teams lack a clean way to generate changelogs from an existing database. To address this, Harness is also sharing a Python utility that mirrors the behavior of "generate-changelog" for MongoDB environments.

The script:

- Connects to a live MongoDB instance

- Reads configuration and structure

- Produces a Liquibase-compatible changelog

- Helps teams transition from unmanaged MongoDB to versioned workflows

This reduces onboarding friction significantly. Instead of starting from scratch, teams can bootstrap changelogs directly from production-like environments. It bridges the gap between legacy MongoDB setups and modern database DevOps practices.
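To make the idea concrete, here is a minimal Python sketch of the core transformation such a utility performs: turning a description of existing collections and their indexes into a changelog structure. It is not the Harness utility. The input mirrors the dict returned by PyMongo's Collection.index_information(), and the output uses createCollection and createIndex change types modeled on the community liquibase-mongodb extension, with keys and options serialized as JSON strings; those change shapes and the baseline id scheme are assumptions to verify against the executor's documentation.

```python
import json


def changelog_from_metadata(metadata: dict, author: str) -> dict:
    """Build a Liquibase-style changelog (as dicts) from collection/index metadata.

    `metadata` maps collection name -> index_information()-style dict, e.g.
    {"users": {"_id_": {"key": [("_id", 1)], "v": 2},
               "email_1": {"key": [("email", 1)], "unique": True, "v": 2}}}
    """
    changesets = []
    for collection in sorted(metadata):
        changes = [{"createCollection": {"collectionName": collection}}]
        for index_name, info in sorted(metadata[collection].items()):
            if index_name == "_id_":
                continue  # MongoDB creates the _id index automatically
            # Keep index options such as unique/sparse; drop internal fields.
            options = {k: v for k, v in info.items() if k not in ("key", "v", "ns")}
            options["name"] = index_name
            changes.append({"createIndex": {
                "collectionName": collection,
                "keys": json.dumps(dict(info["key"])),  # dict keeps compound-key order
                "options": json.dumps(options),
            }})
        changesets.append({"changeSet": {
            "id": f"baseline-{collection}",  # hypothetical id scheme
            "author": author,
            "changes": changes,
        }})
    return {"databaseChangeLog": changesets}
```

With PyMongo, the input mapping could be collected as {name: db[name].index_information() for name in db.list_collection_names()}, where db is a pymongo Database object.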

Why Is Harness Contributing This To Open Source?

The intent is not just to release a tool. The intent is to strengthen the open ecosystem.

Harness believes:

- Foundational database capabilities should remain accessible.

- Community users deserve production-ready tooling.

- Open contributions drive innovation faster than closed ecosystems.

By contributing a native MongoDB executor:

- Liquibase Community users gain real functionality.

- MongoDB adoption inside DevOps workflows becomes easier.

- The ecosystem remains open and collaborative.

- MongoDB users adopting Liquibase Community see a higher success rate.

This effort also reinforces Harness as an active open source contributor focused on solving real developer problems rather than monetizing basic functionality.

The Most Complete Open Source Liquibase MongoDB Integration Available Today

The native executor, combined with changelog generation support, provides:

- Script execution

- Migration automation

- Changelog creation from running environments

- CI/CD alignment

Together, these create one of the most functional open source MongoDB integrations available for Liquibase Community users. The objective is clear: make it the default path developers discover when searching for Liquibase MongoDB workflows.

Start Using and Contributing to Liquibase MongoDB Today

Discover the open-source MongoDB native executor. Teams can adopt it in their workflows, extend its capabilities, and contribute enhancements back to the project. Progress in database DevOps accelerates when the community collaborates and builds in the open.

NoSQL Change Control for Compliance

As modern organizations continue their shift toward microservices, distributed systems, and high-velocity software delivery, NoSQL databases have become strategic building blocks. Their schema flexibility, scalability, and high throughput empower developers to move rapidly - but they also introduce operational, governance, and compliance risks. Without structured database change control, even a small update to a NoSQL document, key-value pair, or column family can cascade into production instability, data inconsistency, or compliance violations.

To sustain innovation at scale, enterprises need disciplined database change control for NoSQL - not as a bottleneck, but as an enabler of secure and reliable application delivery.

The Hidden Risks of Uncontrolled NoSQL Changes

Unlike relational systems, NoSQL databases place schema flexibility in the hands of developers. Enterprises that rely on NoSQL databases at scale are discovering the following truths:

- Flexibility without governance leads to instability.

- Data models must evolve as safely as application code.

- Compliance cannot rely on manual best-effort processes.

With structured change control:

- Schemas are versioned and peer-reviewed in Git

- Rollbacks are deterministic

- Environments stay consistent

- Audits pass without firefighting

- Data governance policies enforce themselves

- Compliance requirements (including GDPR’s “data integrity and confidentiality” mandate) are automatically met

NoSQL’s agility remains intact, while reliability, safety, and traceability are added.
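One way to see why rollbacks can be deterministic: for many change types the inverse is fully determined by the change itself. The minimal Python sketch below derives rollback steps for a list of MongoDB changes, using change names modeled on the community liquibase-mongodb extension (an assumption; actual rollback support depends on the tool and change type). Changes without a known inverse are reported rather than guessed, and rollback runs in reverse order.

```python
# Hypothetical inverse table for a few MongoDB change types.
INVERSES = {
    "createCollection": lambda p: {"dropCollection": {"collectionName": p["collectionName"]}},
    "createIndex": lambda p: {"dropIndex": {"collectionName": p["collectionName"],
                                            "keys": p["keys"]}},
}


def rollback_for(changes):
    """Return the inverse changes in reverse order; raise if one has no known inverse."""
    rollback = []
    for change in reversed(changes):
        [(change_type, params)] = change.items()  # each change has exactly one type
        if change_type not in INVERSES:
            raise ValueError(f"no automatic rollback for {change_type}; write one explicitly")
        rollback.append(INVERSES[change_type](params))
    return rollback
```

Data changes such as inserts are deliberately left out: their inverse depends on data, so they need an explicitly written rollback.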

Database Change Control as Part of CI/CD

To eliminate risk and release bottlenecks, NoSQL change control needs to operate inside CI/CD pipelines - not outside them. This ensures that:

- Database updates are stored in Git as the system of record

- Pull requests enforce approvals and peer review

- Pipeline-driven testing validates the impact of schema changes before deployment

- Deployment logs provide traceability for governance and audit teams

A database change ceases to be a manual, tribal-knowledge activity and becomes a first-class software artifact - designed, tested, versioned, deployed, and rolled back automatically.

How Harness Safeguards NoSQL Change Delivery

Harness Database DevOps extends CI/CD best practices to NoSQL by providing automated delivery, versioning, governance, and observability across the entire change lifecycle, including MongoDB. Instead of treating database changes as a separate operational track, Harness unifies database evolution with modern engineering practices:

- DataMigration-as-Code stored in Git

- Automated verification before deployment

- Impact analysis and data preview

- Pipeline-level enforcement across every stage

- End-to-end audit trails and compliance logging

- Governed rollbacks and non-destructive deployments

This unification allows enterprises to move fast and maintain control, without rewriting how teams work.

The Competitive Advantage of Doing This Right

High-growth teams that adopt change control for NoSQL environments report:

- Greater deployment confidence with lower production incident rates

- Sustained release velocity - without sacrificing data quality or security

- Reduced operational burden associated with GDPR, auditing, and governance

- Better alignment across developers, DBAs, SREs, and platform engineering

In short, the combination of NoSQL flexibility and automated governance allows enterprises to scale without trading speed for stability.

Final Thoughts

NoSQL databases have become fundamental to modern application architectures, but flexibility without control introduces operational risk. Implementing structured database change control - supported by CI/CD automation, runtime policy enforcement, and data governance - ensures that NoSQL deployments remain safe, compliant, and resilient even at scale.

Harness Database DevOps provides a unified platform for automating change delivery, enforcing compliance dynamically, and securing the complete database lifecycle - without slowing down development teams.

Harness Database DevOps Now Supports Google AlloyDB

As organizations double down on cloud modernization, Google Cloud’s AlloyDB for PostgreSQL is quickly becoming the preferred engine for mission-critical applications. Its high-performance, PostgreSQL-compatible architecture offers unparalleled scalability, yet managing schema changes, rollouts, and governance can still be challenging at enterprise scale.

With Harness Database DevOps now supporting AlloyDB, engineering teams can unify their end-to-end database delivery lifecycle under one automated, secure, and audit-ready platform. This deep integration enables you to operationalize AlloyDB migrations using the same GitOps, CI/CD, and governance workflows already powering your application deployments.

Why Does AlloyDB Matter for Modern Database Delivery?

AlloyDB offers a distributed PostgreSQL-compatible engine built for scale, analytical performance, and minimal maintenance overhead. It introduces capabilities such as:

- 24× faster analytics workloads with vectorized execution and adaptive caching

- Superior elasticity and high availability with automated storage and compute separation

- Full PostgreSQL compatibility with no proprietary syntax lock-in

- Native GCP integration, simplifying networking, IAM, security posture, and observability

Setting Up AlloyDB in Harness Database DevOps

Harness simplifies how teams establish connectivity with AlloyDB, manage authentication, and run PostgreSQL-compatible operations through Liquibase or Flyway. The AlloyDB Configuration Guide provides the full, end-to-end setup instructions, including connection requirements, JDBC formats, network prerequisites, and best-practice deployment patterns, so teams can onboard AlloyDB with confidence and operational rigor.
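Because AlloyDB speaks the PostgreSQL protocol, the connection uses a standard PostgreSQL JDBC URL of the form jdbc:postgresql://host:port/database. The minimal Python sketch below assembles one; the host, database name, and the choice of sslmode=require in the example are placeholder assumptions, so take the exact values and TLS settings from the AlloyDB Configuration Guide.

```python
from urllib.parse import urlencode


def alloydb_jdbc_url(host, database, port=5432, **params):
    """Build a PostgreSQL JDBC URL; extra keyword args become query parameters."""
    url = f"jdbc:postgresql://{host}:{port}/{database}"
    if params:
        url += "?" + urlencode(params)
    return url
```

For example, alloydb_jdbc_url("10.0.0.5", "orders", sslmode="require") returns jdbc:postgresql://10.0.0.5:5432/orders?sslmode=require.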

How Harness DB DevOps Operationalizes AlloyDB Workflows

Once the connection is established, AlloyDB benefits from the same enterprise-grade automation that Harness provides across all supported engines. This includes:

- GitOps-driven schema management using Liquibase or Flyway

- Pipeline-native governance with audit trails, approvals, and security policies

- Smart rollbacks and version-controlled SQL workflows

- Cross-environment promotions aligned with CI/CD best practices

Harness abstracts operational complexity, ensuring that every AlloyDB schema change is predictable, auditable, and aligned with platform engineering standards.

Key Benefits of Using Harness DB DevOps with AlloyDB

Organizations adopting this integration may see:

- 99% reduction in manual schema deployment overhead

- End-to-end CI/CD automation for PostgreSQL and AlloyDB workloads

- Policy-enforced governance and auditability

- Reduced operational risk through consistent rollbacks and validation

Moving forward: Cloud-Native Database Delivery on AlloyDB

AlloyDB’s performance and elasticity give teams a powerful foundation for modern application workloads. Harness DB DevOps amplifies this by providing consistency, guardrails, and automation across environments.

Together, they unlock a future-ready workflow where:

- Engineering teams ship faster

- DBAs maintain control and compliance

- Platform teams reduce operational overhead

- Organizations gain enterprise-grade resilience and governance

As cloud-native architectures continue to evolve, Harness and AlloyDB create a strategic synergy, making database delivery more scalable, more secure, and more aligned with modern DevOps principles.

Frequently Asked Questions

1. How does Harness connect securely to AlloyDB?

Harness leverages a secure JDBC connection using standard PostgreSQL drivers. All credentials are stored in encrypted secrets managers, and communication occurs through the Harness Delegate running inside your VPC, ensuring zero-trust alignment and no data egress exposure.

2. Do my existing Liquibase or Flyway workflows work with AlloyDB?

Yes. Since AlloyDB is fully PostgreSQL-compatible, your existing Liquibase or Flyway changesets, versioning strategies, and rollback workflows operate seamlessly. Harness simply orchestrates them with CI/CD, GitOps, and governance layers.

3. What if my organization requires strict governance and auditability?

Harness provides enterprise-grade audit logs, approval gates, policy-as-code (OPA), and environment-specific guardrails. Every migration, manual or automated, is fully traceable, ensuring regulatory compliance across environments.

4. Can Harness manage multi-environment promotions for AlloyDB?

Absolutely. Harness enables consistent dev → test → staging → production promotions with parameterized pipelines, drift detection, and automated validation steps. Each promotion is version-controlled and follows your organization’s release governance.

DBA vs Developer Dynamics: Bridging the Gap with Database DevOps

In every engineering organization, there’s an invisible tug-of-war that plays out almost daily, with developers racing to ship features and DBAs guarding the gates of data stability. Both teams work toward the same outcome (reliable, high-performing software), but their paths often diverge at the intersection of change.

This is where friction brews due to process, pressure, and perspective, and every failed release, every schema rollback, and every late-night incident only deepens the misunderstanding. But it’s also where the opportunity for transformation lies.

A Tale of Two Mindsets

Imagine this:

A developer finishes a new feature, ready to deploy it before the sprint demo. Their code depends on a schema change: a new column, a renamed table, maybe a simple constraint. It’s small, they think. It should be safe.

Across the room, the DBA frowns. They know that a “small change” can cascade into a large issue. One missing index or wrong default value can slow down queries, lock tables, or even cause an outage. Their instinct is to review, test, and double-check before touching production.

Neither is wrong. The developer is driven by speed. The DBA is anchored in safety.

What’s missing is a bridge, a shared workflow that lets both move with confidence.

When the Schema Fails, Everyone Feels It

Many teams have lived through this story: a migration script runs perfectly in staging but fails in production. The rollback doesn’t trigger as planned. Dashboards turn red. The chat channels fill with messages.

Developers scramble to patch the issue. DBAs comb through logs, searching for a root cause. It’s chaos, not because someone made a mistake, but because the process didn’t protect them.

These moments are never about blame. They’re about visibility and coordination. A system that doesn’t give both teams a common view of database change is like flying without radar: fast, but blind.

DevOps Changed Everything

DevOps revolutionized how we ship applications. Continuous Integration and Continuous Delivery (CI/CD) pipelines made deployments faster, safer, and traceable. Yet, while code pipelines evolved, the database layer stayed behind: guarded, manual, and slow.

This is where modern Database DevOps steps in, not to replace DBAs, but to empower them.

Platforms like Harness Database DevOps are built around collaboration. They bring schema changes into the same pipeline as code, with visibility, approvals, and guardrails for both sides.

Instead of asking developers to “just trust the DBAs” or DBAs to “just approve faster,” it lets the process speak for itself through automation, audit trails, and predictable rollbacks.

Database DevOps gives both developers and DBAs a common ground. Developers can submit migration scripts confidently, knowing every step will be verified in a controlled environment. DBAs can set policies, enforce standards, and still sleep well at night knowing rollbacks and validations are built in.

The process turns from a tug-of-war into a handshake. Instead of “your script broke production,” the conversation shifts to “our workflow caught this early.” That’s the magic of shared context - empathy through automation.

The Real Story Behind DBA vs Dev

In essence, “DBA vs Dev” was never a rivalry; it was a misalignment of priorities. When process, empathy, and automation align, the divide disappears. What remains is a shared mission: shipping change that both teams can stand behind, confidently and together. The future of database delivery isn’t about faster migrations or stricter reviews; it’s about harmony between precision and speed, between caution and innovation, between humans and the systems they build.

FAQs

1. What causes tension between developers and DBAs?

Differing priorities (speed versus safety) often create friction. Developers aim for agility, while DBAs focus on data integrity and performance.

2. How can Database DevOps reduce conflicts?

It introduces shared workflows, automated validation, and clear governance, allowing both teams to collaborate instead of working in isolation.

3. Is automation replacing DBAs?

Not at all. Automation frees DBAs from repetitive tasks so they can focus on strategy, optimization, and data reliability.

4. How does Harness Database DevOps support collaboration?

It unifies developers and DBAs under one automated pipeline, with versioning, approvals, rollbacks, and clear visibility for every schema change.

5. What’s the best way to start adopting Database DevOps?

Begin small: automate a single schema-change pipeline, establish review checkpoints, and expand as confidence grows. Gradual adoption builds lasting trust.

Database DevOps vs. Database Migration Systems and Why You Need Both

Every developer knows this story.

You’ve automated everything: your builds, tests, and deployments. Application changes flow through CI/CD pipelines like clockwork. And then comes the dreaded pull request with a database change.

Suddenly, the rhythm breaks. A DBA review gets stuck. Someone asks, “Did we test that ALTER TABLE script?” Another person hesitates: “What if it breaks production?” And just like that, the release grinds to a halt. That’s the silent bottleneck many teams face, and it exists because the database world was never built for the kind of speed DevOps demands.

That’s why Database DevOps and Database Migration Systems matter. They look similar at first glance, but they solve different parts of the same story. Together, they turn database delivery from a blocker into a seamless extension of your CI/CD flow.

The Heart of Database DevOps

DevOps, at its core, is about collaboration and automation. It’s not just pipelines, it’s people, process, and culture. Now imagine applying that same philosophy to databases. That’s Database DevOps.

It’s the belief that your database deserves the same level of care as your application code: versioning, testing, governance, and continuous delivery. Database DevOps focuses on how teams work:

- Developers can version their schema changes.

- DBAs can review and govern those changes.

- Pipelines can deploy automatically while keeping control intact.

It’s not about replacing DBAs or loosening standards. It’s about balance: blending speed with safety, agility with accountability. When teams adopt Database DevOps, they stop treating databases as static infrastructure and start treating them as living, evolving parts of the product.

The Role of Database Migration Systems

If Database DevOps defines the culture, then Database Migration Systems define the execution. Tools like Liquibase OSS and Flyway track every schema change as a versioned script. They make database evolution predictable and repeatable.

Each migration acts like a checkpoint, a recorded story of how your schema grew over time. Migration systems bring order to the chaos:

- Every change is logged, versioned, and traceable.

- Every environment stays in sync.

- You can rebuild a database from scratch, exactly as it was.
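To make the checkpoint idea concrete, here is a minimal sketch of the core loop a migration system runs, written in Python with the standard-library sqlite3 module. The schema_history table and the sample migrations are hypothetical; this is not how Liquibase or Flyway are implemented internally, only an illustration of versioned scripts applied in order and recorded so that a rerun changes nothing.

```python
import sqlite3

# Hypothetical versioned migrations: (version, description, SQL).
MIGRATIONS = [
    (1, "init", "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "add email", "ALTER TABLE users ADD COLUMN email TEXT"),
    (3, "add email index", "CREATE INDEX idx_users_email ON users (email)"),
]


def migrate(conn: sqlite3.Connection, migrations=MIGRATIONS) -> list:
    """Apply, in version order, every migration not yet recorded in schema_history."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_history ("
        "version INTEGER PRIMARY KEY, description TEXT NOT NULL)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_history")}
    newly_applied = []
    for version, description, sql in sorted(migrations):
        if version in applied:
            continue  # already recorded, so reruns are no-ops
        with conn:  # commits once the script and its history row have both run
            conn.execute(sql)
            conn.execute(
                "INSERT INTO schema_history (version, description) VALUES (?, ?)",
                (version, description),
            )
        newly_applied.append(version)
    return newly_applied


conn = sqlite3.connect(":memory:")
print(migrate(conn))  # [1, 2, 3]
print(migrate(conn))  # []
```

Because each applied version is recorded, the same list of scripts can be replayed against an empty database to rebuild it, or against an existing one to bring it up to date.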

But here’s the limitation: migrations only manage scripts, not teams.

They track changes, but they don’t tell you who approved them, how they fit into your CI/CD process, or whether they comply with policies.

That’s where Database DevOps steps in: it connects technical precision with operational discipline.
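As an illustration only, the sketch below shows the shape of the governance layer described above, in Python: each change is checked against rules a DBA encodes once, and risky changes are blocked unless an approval is recorded. The rule names, the Change record, and the regex-based matching are all assumptions made for this example; real platforms typically express such policies in a dedicated engine such as Open Policy Agent and parse SQL rather than pattern-match it.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical rules a DBA might encode once. Production platforms usually express
# rules like these in a policy engine (for example Open Policy Agent), not regexes.
RULES = {
    "no-drop-table": re.compile(r"\bDROP\s+TABLE\b", re.IGNORECASE),
    "no-truncate": re.compile(r"\bTRUNCATE\b", re.IGNORECASE),
    # DELETE with nothing after the table name, i.e. no WHERE clause
    "no-unbounded-delete": re.compile(r"\bDELETE\s+FROM\s+\w+\s*(;|$)", re.IGNORECASE),
}


@dataclass
class Change:
    script: str                        # migration file the SQL came from
    sql: str
    approved_by: Optional[str] = None  # recorded approver, if any


def evaluate(change: Change) -> str:
    """Return a pipeline decision: allow, allow with recorded approval, or block."""
    broken = [name for name, rule in RULES.items() if rule.search(change.sql)]
    if not broken:
        return "allow"
    if change.approved_by:
        return f"allow ({change.approved_by} approved: {', '.join(broken)})"
    return "block: " + ", ".join(broken)


print(evaluate(Change("V5__cleanup.sql", "DROP TABLE legacy_orders;")))  # block: no-drop-table
```

The point of the design is that the migration tool still executes the script; the gate only decides whether the pipeline may hand it over, and records who approved an exception.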

Why Do You Need Both Database DevOps and Database Migration Systems?

Think of Database DevOps and migration systems as two halves of a complete workflow.

A migration tool alone is like an engine without a driver. Database DevOps alone is like a map without wheels. Together, they help you go further, faster, and with fewer risks.

That’s Where Harness Fits In

Modern platforms like Harness Database DevOps combine both worlds: the cultural framework of DevOps and the structure of migrations. Harness doesn’t just execute scripts; it orchestrates the entire journey of a database change.

- Developers commit schema updates in Git.

- Pipelines apply those migrations automatically.

- DBAs review, approve, and track changes through audit trails.

- Rollbacks happen in real time.

This is where the philosophy of Database DevOps becomes tangible. You gain clarity and move smarter. With Harness, database delivery finally feels like an extension of your CI/CD pipeline, not an exception to it.

Conclusion

Database DevOps and Database Migration Systems are not competing paradigms; they are complementary pillars of a modern delivery strategy. Migration systems give you precision and consistency. Database DevOps gives you velocity, visibility, and control. Together, they create a culture of trust and empowerment, where every database change becomes just another automated, governed part of your CI/CD process.

Harness Database DevOps exemplifies this harmony by combining the power of migration systems with enterprise-grade orchestration, GitOps, and observability. By unifying schema evolution with delivery automation, it enables teams to deliver confidently, collaborate effectively, and scale safely.

The future isn’t about choosing one over the other but about embracing both to build a truly modern database delivery pipeline.

Frequently Asked Questions

1. What’s the main difference between Database DevOps and migration systems?

Database Migration Systems manage schema versioning and execution, while Database DevOps platforms manage the orchestration, governance, and automation of those migrations across environments.

2. Can I use Database DevOps without a migration system?

Technically yes, but it’s not ideal. Migration systems provide version control and rollback capabilities, core components that Database DevOps leverages for automation and compliance.

3. How does Harness Database DevOps integrate with tools like Liquibase OSS or Flyway?

Harness natively supports both. It runs your migration scripts through secure, policy-driven pipelines with visibility, approvals, and audit trails.

4. Is Database DevOps suitable for small teams?

Absolutely. Even small teams benefit from consistent workflows and rollback safety. As they scale, those early practices prevent chaos later.

5. What’s the long-term benefit of using both together?

You get the best of both worlds: structured schema evolution with governance, automation, and collaboration baked in. It’s a foundation for continuous database delivery.

Harness in Seattle at PASS Data Community Summit 2025

The PASS Data Community Summit returns to Seattle this November, bringing together database professionals, developers, architects, and data leaders from around the world. This year, Harness is proud to join as a Bronze Sponsor, showcasing how teams can finally bring the same speed, safety, and governance of CI/CD to their databases, now with native Flyway support.

From November 19–21, 2025, you’ll find us in the Seattle Convention Center at Booth #220, where we’ll be diving deep into the future of Database DevOps with AI-assisted database schema changes.

Why PASS Summit Matters for Database Professionals This Year

Modern applications move fast, but databases haven’t kept up. Manual reviews, brittle scripts, inconsistent change validation, and a lack of visibility into the environment continue to slow delivery and introduce risk.

PASS Summit is the annual gathering where the data community openly discusses these challenges and explores what’s next.

This year, AI-assisted database development, automated governance, Kubernetes-native databases, and data reliability are top-of-mind topics. Harness will be right in the middle of those conversations.

See Our Sessions at PASS

Harness will present two talks this year, each focused on accelerating database delivery while protecting data integrity and ensuring compliance.

📍 Session #1 — Database DevOps: CD for Stateful Applications

🗓 November 19, 2025

⏰ 11:30 AM–12:30 PM

📍 Room 337–339

🔗 Session Link: Database DevOps: CD for Stateful Applications

Running stateful applications on Kubernetes can be just as safe, predictable, and repeatable as stateless workloads when the right approach is used.

In this session, Stephen Atwell (Harness) and Chris Crow (Pure Storage) will explore how to:

- Treat stateful applications and their data as first-class citizens in your CD pipeline

- Version and deploy databases safely and repeatedly

- Manage persistent data and database structural changes within Kubernetes

- Automate schema migrations across environments

- Understand performance impacts of specific migrations

- Use governance and policy controls to empower developers without compromising safety

This talk includes real-world schema migration examples, performance analysis, and a live demo showing how CD tooling automates data migrations inside Kubernetes.

If you’re working with Kubernetes and databases, this session is a must-see.

📍 Session #2 — Faster DB Schema Migrations with AI-Enabled CI/CD & Automated Governance

🗓 November 21, 2025

⏰ 12:15 PM–12:45 PM

📍 Room 442

🔗 Session Link: Faster DB Schema Migrations with AI-Enabled CI/CD & Automated Governance

AI is changing how organizations approach database development, and in this session, we explore what’s now possible.

Join Stephen Atwell, Principal Product Manager for Harness Database DevOps, to learn how AI + CI/CD can accelerate data delivery while maintaining safety and compliance.

You’ll walk away understanding:

- How LLMs can author schema migrations

- How CI/CD validates AI-generated SQL before it reaches production

- How OPA (Open Policy Agent) fully automates database governance

- How DBAs can encode expertise once while enabling self-service across teams

- High-level differences between modern migration tooling

- How faster, automated DB changes translate into faster business outcomes

This session is ideal for DBAs, data engineers, DevOps teams, and anyone looking to modernize their database change process.

What Harness Is Showcasing at PASS Summit 2025

At Booth #220, we’ll be presenting the latest evolution of Harness Database DevOps, designed to solve one core challenge:

“How do we release database changes with the same confidence and velocity as application code?”

See live demos of:

✔ AI-Authored Database Migrations

- Generate compliant, review-ready SQL from simple natural-language descriptions.

✔ Migration Tracking Across Environments

- Complete visibility into what's deployed where, and what’s at risk.

✔ Automated Rollback Intelligence

- Safer releases using reversible changes and pre-deployment validation.

✔ Policy-Driven Governance

- Blocks risky changes automatically. Enables developers to work safely.

✔ Unified Dev + DBA Workflows

- A shared pipeline for collaboration, approvals, and risk reduction.

Meet the Harness Team at Booth #220

Our product managers, database experts, and DevOps engineers will be available for hands-on demos and in-depth discussions on your current challenges.

Stop by to:

- See AI-authored schema changes in action

- Learn how teams automate DB governance at scale

- Review migration performance impacts

- Get a personalized assessment of your DB delivery workflow

- Grab some swag and meet the team

See You in Seattle!

We’re thrilled to sponsor the PASS Data Community Summit 2025 and look forward to connecting with the community.

See you at Booth #220 in Seattle!

Harness Database DevOps Adds Flyway Support

Harness Database DevOps was built to make database delivery as automated, safe, and repeatable as application delivery. Historically, Liquibase was our primary migration engine. Today we’ve added support for Flyway, a simple, SQL-first migration engine, to give teams more choice and better alignment with their existing workflows.

Why Flyway? Understanding Developer Needs

Teams differ: some prefer Liquibase’s structured changelogs (XML/YAML/JSON); others prefer Flyway’s versioned SQL scripts. Flyway’s minimalism and convention-over-configuration approach make it attractive for developers who want direct control over SQL. Adding Flyway is about enabling choice and reducing friction for teams that already rely on SQL-based migrations.

How Harness Simplifies Database Migrations

Harness integrates both engines into one platform so teams can use their preferred tool while retaining centralized governance, approvals, drift detection, automated rollbacks, and environment visibility. You can run Liquibase and Flyway side by side within the same pipeline, with consistent policy and audit controls.

From a technical point of view, Flyway’s power lies in its simplicity - but that also means its conventions matter. Below is a brief guide to the essentials: naming conventions, baselines, pending migrations, and success validation.

Key Concepts:

- Naming convention: Flyway uses versioned filenames such as V1__init.sql and V2__add_users_table.sql. The V prefix, the version number, and the double underscore that separates version from description define execution order and ensure predictability.

- Baseline: When integrating Flyway into an existing database, baselineVersion and baselineOnMigrate=true tell Flyway to treat the current schema as a known version.

- Pending migrations: Flyway automatically detects migrations present in code but not yet applied.

- Success validation: Every applied migration is logged in the flyway_schema_history table.
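These conventions are mechanical enough to check in a few lines of code. The Python sketch below (an illustration, not Flyway's own implementation) parses versioned file names, orders them by version, and works out which are pending given the versions already applied and an optional baseline. It simplifies Flyway's real rules: descriptions are limited to letters, digits, and underscores, and out-of-order migrations are not modelled.

```python
import re

# V<version>__<description>.sql: the version may use dots or single underscores
# (V1_1 means 1.1) and the double underscore separates it from the description.
# Simplification: descriptions are limited to word characters.
VERSIONED = re.compile(r"^V(\d+(?:[._]\d+)*)__(\w+)\.sql$")


def parse(filename: str):
    """Return (version tuple, description) for a versioned migration file name."""
    match = VERSIONED.match(filename)
    if match is None:
        raise ValueError(f"not a versioned migration name: {filename}")
    version = tuple(int(part) for part in re.split(r"[._]", match.group(1)))
    return version, match.group(2).replace("_", " ")


def pending(filenames, applied, baseline=None):
    """Migrations in code that are not applied and are newer than the baseline, if any.

    Simplification: Flyway's out-of-order handling is not modelled.
    """
    result = []
    for version, description in sorted(parse(name) for name in filenames):
        if version in applied:
            continue
        if baseline is not None and version <= baseline:
            continue  # the baselined schema already contains this change
        result.append((version, description))
    return result


files = ["V3__add_email_index.sql", "V1__init.sql", "V2__add_users_table.sql"]
print(pending(files, applied={(1,)}))
# [((2,), 'add users table'), ((3,), 'add email index')]
print(pending(files, applied=set(), baseline=(2,)))
# [((3,), 'add email index')]
```

Versions are compared as integer tuples rather than strings, so V10 correctly sorts after V9; a name like V1_init.sql (single underscore) is rejected instead of silently misordered.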

Best Practices:

- Use clear and consistent script names (V3__add_email_index.sql).

- Keep each migration atomic - one logical change per script.

- Commit migrations alongside code for version alignment.

- Always baseline before onboarding an existing database.

Sample Harness Pipeline Step:

Example File Structure:

Benefits of Multi-Engine Support

Flexibility: Teams pick the engine that matches their workflow.

Scalability: Enterprises with multiple database tools can onboard easily.

Governance: Centralized policies, approvals, and audits apply consistently.

Productivity: Developers focus on writing migrations - Harness handles execution, safety, and rollback automation.

The Future of Database DevOps at Harness

Supporting Flyway alongside Liquibase marks a significant step toward tool-neutral Database DevOps orchestration. Harness continues to focus on developer freedom and operational confidence.

Whether you prefer structured changelogs or raw SQL scripts, Harness provides a unified pipeline experience - bringing automation, policy control, and observability under one roof. Try Flyway support in your next pipeline and experience how Harness brings agility, safety, and choice to modern database delivery.

From Concept to Reality: The Journey Behind Harness Database DevOps

When I look back at how Harness Database DevOps came to life, it feels less like building a product and more like solving a collective industry puzzle, one piece at a time. Every engineer, DBA, and DevOps practitioner I met had their own version of the same story: application delivery had evolved rapidly, but databases were still lagging behind. Schema changes were risky, rollbacks were manual, and developers hesitated to touch the database layer for fear of breaking something critical.

That was where our journey began, not with an idea, but with a question: “What if database delivery could be as effortless, safe, and auditable as application delivery?”

The Problem We Couldn’t Ignore

At Harness, we’ve always been focused on making software delivery faster, safer, and more developer-friendly. But as we worked with enterprises across industries, one recurring gap became clear: while teams were automating CI/CD pipelines for applications, database changes were still handled in silos.

The process was often manual: SQL scripts being shared over email, version control inconsistencies, and late-night hotfixes that no one wanted to own. Even with existing tools, there was a noticeable disconnect between database engineers, developers, and platform teams. The result was predictable - slow delivery cycles, high change failure rates, and limited visibility.

We didn’t want to simply build another migration tool. We wanted to redefine how databases fit into the modern CI/CD narrative and how they could become first-class citizens in the software delivery pipeline.

Listening Before Building

Before writing a single line of code, we started by listening to DBAs, developers, and release engineers who lived through these challenges every day.

Our conversations revealed a few consistent pain points:

- Database schema changes lacked version control discipline.

- Rollbacks were error-prone and undocumented, especially across multiple environments.

- Application and database delivery cycles were never truly aligned.

- Teams had limited observability into what changed, when, and by whom.

We also studied existing open-source practices. Many of us were active contributors or long-time users of Liquibase, which had already set strong foundations for schema versioning. Our goal was not to replace those efforts, but to learn from them, build upon them, and align them with the Harness delivery ecosystem.

That’s when the real learning began: understanding how different organizations implement Liquibase, how they handle rollbacks, and how schema evolution differs between teams using PostgreSQL, MySQL, or Oracle.

This phase of research and contribution provided us with valuable insights: while the tooling existed, the real challenge was operational, integrating database changes into CI/CD pipelines without friction or risk.

From Research to Blueprint

Armed with insights, we began sketching the first blueprints of what would eventually become Harness Database DevOps. Our design philosophy was simple:

- Meet teams where they are. Integrate seamlessly with existing tools, such as Liquibase and Flyway.

- Enable progressive automation. Let teams start small and grow into full automation.

- Empower every role. Whether you’re a DBA or developer, you should have clarity and control over database delivery.

Early prototypes focused on automating schema migration, enforcing policy compliance, and building audit trails for database changes. But we soon realized that wasn’t enough.

Database delivery isn’t just about applying migrations; it’s about governance, visibility, and confidence. Developers needed fast feedback loops; DBAs needed assurance that governance was intact; and platform teams needed to integrate it into their broader CI/CD fabric. That realization reshaped our vision entirely.

Building the Foundation

We started with the fundamentals: source control and pipelines. Every database change, whether a script or a declarative state definition, needed to be versioned, automatically tested, and traceable.

To make this work at scale, we leveraged script-based migrations. This allowed teams to track the actual change scripts applied to reach a given state, ensuring alignment and transparency. The next challenge was automation. We wanted pipelines that could handle complex database lifecycles (provisioning instances, running validations, managing approvals, and executing rollbacks), all within a CI/CD workflow familiar to developers.

This was where the engineering creativity of our team truly shone. We integrated database delivery into Harness Pipelines, enabling one-click deployments and policy-driven rollbacks with complete auditability.

Our internal mantra became: “If it’s repeatable, it’s automatable.”

Evolving Through Feedback

Our first internal release was both exciting and humbling. We quickly learned that every organization manages database delivery differently. Some teams followed strict change control. Others moved fast and valued agility over structure.

To bridge that gap, we focused on flexibility, which allowed teams to define their own workflows, environments, and policies while keeping governance seamlessly built in.

We also realized the importance of observability. Teams didn’t just want confirmation that a migration succeeded; they wanted to understand “why something failed”, “how long it took”, and “what exactly changed” behind the scenes.

Each round of feedback, from customers and our internal teams, helped us to refine the product further. Every iteration made it stronger, smarter, and more aligned with real-world engineering needs. And the journey wasn’t just about code; it was about collaboration and teamwork. Here’s how Harness Database DevOps connects every role in the database delivery lifecycle.

The People Behind the Platform

Behind every release stood a passionate team of engineers, product managers, customer success engineers, and developer advocates with a shared mission: to make database delivery seamless, safe, and scalable.

We spent long nights debating rollback semantics, early mornings testing changelog edge cases, and countless hours perfecting pipeline behavior under real workloads. It wasn’t easy, but it mattered.

This wasn’t just about building software; it was about building trust between developers and DBAs, between automation and human oversight. When we finally launched Harness Database DevOps, it didn’t feel like a product release. It felt like the beginning of something bigger: a new way to bring automation and accountability to database delivery.

What makes us proud isn’t just the technology. It’s how we built it: with empathy, teamwork, and a deep partnership with our customers from day one. Together with our design partners, we shaped every iteration to ensure that what we were building truly reflected their needs and that database delivery could evolve with the same innovation and collaboration that define the rest of DevOps.

Built with Customers, Trusted by Teams

After months of iteration, user testing, and refinements, Harness Database DevOps entered private beta in early 2024. The excitement was immediate. Teams finally saw their database workflows appear alongside application deployments, approvals, and governance checks, all within a single pipeline.

During the beta, more than thirty customers participated, offering feedback that directly shaped the product. Some asked for folder-based trunk deployments. Others wanted deeper rollback intelligence. Some wanted Harness to help their developers design and author changes in the first place. Many just wanted to see what was happening inside their database environments.

By the time general availability rolled around, Database DevOps had evolved into a mature platform, not just a feature. It offered migration state tracking, rollback mechanisms, environment isolation, policy enforcement, and native integration with the Harness ecosystem.

But more importantly, it delivered something intangible: trust. Teams could finally move faster without sacrificing control.

The Road Ahead

Database DevOps is still an evolving space. Every new integration, every pipeline enhancement, every database engine we support takes us closer to a world where managing schema changes is as seamless as deploying code.

Our mission remains the same: to help teams move fast without breaking things, to give developers confidence without compromising governance, and to make database delivery as modern as the rest of DevOps.

And as we continue this journey, one thing is certain: the story of Harness Database DevOps isn’t just about a product. It’s about reimagining what’s possible when empathy meets engineering.

Closing Thoughts

From its earliest whiteboard sketch to production pipelines across enterprises, Harness Database DevOps is the product of curiosity, collaboration, and relentless iteration. It was never about reinventing databases. It was about rethinking how teams deliver change: safely, visibly, and confidently.

And that journey, from concept to reality, continues every day with every release, every migration, and every team that chooses to make their database a part of DevOps.

Automate CockroachDB Schema Changes with Harness Database DevOps

CockroachDB is known for its distributed SQL power and fault tolerance. It scales horizontally and handles multi-region workloads well, keeping applications online even when nodes fail. But while CockroachDB simplifies scaling, managing schema changes across multiple environments can still be a pain. Developers often face issues like schema drift, inconsistent rollouts, or manual SQL execution during releases.

This is where Harness Database DevOps changes the game. It brings CI/CD discipline to database management. Now that Harness supports CockroachDB, you can automate database updates with the same precision and visibility you expect from application deployments.

A Git-Driven Approach to Schema Management

Traditional database updates rely on manual scripts and DBA interventions. Harness replaces that with a Git-driven workflow:

- Developers define schema changes in a changelog format.

- The changelog is stored in Git for review and version control.

- Harness pipelines automatically apply those changes to CockroachDB.

This ensures:

- Every schema update is auditable.

- Rollbacks are consistent.

- Teams can collaborate safely through pull requests.

Think of it as DevOps for your database: reliable, traceable, and continuous.
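For reference, Liquibase accepts changelogs in JSON as well as YAML, with the same nested structure. The Python sketch below builds a one-changeSet changelog that creates a table and declares an explicit rollback. The changeSet id, author, table, and column types are hypothetical, and in practice most teams write the YAML or JSON by hand and commit it alongside application code rather than generating it.

```python
import json


def create_table_changeset(changeset_id, author, table, columns):
    """A single Liquibase changeSet that creates a table and can drop it on rollback."""
    return {
        "changeSet": {
            "id": changeset_id,
            "author": author,
            "changes": [
                {
                    "createTable": {
                        "tableName": table,
                        "columns": [
                            {"column": {"name": name, "type": col_type}}
                            for name, col_type in columns.items()
                        ],
                    }
                }
            ],
            # Explicit rollback so the change is reversible
            "rollback": [{"dropTable": {"tableName": table}}],
        }
    }


changelog = {
    "databaseChangeLog": [
        create_table_changeset(
            "1", "dev-team", "customers", {"id": "UUID", "email": "VARCHAR(255)"}
        )
    ]
}

# Hypothetical next step: save this as changelog.json and commit it to Git for review.
print(json.dumps(changelog, indent=2))
```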

How Harness Integrates with CockroachDB

Harness uses JDBC connectors (PostgreSQL-compatible) to communicate with CockroachDB.

During a pipeline run, Harness leverages Liquibase to interpret the changelog and execute migrations on your cluster.

Here’s the big picture:

- The developer makes changes to schema and commits that changelog to Git.

- Harness pipeline detects the commit and runs automatically.

- Database DevOps executes the schema changes via the CockroachDB connector.

- Logs, version history, and results are shown in the Harness UI.

This integration transforms CockroachDB schema updates from a manual task into a controlled CI/CD process.

How to Set Up CockroachDB in Harness Database DevOps

Let’s look at the setup at a high level:

- Prepare: Have a running CockroachDB cluster (port 26257), a Harness account with Database DevOps, a Delegate in Kubernetes, and a Git repo for changelogs.

- Author: Define schema updates as Liquibase changelogs (YAML recommended) and commit to Git.

- Connect: Create a JDBC connector in Harness (Postgres driver, TLS recommended).

- Automate: Build a pipeline with an Apply Database Schema step that references your changelog and connector.

- Execute & Observe: Trigger the pipeline, validate Liquibase logs, and review applied changeSets in Harness.
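The TLS part of the JDBC URL used in the Connect step can be sketched as follows. This Python helper builds a PostgreSQL-driver URL for CockroachDB's default port with sslmode=verify-full and an optional sslrootcert, both standard options of the PostgreSQL JDBC driver. The host, database, and certificate path are hypothetical, and the exact fields Harness's connector form expects are not shown here; credentials are left out on purpose so they can live in a secret manager rather than in the URL.

```python
from typing import Optional
from urllib.parse import urlencode


def cockroach_jdbc_url(host: str, database: str, port: int = 26257,
                       root_cert: Optional[str] = None) -> str:
    """Build a PostgreSQL-driver JDBC URL for a CockroachDB cluster with TLS verification.

    Credentials are deliberately omitted: keep them in a secret store, not in the URL.
    """
    params = {"sslmode": "verify-full"}  # verify both the certificate and the host name
    if root_cert:
        params["sslrootcert"] = root_cert  # CA certificate that signed the node certificates
    return f"jdbc:postgresql://{host}:{port}/{database}?{urlencode(params, safe='/')}"


print(cockroach_jdbc_url("crdb.example.internal", "appdb", root_cert="/certs/ca.crt"))
# jdbc:postgresql://crdb.example.internal:26257/appdb?sslmode=verify-full&sslrootcert=/certs/ca.crt
```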

Why This Integration Matters

CockroachDB’s distributed design fits perfectly with Harness’s declarative, version-controlled workflows, helping teams ship database changes faster and safer.

Real-World Impact

Consider a SaaS company operating CockroachDB clusters across multiple regions to serve a global user base. Each week, developers push schema changes — adding new tables, refining indexes, or evolving data models to support new features.

Previously, every change required manual intervention. DBAs ran SQL scripts by hand, coordinated release windows across time zones, and updated migration logs in spreadsheets. This process was not only time-consuming but also prone to drift and human error. After adopting Harness Database DevOps, that manual process becomes a seamless, automated pipeline:

- Developers submit changelogs in Git.

- Harness pipelines automatically validate and deploy schema changes.

- Audit logs track every change for compliance.

The team moves faster, reduces human error, and gains complete traceability.

Conclusion

With every schema change tracked, validated, and version-controlled, teams can ship updates faster while maintaining compliance and operational safety. Rollbacks, audit trails, and environment consistency become part of the process, not afterthoughts.

This integration empowers both developers and DBAs to collaborate seamlessly through a single pipeline that brings transparency, repeatability, and confidence to database operations. Whether you’re running CockroachDB across multiple regions or scaling up new environments, Harness ensures your database evolves at the same pace as your application. If you’re looking to modernize your database delivery pipeline, start with the Harness Database DevOps documentation and try integrating CockroachDB today.

Frequently Asked Questions (FAQ)

1. Does Harness support all Liquibase operations with CockroachDB?

Yes. Harness uses Liquibase under the hood, so most standard operations, like createTable, addColumn, and createIndex, work seamlessly with CockroachDB. A few engine-specific operations may differ due to CockroachDB’s SQL dialect, but Liquibase provides compatible fallbacks.

2. Can I roll back a failed migration automatically?

Absolutely. Harness tracks every executed changeSet and supports Liquibase rollback commands. You can trigger rollbacks manually or define rollback scripts in your changelog. This ensures that failed schema updates don’t impact production stability.
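Count-based rollback in Liquibase undoes the most recently executed changeSets in reverse order, using each changeSet's rollback definition. The Python sketch below mimics that bookkeeping with sqlite3 and a hypothetical changeset_history table; it illustrates the ordering only and is not Liquibase's DATABASECHANGELOG implementation.

```python
import sqlite3

# Hypothetical changeSets: (id, forward SQL, rollback SQL).
CHANGESETS = [
    ("1", "CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)", "DROP TABLE orders"),
    ("2", "CREATE INDEX idx_orders_total ON orders (total)", "DROP INDEX idx_orders_total"),
]


def update(conn, changesets):
    """Run changeSets not yet executed, recording each one with its rollback SQL."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS changeset_history ("
        "seq INTEGER PRIMARY KEY AUTOINCREMENT, id TEXT UNIQUE, rollback_sql TEXT)"
    )
    done = {row[0] for row in conn.execute("SELECT id FROM changeset_history")}
    for cs_id, forward, backward in changesets:
        if cs_id not in done:
            with conn:
                conn.execute(forward)
                conn.execute(
                    "INSERT INTO changeset_history (id, rollback_sql) VALUES (?, ?)",
                    (cs_id, backward),
                )


def rollback_count(conn, count):
    """Undo the `count` most recently executed changeSets, newest first."""
    rows = conn.execute(
        "SELECT seq, id, rollback_sql FROM changeset_history ORDER BY seq DESC LIMIT ?",
        (count,),
    ).fetchall()
    for seq, _, rollback_sql in rows:
        with conn:
            conn.execute(rollback_sql)
            conn.execute("DELETE FROM changeset_history WHERE seq = ?", (seq,))
    return [cs_id for _, cs_id, _ in rows]


conn = sqlite3.connect(":memory:")
update(conn, CHANGESETS)
print(rollback_count(conn, 1))  # ['2']: the index is removed before the table
```

Undoing newest-first matters because later changeSets often depend on earlier ones; dropping the table before its index would leave the history out of step with the schema.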

3. What happens if two developers commit conflicting schema changes?

Harness detects version conflicts based on Liquibase changeSet IDs and Git history. If two changelogs modify the same table differently, the pipeline flags it before applying. You can resolve the conflict in Git, re-commit, and re-run the pipeline safely.
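As a rough illustration of this kind of check (not Harness's actual logic), the sketch below compares the new changeSets from two branches. Liquibase identifies a changeSet by its id, author, and changelog path, so two branches adding the same identity collide outright; two changeSets touching the same table are a softer signal worth flagging for review. The table field is a hypothetical annotation for this example, since a changeSet does not carry it in that form.

```python
from typing import NamedTuple


class ChangeSet(NamedTuple):
    id: str
    author: str
    path: str   # changelog file the changeSet lives in
    table: str  # hypothetical annotation: the table the changeSet modifies


def identity(cs: ChangeSet):
    # Liquibase identifies a changeSet by id + author + changelog path.
    return (cs.id, cs.author, cs.path)


def find_conflicts(ours, theirs):
    """Compare the new changeSets on two branches before either is merged."""
    their_identities = {identity(cs) for cs in theirs}
    duplicate_ids = sorted(cs.id for cs in ours if identity(cs) in their_identities)
    their_tables = {cs.table for cs in theirs}
    shared_tables = sorted({cs.table for cs in ours if cs.table in their_tables})
    return duplicate_ids, shared_tables


ours = [ChangeSet("20", "team-payments", "db/changelog.yaml", "orders")]
theirs = [
    ChangeSet("20", "team-payments", "db/changelog.yaml", "invoices"),
    ChangeSet("21", "team-payments", "db/changelog.yaml", "orders"),
]
print(find_conflicts(ours, theirs))  # (['20'], ['orders'])
```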

4. How does Harness ensure migration performance in CockroachDB?

Harness pipelines use containerized execution to isolate database operations. Since CockroachDB distributes workloads, most migrations scale horizontally. You can also configure throttling, pre-deployment validations, or dry runs to measure performance impact before actual execution.

5. Is my database connection secure when using Harness?

Yes. Harness encrypts all secrets (JDBC credentials, certificates, and keys) using its Secret Manager. TLS is strongly recommended for CockroachDB, and Harness supports SSL verification flags like sslmode=verify-full. Credentials are never exposed in pipeline logs.

Introducing AI-Powered Database Migration Authoring: The Last Mile of DevOps Just Got Smarter

For the last decade, DevOps has been obsessed with speed – automating CI/CD, testing, infrastructure, and even feature rollout. But one critical layer has been left almost entirely manual: the database. Teams still write SQL scripts by hand, deploy them at midnight, and pray that rollback plans work. At Harness, we think it’s time to fix that.

Today, Harness is launching AI-Powered Database Migration Authoring, a new capability within Harness Database DevOps that brings “vibe coding for databases” to life – where developers can create safe, compliant database migrations simply by describing them in plain language.

This is the next step in Harness’s vision to bring automation to every stage of the software delivery lifecycle, from code to cloud to database.

Database DevOps: One of the Fastest-Growing Modules at Harness

Harness’s Database DevOps offering removes one of the last blockers in modern software delivery: slow, manual database schema changes.

Ask any engineering leader what slows down their release cycles, and the answer will sound familiar: “We can deploy apps fast, but database changes always hold us back.” While CI/CD transformed how applications are released, most teams still manage schema updates through SQL scripts, spreadsheet tracking, and late-stage approvals.

Harness closes this gap by treating database changes like application code. Updates are versioned in Git, validated with policy-as-code, deployed through governed pipelines, and rolled back automatically if needed. A unified dashboard provides full visibility into what is deployed where, enabling teams to compare environments and maintain a comprehensive audit trail.

Harness is the only DevOps platform with a fully integrated, enterprise-grade Database DevOps solution, not a plug-in or point tool. And customers need it! As one of the fastest-growing modules at Harness, Database DevOps delivers value to organizations like Athenahealth (click to see video interview).

“Harness gave us a truly out-of-the-box solution with features we couldn’t get from Liquibase Pro or a homegrown approach. We saved months of engineering effort and got more for less – with better governance, orchestration, and visibility.”

— Daniel Gabriel, Senior Software Engineer, Athenahealth

The Market Shift: From Continuous Delivery to “Vibe Coding”

AI has transformed how code is written, but software delivery remains stuck in the past. “Vibe coding” is speeding up creation, yet the systems that move code into production – including testing, security, and database delivery – haven’t kept pace.

In a recent Harness study, 63% of organizations are shipping code faster since adopting AI, but 72% have suffered at least one production incident caused by AI-generated code. The result is the AI Velocity Paradox: faster coding, slower delivery.

But there’s a solution. 83% of leaders agree that AI must extend across the entire SDLC to unlock its full potential. Database DevOps helps to close that gap by extending AI-powered automation and governance to the last mile of DevOps: the database.

Introducing AI-Powered Database Migration Authoring

With AI-Powered Database Migration Authoring, any developer can describe the database change they need in natural language, like –

“Create a table named animals with columns for genus_species and common_name. Then add a related table named birds that tracks unladen airspeed and proper name. Add rows for Captain Canary, African swallow, and European swallow.”

– and Harness will generate a compliant, production-ready migration complete with rollback scripts, validation, and Git integration. Capabilities include:

- Analyzing the current schema and policies

- Generating the correct, backward-compatible migration

- Validating the change for safety and compliance

- Committing it to Git for testing through CI/CD

- Creating rollback migrations to ensure complete reversibility

Every migration is versioned, tested, governed, and fully auditable, just like your application code.
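As an illustration only (this is not actual Harness AI output), here is one way the forward and rollback SQL for the animals/birds prompt could look, applied to an in-memory SQLite database from Python. The column types, the id keys, the foreign key from birds to animals, and reading "Captain Canary" as a canary's proper name are all assumptions, since the prompt does not specify them. Airspeed and genus_species values are left NULL because the prompt gives none.

```python
import sqlite3

# Forward migration: one possible reading of the prompt (types and keys are assumptions).
FORWARD = """
CREATE TABLE animals (
    id            INTEGER PRIMARY KEY,
    genus_species TEXT,
    common_name   TEXT NOT NULL
);
CREATE TABLE birds (
    id               INTEGER PRIMARY KEY,
    animal_id        INTEGER NOT NULL REFERENCES animals (id),
    unladen_airspeed REAL,  -- units unspecified in the prompt; left NULL below
    proper_name      TEXT
);
INSERT INTO animals (id, common_name) VALUES
    (1, 'canary'), (2, 'African swallow'), (3, 'European swallow');
INSERT INTO birds (animal_id, proper_name) VALUES
    (1, 'Captain Canary'), (2, NULL), (3, NULL);
"""

# Rollback: drop the child table first so the foreign key is never violated.
ROLLBACK = """
DROP TABLE birds;
DROP TABLE animals;
"""


def user_tables(conn: sqlite3.Connection) -> list:
    """List the tables in the database, in name order."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )
    return [name for (name,) in rows]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(FORWARD)
    print(user_tables(conn))  # ['animals', 'birds']
    conn.executescript(ROLLBACK)
    print(user_tables(conn))  # []
```

Pairing every forward script with a rollback written at the same time is what makes the change reversible; the rollback is only trustworthy once it has actually been run, which the undo-testing discussion later in this document returns to.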

Trained on Best Practices, Guided by Your Governance

Harness AI isn’t a generic code assistant. It’s trained on proven database management best practices and guided by your organization’s existing governance rules.

It understands keys, constraints, triggers, backward compatibility, and compliance standards. DBAs retain oversight through policy-as-code and automated approvals, ensuring governance never becomes a bottleneck.
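Policy-as-code means the checks a DBA would otherwise perform by hand are written down and evaluated automatically on every change. The toy Python check below is purely hypothetical; Harness's policy engine and rule syntax are not shown in this post. It flags destructive statements that should require approval and rejects a change that ships without a rollback. Matching keywords with regular expressions is deliberately naive (it would also match text inside comments or string literals).

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical policy: statements that should never ship without DBA approval.
DESTRUCTIVE = [
    ("DROP TABLE", re.compile(r"\bDROP\s+TABLE\b", re.IGNORECASE)),
    ("DROP COLUMN", re.compile(r"\bDROP\s+COLUMN\b", re.IGNORECASE)),
    ("TRUNCATE", re.compile(r"\bTRUNCATE\b", re.IGNORECASE)),
]


@dataclass
class Change:
    change_id: str
    forward_sql: str
    rollback_sql: str = ""


def evaluate(change: Change) -> List[str]:
    """Return policy violations for a change; an empty list means it passes."""
    violations = []
    if not change.rollback_sql.strip():
        violations.append(f"{change.change_id}: no rollback provided")
    for label, pattern in DESTRUCTIVE:
        # Only the forward SQL is checked: rollbacks are expected to drop things.
        if pattern.search(change.forward_sql):
            violations.append(f"{change.change_id}: {label} needs approval")
    return violations


if __name__ == "__main__":
    print(evaluate(Change("001", "CREATE TABLE t (id INTEGER);", "DROP TABLE t;")))
    # []
    print(evaluate(Change("002", "ALTER TABLE t DROP COLUMN name;")))
    # ['002: no rollback provided', '002: DROP COLUMN needs approval']
```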

Why It Matters

This is more than an incremental feature – it’s a step toward AI-native DevOps, where systems understand intent, enforce policy, and automate delivery from code to cloud to database.

- For developers, AI removes one of the most frustrating dependencies in the release process.

- For DBAs, policy-as-code and automated rollback keep every change safe and auditable.

- For leaders, this offering turns the database from a bottleneck into an accelerator for innovation.

Harness Database DevOps now combines generative AI, policy-as-code, and CI/CD orchestration into one governed workflow. The result: faster releases, stronger governance, and fewer 2 a.m. rollbacks.

See It in Action

Harness’s AI-Powered Database Migration Authoring, like most of Harness AI, is powered by the Software Delivery Knowledge Graph and Harness’s MCP Server. This server knows about your database and your pipelines, and it comes with baked-in best practices to help you rapidly transform your company’s DevOps using AI.

Below is a preview of how Harness AI takes a simple English-language prompt and generates a compliant database migration complete with validation, rollback, and GitOps integration.

The Database Doesn’t Have to Be the Bottleneck

The last mile of DevOps has just caught up.

With Harness Database DevOps and AI-Powered Database Migration Authoring, database delivery becomes automated, governed, and safe – finally a first-class citizen in the CI/CD pipeline.

Learn more about Harness Database DevOps and book a demo to see AI-Powered Database Migration Authoring in action.

Harness Database DevOps vs Liquibase vs Flyway

Database DevOps has risen in importance as organizations look to bring the same automation and governance found in application pipelines to their data layer. Removing the overhead of manually managing SQL scripts and schema changes is a challenge that has produced a wide variety of tools.

Many products in the market offer similar solutions, and choosing the right one, whether it’s Flyway, Liquibase, or Harness Database DevOps, can significantly impact workflows and the productivity of both developers and DBAs.

Why Choosing the Right Tool Matters

When it comes to database DevOps tools, requirements vary widely depending on whether you are an individual developer, a growing startup, or a regulated enterprise. This is why each tool is marketed with specific purposes in mind. Understanding these positioning differences helps you make an informed decision about which solution fits your particular needs.

What is Flyway?

Flyway is one of the simplest and most popular migration frameworks, widely known for its lightweight, script-based approach. Changes are managed through sequential SQL files that are version-controlled and applied in order, making it easy to adopt for small teams or projects where speed matters more than governance.
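The script-based model is simple enough to sketch. Flyway's convention names versioned files like V1__create_animals.sql and applies them in version order. The Python below mimics that ordering against SQLite and records applied versions in a simplified, hypothetical schema_history table. It is not Flyway's implementation: the real tool also tracks checksums, supports other file prefixes, and uses its own history table layout.

```python
import re
import sqlite3
from typing import Dict, List, Tuple

# Flyway-style versioned name: V<version>__<description>.sql
NAME = re.compile(r"^V(\d+(?:[._]\d+)*)__(\w+)\.sql$")


def version_of(filename: str) -> Tuple[int, ...]:
    """Parse the version so that V10 sorts after V9 (numeric, not alphabetical)."""
    match = NAME.match(filename)
    if match is None:
        raise ValueError(f"not a versioned migration: {filename}")
    return tuple(int(part) for part in re.split(r"[._]", match.group(1)))


def migrate(conn: sqlite3.Connection, scripts: Dict[str, str]) -> List[str]:
    """Apply pending scripts in version order and return the filenames applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_history (version TEXT PRIMARY KEY, script TEXT)"
    )
    done = {row[0] for row in conn.execute("SELECT version FROM schema_history")}
    applied = []
    for filename in sorted(scripts, key=version_of):
        version = ".".join(str(part) for part in version_of(filename))
        if version in done:
            continue  # applied on an earlier run
        # Simplification: the script and its history row are not one atomic unit.
        conn.executescript(scripts[filename])
        conn.execute("INSERT INTO schema_history VALUES (?, ?)", (version, filename))
        conn.commit()
        applied.append(filename)
    return applied


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    scripts = {
        "V2__create_birds.sql": "CREATE TABLE birds (id INTEGER PRIMARY KEY);",
        "V10__index_birds.sql": "CREATE INDEX idx_birds ON birds (id);",
        "V1__create_animals.sql": "CREATE TABLE animals (id INTEGER PRIMARY KEY);",
    }
    print(migrate(conn, scripts))
    # ['V1__create_animals.sql', 'V2__create_birds.sql', 'V10__index_birds.sql']
    print(migrate(conn, scripts))  # [] because everything is already applied
```

Because the history table is the only source of truth about what has run, re-running the migrator is safe: already-applied versions are skipped rather than executed twice.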

Flyway has seen strong adoption among organizations that use Microsoft SQL Server. This is due in part to joint marketing efforts with Microsoft, which have made Flyway a natural fit in SQL Server-centric environments.

The Pro edition of Flyway extends beyond script-based migrations by introducing state-based migrations. However, this approach comes with trade-offs. State-based migrations attempt to reconcile the database schema against a desired end state, auto-generating the SQL to get there. While this simplifies certain workflows, it also introduces risks such as non-deterministic SQL generation, unintended data loss, and limited control in complex production environments. For this reason, state-based migrations are generally not recommended for production use in most organizations.
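To see how data loss can creep in, consider a deliberately naive state-based diff written in Python. The naive_state_diff helper is hypothetical and far simpler than any real tool: it compares the current and desired column sets of one table and emits whatever SQL closes the gap. A renamed column looks exactly like one column removed and another added, so the generated script would silently discard that column's data.

```python
from typing import List, Set


def naive_state_diff(table: str, current: Set[str], desired: Set[str]) -> List[str]:
    """Emit SQL that turns the current column set into the desired one."""
    statements = []
    for col in sorted(desired - current):
        statements.append(f"ALTER TABLE {table} ADD COLUMN {col} TEXT;")
    for col in sorted(current - desired):
        # Destructive: any data stored in this column is lost.
        statements.append(f"ALTER TABLE {table} DROP COLUMN {col};")
    return statements


if __name__ == "__main__":
    # The developer renamed common_name to display_name in the desired state.
    for stmt in naive_state_diff("animals", {"id", "common_name"}, {"id", "display_name"}):
        print(stmt)
    # ALTER TABLE animals ADD COLUMN display_name TEXT;
    # ALTER TABLE animals DROP COLUMN common_name;
```

A script-based migration avoids the ambiguity by stating the intent explicitly, for example ALTER TABLE animals RENAME COLUMN common_name TO display_name, which keeps the existing values.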

What is Liquibase?

Liquibase takes a more flexible approach than Flyway by allowing schema changes to be defined not only in SQL but also in XML, YAML, or JSON. This hybrid model is often cited as an advantage, since it can provide rollback behavior out of the box and make it easier to standardize changes across multiple database types with minor SQL differences.

That said, the rollback capability depends heavily on the type of changeset being used. For DSL-based changesets (YAML, XML, JSON), Liquibase can auto-generate rollback logic for roughly 80% of scenarios. However, for raw SQL changesets, rollback logic must still be provided explicitly, as in Flyway. This places an extra burden on teams that rely heavily on SQL and can limit the perceived “automatic rollback” advantage.
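The distinction comes down to whether the tool knows what a change does. A structured change such as "create table" or "add column" has an obvious inverse, while an arbitrary SQL string does not. The Python sketch below models that idea with made-up change dictionaries. The type names are borrowed from Liquibase change types, but the structure and the rollback_for helper are hypothetical; this is not Liquibase's changelog format or its actual rollback logic.

```python
from typing import Dict, Optional


def rollback_for(change: Dict[str, str]) -> Optional[str]:
    """Infer rollback SQL for a structured change; None means it must be written by hand."""
    if change.get("rollback"):
        return change["rollback"]  # an explicit rollback always wins
    kind = change["type"]
    if kind == "createTable":
        return f"DROP TABLE {change['table']};"
    if kind == "addColumn":
        return f"ALTER TABLE {change['table']} DROP COLUMN {change['column']};"
    if kind == "createIndex":
        return f"DROP INDEX {change['index']};"
    # Raw SQL is opaque, and destructive changes such as dropping a table cannot be
    # reversed from the change description alone.
    return None


if __name__ == "__main__":
    print(rollback_for({"type": "addColumn", "table": "birds", "column": "nickname"}))
    # ALTER TABLE birds DROP COLUMN nickname;
    print(rollback_for({"type": "sql", "sql": "UPDATE birds SET proper_name = 'Polly';"}))
    # None
```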

The Pro edition extends Liquibase’s capabilities with drift detection, policy checks, and richer reporting features. These additions make it more suitable for enterprises that require better visibility and governance. Liquibase’s strength lies in its rollback functionality and vibrant community ecosystem, but verbose DSLs and the need to write rollback logic by hand can slow down developer velocity, especially in organizations that want faster, more automated workflows.
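Drift detection boils down to comparing what a database actually contains with what it is supposed to contain. Here is a minimal sketch, assuming two SQLite connections stand in for a reference environment and a target environment. It snapshots each schema's stored DDL from sqlite_master and reports objects that are missing, unexpected, or defined differently. The snapshot and detect_drift helpers are hypothetical; commercial tools compare much richer metadata, and this only shows the principle.

```python
import sqlite3
from typing import Dict, List


def snapshot(conn: sqlite3.Connection) -> Dict[str, str]:
    """Map each user-defined schema object ("type:name") to the DDL SQLite stored for it."""
    rows = conn.execute(
        "SELECT type || ':' || name, sql FROM sqlite_master "
        "WHERE sql IS NOT NULL AND name NOT LIKE 'sqlite_%'"
    )
    return dict(rows.fetchall())


def detect_drift(expected: sqlite3.Connection, actual: sqlite3.Connection) -> Dict[str, List[str]]:
    """Report how the actual schema differs from the expected one."""
    exp, act = snapshot(expected), snapshot(actual)
    return {
        "missing": sorted(exp.keys() - act.keys()),
        "unexpected": sorted(act.keys() - exp.keys()),
        "changed": sorted(k for k in exp.keys() & act.keys() if exp[k] != act[k]),
    }


if __name__ == "__main__":
    ref = sqlite3.connect(":memory:")
    prod = sqlite3.connect(":memory:")
    ref.execute("CREATE TABLE birds (id INTEGER PRIMARY KEY, proper_name TEXT)")
    # Hypothetical out-of-band changes applied directly to "prod".
    prod.execute("CREATE TABLE birds (id INTEGER PRIMARY KEY, proper_name TEXT, nickname TEXT)")
    prod.execute("CREATE INDEX idx_birds_name ON birds (proper_name)")
    print(detect_drift(ref, prod))
    # {'missing': [], 'unexpected': ['index:idx_birds_name'], 'changed': ['table:birds']}
```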

What is Harness Database DevOps?

Harness Database DevOps is a modern Database DevOps platform designed to bring the same automation, governance, and scalability that organizations already expect in application delivery pipelines. Unlike Flyway or Liquibase, which began as migration frameworks, Harness was purpose-built for enterprises that want to fully automate database delivery in alignment with CI/CD and GitOps practices.

What sets Database DevOps apart is its use of Harness’s cross-module, best-in-class CI/CD pipeline. Database changes can flow through the same workflows as application code, with built-in support for approvals, RBAC, audit trails, and policy enforcement. Multi-environment orchestration and GitOps-native workflows are supported out of the box, making it possible to manage schema changes at scale with consistency and compliance. Unlike the other solutions, Harness delivers a strongly opinionated, enterprise-grade solution that goes beyond migrations and into full database governance, best practices, and automation.

In addition to orchestrating and running database migrations, Harness Database DevOps provides features for improving the developer experience around these database changes. This includes visibility into what changes are deployed where, when, and what they did. It also includes industry-first AI capabilities to design and author database schema migrations from scratch while leveraging built-in database best practices.

Harness is best suited for enterprises that want automated CI/CD for their database, want to reduce manual overhead, benefit from baked-in best practices, and enforce compliance without slowing down development teams.

Key Differences

Conclusion

Database change management has evolved beyond simply applying migrations. Today, the priority is to ensure schema changes are delivered with the same level of reliability, automation, and governance as application code. Flyway delivers speed and simplicity for small teams. Liquibase offers rollback safety and drift detection for teams that can handle its manual overhead. Harness Database DevOps goes further by embedding governance, automation, and GitOps directly into the CI/CD pipeline, making it the most compelling option for enterprises that need to scale database delivery confidently.

👉 If your organization is ready to move beyond migration frameworks, Harness Database DevOps is the natural next step.

FAQ

1. What’s the difference between Flyway and Liquibase?

Flyway focuses on simplicity with forward-only SQL migrations, making it ideal for small teams. Liquibase is more flexible, with rollback support and multiple DSLs.

2. Why choose Harness over Liquibase Pro or Flyway Enterprise?

Liquibase Pro and Flyway Enterprise extend their OSS tools with enterprise features, but they still rely heavily on manual setup and scripting. Harness, by contrast, was designed as a CI/CD-first platform that embeds governance, rollback automation, and GitOps directly into database delivery pipelines.

In addition, Harness offers two differentiators that go beyond traditional migration frameworks:

- Visibility: Rich insights into what changes were deployed, when, where, and their impact across environments, enabling faster troubleshooting and compliance reporting.

- LLM-powered authoring: Industry-first AI assistance to help developers and non-DBAs design and generate schema migrations aligned with best practices, reducing errors and accelerating delivery.

Automatically Testing Your Undo Migrations with Harness Database DevOps

When teams think about DevOps, automation usually starts with application code. We build, test, deploy, and even roll back apps with high confidence. But when it comes to databases, the story is different. Rollbacks are tricky, often skipped, and rarely tested before hitting production.

This is where undo migrations come in. And more importantly, this is where Harness Database DevOps, combined with Liquibase, makes life easier.

What Are Undo Migrations and Why Test Them?

Undo migrations (sometimes called rollbacks) are the scripts that reverse database schema changes. For example:

- A forward migration might add a new column.

- Its undo migration would drop that column.

Simple enough in theory. But in reality, untested undo migrations often fail because of:

- Schema drift (the database isn’t in the exact state you expect).

- Dependencies between changesets.

- Overlooked edge cases (constraints, indexes, data loss).

That’s why testing undo migrations matters. The same way we wouldn’t ship application code without unit tests, we shouldn’t deploy database changes without validating that rollbacks work.
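The core of such a test is easy to express: snapshot the schema, apply the forward migration, apply its undo, and check that the schema matches the snapshot. Re-applying the forward migration afterwards confirms the undo left the database in a state the change can be deployed to again. Liquibase's update-testing-rollback command follows the same up-down-up pattern. The Python sketch below applies the idea to SQLite with a hypothetical migration pair; the check_undo and fresh_db helpers are illustrative names. It creates a table and an index rather than adding a column, because DROP COLUMN support in SQLite requires version 3.35 or newer.

```python
import sqlite3
from typing import Dict

# Hypothetical migration pair: a new table plus an index on an existing table.
FORWARD = """
CREATE TABLE sightings (id INTEGER PRIMARY KEY, bird_id INTEGER REFERENCES birds (id));
CREATE INDEX idx_birds_name ON birds (proper_name);
"""
GOOD_UNDO = "DROP INDEX idx_birds_name; DROP TABLE sightings;"
BAD_UNDO = "DROP TABLE sightings;"  # forgets the index created on birds


def schema(conn: sqlite3.Connection) -> Dict[str, str]:
    """Snapshot user-defined schema objects as name -> DDL."""
    rows = conn.execute(
        "SELECT name, sql FROM sqlite_master "
        "WHERE sql IS NOT NULL AND name NOT LIKE 'sqlite_%'"
    )
    return dict(rows.fetchall())


def check_undo(conn: sqlite3.Connection, forward: str, undo: str) -> None:
    """Up-down-up test: raise AssertionError if the undo does not restore the schema."""
    before = schema(conn)
    conn.executescript(forward)
    after_forward = schema(conn)
    conn.executescript(undo)
    if schema(conn) != before:
        raise AssertionError("undo did not restore the original schema")
    # The forward change must still deploy cleanly once it has been undone.
    conn.executescript(forward)
    if schema(conn) != after_forward:
        raise AssertionError("re-applying the forward migration changed the result")


def fresh_db() -> sqlite3.Connection:
    """A throwaway database holding the schema the migration expects to find."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE birds (id INTEGER PRIMARY KEY, proper_name TEXT)")
    return conn


if __name__ == "__main__":
    check_undo(fresh_db(), FORWARD, GOOD_UNDO)
    print("good undo passed")
    try:
        check_undo(fresh_db(), FORWARD, BAD_UNDO)
    except AssertionError as err:
        print("bad undo caught:", err)
```

Running the check against a throwaway copy of the expected schema, as fresh_db does here, is what makes it safe to run on every change in CI rather than discovering a broken undo during a production incident.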

How Harness Database DevOps Helps

Harness Database DevOps brings CI/CD discipline to your database pipelines. Instead of running ad-hoc SQL scripts, you can:

- Orchestrate schema changes with Liquibase.

- Run pre-deployment evaluations automatically.

- Roll forward or roll back with confidence.