Featured Blogs

KubeCon 2025 Atlanta is here! For the next four days, Atlanta is the undisputed center of the cloud native universe. The buzz is palpable, but this year, one question seems to be hanging over every keynote, session, and hallway track: AI.

We've all seen the impressive demos. But as developers and engineers, we have to ask the hard questions. Can AI actually help us ship code better? Can it make our complex CI/CD pipelines safer, faster, and more intelligent? Or is it just another layer of hype we have to manage?

At Harness, we believe AI is the key to solving software delivery's biggest challenges. And we're not just talking about it—we're here to show you the code with Harness AI, purpose-built to bring intelligence and automation to every step of the delivery process.

⚡ AI in Action: The Can't-Miss Talk at the Google Booth

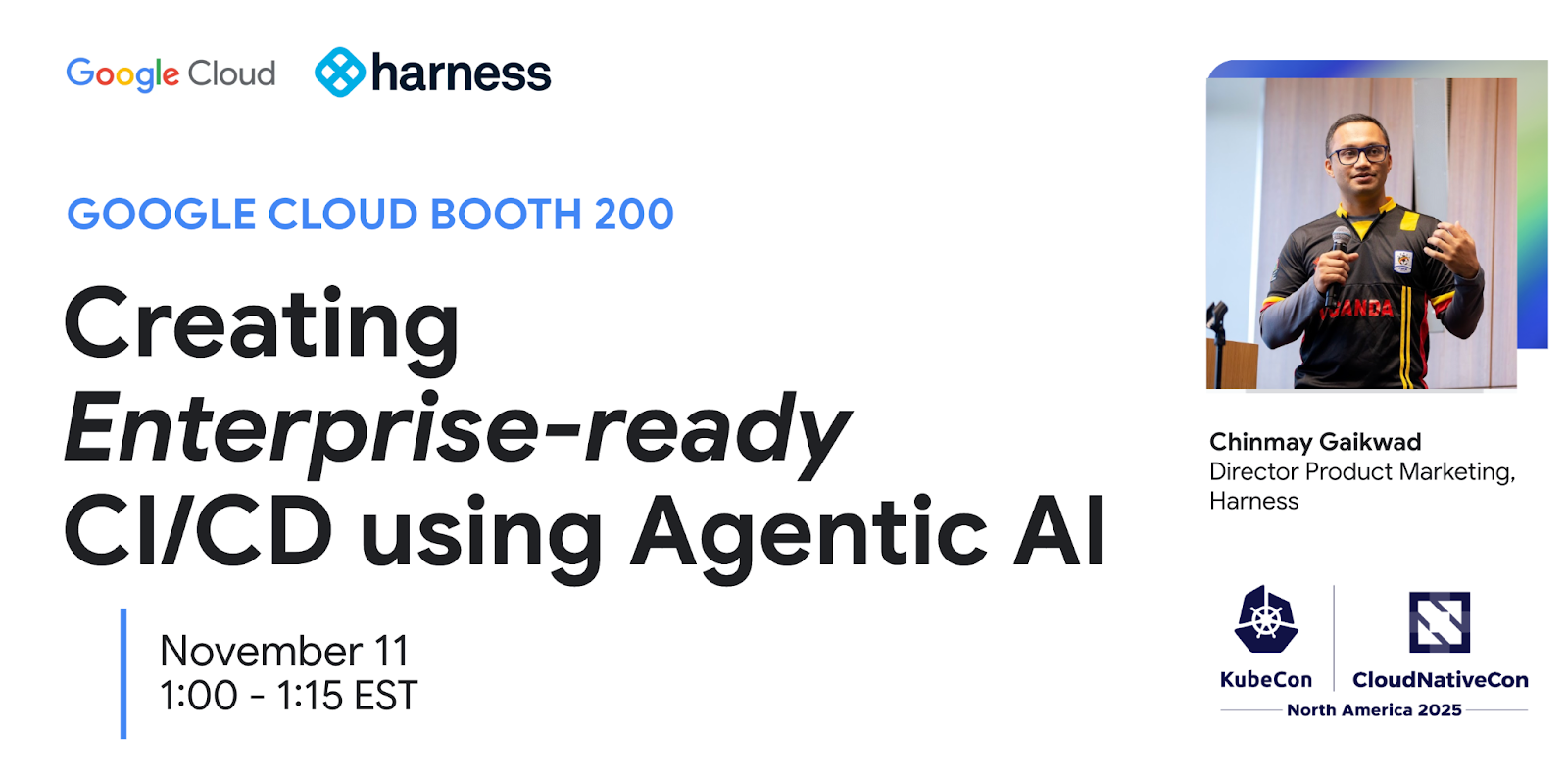

We are thrilled to team up with Google Cloud to present a special lightning talk on Agentic AI and its practical use in CI/CD. This is where the hype stops and the engineering begins.

Join our Director of Product Marketing, Chinmay Gaikwad, for this deep-dive session.

- Talk: Creating Enterprise-ready CI/CD using Agentic AI

- Where: Google Cloud Booth 200

- When: Tuesday, November 11th, at 1:00 PM ET (1:00 – 1:15 PM)

Chinmay will be on hand to demonstrate how Agentic AI is moving from a concept to a practical, powerful tool for building and securing enterprise-grade pipelines. Be sure to stop by, ask questions, and get personalized guidance.

Your Map for KubeCon Week

AI is our big theme, but we're everywhere this week, focusing on the core problems you face. Here's where to find us.

1. Main Event: The Harness Home Base (Nov 11-13)

- 📍 Booth #522 (Solutions Showcase)

This is our command center. Come by Booth #522 to see live demos of our Agentic AI in action. You can also talk to our engineers about the full Harness platform, including how we integrate with OpenTofu, empower platform engineering teams, and help you get a handle on cloud costs. Plus, we have the best swag at the show.

2. Co-located Event: Platform Engineering Day (Nov 10)

- 📍 Booth #Z45

As a Platinum Sponsor, we're kicking off the week with a deep focus on building Internal Developer Platforms (IDPs). Stop by Booth #Z45 to chat about building "golden paths" that developers will actually love and how to prove the value of your platform.

3. Co-located Event: OpenTofu Day (Nov 10)

- 📍 Level 2, Room B203

We are incredibly proud to be a Gold Sponsor of OpenTofu Day. As one of the top contributors to the OpenTofu project, our engineers are in the trenches helping shape the future of open-source Infrastructure as Code.

The momentum is undeniable:

- 26K+ GitHub stars

- 10M+ total downloads

- 180+ engineering contributors

Our engineers have contributed major features like the AzureRM backend rewrite and the new Azure Key Provider, and we serve on the Technical Steering Committee. Come find us in Room B203 to meet the team and talk all things IaC.

Can't wait? Download the digital copy of The Practical Guide to Modernizing Infrastructure Delivery and AI-Native Software Delivery right now.

KubeCon 2025 Atlanta is about what's next. This year, "what's next" is practical AI, smarter platforms, and open collaboration. We're at the center of all three.

See you on the floor!

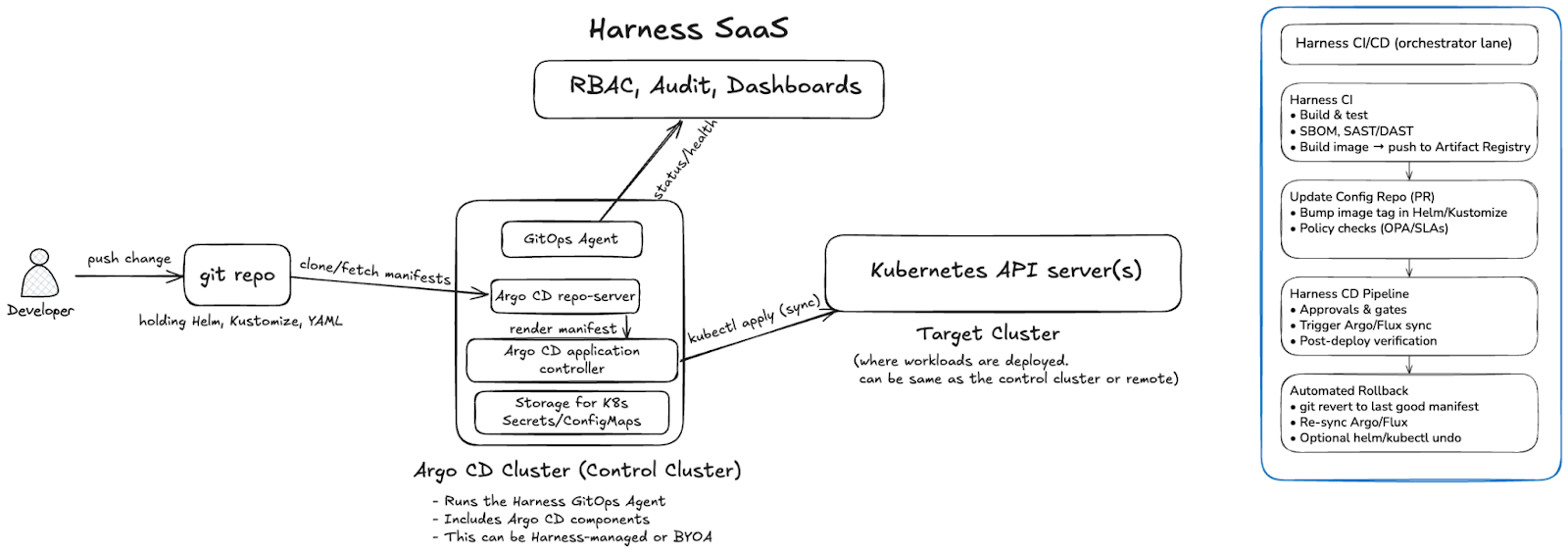

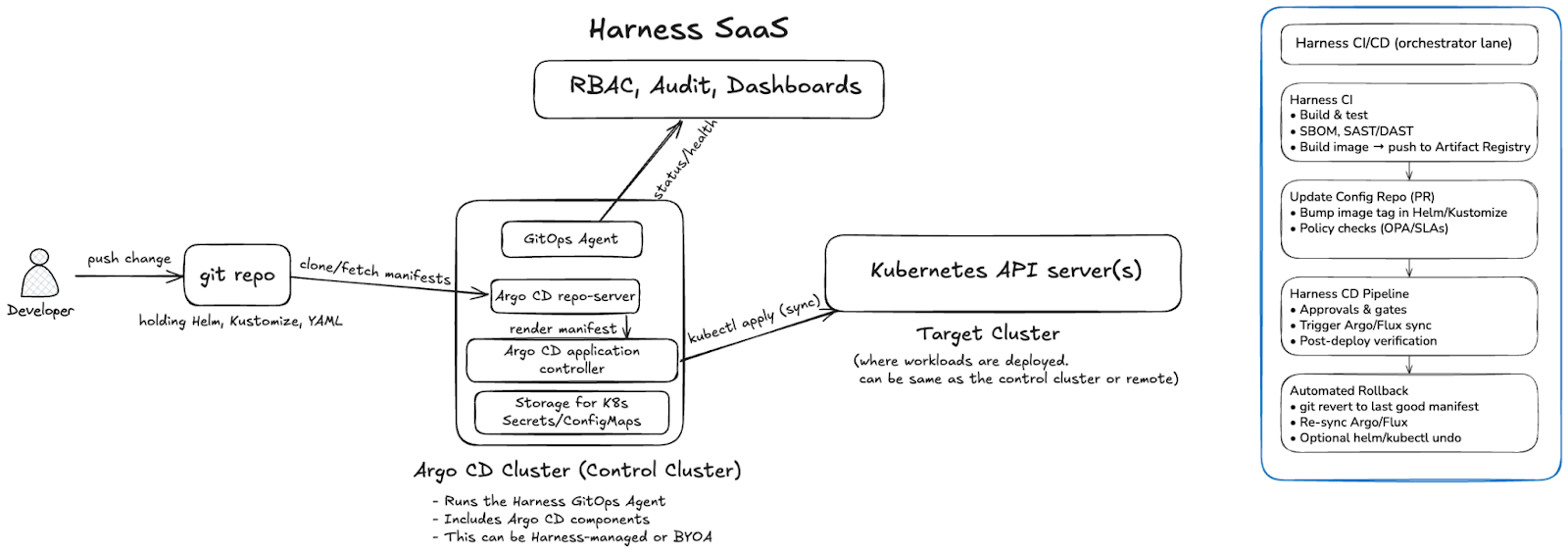

Harness GitOps builds on the Argo CD model by packaging a Harness GitOps Agent with Argo CD components and integrating them into the Harness platform. The result is a GitOps architecture that preserves the Argo reconciliation loop while adding visibility, audit, and control through Harness SaaS.

The Control Plane: Argo CD Cluster

At the center of the architecture is the Argo CD cluster, sometimes called the control cluster. This is where both the Harness GitOps Agent and Argo CD’s core components run:

- GitOps Agent: a lightweight worker, installed via YAML, that establishes an outbound-only connection to Harness SaaS, executes SaaS-initiated requests locally, and continuously reports the state of Argo CD resources (Applications, ApplicationSets, Repositories, and Clusters).

- Repo-server: pulls manifests from Git repositories.

- Application Controller: compares desired state with live cluster state and applies changes through the Kubernetes API.

- ApplicationSet Controller: automates the creation and management of multiple Argo CD Applications from a single definition, using generators (for example, list, Git, or cluster) to create parameterized applications. This makes it easier to handle large-scale and dynamic deployments. Learn more in the Argo CD docs: Generating Applications with ApplicationSet (https://argo-cd.readthedocs.io/en/latest/user-guide/application-set/).
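To make the generator idea concrete, here is a simplified Python sketch of what a list generator does conceptually: each element's parameters are substituted into one Application template, yielding one Application per element. The template fields and the `substitute` and `render_applications` helpers are hypothetical simplifications for illustration; real ApplicationSets are Kubernetes resources rendered by the ApplicationSet controller, not by code like this.

```python
# Illustrative only: a simplified model of a list generator feeding an
# Application template. This is not the ApplicationSet controller's implementation.

TEMPLATE = {
    "metadata": {"name": "guestbook-{{cluster}}"},
    "spec": {
        "source": {"path": "guestbook", "targetRevision": "HEAD"},
        "destination": {"server": "{{url}}", "namespace": "guestbook"},
    },
}

# One parameter set per element, as in a list generator's "elements".
ELEMENTS = [
    {"cluster": "dev", "url": "https://dev.example.com"},
    {"cluster": "prod", "url": "https://prod.example.com"},
]


def substitute(node, params):
    """Recursively replace {{key}} placeholders in every string of the template."""
    if isinstance(node, dict):
        return {key: substitute(value, params) for key, value in node.items()}
    if isinstance(node, list):
        return [substitute(value, params) for value in node]
    if isinstance(node, str):
        for key, value in params.items():
            node = node.replace("{{" + key + "}}", value)
    return node


def render_applications(template, elements):
    """Produce one Application-like dict per generator element."""
    return [substitute(template, params) for params in elements]


for app in render_applications(TEMPLATE, ELEMENTS):
    print(app["metadata"]["name"], "->", app["spec"]["destination"]["server"])
```

Adding a third element (say, a staging cluster) yields a third Application without touching the template, which is what makes generators useful for large or dynamic fleets.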

The control cluster can be deployed in two models:

- Harness-managed: Harness provides a pre-packaged installation bundle (Kubernetes manifests or Helm configs). You apply these to your cluster, and they set up the required Argo CD components along with the GitOps Agent. Harness makes it easier to get started, but you still own the install action. In this model, Harness also provides upgrade bundles for the Argo CD components.

- Bring Your Own Argo (BYOA): If you already operate Argo CD, Harness only provides the GitOps Agent installation instructions. You retain full responsibility for the Argo CD lifecycle, including upgrades and version drift.

Target Clusters

The Argo CD Application Controller applies manifests to one or more target clusters by talking to their Kubernetes API servers.

- In the simplest setup, the control cluster and target cluster are the same (in-cluster).

- In a hub-and-spoke setup, a single Argo CD cluster can manage multiple remote target clusters.

- Multiple agents can be deployed if you want to isolate environments or scale out reconciliation.

Git as the Source of Truth

Developers push declarative manifests (YAML, Helm, or Kustomize) into a Git repository. The GitOps Agent and repo-server fetch these manifests. The Application Controller continuously reconciles the cluster state against the desired state. Importantly, clusters never push changes back into Git. The repository remains the single source of truth. Harness configuration, including pipeline definitions, can also be stored in Git, providing a consistent Git-based experience.

Harness SaaS Integration

While the GitOps loop runs entirely in the control cluster and target clusters, the GitOps Agent makes outbound-only connections to Harness SaaS.

Harness SaaS provides:

- User interface for GitOps operations.

- Audit logging of syncs and drifts.

- RBAC enforcement at the project, org, or account level.

All sensitive configuration data, such as repository credentials, certificates, and cluster secrets, remains in the GitOps Agent’s namespace as Kubernetes Secrets and ConfigMaps. Harness SaaS only stores a metadata snapshot of the GitOps setup (Applications, ApplicationSets, Clusters, Repositories, etc.), never the sensitive data itself. Unlike some SaaS-first approaches, Harness never requires secrets to leave your cluster.

End-to-End Flow

- A developer commits or merges a change to Git.

- The Argo CD repo-server fetches the updated manifests.

- The Application Controller compares the desired vs live state.

- If drift exists, it is reconciled by applying the manifests through the Kubernetes API.

- The GitOps Agent reports sync and health status back to Harness SaaS for visibility and governance.

In short: a developer commits, Argo fetches and reconciles, and the GitOps Agent reports status back to Harness SaaS for governance and visibility.

This is the pure GitOps architecture: Git defines the desired state, Argo CD enforces it, and Harness provides governance and observability without altering the core reconciliation model.
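The reconcile step in this flow can be sketched in a few lines of Python for intuition. This is a minimal, hypothetical model: `FakeCluster`, `diff`, and `reconcile` are illustrative names, resources are plain dictionaries keyed by name, and real Argo CD compares rendered manifests against live objects through the Kubernetes API with far more nuance (health checks, sync options, hooks).

```python
from dataclasses import dataclass, field


@dataclass
class FakeCluster:
    """Hypothetical stand-in for a target cluster's Kubernetes API."""
    resources: dict = field(default_factory=dict)

    def apply(self, name, manifest):
        self.resources[name] = manifest

    def delete(self, name):
        self.resources.pop(name, None)


def diff(desired, live):
    """Compare desired state (from Git) with live state (from the cluster)."""
    to_apply = {name: spec for name, spec in desired.items() if live.get(name) != spec}
    to_prune = [name for name in live if name not in desired]
    return to_apply, to_prune


def reconcile(desired, cluster, prune=True):
    """One pass of a simplified reconcile loop; returns a status report.

    The cluster never writes back to `desired`: Git stays the source of truth.
    """
    to_apply, to_prune = diff(desired, cluster.resources)
    for name, spec in to_apply.items():
        cluster.apply(name, spec)
    if prune:  # Argo CD only deletes resources removed from Git when pruning is enabled
        for name in to_prune:
            cluster.delete(name)
    return {
        "drift_found": bool(to_apply or (prune and to_prune)),
        "applied": sorted(to_apply),
        "pruned": sorted(to_prune) if prune else [],
    }
```

The returned dictionary plays the role of the status that the GitOps Agent would report upstream; running `reconcile` again on an already synced cluster finds no drift.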

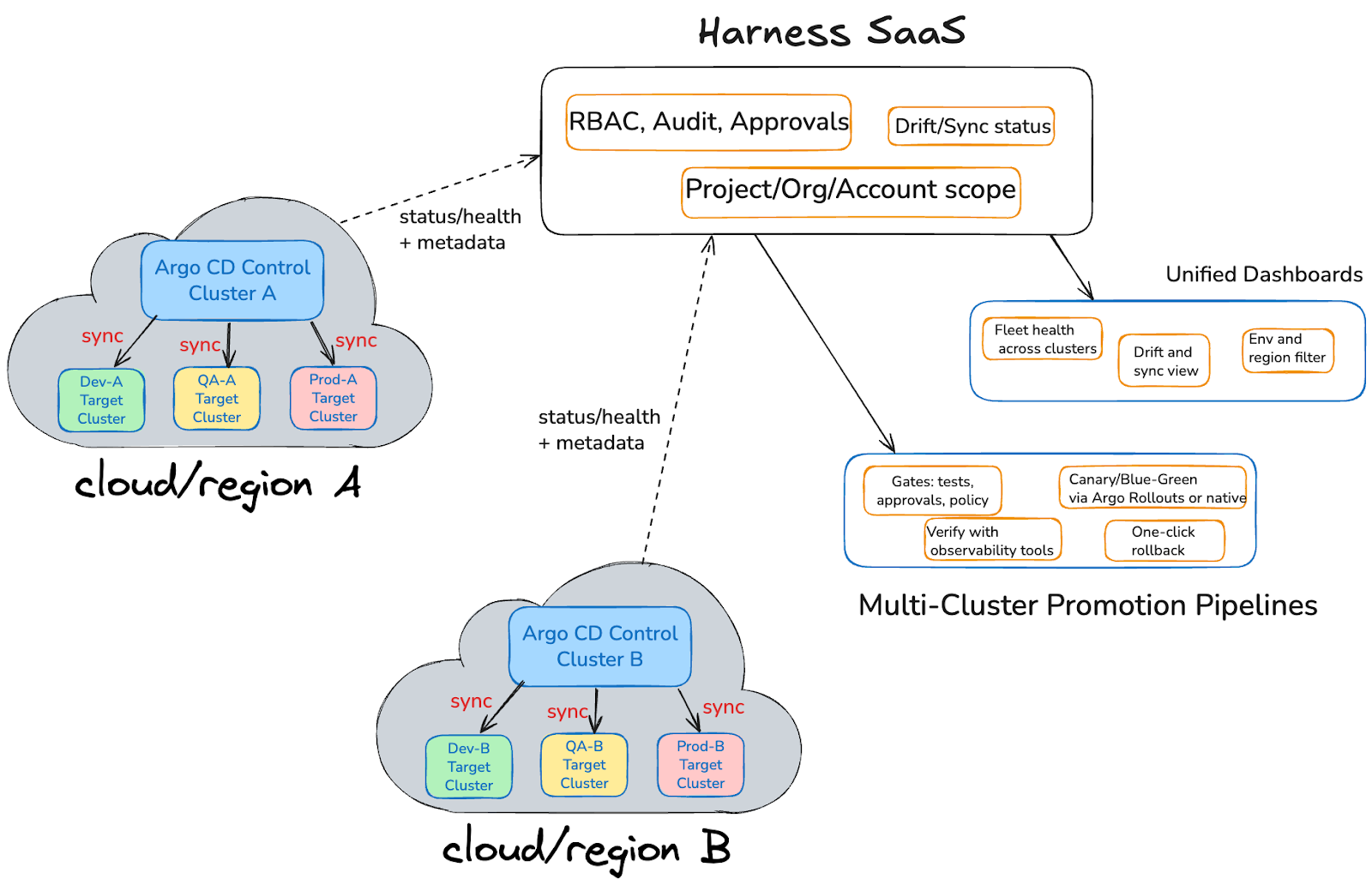

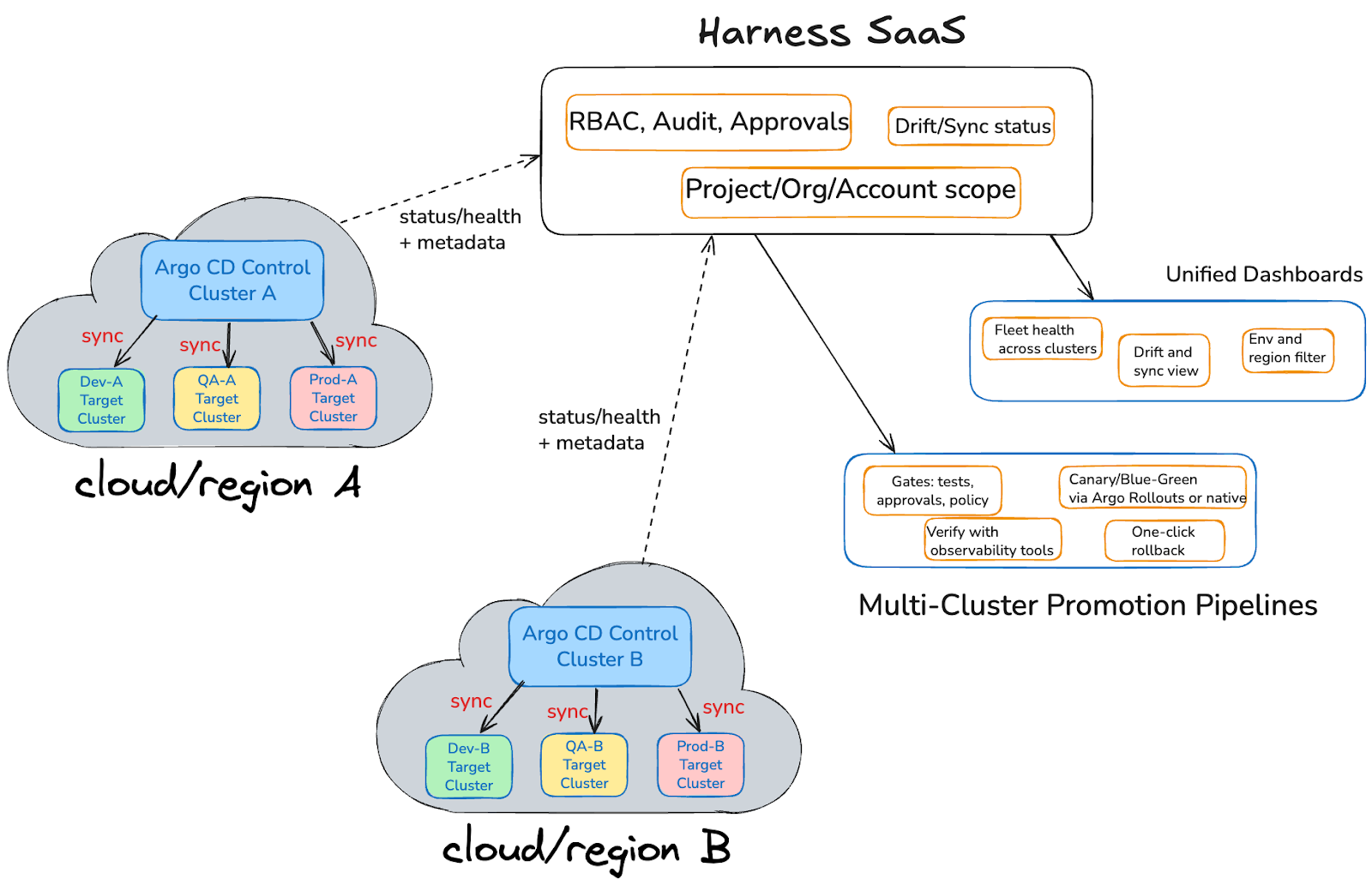

Scaling Beyond a Single Cluster

Most organizations operate more than one Kubernetes cluster, often spread across multiple environments and regions. In this model, each region has its own Argo CD control cluster. The control cluster runs the Harness GitOps Agent alongside core Argo CD components and reconciles the desired state into one or more target clusters such as dev, QA, or prod.

The flow is straightforward:

- Developers push declarative manifests into Git.

- Each control cluster fetches those manifests, compares the desired state to the live state, and applies changes to its target clusters through Kubernetes API calls (sync).

- The control cluster then reports status, health, and metadata back to Harness SaaS over outbound-only connections.

Harness SaaS aggregates data from all control clusters, giving teams a single view and a single place to drive rollouts:

- Unified Dashboards:

  - Fleet health across clusters

  - Drift and sync visibility

  - Environment and region filtering

- Multi-Cluster Promotion Pipelines:

  - Gates for tests, approvals, and policies

  - Canary or blue/green rollouts with Argo Rollouts or native strategies

  - Verification using Harness Verify with integrations to observability tools such as AppDynamics, Datadog, Prometheus, New Relic, Elasticsearch, Grafana Loki, Splunk, and Sumo Logic, enabling automated analysis of metrics and logs to gate promotions with confidence.

  - One-click rollback that restores applications, infrastructure, and cluster resources defined in Git, and database schema when migrations are stored alongside your manifests, providing a true rollback to a known good state.
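As a rough illustration of the aggregation side, the sketch below rolls per-Application status reports from several control clusters into a fleet view with optional region filtering. The report fields and `fleet_summary` are assumptions made for this example, not Harness's actual data model; only the sync and health values mirror Argo CD's status names.

```python
from collections import Counter

# Hypothetical per-Application reports arriving from several control clusters.
REPORTS = [
    {"region": "us-east", "cluster": "prod", "app": "checkout", "sync": "Synced", "health": "Healthy"},
    {"region": "us-east", "cluster": "qa", "app": "checkout", "sync": "OutOfSync", "health": "Healthy"},
    {"region": "eu-west", "cluster": "prod", "app": "checkout", "sync": "Synced", "health": "Degraded"},
]


def fleet_summary(reports, region=None):
    """Aggregate health counts and out-of-sync apps, optionally for one region."""
    selected = [r for r in reports if region is None or r["region"] == region]
    return {
        "health": Counter(r["health"] for r in selected),
        "out_of_sync": sorted(
            f'{r["region"]}/{r["cluster"]}/{r["app"]}' for r in selected if r["sync"] != "Synced"
        ),
    }


print(fleet_summary(REPORTS))
print(fleet_summary(REPORTS, region="eu-west"))
```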

This setup preserves the familiar Argo CD reconciliation loop inside each control cluster while extending it with Harness’ governance, observability, and promotion pipelines across regions.

Note: Some enterprises run multiple Argo CD control clusters per region for scale or isolation. Harness SaaS can aggregate across any number of clusters, whether you have two or two hundred.

Next Steps

Harness GitOps lets you scale from single clusters to a fleet-wide GitOps model with unified dashboards, governance, and pipelines that promote with confidence and roll back everything when needed. Ready to see it in your stack? Get started with Harness GitOps and bring enterprise-grade control to your Argo CD deployments.

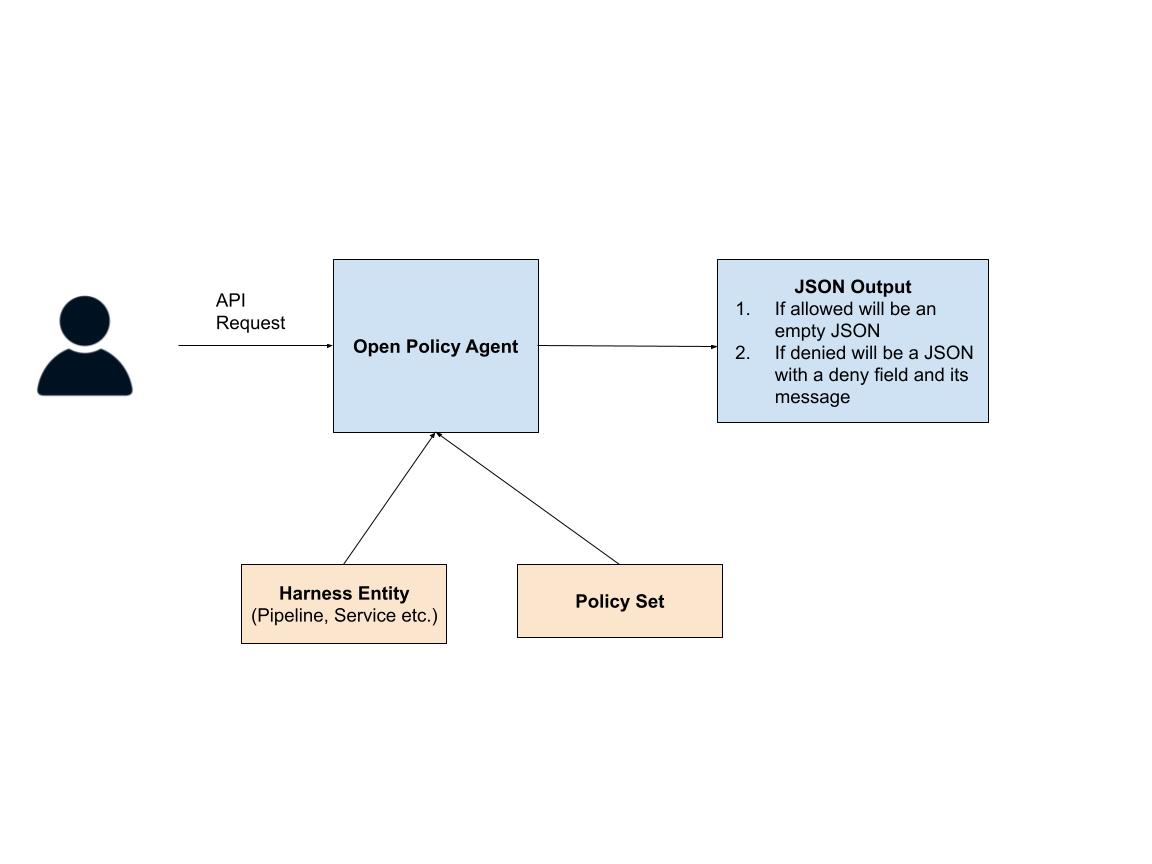

Rego 101: Policy-Driven DevOps

DevOps governance is a crucial aspect of modern software delivery. To ensure that software releases are secure and compliant, it is pivotal to embed governance best practices within your software delivery platform. At Harness, the feature that powers this capability is Open Policy Agent (OPA), a policy engine that enables fine-grained access control over your Harness entities.

In this blog post, we’ll explain how to use OPA to ensure that your DevOps team follows best practices when releasing software to production. More specifically, we’ll explain how to write these policies in Harness.

Policy Enforcement in Harness

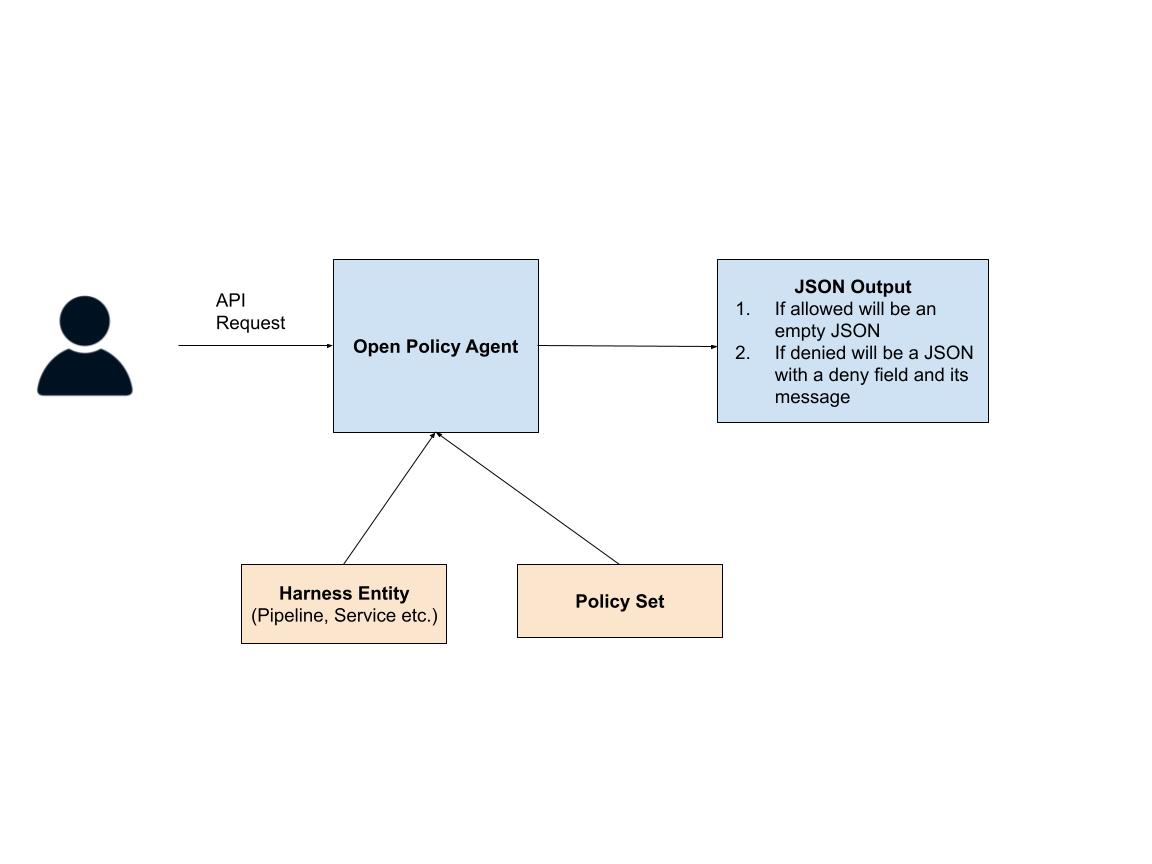

Every time an API request comes to Harness, the service sends the API request to the policy agent. The policy agent uses three things to evaluate whether the request can be made: the contents of the request, the target entity of the request, and the policy set(s) on that entity. After evaluating the policies in those policy sets, the agent simply outputs a JSON object.

If the API request should be denied, the JSON object looks like:

{
    "deny": ["<reason>"]
}

If the API request should be allowed, the JSON object is empty:

{}

Let’s now dive a bit deeper into how to actually write these policies.
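To make the allow/deny contract concrete, here is a minimal Python sketch of how a caller might interpret the agent's JSON decision. `evaluate_decision` is a hypothetical helper, not part of Harness or OPA: it treats an empty object (or an empty deny list) as allow, a list of reasons as deny with messages, and a bare boolean `true` as deny without a message.

```python
import json


def evaluate_decision(decision_json: str) -> tuple[bool, list[str]]:
    """Hypothetical helper: interpret a policy decision object as (allowed, reasons)."""
    decision = json.loads(decision_json)
    deny = decision.get("deny")
    if not deny:
        # {} (or an empty deny collection) means no rule fired: allow the request.
        return True, []
    if isinstance(deny, list):
        # deny[msg] rules produce a collection of human-readable reasons.
        return False, [str(reason) for reason in deny]
    # A boolean rule such as {"deny": true} blocks the request without a message.
    return False, []


allowed, reasons = evaluate_decision('{"deny": ["Deployment pipeline should not have HTTP step"]}')
print(allowed, reasons)
```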

Rego: The Policy Language

OPA policies are written using Rego, a declarative language that allows you to reason about information in structured documents. Let’s take an example of a possible practice that you’d want to enforce within your Continuous Delivery pipelines. Let’s say you don’t want to be making HTTP calls to outside services within your deployment environment and want to enforce the practice: “Every pipeline’s Deployment Stage shouldn’t have an HTTP step”

Now, let’s look at the policy below that enforces this rule:

package pipeline

deny[msg] {
    # Check for a deployment stage ...
    input.pipeline.stages[i].stage.type == "Deployment"

    # For that deployment stage, check if there's an Http step ...
    input.pipeline.stages[i].stage.spec.execution.steps[j].step.type == "Http"

    # Show a human-friendly error message
    msg := "Deployment pipeline should not have HTTP step"
}

You’ll notice some interesting things about this policy language. The first line declares that the policy is part of a package (“package pipeline”), and the next line

deny[msg] {

declares a “deny block”. It tells the agent that if all the statements inside the block are true, it should add the message msg to the deny set that the policy returns.

Then you’ll notice that the next line checks to see if there’s a deployment stage:

input.pipeline.stages[i].stage.type == "Deployment"

You may be thinking: there’s a variable “i” that was never declared! We’ll get to that later, but for now just know that OPA will look for every index i for which this statement is true. For each such i, it binds that value to i and moves on to the next line:

input.pipeline.stages[i].stage.spec.execution.steps[j].step.type == "Http"

Just like above, OPA now looks for any j for which this statement is true. If there are values of i and j for which both lines are true, OPA finally moves on to the last line:

msg := "Deployment pipeline should not have HTTP step"

which sets the message variable to that string. So for the following input:

{
  "pipeline": {
    "name": "abhijit-test-2",
    "identifier": "abhijittest2",
    "tags": {},
    "projectIdentifier": "abhijittestproject",
    "orgIdentifier": "default",
    "stages": [
      {
        "stage": {
          "name": "my-deployment",
          "identifier": "my-deployment",
          "description": "",
          "type": "Deployment",
          "spec": {
            "execution": {
              "steps": [
                {
                  "step": {
                    "name": "http",
                    "identifier": "http",
                    "type": "Http",
                    …
}

The output will be:

{
    "deny": ["Deployment pipeline should not have HTTP step"]
}

OK, that might have made some sense at a high level, but let’s go deeper. Let’s look at how Rego works under the hood and get you to the point where you can write Rego policies for your own use cases in Harness.
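Before going under the hood, it can help to see the same search spelled out imperatively. The Python sketch below is not how OPA evaluates Rego; it simply enumerates every (i, j) pair the way the rule's unbound variables are resolved, and treats a missing field as "no match", which mirrors how Rego treats undefined references. The function names are hypothetical.

```python
MESSAGE = "Deployment pipeline should not have HTTP step"


def find_bindings(input_doc):
    """Return every (i, j) pair that satisfies both conditions of the rule."""
    bindings = []
    stages = input_doc.get("pipeline", {}).get("stages", [])
    for i, stage_entry in enumerate(stages):
        stage = stage_entry.get("stage", {})
        if stage.get("type") != "Deployment":
            continue  # this i fails the first condition, so try the next one
        steps = stage.get("spec", {}).get("execution", {}).get("steps", [])
        for j, step_entry in enumerate(steps):
            if step_entry.get("step", {}).get("type") == "Http":
                bindings.append((i, j))
    return bindings


def evaluate(input_doc):
    """deny[msg] collects messages into a set; no bindings means nothing is denied."""
    messages = {MESSAGE for _ in find_bindings(input_doc)}
    return {"deny": sorted(messages)} if messages else {}
```

Because deny[msg] builds a set, two Http steps in the same stage still produce a single message.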

Rego’s Declarative Approach

You may have noticed that throughout this blog we’ve been referring to Rego as a “declarative language”, but what exactly does that mean? Most programming languages are “imperative”, which means that each line of code explicitly states what needs to be done and in what order. In a declarative language, you describe the result you want, and the runtime works out how to find it. With Rego, you define a set of conditions, and OPA searches the input data to see whether those conditions are met. Let’s see what this means with a simple example.

Imagine you have the following Rego policy:

x if input.user == "alex"

y if input.tokens > 100

and the engine gets the following input:

{
    "user": "alex",
    "tokens": 200
}

The policy engine evaluates each rule against the input. Since both conditions are true for the input shown, the policy engine will output:

{
    "x": true,
    "y": true
}

Now, both of these were simple rules that could be defined in one line each. But you often want to do something a bit more complex. In fact, most rules in Rego are written using the following syntax:

variable_name := value {
    condition 1
    condition 2
    ...
}

The way to read this is, the variable is assigned the value if all the conditions within the block are met.

So let’s go back to the simple statements we had above. Let’s say we want our policy engine to allow a request only if the user is alex and if they have more than 100 tokens left. Thus our policy would look like:

allow := true {
    input.user == "alex"
    input.tokens > 100
}

It would return true for the following input request:

{
    "user": "alex",
    "tokens": 200
}

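But the conditions are not all met for either of the following inputs, so allow is left undefined rather than set to false (declare default allow := false if you want an explicit false):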

{
    "user": "bob",
    "tokens": 200
}

{
    "user": "alex",
    "tokens": 50
}
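One subtlety is easier to see in code: when a rule body fails, Rego leaves the rule undefined rather than false. The Python sketch below models that with `None`, and its `default` parameter plays the role of a `default allow := false` declaration. It is an illustrative stand-in written for this example, not OPA's evaluator.

```python
def allow(input_doc, default=None):
    """Model of the allow rule: True only if every condition in the body holds.

    None stands in for Rego's "undefined"; default=False mimics declaring
    `default allow := false`.
    """
    user_ok = input_doc.get("user") == "alex"
    tokens = input_doc.get("tokens")
    tokens_ok = isinstance(tokens, (int, float)) and tokens > 100
    return True if user_ok and tokens_ok else default


print(allow({"user": "alex", "tokens": 200}))
print(allow({"user": "bob", "tokens": 200}))
print(allow({"user": "alex", "tokens": 50}, default=False))
```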

Now let’s look at something a bit more complicated. Let’s say you want to write a policy to allow a “delete” action if the user has the “admin” permission attached. This is what the policy would look like (note: this policy is for illustrative purposes only and will not work in Harness)

deny {
    input.action == "delete"
    not user_is_admin
}

user_is_admin {
    input.role == "admin"
}

The first line of the deny rule matches only if the input is a delete action. The second line then evaluates the user_is_admin rule, which checks whether the role field is “admin”. If it is not, user_is_admin is undefined, not user_is_admin is true, and deny is triggered. So for the following input:

{
    "action": "delete",
    "role": "non-admin"
}

The policy agent will return:

{"deny": true}

because the role was not “admin”. But for the following input:

{
    "action": "delete",
    "role": "admin"
}

the policy agent will return:

{}

Variables, Sets, Arrays

So far we’ve only seen instances of a rego policy taking in input and checking some fields within that input. Let’s see how variables, sets, and arrays are defined. Let’s say you only want to allow a code owner to trigger a pipeline. If that’s the case then the following policy will do the trick (note: this policy is for illustrative purposes only and will not work in Harness):

code_owners = {"albert", "beth", "claire"}

deny[msg] {
    triggered_by = input.triggered_by
    not code_owners[triggered_by]
    msg := "Triggered user is not permitted to run publish CI"
}

Here, the first line defines the code_owners variable as a set with three names. We then enter the deny block. Remember, for the deny block to produce a message, all three lines within the block need to evaluate to true. The first line sets the triggered_by variable to the user who triggered the pipeline. The next line

not code_owners[triggered_by]

checks that the code_owners set does not contain triggered_by. If that line evaluates to true, the last line runs, setting msg, and the message is added to the deny set.
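The same logic in Python, as an illustrative sketch rather than a literal translation of OPA's semantics: set membership replaces `code_owners[triggered_by]`, and `not in` plays the role of `not`. It also surfaces a Rego behavior that is easy to miss: if `triggered_by` is absent from the input, the assignment is undefined, the whole block fails, and nothing is denied.

```python
CODE_OWNERS = {"albert", "beth", "claire"}
MESSAGE = "Triggered user is not permitted to run publish CI"


def deny(input_doc):
    """Model of the code-owner rule; returns the set of deny messages."""
    if "triggered_by" not in input_doc:
        # In Rego, `triggered_by = input.triggered_by` is undefined here, so the
        # whole block fails and nothing is denied.
        return set()
    triggered_by = input_doc["triggered_by"]
    if triggered_by not in CODE_OWNERS:  # corresponds to `not code_owners[triggered_by]`
        return {MESSAGE}
    return set()
```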

Now let’s look at an example of a policy that uses an array. Let’s say you want to ensure that the last stage of every Harness pipeline is an “Approval” stage. The policy below enforces that (this policy will work in Harness):

package pipeline

deny[msg] {
    arr_len = count(input.pipeline.stages)
    not input.pipeline.stages[arr_len-1].stage.type == "Approval"
    msg := "Last stage must be an approval stage"
}

The first line assigns the length of the stages array to the variable arr_len, and the next line checks that the last stage in the pipeline (at index arr_len - 1) is an Approval stage. If it is not, the deny block produces the message.
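A hypothetical, simplified Python version of the same check makes the edge cases visible. An empty stages array is denied, because stages[-1] is undefined in Rego and `not` turns that into true. An input with no stages field at all is not denied, because `count` of an undefined value leaves the whole block undefined.

```python
MESSAGE = "Last stage must be an approval stage"


def deny_last_stage(input_doc):
    """Model of the last-stage rule; returns the set of deny messages."""
    stages = input_doc.get("pipeline", {}).get("stages")
    if stages is None:
        return set()  # count() of an undefined value is undefined: the block fails
    arr_len = len(stages)
    # stages[arr_len - 1] is undefined in Rego when the array is empty.
    last = stages[arr_len - 1] if arr_len > 0 else None
    last_type = last.get("stage", {}).get("type") if last else None
    if last_type != "Approval":  # `not ... == "Approval"` also succeeds when undefined
        return {MESSAGE}
    return set()
```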

OK, let’s look at another, slightly more complicated policy that works in Harness. Say you want to enforce: “In every pipeline, each Deployment stage is immediately followed by an Approval stage.”

package pipeline

deny[msg] {
    input.pipeline.stages[i].stage.type == "Deployment"
    not input.pipeline.stages[i + 1].stage.type == "Approval"
    msg := "Every Deployment stage must be immediately followed by an Approval stage"
}

The first line matches every index i for which the stage type is Deployment. For each such i, the next line checks whether the stage at i + 1 is not an Approval stage. If both statements are true for any i, the deny block evaluates to true and the message is added to the deny set.
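Here is an illustrative Python version of this rule, using a hypothetical message string. It shows that a Deployment stage at the very end of the pipeline is denied too: there is no stage at i + 1, so the negated check succeeds.

```python
MESSAGE = "Every Deployment stage must be immediately followed by an Approval stage"


def deny_unapproved_deployments(input_doc):
    """Model of the rule: try every i, then look at the stage at i + 1."""
    stages = input_doc.get("pipeline", {}).get("stages", [])
    types = [entry.get("stage", {}).get("type") for entry in stages]
    messages = set()
    for i, stage_type in enumerate(types):
        if stage_type != "Deployment":
            continue
        # stages[i + 1] is undefined past the end of the array, so the negated
        # check succeeds and a trailing Deployment stage is denied as well.
        next_type = types[i + 1] if i + 1 < len(types) else None
        if next_type != "Approval":
            messages.add(MESSAGE)
    return messages
```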

Objects and Dictionaries

Finally, Rego also supports objects. An object is an unordered key-value collection, and in Rego both the keys and the values can be of any type.

user_albert = {
    "admin": true,
    "employee_id": 12,
    "state": "Texas"
}

To access any of this object’s attributes you simply use the “.” notation (i.e. user_albert.state).

A dictionary is simply an object whose values are themselves objects, for example:

users_dictionary = {
    "albert": user_albert,
    "bob": user_bob ...
}

You can access each entry using bracket syntax: users_dictionary["albert"]

Harness Objects

Of course in order to be able to write these policies correctly you need to know the types of objects that you can apply them on and the schema of these objects:

- Pipeline

- Flag

- Template

- Sbom

- Security Tests

- Terraform plan

- Terraform state

- Terraform plan cost

- Service

- Environment

- Infrastructure

- Override

- Variable

A simple way to figure out how to refer to deeply nested attributes within an object’s schema is to use the policy editor:

- Navigate to the policy creation window.

- Click Testing Terminal.

- Copy and paste a sample YAML object into the Testing Terminal.

- As you navigate through the YAML, you’ll see the dotted notation for each field right above the policy editor.

Get Started

Visit our documentation to get started with OPA today!


Recent Blogs

Unit Testing in CI/CD: How to Accelerate Builds Without Sacrificing Quality

Modern unit testing in CI/CD can help teams avoid slow builds by using smart strategies. Choosing the right tests, running them in parallel, and using intelligent caching all help teams get faster feedback while keeping code quality high.

Platforms like Harness CI use AI-powered test intelligence to reduce test cycles by up to 80%, showing what’s possible with the right tools. This guide shares practical ways to speed up builds and improve code quality, from basic ideas to advanced techniques that also lower costs.

What Is a Unit Test?

Knowing what counts as a unit test is key to building software delivery pipelines that work.

The Smallest Testable Component

A unit test looks at a single part of your code, such as a function, class method, or a small group of related components. The main point is to test one behavior at a time. Unlike integration tests, which check how components work together, unit tests focus on the logic of a single unit in isolation. When one fails, it is much easier to figure out what went wrong.

Isolation Drives Speed and Reliability

Unit tests should only check code that you wrote and not things like databases, file systems, or network calls. This separation makes tests quick and dependable. Tests that don't rely on outside services run in milliseconds and give the same results no matter where they are run, like on your laptop or in a CI pipeline.
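As an illustration, the pytest-style test below isolates a hypothetical `PriceService` from its exchange-rate API by injecting a `unittest.mock.Mock` in place of the real client, so the test runs in milliseconds and never touches the network. The class and its `get_rate` dependency are assumptions made for this example.

```python
from unittest.mock import Mock


class PriceService:
    """Hypothetical production code that depends on an external exchange-rate API."""

    def __init__(self, rates_client):
        self.rates_client = rates_client  # injected, so tests can pass a double

    def price_in(self, amount_usd, currency):
        rate = self.rates_client.get_rate("USD", currency)
        return round(amount_usd * rate, 2)


def test_price_in_converts_with_current_rate():
    # Arrange: a Mock replaces the network client and returns a fixed rate.
    client = Mock()
    client.get_rate.return_value = 0.5
    service = PriceService(client)

    # Act
    price = service.price_in(10, "EUR")

    # Assert: correct math, and the dependency was called as expected.
    assert price == 5.0
    client.get_rate.assert_called_once_with("USD", "EUR")
```

Injecting the client through the constructor is what makes the swap trivial; code that builds its own network client internally is much harder to isolate.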

Foundation for CI/CD Quality Gates

Unit tests are one of the most important parts of continuous integration in CI/CD pipelines because they surface problems right after a code change. Because they are so fast, developers can run them repeatedly while they are coding. This keeps feedback loops tight, which makes bugs easier to find and stops them from reaching later stages of the pipeline.

Unit Testing Strategies: Designing for Speed and Reliability

Teams that run full test suites on every commit catch problems early by focusing on three things: making tests fast, choosing the right tests, and keeping tests organized. Good unit testing helps developers stay productive and keeps builds running quickly.

Deterministic Tests for Every Commit

Unit tests should finish in seconds, not minutes, so developers get feedback quickly. Google's engineering practices say that tests need to be "fast and reliable to give engineers immediate feedback on whether a change has broken expected behavior." To keep tests from being affected by outside factors, use mocks, stubs, and in-memory databases. Keep commit builds under ten minutes, with unit tests as the basis of this quick feedback loop.
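For the in-memory database approach, Python's built-in `sqlite3` module can open a private database with the special name `":memory:"`. The sketch below tests a hypothetical `count_active_users` data-access function; each test builds exactly the rows it needs, so the result does not depend on shared state or on a database server being available.

```python
import sqlite3


def count_active_users(conn):
    """Hypothetical data-access function under test."""
    (count,) = conn.execute("SELECT COUNT(*) FROM users WHERE active = 1").fetchone()
    return count


def test_count_active_users_with_in_memory_db():
    # ":memory:" creates a private database that disappears when closed,
    # so every run starts from the same known state.
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE users (name TEXT, active INTEGER)")
        conn.executemany(
            "INSERT INTO users VALUES (?, ?)",
            [("ana", 1), ("ben", 0), ("cy", 1)],
        )
        assert count_active_users(conn) == 2
    finally:
        conn.close()
```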

Intelligent Test Selection

As projects get bigger, running all tests on every commit can slow teams down. Test Impact Analysis looks at coverage data to figure out which tests really check the code that has been changed. AI-powered test selection chooses the right tests for you, so you don't have to guess or sort them by hand.
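Test Impact Analysis can be sketched as a set intersection between the files a change touches and the files each test covered in an earlier run. The coverage map and `select_tests` helper below are hypothetical; production tools build the map from real coverage data and use finer-grained signals than file names. When a changed file has no coverage data at all, this sketch conservatively falls back to running everything.

```python
# Hypothetical coverage map recorded on a previous run: test id -> source files it executed.
COVERAGE = {
    "test_cart.py::test_total": {"cart.py", "pricing.py"},
    "test_cart.py::test_empty": {"cart.py"},
    "test_auth.py::test_login": {"auth.py"},
}


def select_tests(changed_files, coverage):
    """Pick only the tests whose recorded coverage overlaps the changed files."""
    changed = set(changed_files)
    known_files = set().union(*coverage.values())
    if changed - known_files:
        # No coverage data for some changed file (e.g. a brand-new module):
        # fall back to the full suite rather than risk skipping a relevant test.
        return sorted(coverage)
    return sorted(test for test, files in coverage.items() if files & changed)


print(select_tests(["pricing.py"], COVERAGE))
```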

Parallelization and Caching

To get the most out of your infrastructure, combine selective execution with parallel test runs. Divide test suites into groups of roughly equal runtime and run them on different machines simultaneously. Smart caching of dependencies, build files, and test results helps you avoid doing the same work over and over. Used together, these methods significantly reduce build time while keeping coverage high.
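Splitting a suite into groups works best when the groups are balanced by runtime rather than by test count. A common heuristic is greedy longest-processing-time (LPT) scheduling: sort tests from slowest to fastest and always hand the next test to the shard with the least total time so far. The sketch below assumes you have per-test durations from earlier runs; `split_into_shards` is a hypothetical helper, not a CI platform API.

```python
import heapq


def split_into_shards(durations, shard_count):
    """Greedy LPT scheduling: longest test first, always onto the lightest shard."""
    # Heap entries are (total_seconds, shard_index, tests); the unique index breaks
    # ties so tuple comparison never falls through to comparing the lists.
    heap = [(0.0, index, []) for index in range(shard_count)]
    heapq.heapify(heap)
    for test, seconds in sorted(durations.items(), key=lambda item: (-item[1], item[0])):
        total, index, tests = heapq.heappop(heap)
        tests.append(test)
        heapq.heappush(heap, (total + seconds, index, tests))
    # Return shards in index order so each CI machine can pick its own shard.
    return [tests for _, _, tests in sorted(heap, key=lambda entry: entry[1])]


durations = {"test_a": 8, "test_b": 7, "test_c": 5, "test_d": 4, "test_e": 1}
print(split_into_shards(durations, 2))
```

With these durations the two shards take 13 and 12 seconds; splitting the same five tests by count alone could easily leave one machine idle while the other runs most of the slow tests.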

Standardized Organization for Scale

Using consistent names, tags, and organization for tests helps teams track performance and keep quality high as they grow. Set clear rules for test types (like unit, integration, or smoke) and use names that show what each test checks. Analytics dashboards can spot flaky tests, slow tests, and common failures. This helps teams improve test suites and keep things running smoothly without slowing down developers.

Unit Test Example: From Code to Assertion

A good unit test uses the Arrange-Act-Assert pattern. For example, you might test a function that calculates order totals with discounts:

def test_apply_discount_to_order_total():
    # Arrange: Set up test data
    order = Order(items=[Item(price=100), Item(price=50)])
    discount = PercentageDiscount(10)

    # Act: Execute the function under test
    final_total = order.apply_discount(discount)

    # Assert: Verify expected outcome
    assert final_total == 135  # 150 - 10% discount

In the Arrange phase, you set up the objects and data you need. In the Act phase, you call the method you want to test. In the Assert phase, you check whether the result is what you expected.

Testing Edge Cases

Real-world code needs to handle more than just the usual cases. Your tests should also check edge cases and errors:

import pytest

def test_apply_discount_with_empty_cart_returns_zero():
    order = Order(items=[])
    discount = PercentageDiscount(10)
    assert order.apply_discount(discount) == 0

def test_apply_discount_rejects_negative_percentage():
    order = Order(items=[Item(price=100)])
    with pytest.raises(ValueError):
        PercentageDiscount(-5)

Notice the naming style: test_apply_discount_rejects_negative_percentage clearly shows what’s being tested and what should happen. If this test fails in your CI pipeline, you’ll know right away what went wrong, without searching through logs.
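The tests in this section assume `Order`, `Item`, and `PercentageDiscount` classes that the article does not show. Below is one hypothetical minimal implementation that satisfies all three tests, so you can run them locally; the class names come from the tests, but everything inside them is an assumption.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Item:
    price: float


class PercentageDiscount:
    """Hypothetical discount type; rejects percentages outside 0-100."""

    def __init__(self, percent):
        if not 0 <= percent <= 100:
            raise ValueError(f"percent must be between 0 and 100, got {percent}")
        self.percent = percent

    def apply(self, amount):
        # Multiply before dividing so whole-number inputs stay exact:
        # 150 * 90 / 100 == 135.0
        return amount * (100 - self.percent) / 100


@dataclass
class Order:
    items: list = field(default_factory=list)

    def apply_discount(self, discount):
        subtotal = sum(item.price for item in self.items)
        return discount.apply(subtotal)
```

Validating the percentage in the constructor is what lets the negative-percentage test expect the `ValueError` at `PercentageDiscount(-5)` rather than later, when the discount is applied.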

Benefits of Unit Testing: Building Confidence and Saving Time

When teams want faster builds and fewer late-stage bugs, the benefits of unit testing are clear. Good unit tests help speed up development and keep quality high.

- Catch regressions right away: Unit tests run in seconds and find breaking changes before they get to integration or production environments.

- Allow fearless refactoring: A strong set of tests gives you the confidence to change code without adding bugs you didn't expect.

- Cut down on costly debugging: Research shows that unit tests cover a lot of ground and find bugs early when fixing them is cheapest.

- Encourage modular design: Writing code that can be tested naturally leads to better separation of concerns and a cleaner architecture.

When you use smart test execution in modern CI/CD pipelines, these benefits get even bigger.

Disadvantages of Unit Testing: Recognizing the Trade-Offs

Unit testing is valuable, but knowing its limits helps teams choose the right testing strategies. These downsides matter most when you’re trying to make CI/CD pipelines faster and more cost-effective.

- Maintenance overhead grows as automated tests expand, requiring ongoing effort to update brittle or overly granular tests.

- False confidence occurs when high unit test coverage hides integration problems and system-level failures.

- Slow execution times can bottleneck CI pipelines when test collections take hours instead of minutes to complete.

- Resource allocation shifts developer time from feature work to test maintenance and debugging flaky tests.

- Coverage gaps appear in areas like GUI components, external dependencies, and complex state interactions.

Research shows that automatically generated tests can be harder to understand and maintain. Studies also show that statement coverage doesn’t always mean better bug detection.

Industry surveys show that many organizations have trouble with slow test execution and unclear ROI for unit testing. Smart teams solve these problems by choosing the right tests, using smart caching, and working with modern CI platforms that make testing faster and more reliable.

How Do Developers Use Unit Tests in Real Workflows?

Developers use unit tests in three main ways that affect build speed and code quality. These practices turn testing into a tool that catches problems early and saves time on debugging.

Test-Driven Development and Rapid Feedback Loops

In test-driven development (TDD), developers write unit tests before they write the code. This improves the design and cuts down on debugging. According to research, TDD finds 84% of new bugs, while traditional testing only finds 62%. The approach gives you immediate feedback, and failing tests tell you what to work on next.

Regression Prevention and Bug Validation

Unit tests are like automated guards that catch bugs when code changes. Developers write tests to recreate bugs that have been reported, and then they check that the fixes work by running the tests again after the fixes have been made. Automated tools now generate test cases from issue reports. They are 30.4% successful at making tests that fail for the exact problem that was reported. To stop bugs that have already been fixed from coming back, teams run these regression tests in CI pipelines.
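A regression test typically reproduces the reported failure first and then stays in the suite to guard the fix. In the sketch below, the bug (quantities with a thousands separator such as "1,200" raising `ValueError`) and the `parse_quantity` function are hypothetical, chosen only to show the pattern.

```python
def parse_quantity(text):
    """Hypothetical parser. The reported bug: "1,200" raised ValueError
    because int() does not accept thousands separators."""
    return int(text.strip().replace(",", ""))


def test_parse_quantity_accepts_thousands_separator():
    # Reproduces the reported input exactly; fails before the fix, passes after.
    assert parse_quantity("1,200") == 1200


def test_parse_quantity_plain_number_still_works():
    # Guards existing behavior so the fix does not break the common case.
    assert parse_quantity("42") == 42
```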

Strategic Focus on Business Logic and Public APIs

Good developer testing doesn't look at infrastructure or glue code; it looks at business logic, edge cases, and public interfaces. Testing public methods and properties is best; private details that change often should be left out. Test doubles help developers keep business logic separate from systems outside of their control, which makes tests more reliable. Integration and system tests are better for checking how parts work together, especially when it comes to things like database connections and full workflows.

Unit Testing Best Practices: Maximizing Value, Minimizing Pain

Slow, unreliable tests can slow down CI and hurt productivity, while also raising costs. The following proven strategies help teams check code quickly and cut both build times and cloud expenses.

- Write fast, isolated tests that run in milliseconds and avoid external dependencies like databases or APIs.

- Use descriptive test names that clearly explain the behavior being tested, not implementation details.

- Run only relevant tests using selective execution to cut cycle times by up to 80%.

- Monitor test health with failure analytics to identify flaky or slow tests before they impact productivity.

- Refactor tests regularly alongside production code to prevent technical debt and maintain suite reliability.

Types of Unit Testing: Manual vs. Automated

Choosing between manual and automated unit testing directly affects how fast and reliable your pipeline is.

Manual Unit Testing: Flexibility with Limitations

Manual unit testing means developers write and run tests by hand, usually early in development or when checking tricky edge cases that need human judgment. This works for old systems where automation is hard or when you need to understand complex behavior. But manual testing can’t be repeated easily and doesn’t scale well as projects grow.

Automated Unit Testing: Speed and Consistency at Scale

Automated testing transforms test execution into fast, repeatable processes that integrate seamlessly with modern development workflows. Modern platforms leverage AI-powered optimization to run only relevant tests, cutting cycle times significantly while maintaining comprehensive coverage.

Why High-Velocity Teams Prioritize Automation

Fast-moving teams use automated unit testing to keep up speed and quality. Manual testing is still useful for exploring and handling complex cases, but automation handles the repetitive checks that make deployments reliable and regular.

Difference Between Unit Testing and Other Types of Testing

Knowing the difference between unit, integration, and other test types helps teams build faster and more reliable CI/CD pipelines. Each type has its own purpose and trade-offs in speed, cost, and confidence.

Unit Tests: Fast and Isolated Validation

Unit tests are the foundation of your testing strategy. They exercise single functions, methods, or classes without touching any outside systems. Because they never wait on databases or networks, thousands of them can run in a few minutes on a good machine, unaffected by failures in those systems, which gives you the fastest feedback in your pipeline.

Integration Tests: Validating Component Interactions

Integration testing makes sure that the different parts of your system work together. Integration tests come in two main forms: narrow tests that use test doubles to check specific interactions (like testing an API client against a mock service), and broad tests that use real services (like checking your payment flow against real payment processors). Broad integration tests in particular exercise real infrastructure, so they find problems that unit tests can miss.

End-to-End Tests: Complete User Journey Validation

End-to-end tests sit at the top of the testing pyramid. They simulate complete user journeys through your app. They offer the highest confidence that the system works as a whole, but they are slow to run, hard to debug, and often brittle. A bug that a unit test catches in seconds can take days to track down through a failing end-to-end test.

The Test Pyramid: Balancing Speed and Coverage

The best testing strategy uses a pyramid: many small, fast unit tests at the bottom, some integration tests in the middle, and just a few end-to-end tests at the top.

Workflow of Unit Testing in CI/CD Pipelines

Modern development teams use a unit testing workflow that balances speed and quality. Understanding this process helps teams spot bottlenecks and find ways to speed up builds while keeping code reliable.

The Standard Development Cycle

Developers write code and run unit tests on their own machines before pushing changes. Running tests locally catches bugs early, when they are easiest to fix; the code then goes to version control, where CI pipelines take over. This step-by-step process keeps developers productive.

Automated CI Pipeline Execution

Once code is in the pipeline, automation tools run unit tests on every commit. If a test fails, the pipeline blocks deployment and notifies developers right away, which stops bad code from reaching production. Research shows this approach can cut critical defects by 40% and speed up deployments.

Accelerating the Workflow

To speed this process up, modern CI platforms use Test Intelligence to run only the tests affected by a code change. Parallel testing runs groups of tests in separate environments at the same time. Smart caching saves dependencies and build artifacts so the same work isn't repeated on every run. Together, these techniques keep coverage high while lowering infrastructure costs.
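
As a rough illustration of selecting affected tests, the sketch below maps changed files to the tests that exercised them on a previous run. It is not how Harness Test Intelligence works internally; it assumes a precomputed coverage map (the hypothetical coverageMap, from test file to the source files it touched) and falls back to running everything when a changed file has no recorded coverage, since skipping a test is only safe when the map can vouch for it.

```typescript
// Hypothetical coverage map: test file -> source files it executed on a previous run.
type CoverageMap = Record<string, string[]>;

// Select the tests whose recorded coverage overlaps the changed files.
// If any changed file is not covered by any known test (for example a new file),
// fall back to the full suite, because we cannot prove the skipped tests are unaffected.
function selectTests(coverageMap: CoverageMap, changedFiles: string[]): string[] {
  const allTests = Object.keys(coverageMap);
  const coveredFiles = new Set(Object.values(coverageMap).flat());

  if (changedFiles.some((file) => !coveredFiles.has(file))) {
    return allTests;
  }

  const changed = new Set(changedFiles);
  return allTests.filter((test) => coverageMap[test].some((file) => changed.has(file)));
}

const coverageMap: CoverageMap = {
  "cart.test.ts": ["src/cart.ts", "src/pricing.ts"],
  "pricing.test.ts": ["src/pricing.ts"],
  "auth.test.ts": ["src/auth.ts"],
};

console.log(selectTests(coverageMap, ["src/pricing.ts"])); // [ 'cart.test.ts', 'pricing.test.ts' ]
```

Real test-selection tools also have to cope with renamed files, build-configuration changes, and stale coverage data, which is why a conservative fallback like the one above matters.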

Results Analysis and Continuous Improvement

Teams analyze test results through dashboards that track failure rates, execution times, and coverage trends. Analytics platforms surface patterns like flaky tests or slow-running suites that need attention. This data drives decisions about test prioritization, infrastructure scaling, and process improvements. Regular analysis ensures the unit testing approach continues to deliver value as codebases grow and evolve.
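
One common heuristic for spotting flaky tests is to look for tests that both passed and failed on the same commit: the code did not change, but the outcome did. The TestRun record shape below is a hypothetical format, not the schema of any particular analytics platform.

```typescript
// Hypothetical shape of one recorded test execution.
interface TestRun {
  test: string;
  commit: string;
  passed: boolean;
}

// A test is flagged as flaky if, for at least one commit, it has both a
// passing and a failing run.
function findFlakyTests(runs: TestRun[]): string[] {
  // test -> commit -> set of observed outcomes
  const outcomes = new Map<string, Map<string, Set<boolean>>>();
  for (const run of runs) {
    const byCommit = outcomes.get(run.test) ?? new Map<string, Set<boolean>>();
    const seenOutcomes = byCommit.get(run.commit) ?? new Set<boolean>();
    seenOutcomes.add(run.passed);
    byCommit.set(run.commit, seenOutcomes);
    outcomes.set(run.test, byCommit);
  }

  const flaky: string[] = [];
  for (const [test, byCommit] of outcomes) {
    // More than one distinct outcome on the same commit means nondeterminism.
    if ([...byCommit.values()].some((s) => s.size > 1)) {
      flaky.push(test);
    }
  }
  return flaky.sort();
}

const runHistory: TestRun[] = [
  { test: "checkout", commit: "a1", passed: true },
  { test: "checkout", commit: "a1", passed: false }, // same commit, different outcome
  { test: "login", commit: "a1", passed: false },
  { test: "login", commit: "b2", passed: true }, // outcome changed along with the code: not flaky
];

console.log(findFlakyTests(runHistory)); // [ 'checkout' ]
```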

Unit Testing Techniques: Tools for Reliable, Maintainable Tests

Using the right unit testing techniques can turn unreliable tests into a reliable way to speed up development. These proven methods help teams trust their code and keep CI pipelines running smoothly:

- Replace slow external dependencies with controllable test doubles that run consistently.

- Generate hundreds of test cases automatically to find edge cases you'd never write manually.

- Run identical test logic against multiple inputs to expand coverage without extra maintenance.

- Capture complex output snapshots to catch unintended changes in data structures.

- Verify behavior through isolated components that focus tests on your actual business logic.

These methods work together to build test suites that catch real bugs and stay easy to maintain as your codebase grows.

Isolation Through Test Doubles

As the CI/CD workflow discussion showed, good unit testing starts with isolation: testing your code without relying on outside systems that may be slow or unavailable. Dependency injection helps here because it lets you pass in test doubles in place of real dependencies when tests run.

Choosing the right test double is easier once you know how they differ. Fakes are simple working implementations, such as an in-memory database. Stubs return canned data, which makes them useful for testing queries. Mocks record the interactions they receive, so you can verify that commands were issued as expected.
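
To make the three kinds of doubles concrete, here is a small sketch using constructor-based dependency injection. OrderService, OrderRepository, PriceSource, and Notifier are hypothetical names invented for this illustration. Because the service depends only on interfaces, a test can hand it a fake, a stub, and a mock instead of a real database, pricing API, or email client.

```typescript
// Dependencies are expressed as interfaces so tests can substitute doubles.
interface OrderRepository {
  save(id: string, total: number): void;
  find(id: string): number | undefined;
}
interface PriceSource {
  priceOf(sku: string): number;
}
interface Notifier {
  send(message: string): void;
}

// Code under test: it receives its dependencies instead of constructing them.
class OrderService {
  private readonly repo: OrderRepository;
  private readonly prices: PriceSource;
  private readonly notifier: Notifier;

  constructor(repo: OrderRepository, prices: PriceSource, notifier: Notifier) {
    this.repo = repo;
    this.prices = prices;
    this.notifier = notifier;
  }

  placeOrder(id: string, skus: string[]): number {
    const total = skus.reduce((sum, sku) => sum + this.prices.priceOf(sku), 0);
    this.repo.save(id, total);
    this.notifier.send(`Order ${id} placed: ${total}`);
    return total;
  }
}

// Fake: a simple working implementation (in memory instead of a real database).
class InMemoryOrderRepository implements OrderRepository {
  private readonly orders = new Map<string, number>();
  save(id: string, total: number): void {
    this.orders.set(id, total);
  }
  find(id: string): number | undefined {
    return this.orders.get(id);
  }
}

// Stub: returns canned data, so every price lookup is predictable.
const fixedPrices: PriceSource = { priceOf: () => 10 };

// Mock: records the calls it receives so the test can verify the command was sent.
class RecordingNotifier implements Notifier {
  readonly messages: string[] = [];
  send(message: string): void {
    this.messages.push(message);
  }
}

const repo = new InMemoryOrderRepository();
const notifier = new RecordingNotifier();
const service = new OrderService(repo, fixedPrices, notifier);

const total = service.placeOrder("o-1", ["apple", "pear"]);
console.log(total, repo.find("o-1"), notifier.messages); // 20 20 [ 'Order o-1 placed: 20' ]
```

The test never touches a network or database, so it runs in milliseconds and produces the same result every time.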

Isolation keeps tests fast and deterministic no matter when or where they run. With good isolation, teams see tests run 60% faster and far fewer of the flaky failures that slow development down.

Expanding Coverage with Smart Generation

Beyond isolation, teams need ways to expand test coverage without extra effort. Property-based testing lets you state rules (properties) that should always hold, then automatically generates hundreds of test cases to check them. It is especially good at uncovering edge cases and boundary conditions that hand-written tests miss.
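
Libraries such as fast-check handle input generation, and shrinking of failing inputs, for you in TypeScript. To show the underlying idea without a dependency, here is a deliberately minimal hand-rolled checker; makeRandom, randomIntArray, and checkProperty are illustrative helpers, not a library API. It generates random integer arrays from a fixed seed, so any failure is reproducible, and checks two properties of a sort function.

```typescript
// Minimal seeded linear congruential generator, so a failing run can be replayed from its seed.
function makeRandom(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state * 1664525 + 1013904223) % 4294967296;
    return state / 4294967296; // float in [0, 1)
  };
}

// Generate an array of 0..20 integers, each in [-100, 100].
function randomIntArray(random: () => number): number[] {
  const length = Math.floor(random() * 21);
  return Array.from({ length }, () => Math.floor(random() * 201) - 100);
}

// Run a property against many generated inputs; throw on the first counterexample.
function checkProperty(
  name: string,
  property: (input: number[]) => boolean,
  runs = 200,
  seed = 42,
): void {
  const random = makeRandom(seed);
  for (let i = 0; i < runs; i++) {
    const input = randomIntArray(random);
    if (!property(input)) {
      throw new Error(`Property "${name}" failed for ${JSON.stringify(input)} (seed ${seed})`);
    }
  }
}

// Function under test.
const sortNumbers = (xs: number[]): number[] => [...xs].sort((a, b) => a - b);

// Property 1: the output is in non-decreasing order.
checkProperty("output is ordered", (xs) => {
  const out = sortNumbers(xs);
  return out.every((value, i) => i === 0 || out[i - 1] <= value);
});

// Property 2: sorting keeps the length and leaves the input array untouched.
checkProperty("length preserved, input not mutated", (xs) => {
  const before = JSON.stringify(xs);
  const out = sortNumbers(xs);
  return out.length === xs.length && JSON.stringify(xs) === before;
});
```

Unlike this sketch, real property-based libraries shrink a failing input to a minimal counterexample before reporting it.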

Parameterized testing gives you similar benefits, but you have more control over the inputs. You don't have to write extra code to run the same test with different data. Tools like xUnit's Theory and InlineData make this possible. This helps find more bugs and makes it easier to keep track of your test suite.
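
In TypeScript test runners the same idea usually appears as a table of cases (Jest's test.each, for example). Below is a dependency-free sketch of the pattern: one shared test body driven by rows of input and expected output. slugify is a hypothetical function under test.

```typescript
// Hypothetical function under test.
function slugify(title: string): string {
  return title
    .trim()
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // collapse runs of non-alphanumerics into one hyphen
    .replace(/^-+|-+$/g, ""); // strip leading/trailing hyphens
}

// Each row is one case: adding coverage means adding a row, not writing a new test.
const cases: Array<{ input: string; expected: string }> = [
  { input: "Hello World", expected: "hello-world" },
  { input: "  Trim me  ", expected: "trim-me" },
  { input: "CI/CD & GitOps", expected: "ci-cd-gitops" },
  { input: "already-slugged", expected: "already-slugged" },
  { input: "", expected: "" },
];

// One shared test body, run once per row; each failure names the offending input.
function runCases(): string[] {
  const failures: string[] = [];
  for (const { input, expected } of cases) {
    const actual = slugify(input);
    if (actual !== expected) {
      failures.push(`slugify(${JSON.stringify(input)}) = ${JSON.stringify(actual)}, expected ${JSON.stringify(expected)}`);
    }
  }
  return failures;
}

console.log(runCases()); // []
```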

Both techniques work best when combined with selective test execution. Platforms that know which tests are affected by each code change run only those tests, so you keep full coverage without slowing the pipeline down.

Verifying Complex Outputs

The last technique addresses complex outputs, such as JSON responses or generated code. Golden tests and snapshot tests simplify this by saving the expected output as reference files, so you don't have to write complicated field-by-field assertions.

If your code’s output changes, the test fails and shows what’s different. This makes it easy to spot mistakes, and you can approve real changes by updating the snapshot. This method works well for testing APIs, config generators, or any code that creates structured output.
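
The mechanics of a snapshot check are simple enough to sketch directly. Real tools (Jest's toMatchSnapshot, for example) store snapshots in files next to the tests; this sketch keeps them in an in-memory map, and matchSnapshot and buildConfig are hypothetical names for illustration. Object keys are sorted before comparison so that harmless key reordering does not break the snapshot.

```typescript
// Hypothetical stand-in for snapshot files on disk: snapshot name -> stored output.
const snapshots = new Map<string, string>();

// Stable, readable serialization: object keys are sorted at every level,
// so reordering keys in the output does not count as a change.
function stableStringify(value: unknown): string {
  return JSON.stringify(
    value,
    (_key: string, val: unknown) =>
      typeof val === "object" && val !== null && !Array.isArray(val)
        ? Object.fromEntries(Object.entries(val).sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0)))
        : val,
    2,
  );
}

interface SnapshotResult {
  pass: boolean;
  diff?: string;
}

// Compare output against the stored snapshot; the first run (or an explicit
// update) records the output as the new golden value.
function matchSnapshot(name: string, output: unknown, update = false): SnapshotResult {
  const actual = stableStringify(output);
  const stored = snapshots.get(name);
  if (stored === undefined || update) {
    snapshots.set(name, actual);
    return { pass: true };
  }
  if (stored === actual) {
    return { pass: true };
  }
  // Not a line-level diff: both versions side by side for review.
  return { pass: false, diff: `--- snapshot\n${stored}\n+++ received\n${actual}` };
}

// Hypothetical config generator under test.
function buildConfig(env: string) {
  return { env, image: "app:1.4.2", replicas: env === "prod" ? 3 : 1 };
}

matchSnapshot("config-prod", buildConfig("prod")); // first run: records the snapshot
console.log(matchSnapshot("config-prod", buildConfig("prod")).pass); // true
console.log(matchSnapshot("config-prod", { ...buildConfig("prod"), replicas: 5 }).pass); // false
```

Approving an intentional change corresponds to calling matchSnapshot with update set to true, which mirrors the "update snapshots" step in real tools.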

Teams that use full automated testing frameworks see code coverage go up by 32.8% and catch 74.2% more bugs per build. Golden tests help by making it easier to check complex cases that would otherwise need manual testing.

The main thing is to balance thoroughness with easy maintenance. Golden tests should check real behavior, not details that change often. When you get this balance right, you’ll spend less time fixing bugs and more time building features.

Unit Testing Tools: Frameworks That Power Modern Teams

Picking the right unit testing tools helps your team write tests efficiently, instead of wasting time on flaky tests or slow builds. The best frameworks work well with your language and fit smoothly into your CI/CD process.

- JUnit and TestNG dominate Java environments, with TestNG offering advanced features like parallel execution and seamless pipeline integration.

- pytest leads Python testing environments with powerful fixtures and minimal boilerplate, making it ideal for teams prioritizing developer experience.

- Jest provides zero-configuration testing for JavaScript/TypeScript projects, with built-in mocking and snapshot capabilities.

- RSpec delivers behavior-driven development for Ruby teams, emphasizing readable test specifications.

Modern teams use these frameworks along with CI platforms that offer analytics and automation. This mix of good tools and smart processes turns testing from a bottleneck into a productivity boost.

Transform Your Development Velocity Today

Smart unit testing can turn CI/CD from a bottleneck into an advantage. When tests are fast and reliable, developers spend less time waiting and more time releasing code. Harness Continuous Integration uses Test Intelligence, automated caching, and isolated build environments to speed up feedback without losing quality.

Want to speed up your team? Explore Harness CI and see what's possible.

Powering Harness Executions Page: Inside Our Flexible Filters Component

Filtering data is at the heart of developer productivity. Whether you’re looking for failed builds, debugging a service or analysing deployment patterns, the ability to quickly slice and dice execution data is critical.

At Harness, users across CI, CD and other modules rely on filtering to navigate complex execution data by status, time range, triggers, services and much more. While our legacy filtering worked, it had major pain points — hidden drawers, inconsistent behaviour and lost state on refresh — that slowed both developers and users.

This blog dives into how we built a new Filters component system in React: a reusable, type-safe and feature-rich framework that powers the filtering experience on the Execution Listing page (and beyond).


The Starting Point: Challenges with Our Legacy Filters

Our old implementation revealed several weaknesses as Harness scaled:

- Poor Discoverability and UX: Filters were hidden in a side panel, disrupting workflow and making applied filters non-glanceable. Users didn’t get feedback until the filter was applied/saved.

- Inconsistency Across Modules: Custom logic in modules like CI and CD led to confusing behavioural differences.

- High Developer Overhead: Adding new filters was cumbersome, requiring edits to multiple files with brittle boilerplate.

These problems shaped our success criteria: discoverability, smooth UX, consistent behaviour, reusable design and decoupled components.

The Evolution of Filters: A Design Journey

Building a truly reusable and powerful filtering system required exploration and iteration. Our journey involved several key stages and learning from the pitfalls of each:

Iteration 1: React Components (Conditional Rendering)

We shifted to React functional components but kept the logic centralised in the FilterFramework component. Each filter was conditionally rendered based on a visibleFilters array, and the framework fetched filter options and passed them down as props.

```
COMPONENT FilterFramework:
  STATE activeFilters = {}
  STATE visibleFilters = []
  STATE filterOptions = {}

  ON visibleFilters CHANGE:
    FOR EACH filter IN visibleFilters:
      IF filterOptions[filter] NOT EXISTS:
        options = FETCH filterData(filter)
        filterOptions[filter] = options

  ON activeFilters CHANGE:
    makeAPICall(activeFilters)

  RENDER:
    <AllFilters setVisibleFilters={setVisibleFilters} />

    IF 'services' IN visibleFilters:
      <DropdownFilter
        name="Services"
        options={filterOptions.services}
        onAdd={updateActiveFilters}
        onRemove={removeFromVisible}
      />

    IF 'environments' IN visibleFilters:
      <DropdownFilter ... />
```

Pitfalls: Adding new filters required changes in multiple places, creating a maintenance nightmare and poor developer experience. The framework had minimal control over filter implementation, lacked proper abstraction and scattered filter logic across the codebase, making it neither “stupid-proof” nor scalable.

Iteration 2: React.cloneElement Pattern

This iteration improved on the previous approach by accepting filters as children and using React.cloneElement to inject callbacks (onAdd, onRemove) from the parent framework, which gave developers a cleaner API for adding filters.

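```jsx
// Inside the framework's render: clone each visible child and inject callbacks.
// React.Children.map (not forEach) is needed so the cloned elements are returned.
const renderedFilters = React.Children.map(children, (child) => {
  if (!React.isValidElement(child) || !visibleFilters.includes(child.props.filterKey)) {
    return null;
  }
  return React.cloneElement(child, {
    onAdd: (label, value) => {
      activeFilters[child.props.filterKey].push({ label, value });
    },
    onRemove: () => {
      delete activeFilters[child.props.filterKey];
    },
  });
});
```

Pitfalls: Cloning every visible child on each render, with freshly created callbacks, caused performance issues under frequent re-renders, and the React documentation lists React.cloneElement among its legacy APIs, warning that it can lead to fragile code. The approach also tightly coupled filters to the framework’s callback signature, made prop flow implicit and difficult to debug, and created type-safety issues, since TypeScript struggles with dynamically injected props.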

Final Solution: Context API

The winning design uses the React Context API to provide filter state and actions to child components. Individual filters access setValue and removeFilter via the useFiltersContext() hook. This decouples filters from the framework while the framework stays in control.

```
COMPONENT Filters({ children, onChange }):
  STATE filtersMap = {}    // { search: { value, query, state } }
  STATE filtersOrder = []  // ['search', 'status']

  FUNCTION updateFilter(key, newValue):
    serialized = parser.serialize(newValue)  // Type → String
    filtersMap[key] = { value: newValue, query: serialized }
    updateURL(serialized)
    onChange(allValues)

  ON URL_CHANGE:
    parsed = parser.parse(urlString)  // String → Type
    filtersMap[key] = { value: parsed, query: urlString }

  RENDER:
    <Context.Provider value={{ updateFilter, filtersMap }}>
      {children}
    </Context.Provider>
END COMPONENT
```

Benefits: This solution eliminated the performance overhead of cloneElement, decoupled filters from framework internals and made it easy to add new filters without touching framework code. The Context API provides clear data flow that’s easy to debug and test, with type safety through TypeScript.

Inversion of Control (IoC)

The Context API in React unlocks something truly powerful — Inversion of Control (IoC). This design principle is about delegating control to a framework instead of managing every detail yourself. It’s often summed up by the Hollywood Principle: “Don’t call us, we’ll call you.”

In React, this translates to building flexible components that let the consumer decide what to render, while the component itself handles how and when it happens.

Our Filters framework applies this principle: you don’t have to manage when to update state or synchronise the URL. You simply define your filter components and the framework orchestrates the rest — ensuring seamless, predictable updates without manual intervention.

How Filters Inverts Control

Our Filters framework demonstrates Inversion of Control in three key ways.

- Logic via Props: The framework doesn’t know how to save filters or fetch data — the parent injects those functions. The framework decides when to call them, but the parent defines what they do.

- Content via Children (Composition): The parent decides which filters to render.

- Actions via Callbacks: The framework triggers callbacks when users type, select or apply filters, but it’s your code that decides what happens next — fetch data, update cache or send analytics.
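
Stripped of React, the three mechanisms above can be sketched as a plain TypeScript class: the consumer injects what to do (how to load options, what to do on change), and the framework decides when to do it. FilterFramework, loadOptions, and onChange here are hypothetical names for illustration, not the actual Harness API, and option loading is kept synchronous for brevity where a real app would fetch asynchronously.

```typescript
type FilterValues = Record<string, unknown>;

// What the consumer injects: the framework never knows how these work.
interface FilterCallbacks {
  loadOptions: (filterKey: string) => string[]; // e.g. look up services for a dropdown
  onChange: (values: FilterValues) => void; // e.g. refetch the table, send analytics
}

class FilterFramework {
  private values: FilterValues = {};
  private readonly options = new Map<string, string[]>();
  private readonly callbacks: FilterCallbacks;

  constructor(callbacks: FilterCallbacks) {
    this.callbacks = callbacks;
  }

  // The framework decides WHEN to load options: only the first time a filter is shown.
  show(filterKey: string): string[] {
    let opts = this.options.get(filterKey);
    if (opts === undefined) {
      opts = this.callbacks.loadOptions(filterKey);
      this.options.set(filterKey, opts);
    }
    return opts;
  }

  // The framework decides WHEN to notify: after every value change, with a copy of all values.
  setValue(filterKey: string, value: unknown): void {
    this.values = { ...this.values, [filterKey]: value };
    this.callbacks.onChange({ ...this.values });
  }
}

// Consumer code defines WHAT happens.
let loadCalls = 0;
const received: FilterValues[] = [];
const filters = new FilterFramework({
  loadOptions: (key) => {
    loadCalls++;
    return key === "status" ? ["Success", "Failed"] : [];
  },
  onChange: (values) => received.push(values),
});

filters.show("status");
filters.show("status"); // cached: loadOptions is not called again
filters.setValue("status", "Failed");
console.log(loadCalls, received); // 1 [ { status: 'Failed' } ]
```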

The result? A single, reusable Filters component that works across pipelines, services, deployments or repositories. By separating UI logic from business logic, we gain flexibility, testability and cleaner architecture — the true power of Inversion of Control.

```
COMPONENT DemoPage:
  STATE filterValues
  FilterHandler = createFilters()

  FUNCTION applyFilters(data, filters):
    result = data
    IF filters.onlyActive == true:
      result = result WHERE item.status == "Active"
    RETURN result

  filteredData = applyFilters(SAMPLE_DATA, filterValues)

  RENDER:
    <RouterContextProvider>
      <FilterHandler onChange = (updatedFilters) => SET filterValues = updatedFilters>
        // Dropdown to add filters dynamically
        <FilterHandler.Dropdown>
          RENDER FilterDropdownMenu with available filters
        </FilterHandler.Dropdown>

        // Active filters section
        <FilterHandler.Content>
          <FilterHandler.Component parser = booleanParser filterKey = "onlyActive">
            RENDER CustomActiveOnlyFilter
          </FilterHandler.Component>
        </FilterHandler.Content>
      </FilterHandler>

      RENDER DemoTable(filteredData)
    </RouterContextProvider>
END COMPONENT
```

The URL Problem

One of the key technical challenges in building a filtering system is URL synchronization. URLs can only carry strings, yet our applications deal with rich data types such as dates, booleans, and arrays. Without a structured solution, each component would need to convert these values manually, leading to repetitive, error-prone code.

The solution is our parser interface, a lightweight abstraction with just two methods: parse and serialize.

parse converts a URL string into the type your app needs. serialize does the opposite, turning that typed value back into a string for the URL.

This bidirectional system runs automatically — parsing when filters load from the URL and serialising when users update filters.
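
The same two-method contract extends to richer types. The sketch below assumes a Parser<T> interface shaped like the booleanParser example in this post and adds hypothetical parsers for dates and multi-select arrays; the ISO-8601 date format and the comma-separated, URI-encoded array format are illustrative choices, not necessarily what the Harness framework uses.

```typescript
// Assumed shape of the parser contract: string <-> typed value.
interface Parser<T> {
  parse: (value: string) => T;
  serialize: (value: T) => string;
}

// Dates travel through the URL as ISO-8601 strings.
const dateParser: Parser<Date> = {
  parse: (value) => new Date(value),
  serialize: (value) => value.toISOString(),
};

// Multi-select values travel as a comma-separated list; each item is
// URI-encoded first, so an item that itself contains a comma is not split.
const stringArrayParser: Parser<string[]> = {
  parse: (value) => (value === "" ? [] : value.split(",").map(decodeURIComponent)),
  serialize: (value) => value.map(encodeURIComponent).join(","),
};

// Usage: write typed values into query params, then read them back.
const params = new URLSearchParams();
params.set("status", stringArrayParser.serialize(["Success", "Failed, retried"]));
params.set("since", dateParser.serialize(new Date(Date.UTC(2025, 10, 11))));

const fromUrl = new URLSearchParams(params.toString());
console.log(stringArrayParser.parse(fromUrl.get("status") ?? "")); // [ 'Success', 'Failed, retried' ]
console.log(dateParser.parse(fromUrl.get("since") ?? "").toISOString()); // 2025-11-11T00:00:00.000Z
```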

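```typescript
// Assumed shape of the Parser<T> interface used below.
interface Parser<T> {
  parse: (value: string) => T
  serialize: (value: T) => string
}

const booleanParser: Parser<boolean> = {
  parse: (value: string) => value === 'true', // "true" → true
  serialize: (value: boolean) => String(value) // true → "true"
}
```

FiltersMap — The State Hub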

At the heart of our framework lies the FiltersMap — a single, centralized object that holds the complete state of all active filters. It acts as the bridge between your React components and the browser, keeping UI state and URL state perfectly in sync.

Each entry in the FiltersMap contains three key fields:

- Value — the parsed, typed data your components actually use (e.g. Date objects, arrays, booleans).

- Query — the serialized string representation that’s written to the URL.

- State — the filter’s lifecycle status: hidden, visible or actively filtering.

You might ask — why store both the typed value and its string form? The answer is performance and reliability. If we only stored the URL string, every re-render would require re-parsing, which quickly becomes inefficient for complex filters like multi-selects. By storing both, we parse only once — when the value changes — and reuse the typed version afterward. This ensures type safety, faster URL synchronization and a clean separation between UI behavior and URL representation. The result is a system that’s predictable, scalable, and easy to maintain.

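```typescript
// Assumed definition of the three lifecycle states named above.
type FilterStatus = 'VISIBLE' | 'FILTER_APPLIED' | 'HIDDEN'

interface FilterType<T = any> {
  value?: T // The actual filter value
  query?: string // Serialized string for URL
  state: FilterStatus // Lifecycle status of the filter
}
```

The Journey of a Filter Value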

Let’s trace how a filter value moves through the system — from user interaction to URL synchronization.

It all starts when a user interacts with a filter component — for example, selecting a date. This triggers an onChange event with a typed value, such as a Date object. Before updating the state, the parser’s serialize method converts that typed value into a URL-safe string.

The framework then updates the FiltersMap with both versions:

- the typed value under the value field, and

- the serialized string under the query field.

From here, two things happen simultaneously:

- The onChange callback fires, passing typed values back to the parent component and allowing the app to immediately fetch data or update visualizations.

- The URL updates using the serialized query string, keeping the browser’s address bar in sync and making the current filter state instantly shareable or bookmarkable.

The reverse flow works just as seamlessly. When the URL changes — say, the user clicks the back button — the parser’s parse method converts the string back into a typed value, updates the FiltersMap and triggers a re-render of the UI.
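
The round trip described above can be sketched without React as a small store: writes serialize once and keep both the typed value and the query string, and URL changes parse only the keys whose string actually changed. This is an illustrative sketch under stated assumptions: FiltersStore and syncFromUrl are hypothetical names, URLSearchParams stands in for the router, and in the real framework this logic sits behind the Context provider.

```typescript
interface Parser<T> {
  parse: (value: string) => T;
  serialize: (value: T) => string;
}

// Assumed lifecycle values, matching the three states named in this post.
type FilterStatus = "HIDDEN" | "VISIBLE" | "FILTER_APPLIED";

interface FilterEntry {
  value?: unknown; // typed value handed to components and onChange
  query?: string; // serialized form written to the URL
  state: FilterStatus;
}

type Values = Record<string, unknown>;

class FiltersStore {
  private map: Record<string, FilterEntry> = {};
  private readonly parsers: Record<string, Parser<any>>;
  private readonly onChange: (values: Values) => void;
  url = new URLSearchParams(); // stand-in for the browser's query string

  constructor(parsers: Record<string, Parser<any>>, onChange: (values: Values) => void) {
    this.parsers = parsers;
    this.onChange = onChange;
  }

  // UI -> state -> URL: serialize once, keep both forms, then notify the parent.
  updateFilter(key: string, value: unknown): void {
    const query = this.parsers[key].serialize(value);
    this.map[key] = { value, query, state: "FILTER_APPLIED" };
    this.url.set(key, query);
    this.onChange(this.values());
  }

  // URL -> state (e.g. the Back button): parse only keys whose query string changed,
  // reuse the cached typed value otherwise, and drop filters missing from the URL.
  syncFromUrl(search: string): void {
    const incoming = new URLSearchParams(search);
    for (const key of Object.keys(this.parsers)) {
      const query = incoming.get(key);
      if (query === null) {
        delete this.map[key];
      } else if (this.map[key]?.query !== query) {
        this.map[key] = { value: this.parsers[key].parse(query), query, state: "FILTER_APPLIED" };
      }
    }
    this.url = incoming;
    this.onChange(this.values());
  }

  values(): Values {
    const result: Values = {};
    for (const [key, entry] of Object.entries(this.map)) {
      result[key] = entry.value;
    }
    return result;
  }
}

const booleanParser: Parser<boolean> = {
  parse: (value) => value === "true",
  serialize: (value) => String(value),
};

const seen: Values[] = [];
const store = new FiltersStore({ onlyActive: booleanParser }, (values) => seen.push(values));

store.updateFilter("onlyActive", true);
console.log(store.url.toString()); // onlyActive=true

store.syncFromUrl("?onlyActive=false"); // e.g. the user pressed Back
console.log(seen); // [ { onlyActive: true }, { onlyActive: false } ]
```

Caching the query string next to the typed value is what lets syncFromUrl skip re-parsing unchanged filters, which is the performance argument made for the FiltersMap earlier in this post.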

All of this happens within milliseconds, enabling a smooth, bidirectional synchronization between the application state and the URL — a crucial piece of what makes the Filters framework feel so effortless.

Conclusion

For teams tackling similar challenges — complex UI state management, URL synchronization and reusable component design — this architecture offers a practical blueprint to build upon. The patterns used are not specific to Harness; they are broadly applicable to any modern frontend system that requires scalable, stateful and user-driven filtering.

The team’s core objectives — discoverability, smooth UX, consistent behavior, reusable design and decoupled elements — directly shaped every architectural decision. Through Inversion of Control, the framework manages the when and how of state updates, lifecycle events and URL synchronization, while developers define the what — business logic, API calls and filter behavior.

By treating the URL as part of the filter state, the architecture enables shareability, bookmarkability and native browser history support. The Context API serves as the control distribution layer, removing the need for prop drilling and allowing deeply nested components to seamlessly access shared logic and state.

Ultimately, Inversion of Control also paved the way for advanced capabilities such as saved filters, conditional rendering, and sticky filters — all while keeping the framework lightweight and maintainable. This approach demonstrates how clear objectives and sound architectural principles can lead to scalable, elegant solutions in complex UI systems.

Closing the Year Strong: Harness Q4 2025 Continuous Delivery & GitOps Update

Welcome back to the quarterly update series! Catch up on the latest Harness Continuous Delivery innovations and enhancements with this quarter's Q4 2025 release. For full context, check out our previous updates:

- Q1 2025 Product Update: All the Latest Features Delivered by Harness

- Unlocking Innovation: Harness Q2 Feature Releases That Accelerate Your DevOps Journey

- Harness Q3 2025 update on continuous delivery enhancements

Q4 2025 builds on last quarter's foundation of performance, observability, and governance with "big-swing" platform upgrades that make shipping across VMs/Kubernetes safer, streamline artifacts/secrets, and scale GitOps without operational drag.

Deployments

Google Cloud Managed Instance Groups (MIG)

Harness now supports deploying and managing Google Cloud Managed Instance Groups (MIGs), bringing a modern CD experience to VM-based workloads. Instead of stitching together instance templates, backend services, and cutovers in the GCP console, you can run repeatable pipelines that handle the full deployment lifecycle—deploy, validate, and recover quickly when something goes wrong.

For teams that want progressive delivery, Harness also supports blue-green deployments with Cloud Service Mesh traffic management. Traffic can be shifted between stable and stage environments using HTTPRoute or GRPCRoute, enabling controlled rollouts like 10% → 50% → 100% with checkpoints along the way. After the initial setup, Harness keeps the stable/stage model consistent and can swap roles once the new version is fully promoted, so you’re not re-planning the mechanics every release.

Learn more about MIG

Multi-account AWS CDK deployments

AWS CDK deployments can now target multiple AWS accounts using a single connector by overriding the region and assuming a step-level IAM role. This is a big quality-of-life improvement for orgs that separate “build” and “run” accounts or segment by business unit, but still want one standardized connector strategy.

Learn more about Multi-account AWS CDK deployments

Automated ECS blue-green traffic shifting

ECS blue-green deployments now auto-discover the correct stage target group when it’s not provided, selecting the target group with 0% traffic and failing fast if weights are ambiguous. This reduces the blast radius of a very real operational footgun: accidentally deploying into (and modifying) the live production target group during a blue/green cycle.

Learn more about automated ECS blue-green shifting
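
The selection rule described above (use the target group carrying no traffic, and fail fast if the weights don't single one out) can be sketched as a small function. This illustrates the rule as stated, not Harness's implementation; the TargetGroupWeight shape, the placeholder names tg-blue and tg-green, and the requirement of exactly one live and one idle group are assumptions.

```typescript
// Assumed input: the listener's forward-action weight for each target group.
interface TargetGroupWeight {
  arn: string; // placeholder identifiers here; real values are full ARNs
  weight: number; // relative weight; 0 means the group receives no traffic
}

// Returns the stage (idle) target group, or throws if the choice is ambiguous.
function findStageTargetGroup(groups: TargetGroupWeight[]): string {
  const idle = groups.filter((g) => g.weight === 0);
  const live = groups.filter((g) => g.weight > 0);
  // Blue-green expects exactly one live group and exactly one idle group;
  // anything else (e.g. a 50/50 split) is rejected rather than guessed.
  if (idle.length !== 1 || live.length !== 1) {
    throw new Error(
      `Ambiguous target group weights: ${groups.map((g) => `${g.arn}=${g.weight}`).join(", ")}`,
    );
  }
  return idle[0].arn;
}

console.log(
  findStageTargetGroup([
    { arn: "tg-blue", weight: 100 },
    { arn: "tg-green", weight: 0 },
  ]),
); // tg-green
```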

Azure WebApp API rate-limit resiliency

Improved resiliency for Azure WebApp workflows impacted by API rate limits, reducing flaky behavior and improving overall deployment stability in environments that hit throttling.

Harness Artifact Registry as a native source

HAR is now supported as a native artifact source for all CD deployment types except Helm, covering both container images and packaged artifacts (Maven, npm, NuGet, and generic). Artifact storage and consumption can live inside the same platform that orchestrates the deployment, which simplifies governance and reduces integration sprawl.

Because HAR is natively integrated, you don’t need a separate connector just to pull artifacts. Teams can standardize how artifacts are referenced, keep tagging/digest strategies consistent, and drive more predictable “what exactly are we deploying?” audits across environments.

Learn more about Harness Artifact Registry

GCP connector for Terraform steps

Terraform steps now support authenticating to GCP using GCP connector credentials, including Manual Credentials, Inherit From Delegate, and OIDC authentication methods. This makes it much easier to run consistent IaC workflows across projects without bespoke credential handling in every pipeline.

Learn more about GCP connector

AWS connector: AssumeRole session duration

AWS connectors now support configuring AssumeRole session duration for cross-account access. This is vital when you have longer-running operations (large Terraform applies, multi-region deployments, or complex blue/green flows) and want the session window to match reality.

Learn more about AWS connector

Terragrunt v1.x support (0.78.0+)

Harness now supports Terragrunt 0.78.0+ (including the v1.x line), with automatic detection and the correct command formats. If you’ve been waiting to upgrade Terragrunt without breaking pipeline behavior, this closes a major gap.

Learn more about Terragrunt

HashiCorp Vault JWT claim enhancements

Vault JWT auth now includes richer claims such as pipeline, connector, service, environment, and environment type identifiers. This enables more granular Vault policies, better secret isolation between environments, and cleaner multi-tenant setups.

Continuous Verification (CV)

Custom webhook notifications for verification sub-tasks

CV now supports custom webhook notifications for verification sub-tasks, sending real-time updates for data collection and analysis (with correlation IDs) and allowing delivery via Platform or Delegate. This is a strong building block for teams that want deeper automation around verification outcomes and richer external observability workflows.

Learn more about Custom webhook notifications

Cross-project GCP Operations health sources

You can now query metrics and logs from a different GCP project than the connector’s default by specifying a project ID. This reduces connector sprawl in multi-project organizations and keeps monitoring setups aligned with how GCP estates are actually structured.

Learn more about cross-project GCP Operations

Pipelines

This quarter’s pipeline updates focused on making executions easier to monitor, triggers more resilient, and dynamic pipeline patterns more production-ready. If you manage a large pipeline estate (or rely heavily on PR-driven automation), these changes reduce operational blind spots and help pipelines keep moving even when parts of the system don't function as expected.

Pipeline Notifications for “Waiting on User Action”

Pipelines can now emit a dedicated notification event when execution pauses for approvals, manual interventions, or file uploads. This makes “human-in-the-loop” gates visible in the same places you already monitor pipeline health, and helps teams avoid pipelines silently idling until someone notices.

Bitbucket Cloud Connector – Workspace API Token Support

Harness now supports Bitbucket Cloud Workspace API Tokens in the native connector experience. This is especially useful for teams moving off deprecated app password flows and looking for an authentication model that’s easier to govern and rotate.

Learn more about Bitbucket Cloud Connector

Pipeline Metadata Export to Data Platform (Knowledge Graph Enablement)

Pipeline metadata is now exported to the data platform, enabling knowledge graph style use cases and richer cross-entity insights. This lays the foundation for answering questions like “what deploys what,” “where is this template used,” and “which pipelines are affected if we change this shared component.”

Dynamic InputSet Branch Resolution for PR Triggers

Pull request triggers can now load InputSets from the source branch of the pull request. This is a big unlock for teams that keep pipeline definitions and trigger/config repositories decoupled, or that evolve InputSets alongside code changes in feature branches.

Learn more about Dynamic InputSet Branch Resolutions

Improved Trigger Processing Resilience

Trigger processing is now fault-tolerant; a failure in one trigger’s evaluation no longer blocks other triggers in the same processing flow. This improves reliability during noisy event bursts and prevents one faulty trigger from suppressing otherwise valid automations.
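
The general pattern behind this kind of fault isolation is to evaluate each trigger independently and record failures instead of letting the first exception abort the whole loop. A minimal sketch; the Trigger shape and evaluateTriggers are hypothetical stand-ins, not Harness internals.

```typescript
// Hypothetical trigger: a name plus a condition evaluated against an incoming event.
interface Trigger {
  name: string;
  matches: (event: { branch: string }) => boolean;
}

interface EvaluationResult {
  fired: string[];
  failed: { name: string; error: string }[];
}

// Evaluate every trigger; an exception in one is recorded, not propagated,
// so the remaining triggers still get a chance to fire.
function evaluateTriggers(triggers: Trigger[], event: { branch: string }): EvaluationResult {
  const result: EvaluationResult = { fired: [], failed: [] };
  for (const trigger of triggers) {
    try {
      if (trigger.matches(event)) {
        result.fired.push(trigger.name);
      }
    } catch (err) {
      result.failed.push({ name: trigger.name, error: err instanceof Error ? err.message : String(err) });
    }
  }
  return result;
}

const triggers: Trigger[] = [
  { name: "broken", matches: () => { throw new Error("bad expression"); } },
  { name: "main-push", matches: (e) => e.branch === "main" },
];

// "main-push" still fires even though "broken" throws first.
console.log(evaluateTriggers(triggers, { branch: "main" }));
```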

API Support for “Referenced By” Information on CD Objects

Added API visibility into “Referenced By” relationships for CD objects, making it easier to track template adoption and understand downstream impact. This is particularly useful for platform teams that maintain shared templates and need to measure usage, plan migrations, or audit dependencies across orgs and projects.

Detection & Recovery for Stuck Pipeline Executions

Harness now includes detection and recovery mechanisms for pipeline executions that get stuck, reducing reliance on manual support intervention. The end result is fewer long-running “zombie” executions and better overall system reliability for critical delivery workflows.

Dynamic Stages with Git-backed Pipeline YAMLs

Dynamic Stages can now source pipeline YAML directly from Git, with support for connector, branch, file path, and optional commit pinning. Since these values can be expression-driven and resolved at runtime, teams can implement powerful patterns like environment-specific stage composition, governed reuse of centrally managed YAML, and safer rollouts via pinned versions.

Learn more about Dynamic Stages

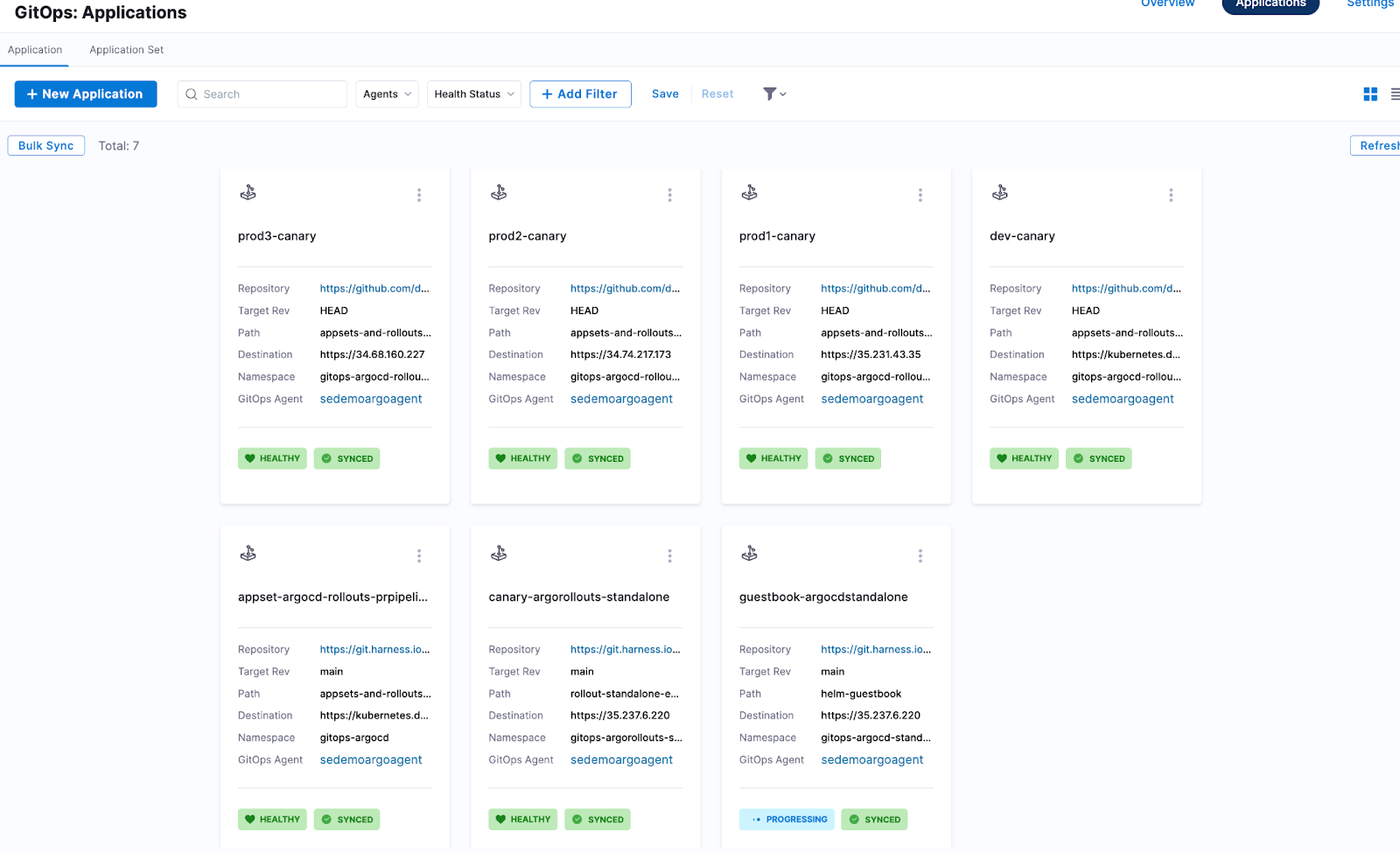

GitOps

ApplicationSets as first-class entities

ApplicationSets are built for a problem every GitOps team eventually hits: one app template, dozens or hundreds of targets. Instead of managing a growing pile of near-duplicate GitOps applications, ApplicationSets act like an application factory—one template plus generators that produce the child applications.
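
Conceptually, an ApplicationSet combines one template with a generator that yields parameter sets, and renders one child application per set. Real ApplicationSets are declared in YAML and rendered by the Argo CD ApplicationSet controller; the TypeScript below only models the list-generator idea, and its field names and helpers (AppSpec, render, generateApps) are simplified assumptions.

```typescript
// Simplified model of a child application; real Argo CD Applications have many more fields.
interface AppSpec {
  name: string;
  cluster: string;
  namespace: string;
}

// A list generator yields one parameter set per target.
type Params = Record<string, string>;

// Template with {{param}} placeholders, in the style of ApplicationSet templates.
const template: AppSpec = {
  name: "guestbook-{{env}}",
  cluster: "{{clusterUrl}}",
  namespace: "guestbook",
};

// Substitute known placeholders; unknown ones are left untouched.
function render(text: string, params: Params): string {
  return text.replace(/\{\{(\w+)\}\}/g, (match: string, key: string) => (key in params ? params[key] : match));
}

// The "factory": one template x N parameter sets -> N child applications.
function generateApps(tpl: AppSpec, elements: Params[]): AppSpec[] {
  return elements.map((params) => ({
    name: render(tpl.name, params),
    cluster: render(tpl.cluster, params),
    namespace: render(tpl.namespace, params),
  }));
}

const apps = generateApps(template, [
  { env: "dev", clusterUrl: "https://dev.example.com" },
  { env: "prod", clusterUrl: "https://prod.example.com" },
]);
console.log(apps.map((a) => a.name)); // [ 'guestbook-dev', 'guestbook-prod' ]
```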

With first-class ApplicationSet support, Harness adds an enhanced UI wizard and deeper platform integration. That includes Service/Environment integration (via standard labels), better RBAC alignment, validation/preview of manifests, and a cleaner operational experience for creating and managing ApplicationSets over time.

Learn more about ApplicationSets

Harness secret expressions in K8s manifests

You can now use Harness secret expressions directly inside Kubernetes manifests in a GitOps flow using the Harness Argo CD Config Management Plugin. The key shift is where resolution happens: secrets are resolved during Argo CD’s manifest rendering phase, which supports a pure GitOps pattern without requiring a Harness Delegate to decrypt secrets.

The developer experience is straightforward. You reference secrets using expressions like <+secrets.getValue("...")>, commit the manifest, and the plugin injects resolved values as Argo CD renders the manifests for deployment.

Learn more about Harness secret expressions

Argo Rollouts support

Harness GitOps now supports Argo Rollouts, unlocking advanced progressive delivery strategies like canary and blue/green with rollout-specific controls. For teams that want more than “sync and hope,” this adds a structured mechanism to shift traffic gradually, validate behavior, and roll back based on defined criteria.

This pairs naturally with pipeline orchestration. You can combine rollouts with approvals and monitoring gates to enforce consistency in how progressive delivery is executed across services and environments.

Learn more about Argo Rollouts support

Want to try these GitOps capabilities hands-on?

Check out the GitOps Samples repo for ready-to-run examples you can fork, deploy, and adapt to your own workflows.

Explore GitOps-Samples

Next steps

That wraps up our Q4 2025 Continuous Delivery update. Across CD, Continuous Verification, Pipelines, and GitOps, the theme this quarter was simple: make releases safer by default, reduce operational overhead, and help teams scale delivery without scaling complexity.

If you want to dive deeper, check the “Learn more” links throughout this post and the documentation they point to. We’d also love to hear what’s working (and what you want next); share feedback in your usual channels or reach out through Harness Support.

How to Scale GitOps Without Hitting the Argo Ceiling

GitOps has become the default model for deploying applications on Kubernetes. Tools like Argo CD have made it simple to declaratively define desired state, sync it to clusters, and gain confidence that what’s running matches what’s in Git.

And for a while, it works exceptionally well.

Most teams that adopt GitOps experience a familiar pattern: a successful pilot, strong early momentum, and growing trust in automated delivery. But as adoption spreads across more teams, environments, and clusters, cracks begin to form. Troubleshooting slows down. Governance becomes inconsistent. Delivery workflows sprawl across scripts and tools.

This is the point many teams describe as “Argo not scaling.”

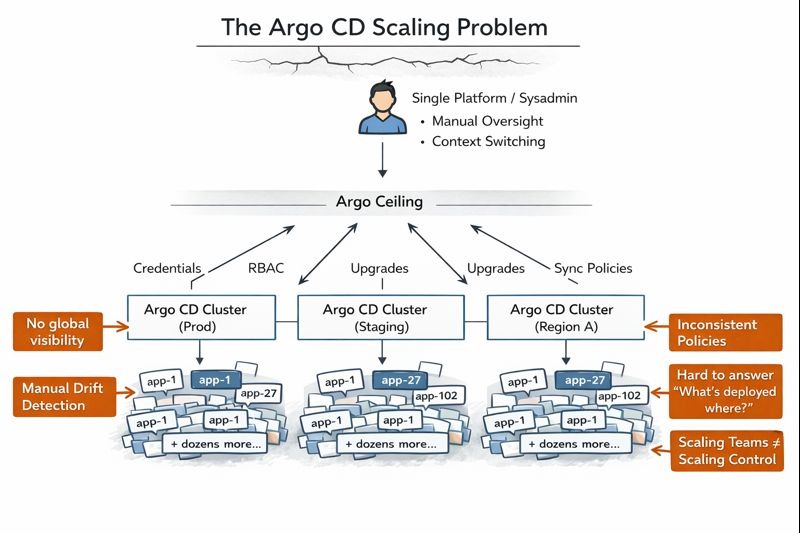

In reality, they’ve hit what we call the Argo ceiling.

The Argo ceiling isn’t a flaw in Argo CD. It’s a predictable inflection point that appears when GitOps is asked to operate at scale without a control plane.

What Is the Argo Ceiling?

The Argo ceiling is the moment when GitOps delivery starts to lose cohesion as scale increases.

Argo CD is intentionally designed to be cluster-scoped. That design choice is one of its strengths: it keeps the system simple, reliable, and aligned with Kubernetes’ model. But as organizations grow, that same design introduces friction.

Teams move from:

- A small number of clusters to dozens (or hundreds)

- A handful of applications to thousands

- One platform team to many autonomous product teams

At that point, GitOps still works — but operating GitOps becomes harder. Visibility fragments. Orchestration logic leaks into scripts. Governance depends on human process instead of platform guarantees.

The Argo ceiling isn’t a hard limit. It’s the point where teams realize they need more structure around GitOps to keep moving forward.

The Symptoms Teams See at Scale

Fragmented Visibility

One of the first pain points teams encounter is visibility.

Argo CD provides excellent insight within a single cluster. But as environments multiply, troubleshooting often turns into dashboard hopping. Engineers find themselves logging into multiple Argo CD instances just to answer basic questions:

- Where is this application deployed?

- Which environment failed?

- Was this caused by the same change?

What teams usually want instead is:

- A single place to see deployment status across clusters

- The ability to correlate failures back to a specific change or release

- Faster root-cause analysis without switching contexts

Argo CD doesn’t try to be a global control plane, so this gap is expected. When teams start asking for cross-cluster visibility, it’s often the first sign they’ve hit the Argo ceiling.
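The "correlate failures back to a change" question becomes simple once statuses from every cluster sit in one place. Argo CD tracks each Application's synced revision and health; the Python sketch below assumes those records have already been collected (collection is out of scope) and groups unhealthy apps by Git revision. The cluster names, app name and commit hashes are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class AppStatus:
    cluster: str
    app: str
    revision: str  # Git commit the cluster last synced
    healthy: bool


def failures_by_revision(statuses: Iterable[AppStatus]) -> Dict[str, List[str]]:
    """Group unhealthy cluster/app pairs by the revision they are running."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for status in statuses:
        if not status.healthy:
            grouped[status.revision].append(f"{status.cluster}/{status.app}")
    return dict(grouped)


statuses = [
    AppStatus("us-east", "checkout", "4f2a9c1", healthy=False),
    AppStatus("eu-west", "checkout", "4f2a9c1", healthy=False),
    AppStatus("ap-south", "checkout", "9b7e03d", healthy=True),
]
print(failures_by_revision(statuses))
# {'4f2a9c1': ['us-east/checkout', 'eu-west/checkout']}
```

Two failures in two regions that share one revision point at the change, not at either cluster.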

Glue Code and Script Entropy

As GitOps adoption grows, orchestration gaps start to appear. Teams need to handle promotions, validations, approvals, notifications, and integrations with external systems.

In practice, many organizations fill these gaps with:

- Jenkins pipelines

- GitHub Actions

- Custom scripts glued together over time

These scripts usually start out small and useful. But as they grow, they begin to:

- Accumulate environment-specific logic

- Encode tribal knowledge

- Become difficult to change safely

This is a classic Argo ceiling symptom. Orchestration lives outside the platform instead of being modeled as a first-class, observable workflow. Over time, GitOps starts to feel less like a modern delivery model and more like scripted CI/CD from a decade ago.

Awkward Promotion Flows

Promotion is another area where teams feel friction.

Argo CD is excellent at syncing desired state, but it doesn’t model the full lifecycle of a release. As a result, promotions often involve:

- Manual pull requests

- Repo hopping between environments

- Ad-hoc approvals and checks

These steps slow delivery and increase cognitive load, especially as the number of applications and environments grows.

Secret Sprawl

Git is the source of truth in GitOps — but secrets don’t belong in Git.

At small scale, teams manage this tension with conventions and external secret stores. At larger scale, this often turns into a patchwork of approaches:

- Different secret managers per team

- Custom templating logic

- Inconsistent access controls

The result is secret sprawl and operational risk. Managing secrets becomes harder precisely when consistency matters most.

Difficult Audits

Finally, audits become painful.

Change records are scattered across Git repos, CI systems, approval tools, and human processes. Reconstructing who changed what, when, and why turns into a forensic exercise.

At this stage, compliance depends more on institutional memory than on reliable system guarantees.

What Not to Do When You Hit the Ceiling

When teams hit the Argo ceiling, the instinctive response is often to add more tooling:

- More scripts

- More pipelines

- More conventions

- More manual reviews

Unfortunately, this usually makes things worse.

The problem isn’t a lack of tools. It’s a lack of structure. Scaling GitOps requires rethinking how visibility, orchestration, and governance are handled — not piling on more glue code.

Principles for Scaling GitOps Correctly

Before introducing solutions, it’s worth stepping back and defining the principles that make GitOps sustainable at scale.

Centralize Control (Without Centralizing Ownership)

One of the biggest mistakes teams make is repeating the same logic in every Argo CD cluster.

Instead, control should be centralized:

- RBAC policies

- Governance rules

- Audit trails

- Global visibility

At the same time, application ownership remains decentralized. Teams still own their services and repositories — but the rules of the road are consistent everywhere.

Orchestrate, Don’t Script

GitOps should feel modern, not like scripted CI/CD.

Delivery is more than “sync succeeded.” Real workflows include:

- Pre- and post-deployment steps

- Validations and checks

- Approvals and notifications

These should be modeled as structured, observable workflows — not hidden inside scripts that only a few people understand.
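One way to picture "structured, observable" is a workflow where each step is a named unit with a recorded outcome, and execution stops at the first failure. The Python sketch below is a generic illustration with stub actions and hypothetical step names; it is not how Harness models pipelines internally.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Step:
    name: str
    action: Callable[[], bool]  # returns True on success


@dataclass
class Workflow:
    steps: List[Step]
    log: List[str] = field(default_factory=list)

    def run(self) -> bool:
        """Run steps in order, record every outcome, and stop at the first failure."""
        for step in self.steps:
            ok = step.action()
            self.log.append(f"{step.name}: {'ok' if ok else 'failed'}")
            if not ok:
                return False
        return True


# Stub actions standing in for real checks, syncs, approvals and notifications.
release = Workflow([
    Step("pre-deploy policy check", lambda: True),
    Step("sync to staging", lambda: True),
    Step("smoke tests", lambda: False),  # simulate a failed validation
    Step("manual approval", lambda: True),
    Step("notify #releases", lambda: True),
])
print(release.run())  # False
print(release.log)
```

Unlike a script that exits partway through, the log shows exactly which step stopped the release and that approval was never requested.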

Automate Guardrails

Many teams start enforcing rules through:

- PR reviews

- Documentation

- Manual approvals

That approach doesn’t scale.

In mature GitOps environments, guardrails are enforced automatically:

- Objective policy checks

- Repeatable enforcement

- Rules that run before, during, and after deployment

Git remains the source of truth, but compliance becomes a platform guarantee instead of a human responsibility.
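An "objective policy check" is just a function of the manifest that returns violations, so it gives the same answer every time it runs. Teams often express such rules in a policy engine like Open Policy Agent; the Python sketch below uses two illustrative rules (pinned image tags, required resource limits) on a hypothetical Deployment to show the shape of the idea.

```python
from typing import Any, Dict, List


def check_deployment(manifest: Dict[str, Any]) -> List[str]:
    """Return policy violations for a Deployment-like manifest (empty list = compliant)."""
    violations: List[str] = []
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        image = container.get("image", "")
        last_segment = image.rsplit("/", 1)[-1]  # ignore any registry host:port
        # Rule 1: images must be pinned to a tag or digest, and never ':latest'.
        if ":" not in last_segment or image.endswith(":latest"):
            violations.append(f"{name}: image '{image}' must be pinned (no ':latest')")
        # Rule 2: every container must declare resource limits.
        if "limits" not in container.get("resources", {}):
            violations.append(f"{name}: resources.limits is required")
    return violations


manifest = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [
        {"name": "web", "image": "nginx:latest"},
        {"name": "worker", "image": "registry.example.com/worker:1.4.2",
         "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}}},
    ]}}},
}
for violation in check_deployment(manifest):
    print(violation)
```

Because the check is code, it can run in a PR check, before a sync and again after deployment, with identical results.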

Why GitOps Needs a Control Plane

These challenges point to a common conclusion: GitOps at scale needs a control plane.

Git excels at versioning desired state, but it doesn’t provide:

- Cross-cluster visibility

- Consistent governance

- End-to-end workflow orchestration

A control plane complements GitOps by sitting above individual clusters. It doesn’t replace Argo CD — it coordinates and governs it.

Harness as the GitOps Control Plane

Harness provides a control plane that allows teams to scale GitOps without losing control.

Unified Visibility

Harness gives teams a single place to see deployments across clusters and environments. Failures can be correlated back to the same change or release, dramatically reducing time to root cause.

Structured Orchestration

Instead of relying on scripts, Harness models delivery as structured workflows:

- Pre- and post-sync steps

- Promotions and approvals

- Notifications and integrations

This keeps orchestration visible, reusable, and safe to evolve over time.

AI-Assisted Deployment Verification

Kubernetes and Argo CD can tell you whether a deployment technically succeeded — but not whether the application is actually behaving correctly.

Harness customers use AI-assisted deployment verification to analyze metrics, logs, and signals automatically. Rather than relying on static thresholds or manual checks, the system evaluates real behavior and can trigger rollbacks when anomalies are detected.

This builds on ideas from progressive delivery (such as Argo Rollouts analysis) while making verification consistent and governable across teams and environments.
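To show the basic shape of "compare real behavior to a baseline, then decide", here is a deliberately simple statistical stand-in: a z-score of the new version's mean latency against samples from the stable version. It is not the analysis Harness performs; the latency numbers and the 3-sigma threshold are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(baseline: Sequence[float], canary: Sequence[float], threshold: float = 3.0) -> bool:
    """Flag the canary if its mean deviates from the baseline mean by more than `threshold` baseline std devs."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # requires at least two baseline samples
    if sigma == 0:
        return mean(canary) != mu
    return abs(mean(canary) - mu) / sigma > threshold


baseline_latency_ms = [120, 118, 125, 122, 119, 121]  # hypothetical stable-version samples
good_canary_ms = [121, 123, 119]
bad_canary_ms = [180, 175, 190]

for label, samples in [("good", good_canary_ms), ("bad", bad_canary_ms)]:
    action = "roll back" if is_anomalous(baseline_latency_ms, samples) else "continue"
    print(label, action)
# good continue
# bad roll back
```

A fixed threshold like "latency under 200 ms" would have passed the bad canary; comparing against the baseline catches the roughly 50% regression.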

Solving the GitOps–Secrets Paradox

Harness GitOps Secret Expressions address the tension between GitOps and secret management:

- Secrets are created and stored securely in the Harness platform

- GitOps manifests reference secrets using expressions

- The GitOps agent resolves them at runtime

- Secrets never leave customer infrastructure

This keeps Git clean while making secret handling consistent and auditable.

What’s Next

The Argo ceiling isn’t a failure of GitOps — it’s a sign of success.

Teams hit it when GitOps adoption grows faster than the systems around it. Argo CD remains a powerful foundation, but at scale it needs a control plane to provide visibility, orchestration, and governance.

GitOps doesn’t break at scale.

Unmanaged GitOps does.

Ready to move past the Argo ceiling? Watch the on-demand session to learn how teams scale GitOps with confidence.

Harness Dynamic Pipelines: Complete Adaptability, Rock Solid Governance

For a long time, CI/CD has been “configuration as code.” You define a pipeline, commit the YAML, sync it to your CI/CD platform, and run it. That pattern works really well for workflows that are mostly stable.

But what happens when the workflow can’t be stable?

- An automation script needs to assemble a one-off release flow based on inputs.

- An AI agent (or even just a smart service) decides which tests to run for this change, right now.

- The pipeline mirrors a definition that is written (and maintained) in a different tool.

In all of those cases, forcing teams to pre-save a pipeline definition, either in the UI or in a repo, turns into a bottleneck.

Today, I want to introduce you to Dynamic Pipelines in Harness.

Dynamic Pipelines let you treat Harness as an execution engine. Instead of having to pre-save pipeline configurations before you can run them, you can generate Harness pipeline YAML on the fly (from a script, an internal developer portal, or your own code) and execute it immediately via API.
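As an illustration of "generate pipeline YAML on the fly", the Python sketch below builds one stage per test suite chosen for a change and serializes the result. The structure is loosely modeled on Harness pipeline YAML, but it has not been validated against the Harness schema, and the change ID, suite names and `make` targets are hypothetical. It emits JSON, which is valid YAML 1.2, to avoid a third-party YAML dependency. Submitting the text is left out on purpose; the actual Harness API endpoint and payload should come from the Harness API docs rather than from this sketch.

```python
import json
import re
from typing import Any, Dict, List


def to_identifier(name: str) -> str:
    """Replace non-word characters so the string is identifier-safe."""
    return re.sub(r"\W", "_", name)


def build_pipeline(change_id: str, suites: List[str]) -> Dict[str, Any]:
    """Assemble a pipeline with one stage per selected test suite (illustrative shape only)."""
    stages = [
        {"stage": {
            "name": f"test {suite}",
            "identifier": to_identifier(f"test_{suite}"),
            "type": "Custom",
            "spec": {"execution": {"steps": [{"step": {
                "name": f"run {suite}",
                "identifier": to_identifier(f"run_{suite}"),
                "type": "ShellScript",
                "spec": {"shell": "Bash",
                         "source": {"type": "Inline", "spec": {"script": f"make test-{suite}"}}},
            }}]}},
        }}
        for suite in suites
    ]
    return {"pipeline": {
        "name": f"verify {change_id}",
        "identifier": to_identifier(f"verify_{change_id}"),
        "stages": stages,
    }}


def render_yaml(pipeline: Dict[str, Any]) -> str:
    # JSON is valid YAML 1.2, so json.dumps output can be submitted as YAML.
    return json.dumps(pipeline, indent=2)


# E.g. a service (or agent) decided only these suites matter for this change.
print(render_yaml(build_pipeline("PR-123", ["unit", "integration"])))
```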

See it in action

Why Dynamic Pipelines?

To be clear, dynamic pipelines are an advanced capability. Pipelines that rewrite themselves on the fly are rarely needed and should generally be avoided; most of the time they add more complexity than you want. But when you need this power, you really need it, and you want it implemented well.

Here are some situations where you may want to consider using dynamic pipelines.

1) True “headless” orchestration

You can build a custom UI, or plug into something like Backstage, to onboard teams and launch workflows. Your portal asks a few questions, generates the corresponding Harness YAML behind the scenes, and sends it to Harness for execution.