Build vs Buy IaC: Choosing the Right IaCM Strategy

Have you ever watched a “temporary” Infrastructure as Code script quietly become mission-critical, undocumented, and owned by someone who left the company two years ago? Most of us can relate to a similar scenario, even if it was not infrastructure-specific, and this is usually the moment teams realise the build vs buy IaC decision was made by accident, not design.

As your teams grow from managing a handful of environments to orchestrating hundreds of workspaces across multiple clouds, the limits of homegrown IaC pipeline management show up fast. What starts as a few shell scripts wrapping OpenTofu or Terraform commands often evolves into a fragile web of CI jobs, custom glue code, and tribal knowledge that no one feels confident changing.

The real question is not whether you can build your own IaC solution. Most teams can. The question is what it costs you in velocity, governance, and reliability once the platform becomes business-critical.

The Appeal and Cost of Custom IaC Pipelines

Building a custom IaC solution feels empowering at first. You control every detail. You understand exactly how plan and apply flows work. You can tailor pipelines to your team’s preferences without waiting on vendors or abstractions.

For small teams with simple requirements, this works. A basic OpenTofu or Terraform pipeline in GitHub Actions or GitLab CI can handle plan-on-pull-request and apply-on-merge patterns just fine. Add a manual approval step and a notification, and you are operational.
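As a rough illustration of the plan-on-pull-request and apply-on-merge pattern, the sketch below maps a CI event to the commands a minimal job might run. The function name, the event labels, and the `approved` flag are hypothetical and not part of any CI system's API; pass `binary="terraform"` if that is what you run.

```python
def commands_for_event(event: str, approved: bool = False,
                       binary: str = "tofu") -> list[list[str]]:
    """Return the CLI commands a minimal pipeline would run for a CI event.

    `event` is a hypothetical label: "pull_request" or "merge".
    """
    init = [binary, "init", "-input=false"]
    plan = [binary, "plan", "-input=false", "-out=tfplan"]
    if event == "pull_request":
        # Plan only: reviewers see the proposed changes, nothing is applied.
        return [init, plan]
    if event == "merge":
        if not approved:
            # Manual approval gate: refuse to apply without sign-off.
            raise PermissionError("apply requires manual approval")
        # Apply exactly the saved plan file that was just produced.
        return [init, plan, [binary, "apply", "-input=false", "tfplan"]]
    raise ValueError(f"unsupported event: {event}")
```

Even this small sketch already encodes decisions (who approves, which plan gets applied) that tend to be reimplemented slightly differently in every repository.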

The problem is that infrastructure rarely stays simple.

As usage grows, the cracks start to appear:

- Governance gaps: Who is allowed to apply changes to production? How are policies enforced consistently? What happens when someone bypasses the pipeline and runs apply locally?

- State and workspace sprawl: Managing dozens or hundreds of state files, backend configurations, and locking behaviour becomes a coordination problem, not a scripting one.

- Workflow inconsistency: Each team builds its own version of “the pipeline.” What starts as flexibility turns into a support burden when every repository behaves differently.

- Security and audit blind spots: Secrets handling, access controls, audit trails, and drift detection are rarely first-class concerns in homegrown tooling. They become reactive fixes after something goes wrong.

At this point, the build vs buy IaC question stops being technical and becomes strategic.

What an IaCM Platform Is Actually Solving

An Infrastructure as Code Management (IaCM) platform cannot simply be labelled “CI for Terraform.” It exists to standardise how infrastructure changes are proposed, reviewed, approved, and applied across teams.

Instead of every team reinventing the same patterns, an IaCM platform provides shared primitives that scale.

Consistent Workspace Lifecycles

Workspaces are treated as first-class entities. Plans, approvals, applies, and execution history are visible in one place. When something fails, you do not have to reconstruct context from CI logs and commit messages.

Enforced IaC Governance

IaC governance stops being a best-practice document and becomes part of the workflow. Policy checks run automatically. Risky changes are surfaced early. Approval gates are applied consistently based on impact, not convention.

This matters regardless of whether teams are using OpenTofu as their open-source baseline or maintaining existing Terraform pipelines.
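To make “approval gates based on impact” concrete, here is a minimal sketch that reads the JSON form of a saved plan (as produced by `tofu show -json tfplan` or `terraform show -json tfplan`) and decides whether a human approval is needed. The impact levels and the production rule are assumptions chosen for illustration, not how any particular platform classifies changes.

```python
import json

def classify_impact(plan_json: str) -> str:
    """Classify a plan as "none", "low", or "high" from its JSON representation."""
    plan = json.loads(plan_json)
    actions = set()
    for rc in plan.get("resource_changes", []):
        actions.update(rc.get("change", {}).get("actions", []))
    # "no-op" and "read" do not modify infrastructure.
    actions -= {"no-op", "read"}
    if not actions:
        return "none"
    # Assumption: anything that deletes (including replace) is high impact.
    return "high" if "delete" in actions else "low"

def requires_approval(plan_json: str, environment: str) -> bool:
    """Hypothetical rule: gate high-impact plans and any change to production."""
    impact = classify_impact(plan_json)
    if impact == "none":
        return False
    return impact == "high" or environment == "production"
```

The point of the sketch is that the gate is derived from what the plan would actually do, rather than from a team convention about which repositories need review.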

Centralised Variables and Secrets

Managing environment-specific configuration across large numbers of workspaces is one of the fastest ways to introduce mistakes. IaCM platforms provide variable sets and secure secret handling so values are managed once and applied consistently.

Drift Detection Without Custom Glue

Infrastructure drift is inevitable. Manual console changes, provider behaviour, and external automation all contribute. An IaCM platform detects drift continuously and surfaces it clearly, without relying on scheduled scripts parsing CLI output.

Module and Provider Governance

Reusable modules are essential for scaling IaC, but unmanaged reuse creates risk. A built-in module and provider registry ensures teams use approved, versioned components and reduces duplication across the organisation.

Why Building This Yourself Rarely Scales

Most platform teams underestimate how much work lives beyond the initial pipeline.

You will eventually need:

- Role-based access control.

- Approval workflows that vary by environment.

- Audit logs that satisfy compliance reviews.

- Concurrency controls and workspace locking.

- Safe ways to evolve pipelines without breaking dozens of teams.

None of these are hard in isolation. Together, they represent a long-term maintenance commitment. Unless building IaC tooling is your product, this effort rarely delivers competitive advantage.
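Workspace locking is a good example of something that looks trivial in isolation. The sketch below is an in-memory, single-process registry that allows one run per workspace at a time; the class and method names are hypothetical. A real implementation would need shared, persistent storage, lock expiry, and a story for crashed runs, which is where the maintenance cost starts to accumulate.

```python
import threading

class WorkspaceLocks:
    """In-memory registry allowing one active run per workspace."""

    def __init__(self) -> None:
        self._guard = threading.Lock()      # protects the dictionary below
        self._holders: dict[str, str] = {}  # workspace -> run id holding the lock

    def acquire(self, workspace: str, run_id: str) -> bool:
        """Take the lock for a workspace; return False if another run holds it."""
        with self._guard:
            if workspace in self._holders:
                return False
            self._holders[workspace] = run_id
            return True

    def release(self, workspace: str, run_id: str) -> bool:
        """Release the lock only if this run is the current holder."""
        with self._guard:
            if self._holders.get(workspace) != run_id:
                return False
            del self._holders[workspace]
            return True
```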

How Harness IaCM Changes the Build vs Buy Equation

Harness Infrastructure as Code Management (IaCM) is designed for teams that want control without rebuilding the same platform components over and over again.

It supports both OpenTofu and Terraform, allowing teams to standardise workflows even as tooling evolves. OpenTofu fits naturally as an open-source execution baseline for new workloads, while Terraform remains supported where existing investment makes sense.

Harness IaCM provides:

- Default plan and apply pipelines that work out of the box.

- Workspace templates to enforce consistent backend configuration and governance.

- Module and provider registries to manage reuse safely.

- Policy enforcement, security checks, and cost visibility built directly into every run.

Instead of writing and maintaining custom orchestration logic, teams focus on infrastructure design and delivery.

Drift detection, approvals, and audit trails are handled consistently across every workspace, without bespoke scripts or CI hacks.

Making a Deliberate Build vs Buy IaC Decision

The build vs buy IaC decision should be intentional, not accidental.

If your organisation has a genuine need to own every layer of its tooling and the capacity to maintain it long-term, building can be justified. For most teams, however, the operational overhead outweighs the benefits.

An IaCM platform provides faster time-to-value, stronger governance, and fewer failure modes as infrastructure scales.

Harness Infrastructure as Code Management enables teams to operationalise best practices for OpenTofu and Terraform without locking themselves into brittle, homegrown solutions.

The real question is not whether you can build this yourself. It is whether you want to be maintaining it when the platform becomes critical.

Explore Harness IaCM and move beyond fragile IaC pipelines.

Infrastructure Guardrails: Why Your IaC Stack Needs Them

Have you ever asked yourself what the fastest way is to turn a harmless Infrastructure as Code change into a production incident and an awkward postmortem? We did, and found that it usually comes from letting the change through without any guardrails.

Infrastructure guardrails in Infrastructure as Code (IaC) were once a nice-to-have. Today, they’re essential. Without clear boundaries and safety mechanisms, even well-designed IaC workflows can turn small mistakes into fast-moving, high-impact problems.

What Are Infrastructure Guardrails?

Infrastructure guardrails are preventive controls that help teams standardize and secure infrastructure deployments. They act as a safety net, ensuring changes consistently align with organizational policies, security best practices, and compliance requirements.

Think of infrastructure guardrails as the difference between letting developers drive on an open road with no lanes versus providing clear lane markings, speed limits, and crash barriers. Guardrails do not restrict innovation. They make it safe to move fast without losing control.

Why Infrastructure Guardrails Are Critical for Modern Cloud Operations

As organizations adopt cloud-native practices and infrastructure as code becomes the standard for deployment, the complexity and scale of infrastructure management increase rapidly. Here's why infrastructure guardrails have become non-negotiable:

Prevent Costly Mistakes

Without proper infrastructure guardrails, simple human errors can result in significant outages or security incidents. Consider these common scenarios:

- A developer accidentally provisions oversized instances across multiple regions

- Security groups are incorrectly configured, exposing sensitive services to the public internet

- Production environments are modified during testing due to misconfigured workspace variables

Each of these scenarios can lead to substantial financial impact, from unexpected cloud bills to costly security breaches and downtime. Infrastructure guardrails help prevent these issues before they manifest in your environment.

Enforce Infrastructure as Code Best Practices

Infrastructure guardrails ensure teams follow infrastructure as code best practices consistently. These include:

- Standardizing resource naming conventions

- Ensuring all resources have required tags for cost allocation

- Enforcing module versioning to prevent unexpected changes

- Requiring documentation for infrastructure components

When these practices are enforced through guardrails rather than through documentation alone, teams naturally develop better habits while reducing technical debt.

Key Types of Infrastructure Guardrails Your Stack Needs

Policy-Based Infrastructure Guardrails

Policy-based guardrails enforce rules across your entire infrastructure. Tools like Open Policy Agent (OPA) integrate with OpenTofu and Terraform to validate infrastructure changes against organizational policies before deployment.

These policies can be as simple or as complex as needed.

Policy-based infrastructure guardrails provide the flexibility to codify any organizational requirement while ensuring consistent enforcement.
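For a sense of what a simple policy looks like, here is a sketch of a required-tags check evaluated against the JSON representation of a plan. OPA policies are normally written in Rego; this Python version is only a stand-in to show the logic, and the tag names in `REQUIRED_TAGS` are a hypothetical organizational rule.

```python
REQUIRED_TAGS = {"owner", "cost-center"}  # hypothetical organizational rule

def tag_violations(plan: dict) -> list[str]:
    """List resources being created or updated that lack a required tag."""
    violations = []
    for rc in plan.get("resource_changes", []):
        change = rc.get("change", {})
        if not {"create", "update"} & set(change.get("actions", [])):
            continue  # deletes and no-ops are out of scope for this rule
        after = change.get("after") or {}
        if "tags" not in after:
            continue  # assumption: this resource type has no tags attribute
        missing = REQUIRED_TAGS - set(after.get("tags") or {})
        if missing:
            violations.append(f"{rc['address']}: missing tags {sorted(missing)}")
    return violations
```

A pipeline would fail the run when the returned list is non-empty, so the change never reaches apply.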

Compliance Controls

Both OpenTofu and Terraform benefit from specific guardrails that enhance their native capabilities:

- Module Registry Controls: Ensuring teams use only approved, tested modules from internal registries

- Variable Validation: Preventing deployments with invalid or potentially dangerous input values

- Custom Policy Frameworks (OpenTofu): Enforcing business and security rules

OpenTofu compliance controls can be particularly effective when integrated into CI/CD pipelines, creating automated checkpoints that validate changes before they reach production environments.

Infrastructure Drift Prevention

One of the most insidious challenges in infrastructure management is configuration drift. Without proper infrastructure guardrails, manual changes can occur outside the IaC workflow, creating inconsistencies between your code and the actual deployed resources.

Effective drift prevention guardrails include:

- Regular automated drift detection scans

- Remediation workflows that reconcile detected drift

- Prevention of manual changes through access controls

- Alerting systems that notify teams when drift occurs
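A scheduled drift scan can be sketched with the `-detailed-exitcode` flag of `plan`, which exits with 0 when the diff is empty, 1 on error, and 2 when the diff is non-empty. The function names and status labels below are hypothetical. Note that a non-empty diff can mean either out-of-band drift or configuration that has not been applied yet, so this is a coarse signal rather than a full drift report.

```python
import subprocess

def interpret_plan_exit_code(code: int) -> str:
    """Map the exit code of `plan -detailed-exitcode` to a status label."""
    if code == 0:
        return "in_sync"
    if code == 2:
        return "changes_detected"  # drift or not-yet-applied configuration
    return "error"

def check_drift(workdir: str, binary: str = "tofu") -> str:
    """Run a plan in `workdir` and report whether it found differences."""
    result = subprocess.run(
        [binary, "plan", "-detailed-exitcode", "-input=false"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    return interpret_plan_exit_code(result.returncode)
```

An alerting step would then notify the owning team whenever `check_drift` returns anything other than `"in_sync"`.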

Implement IaC Security Controls Within Your Guardrails

Infrastructure guardrails should incorporate robust IaC security controls to protect against both accidental and malicious security issues:

- Static Code Analysis: Scan IaC templates for security misconfigurations before deployment

- Secrets Management: Prevent hardcoded credentials in infrastructure code

- Least Privilege Enforcement: Ensure IAM roles and permissions follow the principle of least privilege

- Network Security Validation: Verify that network configurations don't create unintended exposure
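As one small example of these controls, the sketch below scans IaC source text for two kinds of hardcoded secret: strings shaped like AWS access key IDs and quoted literals assigned to attributes whose names contain "password" or "secret". The patterns are deliberately simple assumptions for illustration and will miss many cases that a dedicated scanner would catch.

```python
import re

PATTERNS = {
    # AWS access key IDs are 20 characters and commonly start with "AKIA".
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A quoted literal (no "$" interpolation) assigned to a password/secret attribute.
    "hardcoded_secret": re.compile(r'(?i)(password|secret)\w*\s*=\s*"[^"$]+"'),
}

def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for each suspicious line."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

References such as `password = var.db_password` are not flagged, because the value is not a quoted literal.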

These security-focused infrastructure guardrails help organizations maintain a strong security posture even as infrastructure scales and evolves.

Cloud Infrastructure Governance Through Effective Guardrails

For organizations operating at scale, infrastructure guardrails form the foundation of cloud infrastructure governance. This governance framework provides:

- Visibility into all infrastructure resources across environments

- Accountability through detailed audit trails of changes

- Cost management through enforcement of resource constraints

- Compliance with industry regulations and internal policies

How Harness IaCM Enhances Your Infrastructure Guardrails

Harness Infrastructure as Code Management (IaCM) provides a comprehensive platform for implementing and maintaining effective infrastructure guardrails. Supporting both OpenTofu and Terraform, Harness IaCM addresses the challenges we've discussed through several key capabilities:

Built-in Policy Enforcement

Harness IaCM integrates policy-as-code directly into your infrastructure workflows. Teams can define, test, and enforce policies that validate infrastructure changes against security, compliance, and operational requirements. These policies run automatically during the plan phase, preventing non-compliant changes from being applied.

Standardization Through Module and Provider Registry

Harness IaCM includes a built-in registry for OpenTofu and Terraform modules and providers. This enables teams to:

- Publish approved, pre-validated infrastructure components

- Version modules to ensure controlled changes

- Share reusable components across teams with appropriate guardrails already built in

This standardization dramatically reduces the risk of configuration errors while improving developer productivity.

Controlled Deployment Workflows

With Harness IaCM, infrastructure deployments follow consistent, auditable workflows:

- Changes are proposed through version control

- Infrastructure guardrails validate the changes through policy enforcement

- Approvals are routed to appropriate stakeholders based on impact

- Deployments proceed only after all guardrails have been satisfied

These workflows provide the perfect balance between developer autonomy and operational control.

Drift Detection and Remediation

Harness IaCM continuously monitors your infrastructure for drift, automatically detecting when resources deviate from their expected state. When drift occurs, teams can:

- Receive immediate notifications

- Visualize exactly what changed

- Automatically remediate drift by reapplying the correct configuration

This ensures your infrastructure guardrails remain effective even after deployment.

Get Started with Infrastructure Guardrails

Implementing effective infrastructure guardrails doesn't have to be an all-or-nothing proposition. Start with these steps:

- Identify your highest-risk areas: Begin with guardrails that protect against your most common or costly errors

- Start with simple policies: Focus on basic security and cost management policies before adding complexity

- Integrate guardrails into your existing workflow: Use tools like Harness IaCM that work with your current processes

- Measure the impact: Track reduced incidents, faster deployments, and improved compliance

Conclusion: Infrastructure Guardrails as Enablers, Not Barriers

Effective infrastructure guardrails don't limit innovation. They enable it by providing a safe environment for experimentation and rapid deployment. By preventing costly errors, enforcing best practices, and ensuring compliance, guardrails give teams the confidence to move quickly without sacrificing reliability or security.

Harness Infrastructure as Code Management provides the ideal platform for implementing these guardrails, with native support for both OpenTofu and Terraform, built-in policy enforcement, and comprehensive drift management capabilities.

Ready to implement effective infrastructure guardrails in your environment? Explore how Harness IaCM can help your team deploy more confidently and securely while maintaining the flexibility developers need to innovate.

How Enterprises Modernize and Migrate to the Cloud Safely with Harness Automation

Cloud Migration Series | Part 1

Cloud migration has shifted from a tactical relocation exercise to a strategic modernization program. Enterprise teams no longer view migration as just the movement of compute and storage from one cloud to another. Instead, they see it as an opportunity to redesign infrastructure, streamline delivery practices, strengthen governance, and improve cost control, all while reducing manual effort and operational risk. This is especially true in regulated industries like banking and insurance, where compliance and reliability are essential.

This first installment in our cloud migration series introduces the high-level concepts and the automation framework that enables enterprise-scale transitions, without disrupting ongoing delivery work. Later entries will explore the technical architecture behind Infrastructure as Code Management (IaCM), deployment patterns for target clouds, Continuous Integration (CI) and Continuous Delivery (CD) modernization, and the financial operations required to keep migrations predictable.

Cloud Migration Is Broader Than Most Organizations Expect

Many organizations begin their migration journey with the assumption that only applications need to move. In reality, cloud migration affects five interconnected areas: infrastructure provisioning, application deployment workflows, CI and CD systems, governance and security policies, and cost management. All five layers must evolve together, or the migration unintentionally introduces new risks instead of reducing them.

Infrastructure and networking must be rebuilt in the target cloud with consistent, automated controls. Deployment workflows often require updates to support new environments or adopt GitOps practices. Legacy CI and CD tools vary widely across teams, which complicates standardization. Governance controls differ by cloud provider, so security models and policies must be reintroduced. Finally, cost structures shift when two clouds run in parallel, which can cause unpredictability without proper visibility.

Why Enterprises Pursue Cloud-to-Cloud Migration

Cloud migration is often motivated by a combination of compliance requirements, access to more suitable managed services, performance improvements, or cost efficiency goals. Some organizations move to support a multi-cloud strategy while others want to reduce dependence on a single provider. In many cases, migration becomes an opportunity to correct architectural debt accumulated over years.

Azure to AWS is one example of this pattern, but it is not the only one. Organizations regularly move between all major cloud providers as their business and regulatory conditions evolve. What remains consistent is the need for predictable, auditable, and secure migration processes that minimize engineering toil.

Challenges That Slow Down Enterprise Migration

The complexity of enterprise systems is the primary factor that makes cloud migration difficult. Infrastructure, platform, security, and application teams must coordinate changes across multiple domains. Old and new cloud environments often run side by side for months, and workloads need to operate reliably in both until cutover is complete.

Another challenge comes from the variety of CI and CD tools in use. Large organizations rarely rely on a single system. Azure DevOps, Jenkins, GitHub Actions, Bitbucket, and custom pipelines often coexist. Standardizing these workflows is part of the migration itself, and often a prerequisite for reliability at scale.

Security and policy enforcement also require attention. When two clouds differ in their identity models, network boundaries, or default configurations, misconfigurations can easily be introduced. Finally, cost becomes a concern when teams pay for two clouds at once. Without visibility, migration costs rise faster than expected.

How Harness Provides Structure and Control for Cloud Migration

Harness addresses these challenges by providing an automation layer that unifies infrastructure provisioning, application deployment, governance, and cost analysis. This creates a consistent operating model across both the current and target clouds.

Harness Internal Developer Portal (IDP) provides a centralized view of service inventory, ownership, and readiness, helping teams track standards and best-practice adoption throughout the migration lifecycle. Harness Infrastructure as Code Management (IaCM) defines and provisions target environments and enforces policies through OPA, ensuring every environment is created consistently and securely. It helps teams standardize IaC, detect drift, and manage approvals. Harness Continuous Delivery (CD) introduces consistent, repeatable deployment practices across clouds and supports progressive delivery techniques that reduce cutover risk. GitOps workflows create clear audit trails. Harness Cloud Cost Management (CCM) allows teams to compare cloud costs, detect anomalies, and govern spend during the transition before costs escalate.

A High-Level Migration Blueprint for Enterprises

A successful, low-risk cloud migration usually follows a predictable pattern. Teams begin by modeling both clouds using IaC so the target environment can be provisioned safely. Harness IaCM then creates the new cloud infrastructure while the existing cloud remains active. Once environments are ready, teams modernize their pipelines. This process is platform agnostic and applies whether the legacy pipelines were built in Azure DevOps, Jenkins, GitHub Actions, Bitbucket, or other systems. The new pipelines can run in parallel to ensure reliability before switching over.

Workloads typically migrate in waves. Stateless services move first, followed by stateful systems and other dependent components. Parallel runs between the source and target clouds provide confidence in performance, governance adherence, and deployment stability without slowing down release cycles. Throughout this process, Harness CCM monitors cloud costs to prevent unexpected increases. After the migration is complete, teams can strengthen stability using feature flags, chaos experiments, or security testing.

Expected Outcomes for Technology Leaders

When migration is guided by automation and governance, enterprises experience fewer failures, smoother transitions, and faster time-to-value. Timelines become more predictable because infrastructure and pipelines follow consistent patterns. Security and compliance improve as policy enforcement becomes automated. Cost visibility allows leaders to justify business cases and track savings. Most importantly, engineering teams end up with a more modern, efficient, and unified operating model in the target cloud.

What Comes Next

The next blog in this series will examine how to design target environments using Harness IaCM, including patterns for enforcing consistent, compliant baseline configurations. Later entries will explore pipeline modernization, cloud deployment patterns, cost governance, and reliability practices for post-migration operations.

Terraform Variable Management at Scale: Centralizing IaC with Variable Sets and Provider Registry in Harness IaCM

Infrastructure as Code (IaC) has made provisioning infrastructure faster than ever, but scaling it across hundreds of workspaces and teams introduces new challenges. Secrets get duplicated. Variables drift. Custom providers become hard to share securely.

That’s why we’re excited to announce two major enhancements to Harness Infrastructure as Code Management (IaCM):

Variable Sets and Provider Registry, built to help platform teams standardize and secure infrastructure workflows without slowing developers down.

Variable Sets: Centralized Configuration Without the Chaos

Variables in Infrastructure as Code store configuration values like credentials and environment settings so teams can reuse and customize deployments without hardcoding. However, once teams operate dozens or hundreds of workspaces, variables quickly become fragmented and hard to govern. Variable Sets provide a single control plane for configuration parameters, secrets, and variable files used across multiple workspaces. In large organizations, hundreds of Terraform or OpenTofu workspaces share overlapping credentials and configuration keys. Traditionally, these are duplicated in every workspace, making credential rotation, auditing, and drift prevention painful.

Harness IaCM implements Variable Sets as first-class resources within its workspace model that are attachable at the account, organization, or project level. The engine dynamically resolves variable inheritance based on a priority ordering system, ensuring the highest-priority set overrides conflicting keys at runtime.

Core Capabilities

- Hierarchical Inheritance Graph: Workspaces resolve variables based on an explicit priority order defined by the platform team, with the highest-priority Variable Set taking precedence. Conflicts are clearly surfaced in the UI, showing overridden values and the exact source of each variable.

- Type and Scope Support: Handles both regular key-value pairs and .tfvars files. Variables can reference Harness Connectors (e.g., Vault or AWS Secrets Manager) for secure retrieval at execution. Both Terraform variable sets and OpenTofu variable sets can also be attached to Workspace Templates.

- Change Propagation: When a variable changes, Harness automatically lists all affected workspaces via reference tracking, allowing controlled rollouts or bulk updates.

- Access Control and Auditing: Only users with workspace edit permissions can change precedence; future RBAC plans extend granular edit and view rights. Every modification is recorded in IaCM audit logs.

- Runtime Execution: Conflict resolution occurs at Terraform runtime for variable files but at design time for inline variables, giving predictable behavior and faster validation.
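The priority-based resolution described above can be sketched as follows. This is a simplified model, not Harness's implementation: the `VariableSet` class, the `priority` field, and the convention that a larger number means higher priority are all assumptions made for the example.

```python
from dataclasses import dataclass

@dataclass
class VariableSet:
    name: str
    priority: int           # assumption: larger number = higher priority
    values: dict[str, str]

def resolve_variables(sets: list[VariableSet]) -> dict[str, tuple[str, str]]:
    """Return {key: (value, source set name)}, with the highest priority winning."""
    resolved: dict[str, tuple[str, str]] = {}
    # Apply sets from lowest to highest priority so later writes override earlier ones.
    for variable_set in sorted(sets, key=lambda s: s.priority):
        for key, value in variable_set.values.items():
            resolved[key] = (value, variable_set.name)
    return resolved
```

Keeping the source set name next to each value is what makes it possible to show which set an overridden value came from.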

For enterprises running hundreds of Terraform workspaces across multiple regions, Variable Sets give platform engineers a single, authoritative home for Vault credentials. When keys are rotated, every connected workspace automatically inherits the update, eliminating manual edits, reducing risk, and ensuring compliance across the organization. It’s a fundamental capability for Terraform variable management at scale.

Provider Registry: Secure Distribution for Custom Providers

Provider Registry introduces a trusted distribution mechanism for custom Terraform and OpenTofu providers. While the official Terraform Registry and the OpenTofu Provider Registry cater to public providers, enterprise teams often build internal providers to integrate IaC with proprietary APIs or on-prem systems. Managing these binaries securely is non-trivial.

Harness IaCM solves this with a GPG-signed, multi-platform binary repository that sits alongside the Module Registry under IaCM > Registry. Each provider is published with platform-specific artifacts (macOS, Linux, Windows), SHA256 checksums, and signature files.
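The checksum half of that verification can be sketched in a few lines. The example assumes a checksums file in the common `sha256sum` format (one `<hex digest>  <filename>` pair per line); the function names are hypothetical, and the GPG signature check over the checksums file itself is deliberately left out.

```python
import hashlib

def parse_sha256sums(text: str) -> dict[str, str]:
    """Parse `sha256sum`-style lines into {filename: lowercase hex digest}."""
    sums = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            digest, filename = parts
            # Binary-mode entries prefix the filename with "*".
            sums[filename.lstrip("*")] = digest.lower()
    return sums

def verify_artifact(filename: str, data: bytes, sums_text: str) -> bool:
    """Check downloaded bytes against the published SHA256 checksum."""
    expected = parse_sha256sums(sums_text).get(filename)
    if expected is None:
        return False  # unknown artifacts are rejected, not trusted
    return hashlib.sha256(data).hexdigest() == expected
```

The checksum only proves the binary matches the published list; the signature is what proves the list itself came from the publisher.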

Core Capabilities

- Integration with Policy as Code: Platform teams can enforce which providers are allowed within configurations using OPA-based policy checks in the pipeline.

- Secure by Default: Each provider binary is signed with a GPG key and verified during download to prevent tampering.

- Cross-Platform Resolution: At tofu init or terraform init, Harness detects the OS/architecture and automatically delivers the correct binary without manual setup.

- Version Consistency: Strict semantic version matching (v1.0.0 ≠ v1.0.1) prevents runtime mismatches and enforces dependency integrity.

- Faster Internal Integrations: Publish internal APIs or custom integrations as reusable providers.

- No Manual Management: Developers can seamlessly use approved providers directly in configurations without managing binaries locally.
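Cross-platform resolution with strict version matching can be pictured as an exact lookup. The sketch assumes artifacts follow the conventional provider release naming `terraform-provider-{NAME}_{VERSION}_{OS}_{ARCH}.zip`; whether Harness stores artifacts under these names is an assumption, and the function names are hypothetical.

```python
from typing import Optional

def provider_artifact_name(name: str, version: str, os_name: str, arch: str) -> str:
    """Build the conventional release file name for one platform."""
    bare_version = version[1:] if version.startswith("v") else version
    return f"terraform-provider-{name}_{bare_version}_{os_name}_{arch}.zip"

def select_artifact(available: list[str], name: str, version: str,
                    os_name: str, arch: str) -> Optional[str]:
    """Return the artifact for this exact version and platform, or None.

    There is no fallback to a nearby version: v1.0.0 and v1.0.1 never match.
    """
    wanted = provider_artifact_name(name, version, os_name, arch)
    return wanted if wanted in available else None
```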

Consider an enterprise team that builds a custom provider to integrate OpenTofu with its internal API. Using Harness Provider Registry, the team signs and publishes binaries for multiple platforms. Developers simply declare the provider source in code, and Harness handles signature verification, delivery, and updates automatically. Together with the Module Registry and Testing for Modules, Provider Registry completes the picture for trusted, reusable infrastructure components, helping organizations scale IaC with confidence.

Why These Features Matter

Harness IaCM already provides governed-by-default workflows with centralized pipelines, policy-as-code enforcement, and workspace templates that reduce drift. Now, with Variable Sets and Provider Registry, IaCM extends that governance deeper into how teams manage configuration and custom integrations. These updates make Harness IaCM not just a Terraform or OpenTofu orchestrator, but a secure, AI-powered infrastructure management platform that unifies visibility, control, and collaboration across all environments.

Harness’s broader IaCM ecosystem includes:

- Multi-IaC support: Terraform, OpenTofu, Terragrunt, Ansible (with more coming soon).

- Cost visibility: Pre-deployment cost estimation and post-deployment tracking.

- GitOps-native workflows: Approvals and policy checks built into pull requests.

- AI-powered policy generation: Intelligent guardrails to accelerate standards enforcement.

- AI-driven pipeline generation and failure analysis: leveraging the same intelligent capabilities used across Harness pipelines to streamline authoring and troubleshoot issues faster.

How IaCM is different

Unlike standalone tools today, Harness IaCM brings a unified, end-to-end approach to infrastructure delivery, combining:

- A single workspace model for every IaC tool

- Centralized variable and provider management, giving platform teams consistent governance and control.

- AI-native governance with policy generation

- Native security scanning through integrated STO and SCS, ensuring misconfigurations and vulnerabilities are caught early in the SDLC.

- A unified SDLC pipeline experience, where infrastructure, application, security, and compliance checks all run through the same pipeline model.

- A developer-friendly experience with Harness IDP, offering self-service templates, golden paths, and standardized guardrails that make infrastructure safe and accessible for every team.

This all-in-one approach means fewer tools to manage, tighter compliance, and faster onboarding for developers while maintaining the flexibility of open IaC standards. Harness is the only platform that brings policy-as-code, cost insight, and self-service provisioning together into a single developer experience.

Get Started Today

Explore how Variable Sets and Provider Registry can streamline your infrastructure delivery, all within the Harness Platform. Request a Demo to see how your team can standardize configurations, improve security, and scale infrastructure delivery without slowing down innovation.

You’re Late to the OpenTofu Party. Here’s Why That’s a Problem.

Are you still using Terraform without realizing the party has already moved on?

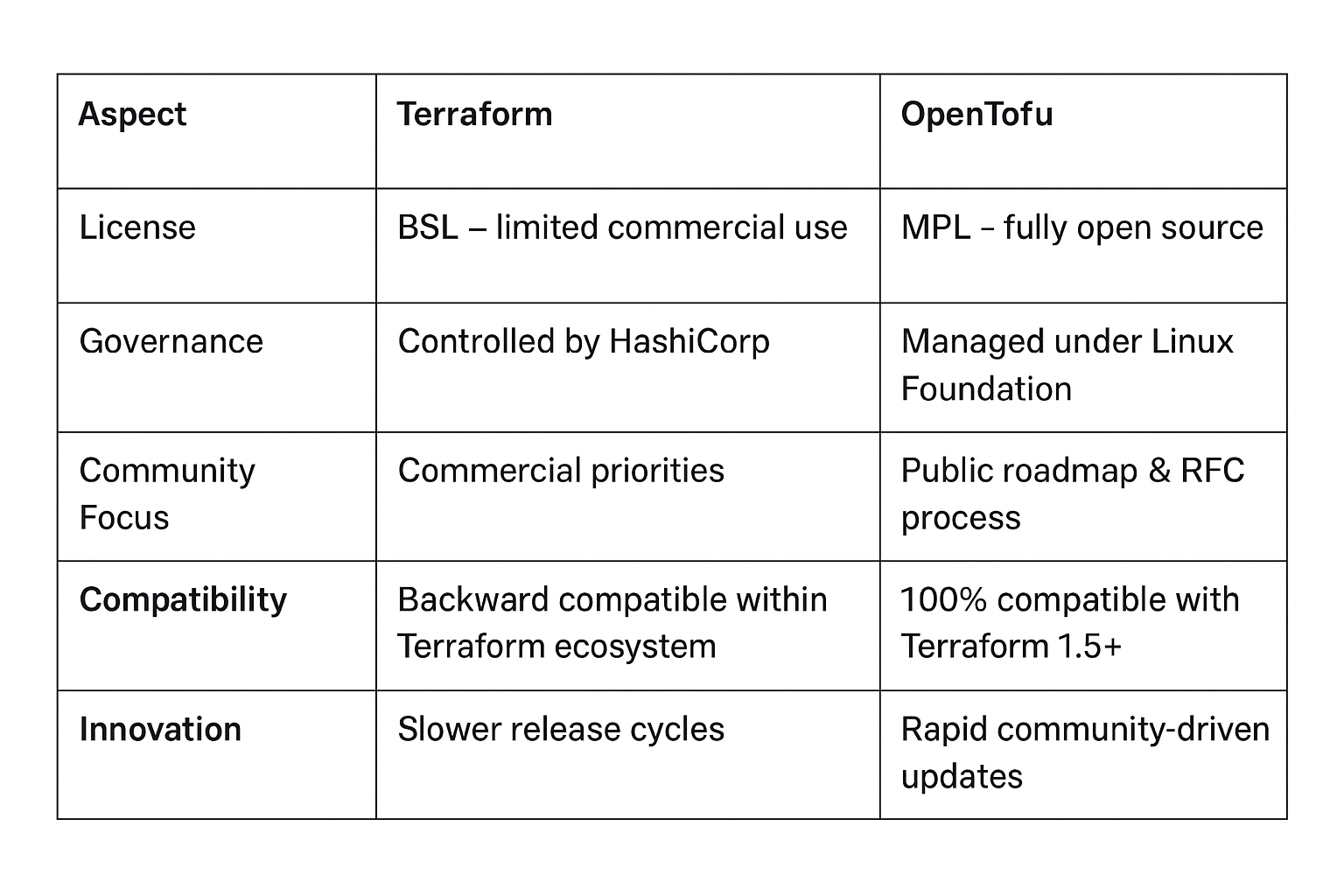

For years, Terraform was the default language of Infrastructure as Code (IaC). It offered predictability, community, and portability across cloud providers. But then, the music stopped. In 2023, HashiCorp changed Terraform’s license from Mozilla Public License (MPL) to the Business Source License (BSL), a move that put guardrails around what users and competitors could do with the code.

That shift opened a door for something new and truly open.

That “something” is OpenTofu.

And if you’re not already using or contributing to it, you’re missing your chance to help shape the future of infrastructure automation.

The fork that changed IaC forever

OpenTofu didn’t just appear out of thin air. It was born from community demand, a collective realization that Terraform’s BSL license could limit the open innovation that made IaC thrive in the first place.

So OpenTofu forked from Terraform’s last open source MPL version and joined the Linux Foundation, ensuring that it would remain fully open, community-governed, and vendor-neutral. A true Terraform alternative.

Unlike Terraform’s now-centralized governance, OpenTofu’s roadmap is decided by contributors, people building real infrastructure at real companies, not by a single commercial entity.

That means if you depend on IaC tools to build and scale your environments, your voice actually matters here.

Why OpenTofu is gaining momentum

OpenTofu is not a “different tool.” It’s a continuation, the same HCL syntax, same workflows, and same mental model, but under open governance and a faster, community-driven release cadence.

Let’s break down the Terraform vs OpenTofu comparison:

It’s still Terraform-compatible. You can take your existing configurations and run them with OpenTofu today. But beyond compatibility, OpenTofu is already moving faster and more freely, prioritizing developer-requested features that a commercial model might not. Some key examples of its true power and longevity include:

1. Standardized distribution with OCI registries

Packaging and sharing modules or providers privately has always been clunky. You either ran your own registry or relied on Terraform Cloud.

OpenTofu solves this with OCI Registries, i.e. using the same open container standard that Docker uses.

It’s clean, familiar, and scalable.

Your modules live in any OCI-compatible registry (Harbor, Artifactory, ECR, GCR, etc.), complete with built-in versioning, integrity checks, and discoverability. No proprietary backend required.

For organizations managing hundreds of modules or providers, this is a big deal. It means your IaC supply chain can be secured and audited with the same standards you already use for container images.

2. True security with encryption at rest

Secrets in your Terraform state have always been a headache.

Even with remote backends, you’re still left with the risk of plaintext credentials or keys living inside the state file.

OpenTofu has built-in encryption at rest for state and plan files (since version 1.7), which Terraform itself does not provide.

You can define an encryption block directly in configuration, inside the top-level terraform block, where you name a key provider and an encryption method.

This encrypts the state transparently, no custom wrapper scripts or external encryption logic.

It also supports multiple key providers (AWS KMS, GCP KMS, Azure Key Vault, and more).

Coming soon in OpenTofu 1.11 (beta): ephemeral resources.

This feature lets providers mark sensitive data as transient so it never touches your state file in the first place. Paired with state encryption, that is a combination Terraform does not currently offer.

3. A community-driven future

OpenTofu’s most powerful feature isn’t in its code, it’s in its process.

Every proposal goes through a public RFC. Every contributor has a say. Every decision is archived and transparent.

If you want a feature, you can write a proposal, gather community feedback, and influence the outcome.

Contrast that with traditional vendor-driven roadmaps, where features are often prioritized by product-market fit rather than user need.

That’s what “being late to the party” really means: you miss your seat at the table where the next decade of IaC innovation is being decided.

Why you don’t want to miss this party

Being early in an open-source ecosystem isn’t about bragging rights, it’s about influence.

OpenTofu is already gaining serious traction:

- Major cloud providers and IaC platforms are integrating it.

- Contributors from across the industry are shaping releases.

- Security and compliance enhancements (like encryption and OCI support) are coming faster than ever.

If you join later, you’ll still get the code. But you won’t get the same opportunity to shape it.

The longer you wait, the more you’ll be reacting to other people’s decisions instead of helping make them.

Ready to switch? The OpenTofu migration path is smooth.

Migrating is a one-liner!

The OpenTofu migration guide shows that most users can simply install the tofu CLI and reuse their existing Terraform files, running tofu init, tofu plan, and tofu apply where they previously ran the terraform equivalents.

It’s the same commands, same workflow, but under an open license. You can even use your existing Terraform state files directly; no conversion step required.
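Because the subcommands are the same, a wrapper script can treat the CLI name as a parameter. The sketch below only builds the usual init, plan, and apply command lines for either binary; the function name and the tfplan file name are illustrative assumptions, and nothing is executed unless you pass the lists to subprocess.run yourself.

```python
from typing import List


def iac_commands(binary: str = "tofu", plan_file: str = "tfplan") -> List[List[str]]:
    """Return the init/plan/apply command lines for the chosen CLI.

    `binary` is "tofu" or "terraform"; both accept these subcommands.
    """
    if binary not in ("tofu", "terraform"):
        raise ValueError(f"unsupported binary: {binary}")
    return [
        [binary, "init"],
        [binary, "plan", f"-out={plan_file}"],  # save the plan to a file
        [binary, "apply", plan_file],           # apply exactly that saved plan
    ]


if __name__ == "__main__":
    for cmd in iac_commands("tofu"):
        print(" ".join(cmd))
```

Switching a pipeline between the two tools then comes down to changing one argument, which is a convenient way to trial OpenTofu on a single workspace first.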

For teams already managing infrastructure at scale, the move to OpenTofu doesn’t just preserve your workflow, it future-proofs it.

How Harness IaCM supports OpenTofu

When you’re ready to bring OpenTofu into a managed, collaborative environment, Harness Infrastructure as Code Management (IaCM) has you covered.

Harness IaCM natively supports both Terraform and OpenTofu. You can create a workspace, select your preferred binary, and run init, plan, and apply pipelines without changing your configurations.

That means you can:

- Experiment safely with OpenTofu while retaining version control.

- Store and share modules through a managed environment.

- Adopt OpenTofu’s features (like encryption and OCI) inside CI/CD pipelines.

- Gradually migrate Terraform workspaces without breaking production.

Harness essentially gives you the sandbox to explore OpenTofu’s potential, whether you’re testing ephemeral resource behavior or building private OCI registries for module distribution.

So while the OpenTofu community defines the standards, Harness ensures you can implement them securely and at scale.

Contribute, don’t just consume

The real magic of OpenTofu lies in participation.

If you’ve ever complained about Terraform limitations, this is your moment to shape the alternative.

You can:

- Test new features.

- Submit issues or RFCs.

- Contribute code or docs.

- Influence what the next release includes.

Everything lives in the open on the OpenTofu Repository.

Even reading a few discussions there shows how open, constructive, and fast-moving the community is.

Final thoughts

The IaC landscape is changing, and this time, the direction isn’t being set by a vendor, but by the community.

OpenTofu brings us back to the roots of open-source infrastructure: collaboration, transparency, and freedom to innovate.

It’s more than a fork, it’s a course correction.

If you’re still watching from the sidelines, remember: the earlier you join, the more your voice matters.

The OpenTofu party is already in full swing.

Grab your seat at the table, bring your ideas, and help build the future of IaC, before someone else decides it for you.

A 5-step enterprise guide to IaCM

Ever felt like managing your infrastructure is less like engineering and more like trying to herd cats through a perpetually changing obstacle course?

You’re not alone. In the glorious, chaotic world of modern IT, where microservices evolve constantly and scale pushes the limits of complexity, traditional approaches to managing infrastructure simply don’t keep pace. This is where Infrastructure as Code (IaC), and more importantly, Infrastructure as Code Management (IaCM) come in, enabling organizations to bring consistency, automation, and governance to even the most complex environments.

What is Infrastructure as Code (IaC)?

At its heart, IaC is the practice of defining and provisioning infrastructure resources (think servers, databases, networks, and all their configurations) through code. Instead of clicking endlessly through cloud provider consoles or manually configuring settings, you write declarative configuration files. These files become the single source of truth for your infrastructure. Just like your application code, these infrastructure definitions can be versioned, reviewed, tested, and deployed, bringing software development best practices to infrastructure operations.

The advantages are transformative:

- Consistency: Say goodbye to “it works on my machine” for infrastructure. IaC ensures identical environments across development, staging, and production.

- Speed & Agility: Automate the provisioning process, drastically reducing the time it takes to spin up new environments or scale existing ones.

- Reliability: Automated, repeatable deployments minimize human error. If it works once, it will work again.

- Scalability: Easily replicate and scale infrastructure resources up or down, responding to demand with unprecedented elasticity.

- Traceability & Auditability: Every change to your infrastructure is tracked in version control, providing a clear audit trail and simplifying compliance.

Why IaCM is Vital for Enterprise Infrastructure at Scale

While IaC is a monumental leap forward, simply writing code for your infrastructure isn't enough when you're operating at an enterprise scale. Imagine hundreds of teams, thousands of infrastructure resources, multiple cloud providers, and strict regulatory requirements. This is where Infrastructure as Code Management (IaCM) becomes not just beneficial, but absolutely vital.

IaCM is the overarching strategy and set of tools designed to effectively manage your IaC across the entire organization. It addresses the inherent complexities and challenges that arise when scaling IaC practices:

- The “IaC Sprawl” Problem: Without IaCM, enterprises often end up with a fragmented landscape of IaC scripts, templates, and modules scattered across various repositories, lacking standardization and central oversight. IaCM brings order to this chaos.

- Centralized Control and Visibility: IaCM provides a unified platform to manage all your IaC configurations, irrespective of the underlying tools (OpenTofu or Terraform, CloudFormation, Ansible, Kubernetes manifests, etc.). This gives you a single pane of glass for all infrastructure definitions and deployments.

- Policy Enforcement and Compliance at Scale: Enterprises operate under stringent compliance mandates and internal governance policies. IaCM allows you to define these policies as code and automatically enforce them across all infrastructure deployments. This prevents unapproved configurations, ensures security best practices, and streamlines audit processes.

- Secure Secrets Management: Infrastructure often requires access to sensitive information like API keys, database credentials, and certificates. IaCM platforms integrate with dedicated secrets management solutions, ensuring that these secrets are never hardcoded and are securely injected only when needed, minimizing exposure.

- Advanced Workflow Orchestration and Automation: Enterprise-grade deployments are rarely simple. They involve complex sequences of provisioning, testing, approvals, and rollbacks. IaCM orchestrates these intricate workflows, automating the entire deployment pipeline and providing guardrails for safe, consistent, and compliant releases.

- Cost Management and Optimization: IaCM can help track and optimize infrastructure costs by ensuring that resources are provisioned according to policies, preventing over-provisioning, and identifying orphaned resources.

- Enhanced Collaboration and Governance: IaCM facilitates secure and efficient collaboration among diverse teams (developers, operations, security) while maintaining strict governance. It ensures that changes are reviewed, approved, and deployed consistently, reducing friction and improving team synergy.

- Consistency Across Hybrid and Multi-Cloud Environments: For enterprises leveraging hybrid cloud or multi-cloud strategies, IaCM provides the necessary abstraction and management capabilities to apply consistent practices and policies across disparate environments.

Without a robust IaCM strategy, large organizations risk turning the promise of IaC into a new form of operational headache – one where inconsistencies, security gaps, and manual oversight creep back in, negating the very benefits IaC aims to deliver. IaCM elevates IaC from a technical practice to a strategic operational model, essential for controlling and managing infrastructure at enterprise scale with speed, security, and precision.

Implement IaCM at Enterprise Scale: A 5-Step Guide

Implementing IaCM across an enterprise might seem daunting, but by breaking it down into a structured approach, organizations can successfully adopt and leverage its full potential. Here’s a 5-step guide to help you get started:

Step 1: Assess Your Current State & Define Your Vision

Before you start, understand where you are.

- Audit Existing Infrastructure: Document your current infrastructure, identifying what’s already managed by IaC (if anything), what’s manual, and where inconsistencies lie.

- Identify Pain Points: Pinpoint the challenges your teams face with current infrastructure management (e.g., slow provisioning, manual errors, compliance issues, lack of visibility).

- Define Your IaCM Vision: Clearly articulate what success looks like. What are your key objectives for implementing IaCM? (e.g., 90% automated provisioning, consistent environments, centralized policy enforcement).

- Stakeholder Alignment: Engage key stakeholders from development, operations, security, and compliance to ensure buy-in and align on the vision and goals.

Step 2: Choose Your IaC Tools & Platform

Selecting the right tools is crucial, but remember, IaCM is about managing them all.

- Evaluate IaC Tools: Based on your current infrastructure and future needs, choose your primary IaC tools (e.g., OpenTofu or Terraform for infrastructure provisioning, Ansible for configuration management, Kubernetes for orchestration, CloudFormation for AWS-native stacks).

- Select an IaCM Platform: This is the heart of your enterprise strategy. Consider platforms that offer centralized control, policy enforcement, secrets management, and workflow orchestration across your chosen IaC tools. Look for features like module registries, variable sets, and GitOps integration.

- Pilot Project: Start with a small, contained project to test your chosen IaC tools and IaCM platform. This allows you to iron out initial challenges and gather valuable feedback.

Step 3: Standardize & Centralize IaC Artifacts

Bringing order to chaos is a core benefit of IaCM.

- Develop Standardized Modules & Templates: Create reusable IaC modules and templates for common infrastructure components (e.g., standard VPCs, database configurations, application environments). Store these in your IaCM platform’s module registry.

- Centralize Variable Management: Utilize your IaCM platform’s variable sets to manage environment-specific configurations and sensitive data securely, preventing hardcoding and ensuring consistency.

- Implement Version Control: Enforce strict version control (e.g., Git) for all IaC code. This provides an audit trail, enables collaboration, and supports rollback capabilities.

- Establish Naming Conventions & Tagging: Implement clear naming conventions and tagging strategies for all infrastructure resources to improve visibility, cost tracking, and governance.
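A tagging convention is easiest to keep when it is checked automatically. As a minimal sketch, the function below scans the JSON form of a plan (as produced by tofu show -json or terraform show -json) and reports resources that lack required tags. The tag names in REQUIRED_TAGS are a hypothetical policy, and the check assumes providers that expose tags under a tags attribute, as the AWS provider does.

```python
from typing import Dict, List

# Hypothetical organization policy; adjust to your own convention.
REQUIRED_TAGS = {"owner", "environment", "cost-center"}


def missing_tags(plan: dict, required=REQUIRED_TAGS) -> Dict[str, List[str]]:
    """Map resource address -> sorted list of required tags it lacks."""
    problems = {}
    for rc in plan.get("resource_changes", []):
        after = (rc.get("change") or {}).get("after")
        # Skip resources being deleted and types with no `tags` attribute.
        if after is None or "tags" not in after:
            continue
        tags = after.get("tags") or {}  # `tags` may be present but null
        missing = sorted(required - set(tags))
        if missing:
            problems[rc["address"]] = missing
    return problems
```

A pipeline step could fail the run whenever the returned mapping is non-empty and print the addresses for the author to fix.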

Step 4: Implement Policy-as-Code & Security Controls

Security and compliance are non-negotiable at the enterprise level.

- Define Policies as Code: Translate your organization’s security, compliance, and governance policies into code (e.g., using Open Policy Agent (OPA)).

- Automate Policy Enforcement: Integrate these policies into your IaCM platform to automatically enforce them during IaC deployments, preventing non-compliant infrastructure from being provisioned.

- Integrate Secrets Management: Connect your IaCM platform with an enterprise-grade secrets manager (e.g., HashiCorp Vault, AWS Secrets Manager) to securely inject credentials and sensitive data at runtime.

- Implement Role-Based Access Control (RBAC): Configure fine-grained RBAC within your IaCM platform to ensure that only authorized individuals and teams can initiate, approve, or modify infrastructure deployments.

- Drift Detection: Leverage your IaCM platform’s capabilities to detect and remediate configuration drift, ensuring that your deployed infrastructure always matches its defined state.
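Drift detection in particular can be approximated outside any platform. One common approach, sketched here under the assumption that the working directory is already initialized and the configuration is unchanged since the last apply, is to run a plan with the -detailed-exitcode flag: exit code 0 means no differences, 2 means the plan found differences, and anything else is an error. The function and enum names are illustrative.

```python
import subprocess
from enum import Enum


class PlanStatus(Enum):
    IN_SYNC = "in_sync"
    DRIFTED = "drifted"
    ERROR = "error"


def classify_exit_code(code: int) -> PlanStatus:
    """Interpret the exit code of `plan -detailed-exitcode`."""
    if code == 0:
        return PlanStatus.IN_SYNC
    if code == 2:
        return PlanStatus.DRIFTED
    return PlanStatus.ERROR


def check_drift(workdir: str, binary: str = "tofu") -> PlanStatus:
    """Run a plan in `workdir` and report whether it found differences."""
    result = subprocess.run(
        [binary, "plan", "-detailed-exitcode", "-input=false"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    return classify_exit_code(result.returncode)
```

Run on a schedule, a DRIFTED result can open a ticket or trigger a reconciliation pipeline instead of waiting for someone to notice.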

Step 5: Automate Workflows & Foster a GitOps Culture

Embrace automation and treat your infrastructure like application code.

- Automate CI/CD for Infrastructure: Integrate your IaCM platform with your existing CI/CD pipelines to fully automate the testing, approval, and deployment of IaC changes.

- Embrace GitOps: Drive all infrastructure changes through Git. Your Git repository becomes the single source of truth, and automated processes ensure that the actual infrastructure converges to the desired state defined in Git.

- Establish Review & Approval Processes: Implement peer review and automated approval gates for all IaC changes before deployment to production environments.

- Monitor & Optimize: Continuously monitor your IaCM pipelines and deployed infrastructure. Use data from your IaCM platform to identify bottlenecks, optimize costs, and refine your IaC and IaCM practices over time.

- Training & Education: Provide comprehensive training to your teams on IaC tools, IaCM platform usage, and the new GitOps-driven workflows. Foster a culture of learning and continuous improvement.
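The review and approval gates in this step can also be expressed as code. The sketch below summarizes the actions in a plan's JSON output and applies a hypothetical rule: any deletion, or any change at all to production, requires a human approval. The rule itself and the environment name are assumptions for illustration, not a recommendation for every team.

```python
from collections import Counter


def summarize_actions(plan: dict) -> Counter:
    """Count create/update/delete actions across all planned resource changes."""
    counts = Counter()
    for rc in plan.get("resource_changes", []):
        for action in rc["change"]["actions"]:
            if action not in ("no-op", "read"):
                counts[action] += 1
    return counts


def needs_manual_approval(plan: dict, environment: str) -> bool:
    """Hypothetical gate: deletions always need approval; production always does."""
    counts = summarize_actions(plan)
    if counts["delete"] > 0:
        return True
    return environment == "production" and sum(counts.values()) > 0
```

Note that a replacement appears in the plan as both a delete and a create, so replacements are treated as destructive by this rule.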

By following these steps, enterprises can systematically transition to a fully managed, automated, and compliant infrastructure environment, unlocking the true potential of Infrastructure as Code.

Take the Next Step with Harness IaCM

To truly operationalize IaCM at scale, enterprises need a platform built for governance, automation, and collaboration. Harness IaCM brings these capabilities together, enabling teams to manage infrastructure securely and efficiently across the organization.

Harness IaCM empowers teams to leverage reusable, enterprise-grade tooling designed to maximize consistency and speed, including:

- Module and Provider Registries: Centralize and share reusable infrastructure modules and providers, ensuring standardization and reducing duplication across teams.

- Workspace Templates: Define and reuse workspace configurations that maintain consistent setup and governance across all IaC projects.

- Default Pipelines: Streamline deployment workflows with preconfigured, best-practice CI/CD pipelines that accelerate infrastructure delivery.

With Harness IaCM, your organization can move beyond simply writing infrastructure code to managing it as a governed, automated, and scalable system—empowering teams to innovate faster and operate with confidence.

Confidently Ship Reusable OpenTofu and Terraform Modules

If there’s one thing we all care deeply about, it’s not fame, fortune or perfect HCL formatting; it’s reusability.

Whether you're a seasoned practitioner or new to Infrastructure as Code (IaC), reusable modules are fast becoming the backbone of modern platform engineering. That's why modern platforms introduced Module Registries, central systems for publishing and consuming OpenTofu/Terraform modules across your organization.

They promote the DRY principle ("Don't Repeat Yourself") by codifying best practices, reducing duplication, and helping teams ship faster by focusing on what’s unique to their workload.

But as teams scale, so does the risk: a misconfigured or buggy module can break dozens of environments in seconds.

Enter testing for infrastructure modules.

Real-world example: How one bug could have taken out everything

A few years ago, a platform engineering team learned a painful lesson: one bad Terraform command can destroy everything.

In that incident, a single misconfigured module and an unguarded terraform destroy wiped out an entire staging environment: dozens of services gone in minutes. Recovery took days.

Now imagine your team building a reusable VPC module. Without testing, a single overlooked bug, say, a missing region variable or a misconfigured ACL that leaves an S3 bucket public, could silently make it into your registry. Every environment using that module would be exposed.

Here’s how to prevent it:

Before publishing, the platform team runs an integration pipeline that provisions a real test workspace with actual cloud credentials. On the first run, the missing region is caught. On the second, the public S3 bucket is flagged. Both are fixed before the module ever touches the registry.

The single step of testing modules in isolation before release turns potential outages into harmless build failures, protecting every downstream environment.
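The two bugs in this example can also be caught by cheap checks that run before the integration pipeline provisions anything. The following is a rough sketch, not the pipeline described above: it assumes you have the module's variable declarations as a dictionary and the plan in JSON form, and it treats the canned ACLs public-read and public-read-write on aws_s3_bucket_acl resources as public exposure. All function names are hypothetical.

```python
PUBLIC_ACLS = {"public-read", "public-read-write"}


def missing_required_inputs(declared: dict, supplied: dict) -> list:
    """Names of variables that have no default and were not supplied.

    `declared` maps variable name -> its declaration as a dict.
    """
    return sorted(
        name
        for name, spec in declared.items()
        if "default" not in spec and name not in supplied
    )


def public_buckets(plan: dict) -> list:
    """Addresses of planned aws_s3_bucket_acl resources with a public canned ACL."""
    flagged = []
    for rc in plan.get("resource_changes", []):
        after = (rc.get("change") or {}).get("after") or {}
        if rc.get("type") == "aws_s3_bucket_acl" and after.get("acl") in PUBLIC_ACLS:
            flagged.append(rc["address"])
    return flagged
```

Static checks like these are faster than a real apply, but they only see what the plan declares; the integration run is still what confirms the module behaves correctly against a real cloud account.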

Why test your modules?

When you publish a shared module to your registry, you're trusting that it works now, and will continue to work later. Without dedicated testing, it's easy to miss:

- Required variables that aren't defined

- Breaking changes introduced by new commits

- Inadvertent updates that change resource behaviour across all consumers

- Cross-module dependency conflicts

- Security misconfigurations that expose resources publicly

Testing modules addresses these risks by validating them in isolation before they’re promoted to the registry.

How module tests work

A dedicated Integration pipeline is added to your module’s development branch. This pipeline:

- Provisions a real test environment.

- Executes real init, plan, and apply commands using OpenTofu (or Terraform).

- Validates cross-module compatibility and runtime behaviour.

✅ Tip: You define test inputs just like consumers would, using actual variables, connectors, and real infrastructure.

Only after the module passes this pipeline should it be promoted to the main branch and published.

Key Benefits

- Confidence in Reuse: Prevent broken or misconfigured modules from being published to your shared registry

- Safe Promotion Workflow: Validate modules in feature branches before promoting to main

- Catch Critical Misconfigurations: Detect missing variables, logic bugs, or public exposure risks early

- Governance and Drift Control: Enforce testing standards for modules, protecting downstream workspaces

- Auditable Changes: Avoid disaster-level rollouts by validating before any code touches production

- Encourages Reusability: With confidence in test coverage, teams are more likely to standardize on shared modules

CI/CD Integration and Testing Approaches

Testing modules complements traditional IaC testing techniques such as:

- tflint, validate: Syntax, formatting, and static checks

- Terratest: Unit tests & mocks

- Checkov, tfsec: Static security scanning

- Registry testing

- Real-world integration and runtime testing

Example CI/CD flow:

The integration testing stage spins up real infrastructure to validate that your modules work as expected before they reach production consumers.
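One way to picture such a flow is as an ordered list of stages that stops at the first failure, so a module is never published unless every earlier stage passed. The stage names and their order below are an assumption drawn from the techniques listed above, and the lambdas stand in for real tool invocations.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure; return an outcome log."""
    log = []
    for name, check in stages:
        ok = check()
        log.append(f"{name}: {'passed' if ok else 'failed'}")
        if not ok:
            break  # later stages, including publishing, never run
    return log


if __name__ == "__main__":
    # Placeholder checks; replace each lambda with a real tool call.
    example = [
        ("lint and validate", lambda: True),
        ("static security scan", lambda: True),
        ("integration test", lambda: False),
        ("publish to registry", lambda: True),
    ]
    for line in run_pipeline(example):
        print(line)
```

Ordering the cheap static stages first keeps feedback fast and avoids paying for real infrastructure when a lint error would have failed the run anyway.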

Advanced Use Cases

Multi-Environment Testing

Test your modules across different cloud regions or account configurations to ensure portability:
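A simple way to organize this is a test matrix: every combination of region and account becomes one test workspace. The sketch below generates that matrix; the region names, account names, and the workspace naming scheme are hypothetical.

```python
from itertools import product
from typing import Dict, List


def build_test_matrix(regions: List[str], accounts: List[str]) -> List[Dict[str, str]]:
    """Return one test case per (region, account) combination."""
    return [
        {
            "region": region,
            "account": account,
            "workspace": f"modtest-{account}-{region}",  # hypothetical naming scheme
        }
        for region, account in product(regions, accounts)
    ]


if __name__ == "__main__":
    for case in build_test_matrix(["us-east-1", "eu-west-1"], ["sandbox-a", "sandbox-b"]):
        print(case["workspace"])
```

Each entry can then be handed to a parallel pipeline job, which keeps total run time close to that of the slowest single environment.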

Dependency Chain Validation

Test complex module hierarchies where modules depend on outputs from other modules:
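Before provisioning a chain of modules, it helps to compute a safe apply order and to reject circular dependencies outright. This sketch uses Python's standard graphlib module (Python 3.9 or later); the module names in the usage example are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set


def apply_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return modules ordered so each comes after the modules it depends on.

    `dependencies` maps a module to the set of modules whose outputs it consumes.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    deps = {
        "app": {"database", "network"},
        "database": {"network"},
        "network": set(),
    }
    print(apply_order(deps))
    try:
        apply_order({"a": {"b"}, "b": {"a"}})
    except CycleError:
        print("circular dependency detected")
```

The same ordering, reversed, gives a safe teardown sequence for the test environment.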

Compliance and Security Validation

Integrate security scanning directly into your integration tests:
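However the scanner is invoked, the pipeline needs a rule for which findings block a release. The sketch below assumes the scanner output has already been normalized into a list of dictionaries with id and severity keys; that shape is a hypothetical simplification, not the native output of Checkov or tfsec. Unknown severities are treated as blocking so that a parsing surprise fails safe.

```python
from typing import Dict, List

SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(findings: List[Dict[str, str]], threshold: str = "HIGH") -> List[Dict[str, str]]:
    """Return findings at or above `threshold`; unknown severities count as critical."""
    limit = SEVERITY_RANK[threshold]
    return [
        finding
        for finding in findings
        if SEVERITY_RANK.get(finding["severity"].upper(), SEVERITY_RANK["CRITICAL"]) >= limit
    ]
```

An integration test can then fail when blocking_findings returns a non-empty list, while lower-severity findings are reported without stopping the run.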

Rollback Testing

Validate that module updates can be safely rolled back by testing both upgrade and downgrade paths.

Implementation Considerations

Setting up tests for your modules requires some initial overhead, but the investment pays dividends as your module ecosystem grows:

Resource Costs: Integration tests provision real infrastructure, so factor in cloud costs for test environments. Use short-lived resources and automated cleanup to minimize expenses.

Test Environment Management: Establish dedicated sandbox accounts or subscriptions for integration testing to avoid conflicts with production resources.

Pipeline Execution Time: Real infrastructure provisioning takes longer than unit tests, so optimize your pipeline for parallel execution where possible.

Where to find Module Registry and Module Testing

Testing modules is becoming a core best practice in the OpenTofu ecosystem. But finding a platform that natively integrates registry management and test pipelines can be challenging.

Harness Infrastructure as Code Management (IaCM) combines the two:

- ✅ Manage reusable modules with a built-in Module Registry

- ✅ Validate them using Integration pipelines before publishing

- ✅ Promote only tested, trusted modules to your consumers

Check out how to create and register your IaC modules and configure module tests to get started with pipeline setup and test inputs.

Conclusion

If you value stability, reusability, and rapid iteration, then testing your modules is more than a nice-to-have; it’s your safeguard against chaos.

By combining traditional CI/CD validation with real infrastructure testing, you get the best of both worlds: fast feedback and real-world assurance.

Start small. Iterate. And as your registry grows, let testing give you the confidence to scale.

Harness Expands Infrastructure as Code Management with Powerful Reusability Features for Greater Scalability

When we launched Harness Infrastructure as Code Management (IaCM), our goal was clear: help enterprises scale infrastructure automation without compromising on governance, consistency, or developer velocity. One year later, we’re proud of the progress we’ve made in delivering this solution, with unmatched capabilities for templatization and enterprise scalability.

Today we’re announcing a major expansion of Harness IaCM with two new features: Module Registry and Workspace Templates. Both are designed to drive repeatability, security, and control with a common foundation: reusability.

In software development we talk quite a bit about the DRY principle, aka “Don’t Repeat Yourself.” These new capabilities bring that mindset to infrastructure, giving teams the tools to define once and reuse everywhere with built-in governance.

Bringing Reusability to Infrastructure

During customer meetings one theme came up over and over again – the need to define infrastructure once and reuse it across the platform in a secure and consistent manner, at scale. Our latest expansion of Harness IaCM was built to solve exactly that.

The DRY principle has long been a foundational best practice in software engineering. Now, with the launch of Module Registry and Workspace Templates, we’re bringing the same mindset to infrastructure – enabling platform teams to adopt a more standardized approach while reducing risk.

From a security and compliance perspective, these features allow teams to define infrastructure patterns once, test them thoroughly, and then reuse them with confidence across teams and environments. This massively improves consistency across teams and reduces the risk of human error — without slowing down delivery.

Here’s how each feature works.

Module Registry: Centralized Reusability for Tested Infrastructure Modules

Module Registry empowers users to create, share, and manage centrally stored “golden templates” for infrastructure components. By registering modules centrally, teams can:

- Reuse proven infrastructure patterns across projects without repeating code.

- Accelerate deployments by giving developers access to pre-approved and well-tested modules.

- Enforce governance through centralized oversight, ensuring only compliant, secure modules are used.

By making infrastructure components standardized, discoverable, and governed from a single location, Module Registry dramatically simplifies complexity and empowers teams to focus on building value, not reinventing the wheel.

The potential is already generating excitement among early adopters:

"The new Module Registry is exactly what we need to scale our infrastructure standards across teams,” said John Maynard, Director of Platform Engineering at PlayQ. “Harness IaCM has already helped us cut provisioning times dramatically – what used to take hours in Terraform Cloud now takes minutes – and with Module Registry, we can drive even more consistency and efficiency."

With Module Registry, we’re not just improving scalability, we’re simplifying the way teams manage their infrastructure.

Workspace Templates: Standardized Blueprints for Every New Project

Workspace Templates allow teams to predefine essential variables, configuration settings, and policies as reusable templates. When new workspaces are created, this approach:

- Enables teams to “start from template” and quickly spin up new projects with consistent, organization-approved settings.

- Reduces manual effort and accelerates onboarding by eliminating repetitive setup tasks and avoiding common misconfigurations.

- Keeps standards current by ensuring that any updates or improvements to a template automatically apply to all new workspaces created from it.
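Conceptually, creating a workspace from a template is a merge of organization defaults with per-workspace overrides, with some settings locked against change. The sketch below illustrates that idea only; the dictionary layout, the locked list, and the setting names are hypothetical and do not represent the Harness API.

```python
from typing import Any, Dict


def render_workspace(template: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Merge template defaults with overrides, rejecting changes to locked settings."""
    locked = set(template.get("locked", []))
    illegal = locked & set(overrides)
    if illegal:
        raise ValueError(f"cannot override locked settings: {sorted(illegal)}")
    # Overrides win over defaults for every setting that is not locked.
    return {**template["defaults"], **overrides}


if __name__ == "__main__":
    template = {
        "defaults": {"region": "us-east-1", "tofu_version": "1.10.0", "cost_estimation": True},
        "locked": ["cost_estimation"],
    }
    print(render_workspace(template, {"region": "eu-west-1"}))
```

Keeping the locked list in the template is what lets a platform team guarantee a setting across every new workspace while still leaving room for project-specific values.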

By embedding best practices into every new project, Workspace Templates help teams move faster while maintaining alignment, control, and repeatability across the organization.

How IaCM Has Transformed Infrastructure Management

Traditional Infrastructure as Code (IaC) solutions laid the foundation for how teams manage their cloud resources. But as organizations scale, many run into bottlenecks caused by complexity, drift, and fragmented tooling. Without built-in automation, repeatability, and visibility, teams struggle to maintain reliable infrastructure across environments.

Harness IaCM was built to solve these challenges. As a proud sponsor and contributor to the OpenTofu community, Harness also supports a more open, community-driven future for infrastructure as code. IaCM builds on that foundation with enterprise-grade capabilities like:

- Policy-aware pipelines that bake in governance and security from the start

- Change review workflows that catch issues early and enable safe collaboration

- Developer self-service with automated guardrails

- Reusable templates, modules, and variable sets that reduce duplication and enforce standards

- Tight integration with Harness CI/CD and Cloud Cost Management for full lifecycle automation and insight

Together, these capabilities help teams to:

- Move faster with flexible, standardized infrastructure workflows

- Minimize risk by enforcing best practices automatically

- Accelerate developer productivity without compromising on controls

- Streamline operations while maintaining visibility and accountability across teams

Since its GA launch last year, Harness IaCM has gained strong traction with several dozen enterprise customers already on board – including multiple seven-figure deals. In financial services, one customer is managing dozens of workspaces using just a handful of templates, with beta users averaging more than 10 workspaces per template. In healthcare, another team now releases 100% of their modules with pre-configured tests, dramatically improving reliability. And a major banking customer has scaled to over 4,000 workspaces in just six months, enabled by standardization and governance patterns that drive consistency and confidence at scale.

With a focus on automation, reusability and visibility, Harness IaCM is helping enterprise teams rethink how they manage and deliver infrastructure at scale.

What’s Next for IaCM?

Harness’ Infrastructure as Code Management (IaCM) was built to address a massive untapped opportunity: to merge automation with deep capabilities in compliance, governance, and operational efficiency and create a solution that redefines how infrastructure code is managed throughout its lifecycle. Since launch, we’ve continued to invest in that vision – adding powerful features to drive consistency, governance, and speed. And we’re just getting started.

As we look ahead, we’re expanding IaCM in three key areas:

- Expanded Support for IaC Tools: We’re expanding support to tools like Ansible and Terragrunt. Teams can manage infrastructure provisioning, configuration, and application deployment all within a single Harness pipeline.

- Standardization at Scale: We’re rolling out reusable variable sets and a centralized provider registry to make it easier for teams to standardize configuration and onboard new projects quickly.

- Developer Experience: We’re significantly improving how teams create and manage ephemeral workspaces for testing, iteration, and experimentation in isolated, secure environments.

I invite you to sign up for a demo today and see firsthand how Harness IaCM is helping organizations scale infrastructure with greater speed, consistency, and control.

Fidelity's OpenTofu Migration: A DevOps Success Story Worth Studying

For Fidelity Investments, HashiCorp's move to license Terraform under the Business Source License (BSL), and the community's swift response of creating an open-source fork, OpenTofu, under the Linux Foundation, raised immediate questions. As an organization deeply committed to open source principles, Fidelity found that moving from Terraform to OpenTofu aligned with its strategic values. They weren't just avoiding license restrictions; they were embracing a community-driven future for infrastructure automation.

What makes their story remarkable isn't just the scale (though managing 50,000+ state files is impressive), but how straightforward the migration proved to be. Because OpenTofu is a true drop-in replacement for Terraform, Fidelity's challenge was organizational, not technical. Their systematic approach offers lessons for any enterprise considering the move to OpenTofu—or tackling any major infrastructure change.

Let me walk you through what they did, because there are insights here that extend far beyond tool migration.

The Scale Challenge

First, let's appreciate what Fidelity was dealing with:

- 2,000+ applications

- 50,000+ state files

- 4+ million individual resources

- 4,000+ daily state file updates

This isn't a side project. This is production infrastructure that keeps a financial services giant running. Any misstep ripples through the entire organization.

Six-Phase Migration Strategy

Phase 1: Rigorous POC

They didn't start with faith; they started with evidence. The key question wasn't "Does OpenTofu work?" but "Does it work with our existing CI/CD pipelines and artifact management?"

The answer was yes, confirming what many of us suspected: OpenTofu really is a drop-in replacement for Terraform.

Phase 2: Lighthouse Project

Here's where theory meets reality. Fidelity took an internal IaC platform application, converted it to OpenTofu, and deployed it to production. Not staging. Production.

This lighthouse approach is brilliant because it surfaces the unknown unknowns before they become organization-wide problems.

Phase 3: Building Consensus

You can't mandate your way through a migration of this scale. Fidelity invested heavily in socializing the change, presenting pros and cons honestly, engaging with key stakeholders, and targeting their biggest Terraform users for early buy-in.

Phase 4: Enablement Infrastructure

Migration success isn't just about the technology—it's about the people using it. Fidelity built comprehensive support structures, including tooling, documentation, and training, to ensure developers had everything they needed to succeed.

Phase 5: Transparent Progress Tracking

They made migration progress visible across the organization. Data-driven approaches build confidence. When people can see momentum, they're more likely to participate.

Phase 6: Default Switch

Once confidence was high, they made OpenTofu the default CLI, consolidated versions, and deprecated older Terraform installations.

Bonus: They branded their internal IaC services as "Bento"—creating a unified identity for standardized pipelines and reusable modules. Sometimes organizational psychology matters as much as the technology.

Key Insights

OpenTofu delivers on its compatibility promise. The migration effort focused on infrastructure pipeline adaptation, not massive code rewrites. This validates what the OpenTofu community has been saying—it really is a drop-in replacement that makes migration far simpler than switching between fundamentally different tools.

Shared pipelines are a force multiplier. Central pipeline changes benefited multiple teams simultaneously. This is why standardization matters—it creates leverage and makes organization-wide changes manageable.

CLI version consistency is crucial. Consolidating Terraform versions before migration eliminated a major source of friction. This organizational discipline paid dividends during the actual transition.

Open source alignment was deeply strategic. This wasn't just about licensing costs—Fidelity wanted to contribute to the OpenTofu community and actively shape IaC's future. They're now part of building the tools they depend on, rather than just consuming them.

The Broader Context

Fidelity's success illustrates how straightforward OpenTofu migration can be when approached systematically. The real work wasn't rewriting infrastructure code—it was organizational: building consensus, creating enablement, measuring progress.

This validates a key point about OpenTofu: because it maintains compatibility with Terraform, the traditional migration pain points (syntax changes, feature gaps, learning curves) simply don't exist. Organizations can focus on process and adoption rather than technical rewrites.

The shift to OpenTofu represents more than just avoiding HashiCorp's licensing restrictions. It's about participating in a community-driven future for infrastructure automation—something that clearly resonated with Fidelity's open source values.

What This Means for You

If you're managing infrastructure at scale, Fidelity's playbook offers a proven path for OpenTofu migration. The key insight? Because OpenTofu is compatible with Terraform, your migration complexity is organizational, not technical. Focus on consensus-building, phased adoption, and comprehensive enablement rather than worrying about code rewrites.

For organizations committed to open source principles, the choice becomes even clearer. OpenTofu offers the same functionality with the added benefit of community control and transparent development. You're not just getting a tool—you're joining an ecosystem where you can influence the future of infrastructure automation.

The infrastructure automation landscape is evolving toward community-driven solutions. Organizations like Fidelity aren't just adapting to this change; they're leading it. Their migration proves that moving to OpenTofu isn't just possible at enterprise scale; with the right approach, it's surprisingly straightforward.

Worth studying, worth emulating and worth making the move.

At Harness, we offer our Infrastructure-as-Code Management customers guidance and services to streamline their migration from Terraform to OpenTofu if that's part of their plans. To learn more about that, please contact us.

The Four Stages of Infrastructure Automation

Infrastructure management has undergone a radical transformation in the past decade. Gone are the days of manual server configuration and endless clicking through cloud provider consoles. Today, we're witnessing a renaissance of infrastructure management, driven by Infrastructure as Code (IaC) tools like OpenTofu.

The Origin Story: Why Automation Matters

Imagine a world where deploying infrastructure was like assembling furniture without instructions. Each engineer would interpret the blueprint differently, leading to inconsistent, fragile systems. This was the reality before IaC. OpenTofu emerged as a community-driven solution to standardize and simplify infrastructure deployment, offering a declarative approach that treats infrastructure like software.

Stage 1: Version Control and Basic Automation

The first stage of infrastructure automation is about bringing structure and repeatability to deployments. Here, teams transition from manual configurations to storing infrastructure definitions in version-controlled repositories.

Picture a development team where infrastructure changes are no longer mysterious, one-off events. Instead, every network configuration and every server setup becomes a traceable, reviewable piece of code. Pull requests become the new change management meetings, with automated checks validating proposed infrastructure modifications before they touch production.

Collaborative Infrastructure Workflows

Version control integration transformed infrastructure management. Suddenly, infrastructure changes became collaborative, transparent processes where team members could review, comment, and validate complex system modifications before deployment. By treating infrastructure code like application code, organizations created more reliable, predictable deployment mechanisms.

This approach allows teams to:

- Implement peer review processes for infrastructure changes

- Create audit trails for all infrastructure modifications

- Reduce risk through collaborative validation

- Ensure consistency across deployment environments

Stage 2: Specialized Infrastructure Pipelines

As organizations mature, they move beyond basic automation to create sophisticated, environment-specific deployment strategies. This isn't just about deploying infrastructure—it's about creating intelligent, context-aware deployment mechanisms.

Custom workflows emerge, allowing teams to:

- Enforce granular access controls

- Create environment-specific deployment gates

- Integrate sophisticated security and compliance checks

- Automate complex multi-stage infrastructure rollouts
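To make the idea of environment-specific deployment gates concrete, here is a minimal Python sketch. The environment names, the `GATES` table, and the `ChangeRequest` fields are all hypothetical and chosen for illustration; a real pipeline would read these rules from its own configuration rather than from a hard-coded dictionary.

```python
from dataclasses import dataclass

# Hypothetical gate rules per environment (illustrative values only).
GATES = {
    "dev": {"requires_approval": False, "allow_destroy": True},
    "staging": {"requires_approval": False, "allow_destroy": False},
    "prod": {"requires_approval": True, "allow_destroy": False},
}


@dataclass
class ChangeRequest:
    environment: str
    approved: bool
    destroys_resources: bool


def gate_failures(request: ChangeRequest) -> list[str]:
    """Return the reasons a change is blocked; an empty list means it may proceed."""
    rules = GATES.get(request.environment)
    if rules is None:
        # Unknown environments are blocked rather than silently allowed.
        return [f"unknown environment: {request.environment}"]
    failures = []
    if rules["requires_approval"] and not request.approved:
        failures.append("approval required")
    if request.destroys_resources and not rules["allow_destroy"]:
        failures.append("destroy not allowed")
    return failures
```

Returning a list of reasons, rather than a single boolean, lets the pipeline report every failed gate at once instead of stopping at the first one.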

Stage 3: Advanced Infrastructure Orchestration

Here's where things get interesting. Advanced teams start thinking about infrastructure not as monolithic blocks, but as dynamic, interconnected microservices. Infrastructure becomes adaptable, scalable, and increasingly intelligent.

Imagine infrastructure that can:

- Dynamically provision resources based on real-time demand

- Automatically scale and reconfigure based on workload

- Implement self-healing mechanisms that detect and correct configuration drift
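The drift-detection part of that list can be sketched in a few lines of Python. This is an illustration only: it assumes the declared and observed configuration of a resource are available as plain dictionaries, whereas real tooling would typically obtain them by comparing code and state against the live environment. The function names and the `MISSING` sentinel are hypothetical.

```python
# Sentinel marking an attribute that is absent on one side (hypothetical helper).
MISSING = object()


def detect_drift(desired: dict, actual: dict) -> dict:
    """Map each drifted attribute name to its (desired, actual) pair."""
    drift = {}
    for key in sorted(set(desired) | set(actual)):
        want = desired.get(key, MISSING)
        have = actual.get(key, MISSING)
        if want != have:
            drift[key] = (want, have)
    return drift


def remediation_steps(drift: dict) -> list[str]:
    """Describe how to bring the actual configuration back to the declared one."""
    steps = []
    for key, (want, _have) in drift.items():
        if want is MISSING:
            # The attribute exists in reality but is not declared in code.
            steps.append(f"remove {key}")
        else:
            steps.append(f"set {key} to {want!r}")
    return steps
```

A "self-healing" loop would run the detection on a schedule and either apply the remediation steps automatically or open a change for review, depending on how much autonomy the team is comfortable with.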

Stage 4: Governance and Self-Service Platforms

The final stage represents the holy grail of infrastructure management: a fully self-service model with robust governance and compliance mechanisms.

Security and Compliance as Code

Open Policy Agent (OPA) policies transform compliance from a bureaucratic nightmare into an automated, programmable process. Instead of lengthy approval meetings, organizations can now encode compliance requirements directly into their infrastructure deployment pipelines.
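As a rough illustration of what "compliance as code" means in practice, the sketch below checks a plan for resources that lack required tags. Real OPA policies are written in Rego, not Python; this example only mirrors the idea. It assumes the plan has been exported as JSON with a `resource_changes` list whose entries carry `address`, `change.actions`, and `change.after`, and the required tag names are hypothetical.

```python
def policy_violations(plan: dict, required_tags: set[str]) -> list[str]:
    """Return one message per created or updated resource missing a required tag."""
    violations = []
    for resource in plan.get("resource_changes", []):
        change = resource.get("change", {})
        actions = change.get("actions", [])
        # Only resources that will exist after the apply need to comply.
        if "create" not in actions and "update" not in actions:
            continue
        after = change.get("after") or {}
        tags = after.get("tags") or {}
        missing = sorted(required_tags - set(tags))
        if missing:
            address = resource.get("address", "<unknown>")
            violations.append(f"{address}: missing tags {', '.join(missing)}")
    return violations
```

A pipeline step could fail the run whenever this list is non-empty, which replaces a manual review with a repeatable, versioned rule.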

Cost Management and Reliability

Advanced platforms now offer:

- Predictive infrastructure cost modeling

- Automatic drift detection

- Real-time compliance monitoring

- Comprehensive audit trails

The OpenTofu Advantage

While Terraform pioneered this space, OpenTofu represents the next evolution. As a community-driven, open-source alternative, it offers:

- Greater transparency

- Community-powered innovation

- Reduced vendor lock-in

- Faster iterative improvements

Conclusion: The Future of Infrastructure Management

Infrastructure automation is no longer a luxury—it's a strategic imperative. By embracing tools like OpenTofu, organizations can transform infrastructure from a cost centre to a competitive advantage.

Explore Harness Infrastructure as Code Management and discover the benefits of IaCM tooling that integrates seamlessly with CI/CD pipelines, Cloud Cost Management, vulnerability scanning through Security Testing Orchestration, and much more, all under one roof.

Unlocking Cloud Efficiency: IaCM Meets Cost Management

Managing and predicting cloud costs is challenging in today's dynamic cloud environments, especially when infrastructure is provisioned and modified frequently. Many organizations struggle to maintain visibility into their cloud spending, which can lead to budget overruns and financial inefficiencies.

Integrating Infrastructure as Code (IaC) practices with robust cost management tools can provide a solution to these challenges. By enabling cost estimates and enforcing budgetary policies at the planning stage of infrastructure changes, teams can gain greater visibility and control over their cloud expenses. This approach not only helps in avoiding surprise costs but also ensures that resources are used efficiently and aligned with business goals.
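A simplified Python sketch shows how a cost estimate and a budget check at the planning stage might fit together. The price table is entirely hypothetical (real figures would come from a pricing source), and the plan is assumed to be a JSON document with a `resource_changes` list where each entry has a `type` and a `change.actions` list. This is not how any particular product computes costs.

```python
# Hypothetical flat monthly prices in USD per resource type (illustrative only).
MONTHLY_PRICE_USD = {
    "aws_instance": 30.0,
    "aws_db_instance": 120.0,
}


def estimate_monthly_delta(plan: dict, prices: dict) -> float:
    """Estimate the change in monthly spend implied by a plan."""
    delta = 0.0
    for resource in plan.get("resource_changes", []):
        price = prices.get(resource.get("type"), 0.0)
        actions = resource.get("change", {}).get("actions", [])
        # A replacement lists both actions, so its cost nets out to zero here.
        if "create" in actions:
            delta += price
        if "delete" in actions:
            delta -= price
    return delta


def within_budget(current_spend: float, delta: float, budget: float) -> bool:
    """Return True when the projected spend stays at or below the budget."""
    return current_spend + delta <= budget
```

Running a check like this before the apply step is what turns a budget from an after-the-fact report into a gate on the change itself.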

How Do IaC and Cost Management Help?

Infrastructure as Code Management (IaCM): IaCM allows teams to define, provision, and manage cloud resources using code, making infrastructure changes repeatable and consistent. This method of managing infrastructure comes with the added benefit of predictability. By incorporating cost estimation directly into the IaC workflow, teams can preview the financial impact of proposed changes before they are applied. This capability is crucial for planning and budgeting, enabling organizations to avoid costly surprises and make data-driven decisions about infrastructure investments.