Featured Blogs

At Harness, we know developer velocity depends on everyday workflow. That is why we reimagined Harness Code with a faster, cleaner, and more intuitive experience that helps engineers stay in flow from the first clone to the final merge.

Why Developers Love the New Experience

Smarter Pull Request Reviews

Review diffs and conversations without constant context switching. Inline comments, keyboard shortcuts, and faster file rendering help you focus on the code instead of the clicks.

Faster File Tree and Change Listing

The new file browser is optimized for large repositories. You can search, jump, and scan changes instantly even when working with thousands of files.

Seamless Repo Navigation

Move between branches, commits, and repositories without losing your scroll position or comment state.

Unified Harness Design System

The entire interface now uses the same design system as the rest of the Harness platform, which reduces the learning curve and makes navigation feel natural.

Why Leaders Should Care

Every inefficiency in the developer experience is a hidden tax on velocity. Harness Code removes those blockers so your teams:

- Ship faster by reducing wasted time in code reviews.

- Collaborate better with a clear, intuitive UI that scales across teams.

- Standardize workflows with a design system that unifies the Harness platform.

All 500-plus Harness engineers are already using the new experience, proving it scales in real enterprise environments.

Seamless Rollout, Zero Migration

Adopting the new experience is effortless:

- Beginning of October 2025: Available for all users (opt-in).

- End of December 2025: Legacy UI deprecated.

- Beginning of January 2026: New experience becomes default.

There is nothing to migrate. Simply click 'Opt In', and your repositories, permissions, and integrations will continue to work as before.

What’s Next

The new Harness Code experience is only the beginning. Coming soon:

- Even faster repo load times.

- More native AI support for PR reviews and commit insights.

We’re continuing to invest in developer-first features that make Harness Code not just a repository, but the heartbeat of your software delivery pipeline.

Try It Today

If you have been looking for a modern, developer-first alternative to GitHub or GitLab that integrates directly with your CI/CD pipelines, now is the time to try it.

👉 Start your Harness Code trial today and experience a repo that helps you move faster and deliver more.

Learn more: Workflow Management, What Is a Developer Platform


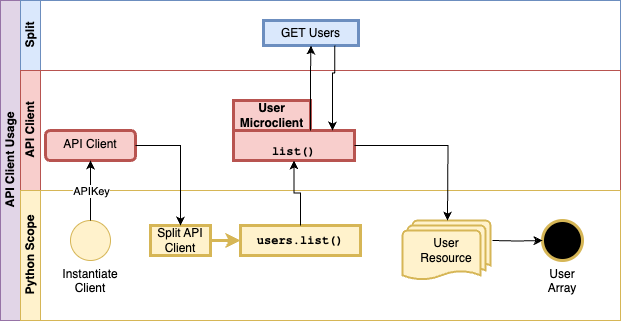

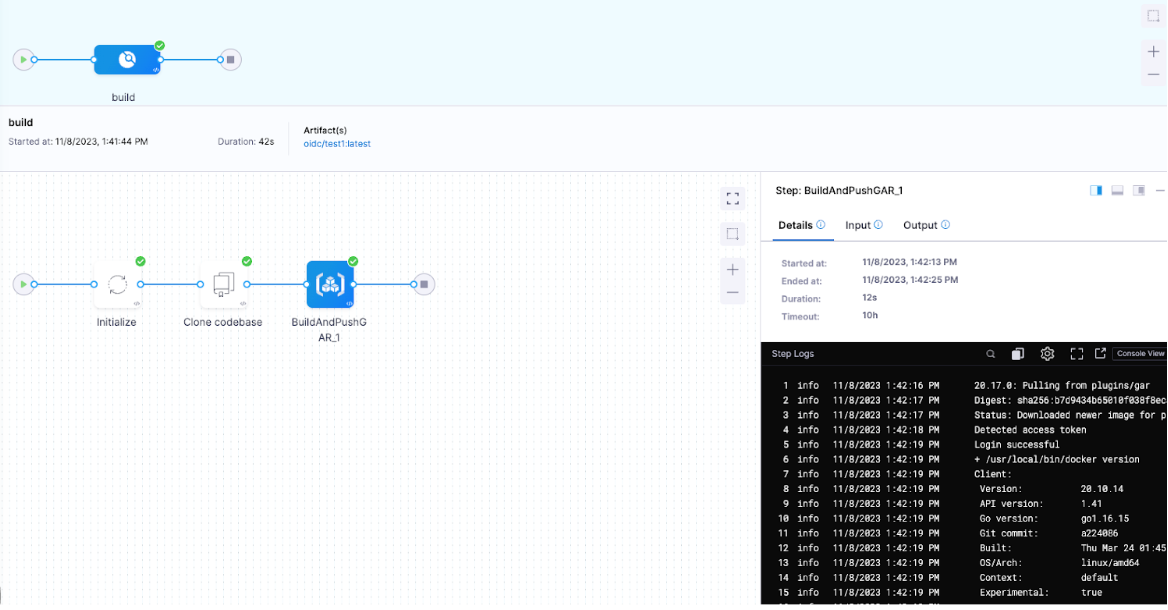

Harness Cloud is a fully managed Continuous Integration (CI) platform that allows teams to run builds on Harness-managed virtual machines (VMs) pre-configured with tools, packages, and settings typically used in CI pipelines. In this blog, we'll dive into the four core pillars of Harness Cloud: Speed, Governance, Reliability, and Security. By the end of this post, you'll understand how Harness Cloud streamlines your CI process, saves time, ensures better governance, and provides reliable, secure builds for your development teams.

Faster Builds

Harness Cloud delivers blazing-fast builds on multiple platforms, including Linux, macOS, Windows, and mobile operating systems. With Harness Cloud, your builds run in isolation on pre-configured VMs managed by Harness. This means you don’t have to waste time setting up or maintaining your infrastructure. Harness handles the heavy lifting, allowing you to focus on writing code instead of waiting for builds to complete.

The speed of your CI pipeline is crucial for agile development, and Harness Cloud gives you just that—quick, efficient builds that scale according to your needs. With starter pipelines available for various programming languages, you can get up and running quickly without having to customize your environment.

Streamlined Governance

One of the most critical aspects of any enterprise CI/CD process is governance. With Harness Cloud, you can rest assured that your builds are running in a controlled environment. Harness Cloud makes it easier to manage your build infrastructure with centralized configurations and a clear, auditable process. This improves visibility and reduces the complexity of managing your CI pipelines.

Harness also gives you access to the latest features as soon as they’re rolled out. This early access enables teams to stay ahead of the curve, trying out new functionality without worrying about maintaining the underlying infrastructure. By using Harness Cloud, you're ensuring that your team is always using the latest CI innovations.

Reliable and Scalable Infrastructure

Reliability is paramount when it comes to build systems. With Harness Cloud, you can trust that your builds are running smoothly and consistently. Harness manages, maintains, and updates the virtual machines (VMs), so you don't have to worry about patching, system failures, or hardware-related issues. This hands-off approach reduces the risk of downtime and build interruptions, ensuring that your development process is as seamless as possible.

By using Harness-managed infrastructure, you gain the peace of mind that comes with a fully supported, reliable platform. Whether you're running a handful of builds or thousands, Harness ensures they’re executed with the same level of reliability and uptime.

Robust Security

Security is at the forefront of Harness Cloud. With Harness managing your build infrastructure, you don't need to worry about the complexities of securing your own build machines. Harness ensures that all the necessary security protocols are in place to protect your code and the environment in which it runs.

Harness Cloud's commitment to security includes achieving SLSA Level 3 compliance, which ensures the integrity of the software supply chain by generating and verifying provenance for build artifacts. This compliance is achieved through features like isolated build environments and strict access controls, ensuring each build runs in a secure, tamper-proof environment.

For details, read the blog An In-depth Look at Achieving SLSA Level-3 Compliance with Harness.

Harness Cloud also enables secure connectivity to on-prem services and tools, allowing teams to safely integrate with self-hosted artifact repositories, source control systems, and other critical infrastructure. By leveraging Secure Connect, Harness ensures that these connections are encrypted and controlled, eliminating the need to expose internal resources to the public internet. This provides a seamless and secure way to incorporate on-prem dependencies into your CI workflows without compromising security.

Next Steps

Harness Cloud makes it easy to run and scale your CI pipelines without the headache of managing infrastructure. By focusing on the four pillars—speed, governance, reliability, and security—Harness ensures that your development pipeline runs efficiently and securely.

Harness CI and Harness Cloud give you:

✅ Blazing-fast builds—8X faster than traditional CI solutions

✅ A unified platform—Run builds on any language, any OS, including mobile

✅ Native SCM—Harness Code Repository is free and comes packed with built-in governance & security

If you're ready to experience a fully managed, high-performance CI environment, give Harness Cloud a try today.


As software projects scale, build times often become a major bottleneck, especially when using tools like Bazel. Bazel is known for its speed and scalability, handling large codebases with ease. However, even the most optimized build tools can be slowed down by inefficient CI pipelines. In this blog, we’ll dive into how Bazel’s build capabilities can be taken to the next level with Harness CI. By leveraging features like Build Intelligence and caching, Harness CI helps maximize Bazel's performance, ensuring faster builds and a more efficient development cycle.

How Harness CI Enhances Bazel Builds

Harness CI integrates seamlessly with Bazel, taking full advantage of its strengths and enhancing performance. The best part? As a user, you don’t have to provide any additional configuration to leverage the build intelligence feature. Harness CI automatically configures the remote cache for your Bazel builds, optimizing the process from day one.

Build Intelligence: Speeding Up Bazel Builds

Harness CI’s Build Intelligence ensures that Bazel builds are as fast and efficient as possible. While Bazel has its own caching mechanisms, Harness CI takes this a step further by automatically configuring and optimizing the remote cache, reducing build times without any manual setup.

This automatic configuration means that you can benefit from faster, more efficient builds right away—without having to tweak cache settings or worry about how to handle build artifacts across multiple machines.

How It Works with Bazel

Harness CI seamlessly integrates with Bazel’s caching system, automatically handling the configuration of remote caches. So, when you run a build, Harness CI makes sure that any unchanged files are skipped, and only the necessary tasks are executed. If there are any changes, only those parts of the project are rebuilt, making the process significantly faster.

For example, when building the bazel-gazelle project, Harness CI ensures that any unchanged files are cached and reused in subsequent builds, reducing the need for unnecessary recompilation. All this happens automatically in the background without requiring any special configuration from the user.

Benefits for Bazel Projects

- Automatic Remote Caching: No manual configuration needed—Harness CI configures the remote cache for you.

- Faster Builds: Builds run faster by reusing previously built outputs and skipping redundant tasks.

- Optimized Resource Usage: Reduces the use of CPU and memory by only running the tasks that are necessary.

Sample Pipeline: Harness CI with Bazel

Benchmarking: Harness CI vs. GitHub Actions with Bazel

We compared the performance of Bazel builds using Harness CI and GitHub Actions, and the results were clear: Harness CI, with its automatic configuration and optimized caching, delivered up to 4x faster builds than GitHub Actions. The automatic configuration of the remote cache made a significant difference, helping Bazel avoid redundant tasks and speeding up the build process.

Results:

- Harness CI with Bazel: Builds were up to 4x faster, thanks to automatic remote cache configuration and build optimization.

- GitHub Actions with Bazel: Slower builds due to less efficient caching and redundant task execution.

Next Steps

Bazel is an excellent tool for large-scale builds, but it becomes even more powerful when combined with Harness CI and Harness Cloud. By automatically configuring remote caches and applying build intelligence, Harness CI ensures that your Bazel builds are as fast and efficient as possible, without requiring any additional configuration from you.

By combining other Harness CI intelligence features like Cache Intelligence, Docker Layer Caching, and Test Intelligence, you can speed up your Bazel projects by up to 8x. With the hyper-optimized build infrastructure, you can experience lightning-fast builds on Harness Cloud at reasonable costs. This seamless integration allows you to spend less time waiting for builds and more time focusing on delivering quality code.

If you're looking to speed up your Bazel builds, give Harness CI a try today and experience the difference!

Recent Blogs

Top Continuous Integration Metrics Every Platform Engineering Leader Should Track

Your developers complain about 20-minute builds while your cloud bill spirals out of control. Pipeline sprawl across teams creates security gaps you can't even see. These aren't separate problems. They're symptoms of a lack of actionable data on what actually drives velocity and cost.

The right CI metrics transform reactive firefighting into proactive optimization. With analytics data from Harness CI, platform engineering leaders can cut build times, control spend, and maintain governance without slowing teams down.

Why Do CI Metrics Matter for Platform Engineering Leaders?

Platform teams who track the right CI metrics can quantify exactly how much developer time they're saving, control cloud spending, and maintain security standards while preserving development velocity. The importance of tracking CI/CD metrics lies in connecting pipeline performance directly to measurable business outcomes.

Reclaim Hours Through Speed Metrics

Build time, queue time, and failure rates directly translate to developer hours saved or lost. Research shows that 78% of developers feel more productive with CI, and most want builds under 10 minutes. Tracking median build duration and 95th percentile outliers can reveal your productivity bottlenecks.

Harness CI delivers builds up to 8X faster than traditional tools, turning this insight into action.

Turn Compute Minutes Into Budget Predictability

Cost per build and compute minutes by pipeline eliminate the guesswork from cloud spending. AWS CodePipeline charges $0.002 per action-execution-minute, making monthly costs straightforward to calculate from your pipeline metrics.

Measuring across teams helps you spot expensive pipelines, optimize resource usage, and justify infrastructure investments with concrete ROI.
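As a rough illustration of that calculation, the sketch below multiplies action-execution minutes by the $0.002 rate quoted above. The pipeline names and minute counts are invented for the example. Real figures would come from your own pipeline metrics, and actual billing may include free tiers or other charges not modeled here.

```python
# Rate quoted in the text for AWS CodePipeline; confirm current pricing before relying on it.
RATE_PER_ACTION_MINUTE = 0.002  # USD

# Hypothetical monthly action-execution minutes per pipeline.
monthly_minutes = {
    "checkout-service": 42_000,
    "search-service": 18_500,
    "mobile-app": 96_000,
}


def monthly_cost(minutes: int, rate: float = RATE_PER_ACTION_MINUTE) -> float:
    """Estimate the monthly cost in USD for a number of billed minutes."""
    return round(minutes * rate, 2)


costs = {name: monthly_cost(minutes) for name, minutes in monthly_minutes.items()}

# List the most expensive pipelines first so they are easy to spot.
for name, cost in sorted(costs.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: ${cost:.2f}")
```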

Measure Artifact Integrity at Scale

SBOM completeness, artifact integrity, and policy pass rates ensure your software supply chain meets security standards without creating development bottlenecks. NIST and related EO 14028 guidance emphasize machine-readable SBOMs and automated hash verification for all artifacts.

However, measurement consistency remains challenging. A recent systematic review found that SBOM tooling variance creates significant detection gaps, with tools reporting between 43,553 and 309,022 vulnerabilities across the same 1,151 SBOMs.

Standardized metrics help you monitor SBOM generation rates and policy enforcement without manual oversight.

10 CI/CD Metrics That Move the Needle

Not all metrics deserve your attention. Platform engineering leaders managing 200+ developers need measurements that reveal where time, money, and reliability break down, and where to fix them first.

- Performance metrics show where developers wait instead of coding. High-performing organizations achieve up to 440 times faster lead times and deploy 46 times more frequently by tracking the right speed indicators.

- Cost and resource indicators expose hidden optimization opportunities. Organizations using intelligent caching can reduce infrastructure costs by up to 76% while maintaining speed, turning pipeline data into budget predictability.

- Quality and governance metrics scale security without slowing delivery. With developers increasingly handling DevOps responsibilities, compliance and reliability measurements keep distributed teams moving fast without sacrificing standards.

So what does this look like in practice? Let's examine the specific metrics.

- Build Duration (p50/p95): Pinpointing Bottlenecks and Outliers

Build duration becomes most valuable when you track both median (p50) and 95th percentile (p95) times rather than simple averages. Research shows that timeout builds have a median duration of 19.7 minutes compared to 3.4 minutes for normal builds. That’s over five times longer.

While p50 reveals your typical developer experience, p95 exposes the worst-case delays that reduce productivity and impact developer flow. These outliers often signal deeper issues like resource constraints, flaky tests, or inefficient build steps that averages would mask. Tracking trends in both percentiles over time helps you catch regressions before they become widespread problems. Build analytics platforms can surface when your p50 increases gradually or when p95 spikes indicate new bottlenecks.

Keep builds under seven minutes to maintain developer engagement. Anything over 15 minutes triggers costly context switching. By monitoring both typical and tail performance, you optimize for consistent, fast feedback loops that keep developers in flow. Intelligent test selection reduces overall build durations by up to 80% by selecting and running only tests affected by the code changes, rather than running all tests.

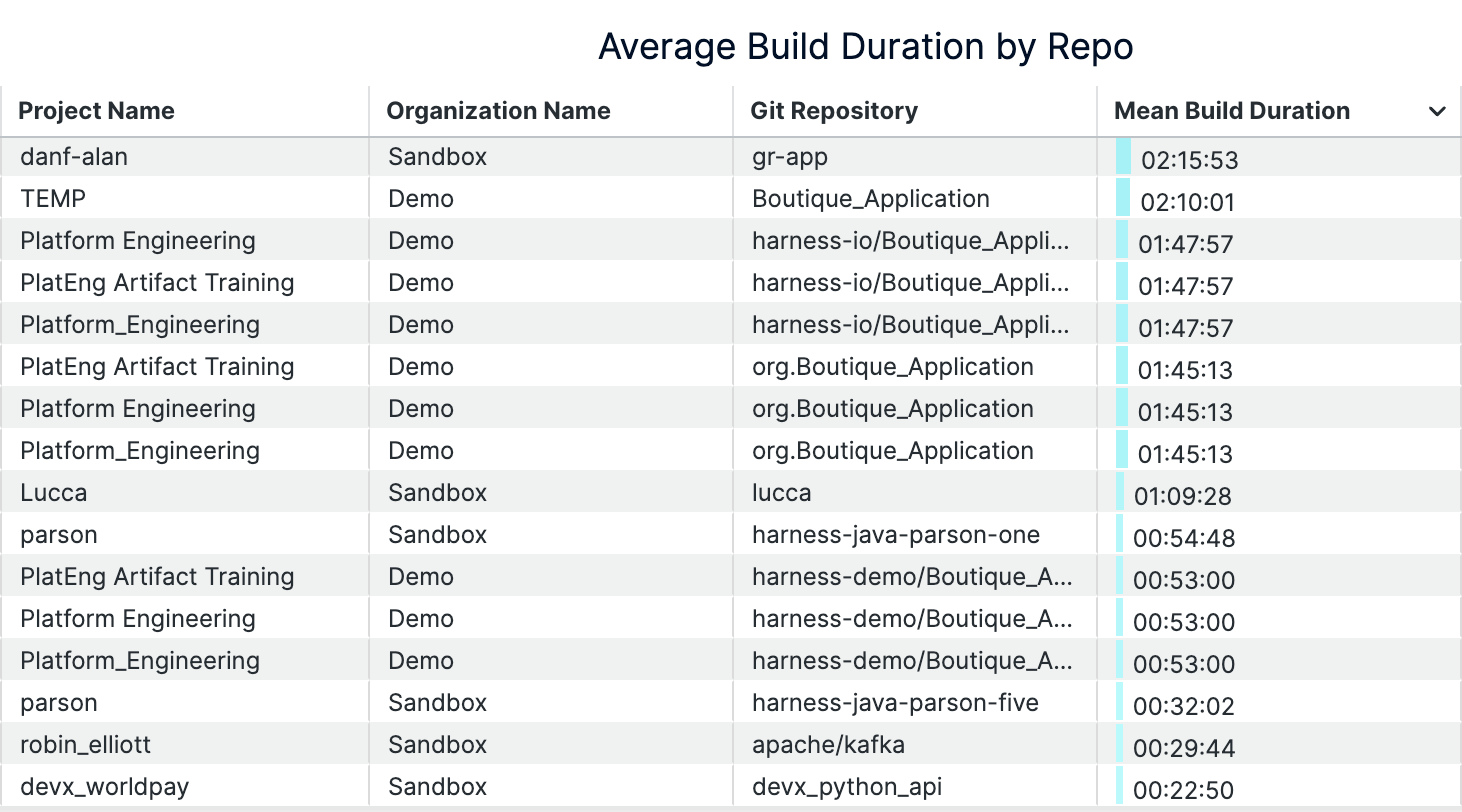

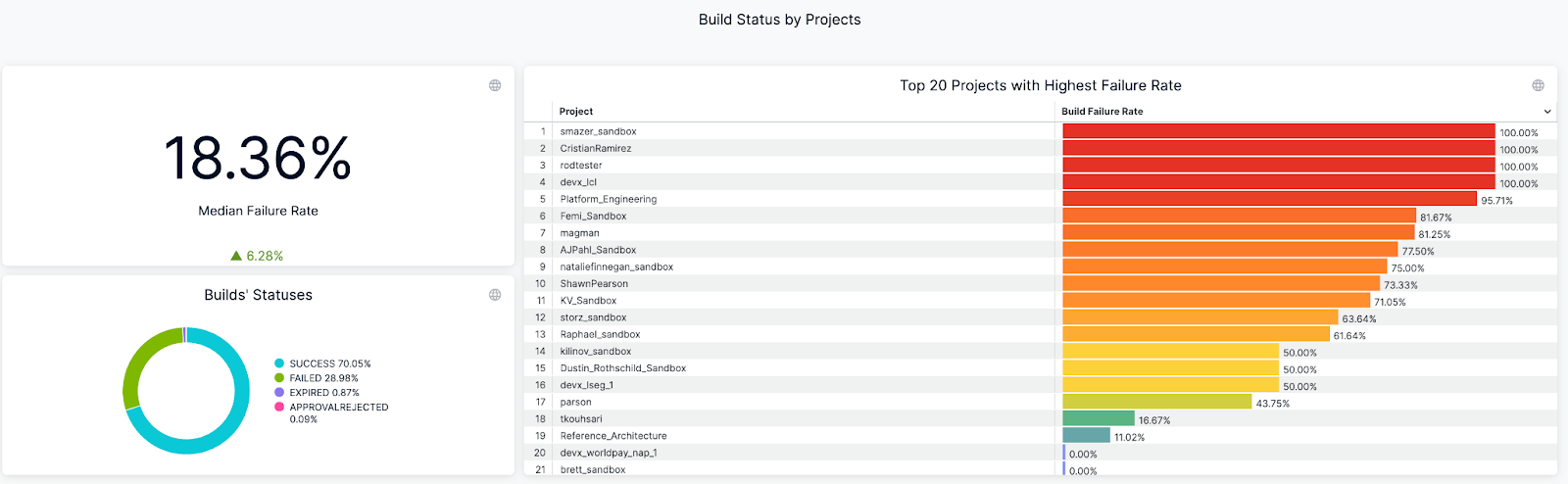

An example build duration dashboard (in Harness)
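To see why percentiles tell a different story than averages, here is a minimal sketch that uses the nearest-rank method on a made-up list of build durations. The sample values are illustrative only, and other percentile definitions (for example, interpolating ones) would give slightly different numbers.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical build durations in minutes; one build ran far longer than the rest.
durations_min = [3.1, 3.4, 3.2, 3.8, 4.0, 3.3, 3.6, 3.5, 19.7, 3.4]

mean = sum(durations_min) / len(durations_min)
p50 = percentile(durations_min, 50)
p95 = percentile(durations_min, 95)

print(f"mean={mean:.1f} min, p50={p50} min, p95={p95} min")
```

In this sample the mean sits well above the typical build and well below the worst one, so it describes neither. Tracking p50 and p95 separately keeps both signals visible.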

- Queue Time: Measuring Infrastructure Constraints

Queue time measures how long builds wait before execution begins. This is a direct indicator of insufficient build capacity. When developers push code, builds shouldn't sit idle while runners or compute resources are tied up. Research shows that heterogeneous infrastructure with mixed processing speeds creates excessive queue times, especially when job routing doesn't account for worker capabilities. Queue time reveals when your infrastructure can't handle developer demand.

Rising queue times signal it's time to scale infrastructure or optimize resource allocation. Per-job waiting time thresholds directly impact throughput and quality outcomes. Platform teams can reduce queue time by moving to Harness Cloud's isolated build machines, implementing intelligent caching, or adding parallel execution capacity. Analytics dashboards track queue time trends across repositories and teams, enabling data-driven infrastructure decisions that keep developers productive.
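Queue time is straightforward to derive if your CI system records when a build was queued and when it started. The sketch below assumes hypothetical `queued_at` and `started_at` fields and an arbitrary two-minute threshold. Substitute whatever your platform actually exports.

```python
from datetime import datetime

# Hypothetical build records; field names are assumptions, not a specific CI API.
build_records = [
    {"id": "b1", "queued_at": "2025-01-06T09:00:00", "started_at": "2025-01-06T09:00:20"},
    {"id": "b2", "queued_at": "2025-01-06T09:05:00", "started_at": "2025-01-06T09:05:45"},
    {"id": "b3", "queued_at": "2025-01-06T09:10:00", "started_at": "2025-01-06T09:15:00"},
]

QUEUE_THRESHOLD_SECONDS = 120  # assumed threshold for flagging a slow start


def queue_seconds(record):
    """Seconds a build waited between being queued and starting execution."""
    queued = datetime.fromisoformat(record["queued_at"])
    started = datetime.fromisoformat(record["started_at"])
    return (started - queued).total_seconds()


waits = [queue_seconds(record) for record in build_records]
slow_builds = [
    record["id"]
    for record, wait in zip(build_records, waits)
    if wait > QUEUE_THRESHOLD_SECONDS
]

print("queue times (s):", waits)
print("builds over threshold:", slow_builds)
```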

- Build Success Rate: Ensuring Pipeline Reliability

Build success rate measures the percentage of builds that complete successfully over time, revealing pipeline health and developer confidence levels. When teams consistently see success rates above 90% on their default branches, they trust their CI system to provide reliable feedback. Frequent failures signal deeper issues — flaky tests that pass and fail randomly, unstable build environments, or misconfigured pipeline steps that break under specific conditions.

Tracking success rate trends by branch, team, or service reveals where to focus improvement efforts. Slicing metrics by repository and pipeline helps you identify whether failures cluster around specific teams using legacy test frameworks or services with complex dependencies. This granular view separates legitimate experimental failures on feature branches from stability problems that undermine developer productivity and delivery confidence.

An example build success/failure rate dashboard (in Harness)
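Slicing success rate by branch can be sketched in a few lines. The branch names and statuses below are invented, and a real implementation would read them from your CI platform's build history.

```python
from collections import defaultdict

# Hypothetical (branch, status) pairs from recent builds.
recent_builds = (
    [("main", "success")] * 9
    + [("main", "failed")]
    + [("feature/login", "success"), ("feature/login", "failed")]
)


def success_rate_by_branch(records):
    """Return the fraction of successful builds for each branch."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for branch, status in records:
        totals[branch] += 1
        if status == "success":
            passed[branch] += 1
    return {branch: passed[branch] / totals[branch] for branch in totals}


rates = success_rate_by_branch(recent_builds)
for branch, rate in rates.items():
    print(f"{branch}: {rate:.0%}")
```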

- Mean Time to Recovery (MTTR): Speeding Up Incident Response

Mean time to recovery measures how fast your team recovers from failed builds and broken pipelines, directly impacting developer productivity. Research shows organizations with mature CI/CD implementations see MTTR improvements of over 50% through automated detection and rollback mechanisms. When builds fail, developers experience context switching costs, feature delivery slows, and team velocity drops. The best-performing teams recover from incidents in under one hour, while others struggle with multi-hour outages that cascade across multiple teams.

Automated alerts and root cause analysis tools slash recovery time by eliminating manual troubleshooting, reducing MTTR from 20 minutes to under 3 minutes for common failures. Harness CI's AI-powered troubleshooting surfaces failure patterns and provides instant remediation suggestions when builds break.
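One simple way to compute MTTR for a pipeline is to measure the time from the first failing build to the next passing one, then average those intervals. The sketch below assumes a chronologically ordered, hypothetical build history for a single branch. Teams define recovery differently, so treat this as one possible definition rather than a standard.

```python
from datetime import datetime, timedelta

# Hypothetical, time-ordered build history for one branch.
history = [
    ("2025-01-06T09:00:00", "success"),
    ("2025-01-06T10:00:00", "failed"),
    ("2025-01-06T10:10:00", "failed"),
    ("2025-01-06T10:30:00", "success"),
    ("2025-01-06T14:00:00", "failed"),
    ("2025-01-06T14:10:00", "success"),
]


def mean_time_to_recovery(events):
    """Average time from the first failure in a streak to the next success."""
    recoveries = []
    broken_since = None
    for timestamp, status in events:
        when = datetime.fromisoformat(timestamp)
        if status == "failed" and broken_since is None:
            broken_since = when  # start of a broken streak
        elif status == "success" and broken_since is not None:
            recoveries.append(when - broken_since)
            broken_since = None
    if not recoveries:
        return None
    return sum(recoveries, timedelta()) / len(recoveries)


print("MTTR:", mean_time_to_recovery(history))
```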

- Flaky Test Rate: Eliminating Developer Frustration

Flaky tests pass or fail non-deterministically on the same code, creating false signals that undermine developer trust in CI results. Research shows 59% of developers experience flaky tests monthly, weekly, or daily, while 47% of restarted failing builds eventually passed. This creates a cycle where developers waste time investigating false failures, rerunning builds, and questioning legitimate test results.

Tracking flaky test rate helps teams identify which tests exhibit unstable pass/fail behavior, enabling targeted stabilization efforts. Harness CI automatically detects problematic tests through failure rate analysis, quarantines flaky tests to prevent false alarms, and provides visibility into which tests exhibit the highest failure rates. This reduces developer context switching and restores confidence in CI feedback loops.
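A basic way to spot flaky tests yourself is to look for tests that both passed and failed on the same commit. The sketch below uses that definition on made-up run data. It is not how Harness CI detects flaky tests, just a minimal illustration of the idea.

```python
from collections import defaultdict

# Hypothetical (commit, test name, result) records, including reruns.
test_runs = [
    ("abc123", "test_login", "pass"),
    ("abc123", "test_login", "fail"),
    ("abc123", "test_cart", "pass"),
    ("abc123", "test_cart", "pass"),
    ("def456", "test_search", "fail"),
    ("def456", "test_search", "fail"),
]


def find_flaky_tests(records):
    """Return tests that produced both outcomes on the same commit."""
    outcomes = defaultdict(set)
    for commit, test, result in records:
        outcomes[(commit, test)].add(result)
    return sorted(
        {test for (_, test), seen in outcomes.items() if seen == {"pass", "fail"}}
    )


flaky = find_flaky_tests(test_runs)
all_tests = {test for _, test, _ in test_runs}
flaky_rate = len(flaky) / len(all_tests)

print("flaky tests:", flaky)
print(f"flaky test rate: {flaky_rate:.0%}")
```

Note that `test_search` fails consistently, so it counts as a real failure rather than a flaky one.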

- Cost Per Build: Controlling CI Infrastructure Spend

Cost per build divides your monthly CI infrastructure spend by the number of successful builds, revealing the true economic impact of your development velocity. CI/CD pipelines consume 15-40% of overall cloud infrastructure budgets, with per-run compute costs ranging from $0.40 to $4.20 depending on application complexity, instance type, region, and duration. This normalized metric helps platform teams compare costs across different services, identify expensive outliers, and justify infrastructure investments with concrete dollar amounts rather than abstract performance gains.

Automated caching and ephemeral infrastructure deliver the biggest cost reductions per build. Intelligent caching automatically stores dependencies and Docker layers. This cuts repeated download and compilation time that drives up compute costs.

Ephemeral build machines eliminate idle resource waste. They spin up fresh instances only when builds are queued, then terminate immediately after completion. Combine these approaches with right-sized compute types to reduce infrastructure costs by 32-43% compared to oversized instances.
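The cost-per-build formula itself is a single division. The sketch below applies it to two hypothetical services so you can compare them on the same scale. The spend and build counts are invented.

```python
def cost_per_build(monthly_spend_usd: float, successful_builds: int) -> float:
    """Monthly CI spend divided by the number of successful builds."""
    if successful_builds <= 0:
        raise ValueError("successful_builds must be positive")
    return monthly_spend_usd / successful_builds


# Hypothetical (monthly spend in USD, successful builds) per service.
service_usage = {
    "payments": (1200.0, 3000),
    "catalog": (900.0, 600),
}

per_build = {
    service: cost_per_build(spend, builds)
    for service, (spend, builds) in service_usage.items()
}

for service, cost in per_build.items():
    print(f"{service}: ${cost:.2f} per build")
```

Here the service with the lower total bill turns out to be the more expensive one per build, which is the kind of outlier this metric is meant to expose.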

- Cache Hit Rate: Accelerating Builds With Smart Caching

Cache hit rate measures what percentage of build tasks can reuse previously cached results instead of rebuilding from scratch. When teams achieve high cache hit rates, they see dramatic build time reductions. Docker builds can drop from five to seven minutes to under 90 seconds with effective layer caching. Smart caching of dependencies like node_modules, Docker layers, and build artifacts creates these improvements by avoiding expensive regeneration of unchanged components.

Harness Build and Cache Intelligence eliminates the manual configuration overhead that traditionally plagues cache management. It handles dependency caching and Docker layer reuse automatically. No complex cache keys or storage management required.

Measure cache effectiveness by comparing clean builds against fully cached runs. Track hit rates over time to justify infrastructure investments and detect performance regressions.
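A minimal sketch of both measurements, assuming you can count cache hits and misses per build and time a clean run against a cached one. The numbers are illustrative, with the build times chosen to fall in the range mentioned above.

```python
def cache_hit_rate(hits: int, misses: int) -> float:
    """Fraction of cacheable tasks served from cache."""
    total = hits + misses
    return hits / total if total else 0.0


def speedup(clean_seconds: float, cached_seconds: float) -> float:
    """How many times faster the cached build is than the clean build."""
    return clean_seconds / cached_seconds


# Hypothetical counts and timings for one pipeline.
hit_rate = cache_hit_rate(hits=850, misses=150)
build_speedup = speedup(clean_seconds=360, cached_seconds=90)

print(f"cache hit rate: {hit_rate:.0%}")
print(f"cached build is {build_speedup:.1f}x faster than a clean build")
```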

- Test Cycle Time: Optimizing Feedback Loops

Test cycle time measures how long it takes to run your complete test suite from start to finish. This directly impacts developer productivity because longer test cycles mean developers wait longer for feedback on their code changes. When test cycles stretch beyond 10-15 minutes, developers often switch context to other tasks, losing focus and momentum. Recent research shows that optimized test selection can accelerate pipelines by 5.6x while maintaining high failure detection rates.

Smart test selection optimizes these feedback loops by running only tests relevant to code changes. Harness CI Test Intelligence can slash test cycle time by up to 80% using AI to identify which tests actually need to run. This eliminates the waste of running thousands of irrelevant tests while preserving confidence in your CI deployments.

- Pipeline Failure Cause Distribution: Prioritizing Remediation

Categorizing pipeline issues into domains like code problems, infrastructure incidents, and dependency conflicts transforms chaotic build logs into actionable insights. Harness CI's AI-powered troubleshooting provides root cause analysis and remediation suggestions for build failures. This helps platform engineers focus remediation efforts on root causes that impact the most builds rather than chasing one-off incidents.

Visualizing issue distribution reveals whether problems are systemic or isolated events. Organizations using aggregated monitoring can distinguish between infrastructure spikes and persistent issues like flaky tests. Harness CI's analytics surface which pipelines and repositories have the highest failure rates. By acting on this data, platform teams can reduce overall pipeline issues by 20-30%.
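Once failures carry a category label, the distribution is a simple tally. The sketch below assumes each failed build has already been tagged with a cause. The category names and the list of failures are hypothetical.

```python
from collections import Counter

# Hypothetical cause label for each failed build in a given period.
failure_causes = [
    "flaky_test",
    "dependency",
    "flaky_test",
    "infrastructure",
    "flaky_test",
    "code",
    "dependency",
]

counts = Counter(failure_causes)
total_failures = sum(counts.values())

# Most frequent causes first, with each cause's share of all failures.
distribution = [
    (cause, count, count / total_failures) for cause, count in counts.most_common()
]

for cause, count, share in distribution:
    print(f"{cause}: {count} failures ({share:.0%})")
```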

- Artifact Integrity Coverage: Securing the Software Supply Chain

Artifact integrity coverage measures the percentage of builds that produce signed, traceable artifacts with complete provenance documentation. This tracks whether each build generates Software Bills of Materials (SBOMs), digital signatures, and documentation proving where artifacts came from. While most organizations sign final software products, fewer than 20% deliver provenance data and only 3% consume SBOMs for dependency management. This makes the metric a leading indicator of supply chain security maturity.

Harness CI automatically generates SBOMs and attestations for every build, ensuring 100% coverage without developer intervention. The platform's SLSA L3 compliance capabilities generate verifiable provenance and sign artifacts using industry-standard frameworks. This eliminates the manual processes and key management challenges that prevent consistent artifact signing across CI pipelines.
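If you want to track this metric independently, a minimal sketch looks like the following. It assumes each build record carries hypothetical boolean flags for SBOM, signature, and provenance, and it includes a basic SHA-256 digest check of the kind used in automated hash verification. It does not perform signature verification, which requires dedicated tooling.

```python
import hashlib

REQUIRED_EVIDENCE = ("sbom", "signed", "provenance")

# Hypothetical build records; the flag names are assumptions for illustration.
artifact_builds = [
    {"id": "b1", "sbom": True, "signed": True, "provenance": True},
    {"id": "b2", "sbom": True, "signed": True, "provenance": False},
    {"id": "b3", "sbom": False, "signed": True, "provenance": False},
    {"id": "b4", "sbom": True, "signed": True, "provenance": True},
]


def integrity_coverage(records):
    """Fraction of builds that have every required piece of evidence."""
    if not records:
        return 0.0
    complete = sum(all(r.get(key) for key in REQUIRED_EVIDENCE) for r in records)
    return complete / len(records)


def sha256_matches(data: bytes, expected_hex: str) -> bool:
    """Check an artifact's bytes against a recorded SHA-256 digest."""
    return hashlib.sha256(data).hexdigest() == expected_hex


coverage = integrity_coverage(artifact_builds)
incomplete = [
    r["id"] for r in artifact_builds if not all(r.get(k) for k in REQUIRED_EVIDENCE)
]

print(f"artifact integrity coverage: {coverage:.0%}")
print("builds missing evidence:", incomplete)
```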

Steps to Track CI/CD Metrics and Turn Insights Into Action

Tracking CI metrics effectively requires moving from raw data to measurable improvements. The most successful platform engineering teams build a systematic approach that transforms metrics into velocity gains, cost reductions, and reliable pipelines.

Step 1: Standardize Pipeline Metadata Across Teams

Tag every pipeline with service name, team identifier, repository, and cost center. This standardization creates the foundation for reliable aggregation across your entire CI infrastructure. Without consistent tags, you can't identify which teams drive the highest costs or longest build times.

Implement naming conventions that support automated analysis. Use structured formats like team-service-environment for pipeline names and standardize branch naming patterns. Centralize this metadata using automated tag enforcement to ensure organization-wide visibility.
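A naming convention is only useful if it can be checked automatically. The sketch below validates the team-service-environment format with a regular expression. It assumes lowercase alphanumeric team and service segments without hyphens and a fixed set of environments (`dev`, `staging`, `prod`). Adjust both assumptions to match your own convention.

```python
import re

# Assumed convention: <team>-<service>-<environment>, lowercase, no hyphens inside segments.
PIPELINE_NAME = re.compile(
    r"^(?P<team>[a-z0-9]+)-(?P<service>[a-z0-9]+)-(?P<env>dev|staging|prod)$"
)


def parse_pipeline_name(name: str):
    """Return the name's parts as a dict, or None if it breaks the convention."""
    match = PIPELINE_NAME.match(name)
    return match.groupdict() if match else None


for candidate in ["payments-checkout-prod", "Checkout_Prod", "search-indexer-qa"]:
    parsed = parse_pipeline_name(candidate)
    status = parsed if parsed else "does not follow team-service-environment"
    print(f"{candidate}: {status}")
```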

Step 2: Automate Metric Collection and Visualization

Modern CI platforms eliminate manual metric tracking overhead. Harness CI provides dashboards that automatically surface build success rates, duration trends, and failure patterns in real-time. Teams can also integrate with monitoring stacks like Prometheus and Grafana for live visualization across multiple tools.

Configure threshold-based alerts for build duration spikes or failure rate increases. This shifts you from fixing issues after they happen to preventing them entirely.

Step 3: Analyze Metrics and Identify Optimization Opportunities

Focus on p95 and p99 percentiles rather than averages to identify critical performance outliers. Drill into failure causes and flaky tests to prioritize fixes with maximum developer impact. Categorize pipeline failures by root cause — environment issues, dependency problems, or test instability — then target the most frequent culprits first.

Benchmark cost per build and cache hit rates to uncover infrastructure savings. Optimized caching and build intelligence can reduce build times by 30-40% while cutting cloud expenses.

Step 4: Operationalize Improvements With Governance and Automation

Standardize CI pipelines using centralized templates and policy enforcement to eliminate pipeline sprawl. Store reusable templates in a central repository and require teams to extend from approved templates. This reduces maintenance overhead while ensuring consistent security scanning and artifact signing.

Establish Service Level Objectives (SLOs) for your most impactful metrics: build duration, queue time, and success rate. Set measurable targets like "95% of builds complete within 10 minutes" to drive accountability. Automate remediation wherever possible — auto-retry for transient failures, automated cache invalidation, and intelligent test selection to skip irrelevant tests.
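An SLO such as "95% of builds complete within 10 minutes" can be checked with a short script. The sketch below uses invented build durations, and the target and limit are the example values from the paragraph above.

```python
SLO_TARGET = 0.95          # share of builds that must meet the limit
DURATION_LIMIT_MIN = 10.0  # build duration limit in minutes


def slo_compliance(durations_min, limit_min=DURATION_LIMIT_MIN):
    """Fraction of builds that finished within the duration limit."""
    if not durations_min:
        raise ValueError("durations_min must not be empty")
    within_limit = sum(1 for duration in durations_min if duration <= limit_min)
    return within_limit / len(durations_min)


# Hypothetical durations: 18 builds at six minutes and two slow outliers.
sample_durations = [6.0] * 18 + [11.5, 14.0]

compliance = slo_compliance(sample_durations)
slo_met = compliance >= SLO_TARGET

print(f"{compliance:.0%} of builds finished within {DURATION_LIMIT_MIN:.0f} minutes")
print("SLO met" if slo_met else "SLO breached: investigate the slowest builds")
```

The same check can feed a threshold-based alert, so a breach is flagged as soon as compliance drops below the target.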

Make Your CI Metrics Work

The difference between successful platform teams and those drowning in dashboards comes down to focus. Elite performers track build duration, queue time, flaky test rates, and cost per build because these metrics directly impact developer productivity and infrastructure spend.

Start with the measurements covered in this guide, establish baselines, and implement governance that prevents pipeline sprawl. Focus on the metrics that reveal bottlenecks, control costs, and maintain reliability — then use that data to optimize continuously.

Ready to transform your CI metrics from vanity to velocity? Experience how Harness CI accelerates builds while cutting infrastructure costs.

Continuous Integration Metrics FAQ

Platform engineering leaders often struggle with knowing which metrics actually move the needle versus creating metric overload. These answers focus on metrics that drive measurable improvements in developer velocity, cost control, and pipeline reliability.

What separates actionable CI metrics from vanity metrics?

Actionable metrics directly connect to developer experience and business outcomes. Build duration affects daily workflow, while deployment frequency impacts feature delivery speed. Vanity metrics look impressive, but don't guide decisions. Focus on measurements that help teams optimize specific bottlenecks rather than general health scores.

Which CI metrics have the biggest impact on developer productivity?

Build duration, queue time, and flaky test rate directly affect how fast developers get feedback. While coverage monitoring dominates current practices, build health and time-to-fix-broken-builds offer the highest productivity gains. Focus on metrics that reduce context switching and waiting.

How do CI metrics help reduce infrastructure costs without sacrificing quality?

Cost per build and cache hit rate reveal optimization opportunities that maintain quality while cutting spend. Intelligent caching and optimized test selection can significantly reduce both build times and infrastructure costs. Running only relevant tests instead of entire suites cuts waste without compromising coverage.

What's the most effective way to start tracking CI metrics across different tools?

Begin with pipeline metadata standardization using consistent tags for service, team, and cost center. Most CI platforms provide basic metrics through built-in dashboards. Start with DORA metrics, then add build-specific measurements as your monitoring matures.

How often should teams review CI metrics and take action?

Daily monitoring of build success rates and queue times enables immediate issue response. Weekly reviews of build duration trends and monthly cost analysis drive strategic improvements. Automated alerts for threshold breaches prevent small problems from becoming productivity killers.

Unit Testing in CI/CD: How to Accelerate Builds Without Sacrificing Quality

Modern unit testing in CI/CD can help teams avoid slow builds by using smart strategies. Choosing the right tests, running them in parallel, and using intelligent caching all help teams get faster feedback while keeping code quality high.

Platforms like Harness CI use AI-powered test intelligence to reduce test cycles by up to 80%, showing what’s possible with the right tools. This guide shares practical ways to speed up builds and improve code quality, from basic ideas to advanced techniques that also lower costs.

What Is a Unit Test?

Knowing what counts as a unit test is key to building software delivery pipelines that work.

The Smallest Testable Component

A unit test looks at a single part of your code, such as a function, class method, or a small group of related components. The main point is to test one behavior at a time. Unlike integration tests, which check how components work together, unit tests focus on the logic of one piece of code in isolation. When a unit test fails, that narrow scope makes it easier to pinpoint what went wrong.

Isolation Drives Speed and Reliability

Unit tests should only check code that you wrote and not things like databases, file systems, or network calls. This separation makes tests quick and dependable. Tests that don't rely on outside services run in milliseconds and give the same results no matter where they are run, like on your laptop or in a CI pipeline.

Foundation for CI/CD Quality Gates

Unit tests are one of the most important parts of continuous integration in CI/CD pipelines because they surface problems right after code changes. Because they are so fast, developers can run them many times a minute while they are coding. This makes feedback loops very tight, which makes it easier to find bugs and stops them from getting to later stages of the pipeline.

Unit Testing Strategies: Designing for Speed and Reliability

Teams that run full test suites on every commit catch problems early by focusing on three things: making tests fast, choosing the right tests, and keeping tests organized. Good unit testing helps developers stay productive and keeps builds running quickly.

Deterministic Tests for Every Commit

Unit tests should finish in seconds, not minutes, so that every commit can be verified quickly. Google's engineering practices say that tests need to be "fast and reliable to give engineers immediate feedback on whether a change has broken expected behavior." To keep tests from being affected by outside factors, use mocks, stubs, and in-memory databases. Keep commit builds under ten minutes, with unit tests as the basis of this quick feedback loop.

Intelligent Test Selection

As projects get bigger, running all tests on every commit can slow teams down. Test Impact Analysis looks at coverage data to figure out which tests really check the code that has been changed. AI-powered test selection chooses the right tests for you, so you don't have to guess or sort them by hand.
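The selection idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any particular platform implements it: the coverage map, the test names, and the file names are all hypothetical.

```python
from typing import Dict, Iterable, Set


def select_impacted_tests(
    coverage_map: Dict[str, Set[str]], changed_files: Iterable[str]
) -> Set[str]:
    """Return the tests whose recorded coverage touches any changed file."""
    changed = set(changed_files)
    return {test for test, files in coverage_map.items() if files & changed}


# Hypothetical coverage data: test name -> source files it executed.
coverage_map = {
    "test_apply_discount": {"orders.py", "discounts.py"},
    "test_login": {"auth.py"},
    "test_invoice_total": {"orders.py", "invoices.py"},
}

selected = select_impacted_tests(coverage_map, ["orders.py"])
print(sorted(selected))
```

A production tool would also need a fallback, such as running the full suite when a changed file has no coverage data at all.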

Parallelization and Caching

To get the most out of your infrastructure, use selective execution and run tests at the same time. Divide test suites into equal-sized groups and run them on different machines simultaneously. Smart caching of dependencies, build files, and test results helps you avoid doing the same work over and over. When used together, these methods cut down on build time a lot while keeping coverage high.
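One way to divide a suite into balanced groups is to assign tests by recorded duration instead of by count. The sketch below uses a greedy longest-first strategy; the test names and timings are made up for illustration, and real platforms may balance shards differently.

```python
import heapq
from typing import Dict, List


def shard_tests(durations: Dict[str, float], shard_count: int) -> List[List[str]]:
    """Greedy longest-first split: each test goes to the currently lightest shard."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    shards: List[List[str]] = [[] for _ in range(shard_count)]
    heap = [(0.0, index) for index in range(shard_count)]  # (total seconds, shard index)
    heapq.heapify(heap)
    ordered = sorted(durations.items(), key=lambda item: item[1], reverse=True)
    for test, seconds in ordered:
        total, index = heapq.heappop(heap)
        shards[index].append(test)
        heapq.heappush(heap, (total + seconds, index))
    return shards


# Hypothetical timings in seconds from a previous run.
durations = {"test_a": 8.0, "test_b": 5.0, "test_c": 4.0, "test_d": 3.0, "test_e": 2.0}
shards = shard_tests(durations, 2)
print(shards)
```

Balancing by duration matters because two shards with the same number of tests can still finish minutes apart if one contains the slow tests.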

Standardized Organization for Scale

Using consistent names, tags, and organization for tests helps teams track performance and keep quality high as they grow. Set clear rules for test types (like unit, integration, or smoke) and use names that show what each test checks. Analytics dashboards can spot flaky tests, slow tests, and common failures. This helps teams improve test suites and keep things running smoothly without slowing down developers.

Unit Test Example: From Code to Assertion

A good unit test uses the Arrange-Act-Assert pattern. For example, you might test a function that calculates order totals with discounts:

def test_apply_discount_to_order_total():
    # Arrange: Set up test data
    order = Order(items=[Item(price=100), Item(price=50)])
    discount = PercentageDiscount(10)

    # Act: Execute the function under test
    final_total = order.apply_discount(discount)

    # Assert: Verify expected outcome
    assert final_total == 135  # 150 - 10% discount

In the Arrange phase, you set up the objects and data you need. In the Act phase, you call the method you want to test. In the Assert phase, you check if the result is what you expected.

Testing Edge Cases

Real-world code needs to handle more than just the usual cases. Your tests should also check edge cases and errors:

import pytest


def test_apply_discount_with_empty_cart_returns_zero():
    order = Order(items=[])
    discount = PercentageDiscount(10)
    assert order.apply_discount(discount) == 0


def test_apply_discount_rejects_negative_percentage():
    order = Order(items=[Item(price=100)])
    # Constructing an invalid discount should fail before it can be applied
    with pytest.raises(ValueError):
        PercentageDiscount(-5)

Notice the naming style: test_apply_discount_rejects_negative_percentage clearly shows what’s being tested and what should happen. If this test fails in your CI pipeline, you’ll know right away what went wrong, without searching through logs.
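The tests in this section rely on Order, Item, and PercentageDiscount, which the article does not define. The following is one minimal, hypothetical implementation that would satisfy them. It is a sketch for trying the tests locally, not the implementation the examples were written against.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Item:
    price: float


class PercentageDiscount:
    def __init__(self, percent: float) -> None:
        # Reject values outside 0..100 so invalid discounts fail at construction time.
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.percent = percent

    def apply(self, amount: float) -> float:
        return amount * (100 - self.percent) / 100


@dataclass
class Order:
    items: List[Item] = field(default_factory=list)

    def apply_discount(self, discount: PercentageDiscount) -> float:
        subtotal = sum(item.price for item in self.items)
        return discount.apply(subtotal)


order = Order(items=[Item(price=100), Item(price=50)])
print(order.apply_discount(PercentageDiscount(10)))
```

Validating the percentage in the constructor is a design choice: it lets the negative-percentage test fail fast without needing an order at all.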

Benefits of Unit Testing: Building Confidence and Saving Time

When teams want faster builds and fewer late-stage bugs, the benefits of unit testing are clear. Good unit tests help speed up development and keep quality high.

- Catch regressions right away: Unit tests run in seconds and find breaking changes before they get to integration or production environments.

- Allow fearless refactoring: A strong set of tests gives you the confidence to change code without adding bugs you didn't expect.

- Cut down on costly debugging: Research shows that unit tests cover a lot of ground and find bugs early when fixing them is cheapest.

- Encourage modular design: Writing code that can be tested naturally leads to better separation of concerns and a cleaner architecture.

When you use smart test execution in modern CI/CD pipelines, these benefits get even bigger.

Disadvantages of Unit Testing: Recognizing the Trade-Offs

Unit testing is valuable, but knowing its limits helps teams choose the right testing strategies. These downsides matter most when you’re trying to make CI/CD pipelines faster and more cost-effective.

- Maintenance overhead grows as automated tests expand, requiring ongoing effort to update brittle or overly granular tests.

- False confidence occurs when high unit test coverage hides integration problems and system-level failures.

- Slow execution times can bottleneck CI pipelines when test collections take hours instead of minutes to complete.

- Resource allocation shifts developer time from feature work to test maintenance and debugging flaky tests.

- Coverage gaps appear in areas like GUI components, external dependencies, and complex state interactions.

Research shows that automatically generated tests can be harder to understand and maintain. Studies also show that statement coverage doesn’t always mean better bug detection.

Industry surveys show that many organizations have trouble with slow test execution and unclear ROI for unit testing. Smart teams solve these problems by choosing the right tests, using smart caching, and working with modern CI platforms that make testing faster and more reliable.

How Do Developers Use Unit Tests in Real Workflows?

Developers use unit tests in three main ways that affect build speed and code quality. These practices turn testing into a tool that catches problems early and saves time on debugging.

Test-Driven Development and Rapid Feedback Loops

In test-driven development (TDD), developers write unit tests before they write the code those tests exercise. This improves the design and cuts down on debugging. According to research, TDD finds 84% of new bugs, while traditional testing only finds 62%. This method gives you feedback right away, so failing tests help you decide what to do next.

Regression Prevention and Bug Validation

Unit tests are like automated guards that catch bugs when code changes. Developers write tests to recreate bugs that have been reported, and then they check that the fixes work by running the tests again after the fixes have been made. Automated tools now generate test cases from issue reports, and they succeed 30.4% of the time at producing tests that fail for the exact problem that was reported. To stop bugs that have already been fixed from coming back, teams run these regression tests in CI pipelines.

Strategic Focus on Business Logic and Public APIs

Good developer testing doesn't look at infrastructure or glue code; it looks at business logic, edge cases, and public interfaces. Testing public methods and properties is best; private details that change often should be left out. Test doubles help developers keep business logic separate from systems outside of their control, which makes tests more reliable. Integration and system tests are better for checking how parts work together, especially when it comes to things like database connections and full workflows.

Unit Testing Best Practices: Maximizing Value, Minimizing Pain

Slow, unreliable tests can slow down CI and hurt productivity, while also raising costs. The following proven strategies help teams check code quickly and cut both build times and cloud expenses.

- Write fast, isolated tests that run in milliseconds and avoid external dependencies like databases or APIs.

- Use descriptive test names that clearly explain the behavior being tested, not implementation details.

- Run only relevant tests using selective execution to cut cycle times by up to 80%.

- Monitor test health with failure analytics to identify flaky or slow tests before they impact productivity.

- Refactor tests regularly alongside production code to prevent technical debt and maintain suite reliability.

Types of Unit Testing: Manual vs. Automated

Choosing between manual and automated unit testing directly affects how fast and reliable your pipeline is.

Manual Unit Testing: Flexibility with Limitations

Manual unit testing means developers write and run tests by hand, usually early in development or when checking tricky edge cases that need human judgment. This works for old systems where automation is hard or when you need to understand complex behavior. But manual testing can’t be repeated easily and doesn’t scale well as projects grow.

Automated Unit Testing: Speed and Consistency at Scale

Automated testing transforms test execution into fast, repeatable processes that integrate seamlessly with modern development workflows. Modern platforms leverage AI-powered optimization to run only relevant tests, cutting cycle times significantly while maintaining comprehensive coverage.

Why High-Velocity Teams Prioritize Automation

Fast-moving teams use automated unit testing to keep up speed and quality. Manual testing is still useful for exploring and handling complex cases, but automation handles the repetitive checks that make deployments reliable and regular.

Difference Between Unit Testing and Other Types of Testing

Knowing the difference between unit, integration, and other test types helps teams build faster and more reliable CI/CD pipelines. Each type has its own purpose and trade-offs in speed, cost, and confidence.

Unit Tests: Fast and Isolated Validation

Unit tests are the foundation of your testing plan. They test single functions, methods, or classes without touching any outside systems, so database or network problems cannot affect them. You can run thousands of unit tests in just a few minutes on a good machine, which gives you the quickest feedback in your pipeline.

Integration Tests: Validating Component Interactions

Integration testing makes sure that the different parts of your system work together. There are two main types of tests: narrow tests that use test doubles to check specific interactions (like testing an API client with a mock service) and broad tests that use real services (like checking your payment flow with real payment processors). Integration tests use real infrastructure to find problems that unit tests might miss.

End-to-End Tests: Complete User Journey Validation

End-to-end tests sit at the top of the testing pyramid. They mimic the full range of user tasks in your app. These tests are the most realistic, but they take a long time to run and are hard to debug. A bug that a unit test finds in seconds may take days to surface in an end-to-end test, and end-to-end suites tend to be brittle.

The Test Pyramid: Balancing Speed and Coverage

The best testing strategy uses a pyramid: many small, fast unit tests at the bottom, some integration tests in the middle, and just a few end-to-end tests at the top.

Workflow of Unit Testing in CI/CD Pipelines

Modern development teams use a unit testing workflow that balances speed and quality. Knowing this process helps teams spot slow spots and find ways to speed up builds while keeping code reliable.

The Standard Development Cycle

Developers write code and run unit tests on their own computers before committing changes. Running tests locally catches bugs early; developers then push the code to version control so that CI pipelines can take over. This step-by-step process helps developers stay productive by finding problems early, when they are easiest to fix.

Automated CI Pipeline Execution

Once code is in the pipeline, automation tools run unit tests on every commit and give feedback right away. If a test fails, the pipeline stops deployment and lets developers know right away. This automation stops bad code from getting into production. Research shows this method can cut critical defects by 40% and speed up deployments.

Accelerating the Workflow

Modern CI platforms use Test Intelligence to only run the tests that are affected by code changes in order to speed up this process. Parallel testing runs test groups in different environments at the same time. Smart caching saves dependencies and build files so you don't have to do the same work over and over. These steps can help keep coverage high while lowering the cost of infrastructure.
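Dependency caching usually hinges on a cache key that changes only when the dependencies change. A minimal sketch of that idea follows, assuming the key is derived from lockfile contents; the prefix and file names are hypothetical, and CI platforms typically provide their own mechanism for this.

```python
import hashlib
from typing import Mapping


def cache_key(prefix: str, lockfiles: Mapping[str, bytes]) -> str:
    """Derive a cache key that changes only when a dependency lockfile changes."""
    digest = hashlib.sha256()
    for path in sorted(lockfiles):  # sorted so mapping order cannot change the key
        digest.update(path.encode("utf-8"))
        digest.update(b"\0")
        digest.update(lockfiles[path])
    return f"{prefix}-{digest.hexdigest()[:16]}"


# Hypothetical lockfile contents.
key = cache_key("deps", {"requirements.txt": b"pytest==8.0.0\n"})
print(key)
```

If the key matches a stored entry, the pipeline can restore the cached dependencies instead of downloading and installing them again.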

Results Analysis and Continuous Improvement

Teams analyze test results through dashboards that track failure rates, execution times, and coverage trends. Analytics platforms surface patterns like flaky tests or slow-running suites that need attention. This data drives decisions about test prioritization, infrastructure scaling, and process improvements. Regular analysis ensures the unit testing approach continues to deliver value as codebases grow and evolve.
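As a rough illustration of how such analytics can surface flaky tests, the sketch below flags any test that both passed and failed on the same commit. The record format and the sample history are assumptions made for this example; real dashboards use richer signals such as retries and timing.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# One record per test execution: (test name, commit SHA, passed?)
Run = Tuple[str, str, bool]


def find_flaky_tests(runs: Iterable[Run]) -> List[str]:
    """Flag tests that produced both a pass and a failure for the same commit."""
    outcomes: Dict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return sorted({test for (test, _), seen in outcomes.items() if len(seen) == 2})


# Hypothetical run history.
history = [
    ("test_checkout", "abc123", True),
    ("test_checkout", "abc123", False),  # same commit, different outcome
    ("test_login", "abc123", True),
    ("test_search", "abc123", False),
    ("test_search", "def456", True),     # different commits: a real fix, not flakiness
]
print(find_flaky_tests(history))
```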

Unit Testing Techniques: Tools for Reliable, Maintainable Tests

Using the right unit testing techniques can turn unreliable tests into a reliable way to speed up development. These proven methods help teams trust their code and keep CI pipelines running smoothly:

- Replace slow external dependencies with controllable test doubles that run consistently.

- Generate hundreds of test cases automatically to find edge cases you'd never write manually.

- Run identical test logic against multiple inputs to expand coverage without extra maintenance.

- Capture complex output snapshots to catch unintended changes in data structures.

- Verify behavior through isolated components that focus tests on your actual business logic.

These methods work together to build test suites that catch real bugs and stay easy to maintain as your codebase grows.

Isolation Through Test Doubles

As we've talked about with CI/CD workflows, the first step to good unit testing is to isolate the code under test. This means you should test your code without using outside systems that might be slow or not work at all. Dependency injection is helpful because it lets you use test doubles instead of real dependencies when you run tests.

It is easier for developers to choose the right test double if they know the differences between them. Fakes are simple working versions, such as in-memory databases. Stubs return set data that can be used to test queries. Mocks keep track of what happens so you can see if commands work as they should.
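The three kinds of test double can be shown side by side. In this sketch the checkout function, the repository, the tax-rate service, and the notifier are all hypothetical; only unittest.mock.Mock comes from the Python standard library.

```python
from unittest.mock import Mock


class InMemoryOrderRepository:
    """Fake: a simple working implementation backed by a dict."""

    def __init__(self):
        self._orders = {}

    def save(self, order_id, total):
        self._orders[order_id] = total

    def get(self, order_id):
        return self._orders[order_id]


class FixedRateStub:
    """Stub: always returns the same canned tax rate."""

    def rate_for(self, region):
        return 0.10


def checkout(order_id, subtotal, region, repository, tax_rates, notifier):
    """Code under test: dependencies are injected so tests can swap them."""
    total = round(subtotal * (1 + tax_rates.rate_for(region)), 2)
    repository.save(order_id, total)
    notifier.send(order_id, total)
    return total


repository = InMemoryOrderRepository()
notifier = Mock()  # Mock: records calls so the test can verify the command was issued
total = checkout("o-1", 100.0, "EU", repository, FixedRateStub(), notifier)

assert total == 110.0
assert repository.get("o-1") == 110.0
notifier.send.assert_called_once_with("o-1", 110.0)
```

Because every dependency is passed in as an argument, the test never opens a network connection or a database, which is what keeps it fast and deterministic.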

This method makes sure that tests are always quick and accurate, no matter when you run them. When teams use good isolation, tests run 60% faster and far fewer flaky failures slow down development.

Expanding Coverage with Smart Generation

Beyond isolation, teams need ways to expand test coverage without extra work. With property-based testing, you state rules that should always hold, and the framework automatically generates hundreds of test cases to check them. This method is great for finding edge cases and limits that manual tests might not catch.
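The idea behind property-based testing can be sketched with nothing but the standard library. The function under test here is hypothetical, and the hand-rolled generator is only an illustration; dedicated libraries such as Hypothesis add smarter input generation and shrinking of failing cases.

```python
import random


def apply_percentage_discount(subtotal: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return subtotal * (100 - percent) / 100


def check_discount_properties(trials: int = 500, seed: int = 0) -> int:
    """Generate random inputs and assert rules that must hold for all of them."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible in CI
    tolerance = 1e-9           # allow for floating-point rounding
    for _ in range(trials):
        subtotal = rng.uniform(0, 10_000)
        percent = rng.uniform(0, 100)
        discounted = apply_percentage_discount(subtotal, percent)
        # Property: a discount never produces a negative total or raises the price.
        assert -tolerance <= discounted <= subtotal + tolerance
    return trials


print(check_discount_properties())
```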

Parameterized testing gives you similar benefits, but you have more control over the inputs. You don't have to write extra code to run the same test with different data. Tools like xUnit's Theory and InlineData make this possible. This helps find more bugs and makes it easier to keep track of your test suite.
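In Python, the same table-driven style is available through pytest's parametrize marker or, with only the standard library, through unittest's subTest. The sketch below uses subTest so that it runs without extra dependencies; the discount function and the case table are hypothetical.

```python
import unittest


def apply_percentage_discount(subtotal, percent):
    """Hypothetical function under test."""
    return subtotal * (100 - percent) / 100


class DiscountTable(unittest.TestCase):
    # Each row: (subtotal, percent, expected total)
    CASES = [
        (150, 10, 135),
        (100, 0, 100),
        (100, 100, 0),
        (0, 25, 0),
    ]

    def test_discount_cases(self):
        for subtotal, percent, expected in self.CASES:
            # subTest reports each failing row separately instead of stopping at the first
            with self.subTest(subtotal=subtotal, percent=percent):
                self.assertEqual(apply_percentage_discount(subtotal, percent), expected)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountTable)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Adding a new scenario is then a one-line change to the table, with no new test logic to maintain.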

Both methods work best when you choose the right tests to run. You only run the tests you need, so platforms that know which tests matter for each code change give you full coverage without slowing things down.

Verifying Complex Outputs

The last step is to test complicated data, such as JSON responses or generated code. Golden tests and snapshot testing make things easier by saving the expected output as reference files, so you don't have to write complicated assertions by hand.

If your code’s output changes, the test fails and shows what’s different. This makes it easy to spot mistakes, and you can approve real changes by updating the snapshot. This method works well for testing APIs, config generators, or any code that creates structured output.
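A minimal snapshot helper might look like the following. The function name, the update flag, and the sample payloads are assumptions for illustration; frameworks such as Jest ship this behavior built in.

```python
import json
import tempfile
from pathlib import Path


def assert_matches_snapshot(actual: dict, snapshot_path: Path, update: bool = False) -> None:
    """Compare output with a stored reference file; record it on first run or on update."""
    rendered = json.dumps(actual, indent=2, sort_keys=True)
    if update or not snapshot_path.exists():
        snapshot_path.write_text(rendered, encoding="utf-8")
        return
    expected = snapshot_path.read_text(encoding="utf-8")
    assert rendered == expected, f"snapshot mismatch for {snapshot_path.name}"


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "order_response.json"
    assert_matches_snapshot({"id": 1, "total": 135}, path)  # first run records the snapshot
    assert_matches_snapshot({"total": 135, "id": 1}, path)  # same data, key order ignored
    try:
        assert_matches_snapshot({"id": 1, "total": 140}, path)  # changed output
        changed_detected = False
    except AssertionError:
        changed_detected = True

print(changed_detected)
```

Serializing with sorted keys keeps the snapshot stable, so the test fails only when the data changes and not when the key order does.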

Teams that use full automated testing frameworks see code coverage go up by 32.8% and catch 74.2% more bugs per build. Golden tests help by making it easier to check complex cases that would otherwise need manual testing.

The main thing is to balance thoroughness with easy maintenance. Golden tests should check real behavior, not details that change often. When you get this balance right, you’ll spend less time fixing bugs and more time building features.

Unit Testing Tools: Frameworks That Power Modern Teams

Picking the right unit testing tools helps your team write tests efficiently, instead of wasting time on flaky tests or slow builds. The best frameworks work well with your language and fit smoothly into your CI/CD process.

- JUnit and TestNG dominate Java environments, with TestNG offering advanced features like parallel execution and seamless pipeline integration.

- pytest leads Python testing environments with powerful fixtures and minimal boilerplate, making it ideal for teams prioritizing developer experience.

- Jest provides zero-configuration testing for JavaScript/TypeScript projects, with built-in mocking and snapshot capabilities.

- RSpec delivers behavior-driven development for Ruby teams, emphasizing readable test specifications.

Modern teams use these frameworks along with CI platforms that offer analytics and automation. This mix of good tools and smart processes turns testing from a bottleneck into a productivity boost.

Transform Your Development Velocity Today

Smart unit testing can turn CI/CD from a bottleneck into an advantage. When tests are fast and reliable, developers spend less time waiting and more time releasing code. Harness Continuous Integration uses Test Intelligence, automated caching, and isolated build environments to speed up feedback without losing quality.

Want to speed up your team? Explore Harness CI and see what's possible.

Harness Dynamic Pipelines: Complete Adaptability, Rock Solid Governance

For a long time, CI/CD has been “configuration as code.” You define a pipeline, commit the YAML, sync it to your CI/CD platform, and run it. That pattern works really well for workflows that are mostly stable.

But what happens when the workflow can’t be stable?

- An automation script needs to assemble a one-off release flow based on inputs.

- An AI agent (or even just a smart service) decides which tests to run for this change, right now.

- The pipeline is shadowing the definition written (and maintained) for a different tool.

In all of those cases, forcing teams to pre-save a pipeline definition, either in the UI or in a repo, turns into a bottleneck.

Today, I want to introduce you to Dynamic Pipelines in Harness.

Dynamic Pipelines let you treat Harness as an execution engine. Instead of having to pre-save pipeline configurations before you can run them, you can generate Harness pipeline YAML on the fly (from a script, an internal developer portal, or your own code) and execute it immediately via API.

Why Dynamic Pipelines?

To be clear, dynamic pipelines are advanced functionality. Pipelines that rewrite themselves on the fly are not typically needed and should generally be avoided. They’re more complex than you want most of the time. But when you need this power, you really need it, and you want it implemented well.

Here are some situations where you may want to consider using dynamic pipelines.

1) True “headless” orchestration

You can build a custom UI, or plug into something like Backstage, to onboard teams and launch workflows. Your portal asks a few questions, generates the corresponding Harness YAML behind the scenes, and sends it to Harness for execution.

Your portal owns the experience. Harness owns the orchestration: execution, logs, state, and lifecycle management. While mature pipeline reuse strategies will suggest using consistent templates for your IDP points, some organizations may use dynamic pipelines for certain classes of applications to generate more flexibility automatically.

2) Frictionless migration (when you can’t rewrite everything on day one)

Moving CI/CD platforms often stalls on the same reality: “we have a lot of pipelines.”

With Dynamic Pipelines, you can build translators that read existing pipeline definitions (for example, Jenkins or Drone configurations), convert them into Harness YAML programmatically, and execute them natively. That enables a more pragmatic migration path, incremental rather than a big-bang rewrite. It even supports parallel execution where both systems are in place for a short period of time.

3) AI and programmatic workflows (without the hype)

We’re entering an era where more of the delivery workflow is decided at runtime, sometimes by policy, sometimes by code, sometimes by AI-assisted systems. The point isn’t “fully autonomous delivery.” It’s intelligent automation with guardrails.

If an external system determines that a specific set of tests or checks is required for a particular change, it can assemble the pipeline YAML dynamically and run it. That’s a practical step toward a more programmatic stage/step generation over time. For that to work, the underlying DevOps platform must support dynamic pipelining. Harness does.

How it works

Dynamic execution is primarily API-driven, and there are two common patterns.

1) Fully dynamic pipeline execution

You execute a pipeline by passing the full YAML payload directly in the API request.

Workflow: your tool generates valid Harness YAML → calls the Dynamic Execution API → Harness runs the pipeline.

Result: the run starts immediately, and the execution history is tagged as dynamically executed.
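The generate-then-execute workflow can be sketched as follows. Everything specific here is an assumption made for illustration: the YAML field names are simplified and are not the Harness pipeline schema, the endpoint URL is a placeholder, and the header names should be checked against the API documentation. The request is built but deliberately not sent.

```python
import json
import urllib.request
from typing import List


def build_pipeline_yaml(name: str, test_commands: List[str]) -> str:
    """Render a pipeline definition as YAML text. Field names are illustrative only."""
    lines = ["pipeline:", f"  name: {name}", "  stages:"]
    for index, command in enumerate(test_commands, start=1):
        lines += [
            "    - stage:",
            f"        name: tests_{index}",
            # A JSON string is also a valid double-quoted YAML scalar
            f"        command: {json.dumps(command)}",
        ]
    return "\n".join(lines) + "\n"


def build_execution_request(
    endpoint_url: str, api_key: str, pipeline_yaml: str
) -> urllib.request.Request:
    """Prepare (but do not send) the HTTP request that would carry the YAML payload."""
    return urllib.request.Request(
        endpoint_url,
        data=pipeline_yaml.encode("utf-8"),
        headers={"Content-Type": "application/yaml", "x-api-key": api_key},
        method="POST",
    )


yaml_text = build_pipeline_yaml("one-off-release", ["pytest tests/unit"])
request = build_execution_request(
    "https://harness.example.invalid/dynamic-execution",  # placeholder, not a real endpoint
    "PLACEHOLDER_API_KEY",
    yaml_text,
)
print(yaml_text)
```

In a real integration, the generating tool would take the endpoint and payload shape from the API documentation and send the request with urllib.request.urlopen or an HTTP client of its choice.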

2) Dynamic stages (pipeline-in-a-pipeline)

You can designate specific stages inside a parent pipeline as Dynamic. At runtime, the parent pipeline fetches or generates a YAML payload and injects it into that stage.

This is useful for hybrid setups:

- the “skeleton” stays stable (approvals, environments, shared steps)

- the “variable” parts (tests, deployments, validations) are decided at runtime

Governance without compromise

A reasonable question is: “If I can inject YAML, can I bypass security?”

Bottom line: no.

Dynamic pipelines are still subject to the same Harness governance controls, including:

- RBAC: users still need the right permissions (edit/execute) to run payloads

- OPA policies: policies are enforced against the generated YAML

- Secrets & connectors: dynamic runs use the same secrets management and connector model

This matters because speed and safety aren’t opposites if you build the right guardrails—a theme that shows up consistently in DORA’s research and in what high-performing teams do in practice.

Getting started

To use Dynamic Pipelines, enable Allow Dynamic Execution for Pipelines at both:

- the Account level, and

- the Pipeline level

Once that’s on, you can start building custom orchestration layers on top of Harness: portals, translators, internal services, or automation that generates pipelines at runtime.

The takeaway here is simple: Dynamic Pipelines unlock new “paved path” and programmatic CI/CD patterns without giving up governance. I’m excited to see what teams build with it.

Ready to try it? Check out the API documentation and run your first dynamic pipeline.

Harness patent for hybrid YAML editor enhances CI/CD workflows

We're thrilled to share some exciting news: Harness has been granted U.S. Patent US20230393818B2 (originally published as US20230393818A1) for our configuration file editor with an intelligent code-based interface and a visual interface.

This patent represents a significant step forward in how engineering teams interact with CI/CD pipelines. It formalizes a new way of managing configurations - one that is both developer-friendly and enterprise-ready - by combining the strengths of code editing with the accessibility of a visual interface.

👉 If you haven’t seen it yet, check out our earlier post on the Harness YAML Editor for context.

The Problem: YAML’s Double-Edged Sword

In modern DevOps, YAML is everywhere. Pipelines, infrastructure-as-code, Kubernetes manifests, you name it. YAML provides flexibility and expressiveness for DevOps pipelines, but it comes with drawbacks:

- Steep learning curve for newcomers.

- High error rate from indentation, nesting, and schema mismatches.

- Limited accessibility for non-developer stakeholders.

- Lack of standardization across services and teams.

The result? Developers spend countless hours fixing misconfigurations, chasing down syntax errors, and debugging pipelines that failed for reasons unrelated to their code.

We knew there had to be a better way.

The Invention: Hybrid YAML Editing

The patent covers a hybrid editor that blends the best of two worlds:

- Code-based editor - for developers who prefer raw YAML, enhanced with autocomplete, inline documentation, and semantic validation.

- Visual editor - a graphical interface that allows users to configure pipelines through icons, dropdowns, and drag-and-drop interactions, while still generating valid YAML under the hood.

What makes this unique is the schema stitching approach:

- Each microservice defines its own configuration schema.

- These are stitched together into a unified schema.

- The editor utilizes this unified schema to provide intelligent suggestions, detect errors, and validate content.

This ensures consistency, prevents invalid configurations, and gives users real-time feedback as they author pipelines.
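To make the stitching idea concrete, here is a deliberately simplified sketch. The service names, field names, and the type-based schema format are all hypothetical; the patented editor's actual schema representation is not described in this post.

```python
from typing import Any, Dict, List

# Hypothetical per-service schemas: field name -> expected Python type.
SERVICE_SCHEMAS: Dict[str, Dict[str, type]] = {
    "ci": {"image": str, "timeout_minutes": int},
    "cd": {"environment": str, "replicas": int},
}


def stitch_schemas(schemas: Dict[str, Dict[str, type]]) -> Dict[str, type]:
    """Combine per-service schemas into one, namespaced by service name."""
    unified: Dict[str, type] = {}
    for service, fields in schemas.items():
        for name, expected_type in fields.items():
            unified[f"{service}.{name}"] = expected_type
    return unified


def validate(config: Dict[str, Any], unified: Dict[str, type]) -> List[str]:
    """Return human-readable errors for unknown fields and wrong types."""
    errors: List[str] = []
    for key, value in config.items():
        if key not in unified:
            errors.append(f"unknown field: {key}")
        elif not isinstance(value, unified[key]):
            errors.append(
                f"{key}: expected {unified[key].__name__}, got {type(value).__name__}"
            )
    return errors


unified = stitch_schemas(SERVICE_SCHEMAS)
errors = validate({"ci.image": "ubuntu:20.04", "cd.replicas": "3", "ci.imagee": "x"}, unified)
print(errors)
```

Because validation runs against the unified schema, a single pass can catch mistakes in fields owned by different services.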

Strategic Advantages

This isn’t just a UX improvement - it’s a strategic shift with broad implications.

1. Faster Onboarding

New developers no longer need to memorize every YAML field or indentation nuance. Autocomplete and inline hints guide them through configuration, while the visual editor provides an easy starting point. A wall of YAML can be hard to understand; a visual pipeline is easy to grok immediately.

2. Reduced Errors and Failures

Schema-based validation catches misconfigurations before they break builds or deployments. Teams save time, avoid unnecessary rollbacks, and maintain higher confidence in their pipelines.

3. Broader Adoption Across Roles

By offering both a code editor and a visual editor, the tool becomes accessible to a wider audience - developers, DevOps engineers, and even less technical stakeholders like product managers or QA leads who need visibility.

How It Works in Practice

Here’s a simple example:

Let’s say your pipeline YAML requires specifying a container image.

- In raw YAML, you’d type:

image: ubuntu:20.04

But what if you accidentally typed ubunty:20.04? In a traditional editor, the pipeline might fail later at runtime.

- In our editor, the schema stitching recognizes valid image registries and tags.

- It suggests ubuntu:20.04 as a valid option.

- If you mistype, it immediately flags the error, before you hit run.
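The flag-and-suggest behavior can be approximated with fuzzy matching from Python's standard library. This is only an analogy for how such a check might work: the list of known images is a hypothetical stand-in for whatever the unified schema provides.

```python
import difflib
from typing import List, Optional, Tuple

KNOWN_IMAGES = ["ubuntu:20.04", "ubuntu:22.04", "alpine:3.19"]  # hypothetical allow-list


def check_image(value: str, known: List[str] = KNOWN_IMAGES) -> Tuple[bool, Optional[str]]:
    """Return (is_valid, suggestion); suggestion is the closest known image, if any."""
    if value in known:
        return True, None
    matches = difflib.get_close_matches(value, known, n=1, cutoff=0.6)
    return False, (matches[0] if matches else None)


print(check_image("ubunty:20.04"))
print(check_image("ubuntu:20.04"))
```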

Now add the visual editor:

- Instead of writing image: ubuntu:20.04, you pick “Ubuntu” from a dropdown of supported images.

- The editor still generates the underlying YAML for transparency, but you never risk invalid syntax.

Multiply this by hundreds of fields, across dozens of microservices, and the value becomes clear.

The Bigger Picture: Why This Matters Now

We’re in a new era of software delivery:

- Microservices mean more schemas, more configurations, more complexity.

- Platform engineering emphasizes self-service tooling for developers who don’t want to learn every underlying detail.

- Security and compliance demand consistency and auditability in configuration.

This patent directly addresses these trends by creating a foundation for intelligent, schema-driven configuration tooling. It allows Harness to:

- Build predictive configuration (e.g., suggesting next steps based on prior patterns).

- Offer real-time linting and autofix for YAML.

- Enable cross-service validation that ensures configurations align across the entire delivery pipeline.

Looking Ahead

With this patent secured, the door is open to innovate further:

- Smarter autocomplete powered by AI.

- Context-aware suggestions based on past pipelines.

- Richer visualizations of complex configurations.

- Automated detection of security misconfigurations.

This isn’t only about YAML. DevOps configuration must be intuitive, resilient, and scalable to enable faster, safer, and more delightful software delivery.

Acknowledgments

This milestone wouldn’t have been possible without the incredible collaboration of our product, engineering, and legal teams. And of course, our customers. The feedback they provided shaped the YAML editor into what it is today.

Closing Thoughts

This patent is more than a legal win. It’s validation of an idea: that developer experience matters just as much as functionality. By bridging the gap between raw power and accessibility, we’re making CI/CD pipelines faster to build, safer to run, and easier to adopt.

At Harness, we invest aggressively in R&D to solve our customers' most complex problems. What truly matters is delivering capabilities that improve the lives of developers and platform teams, enabling them to innovate more quickly.

We're thrilled that this particular innovation, born from solving the real-world pain of YAML, has been formally recognized as a unique invention. It's the perfect example of our commitment to leading the industry and delivering tangible value, not just features.

👉 Curious to see it in action? Explore the Harness YAML Editor and share your feedback.

Seamless Data Sync from Google BigQuery to ClickHouse in an AWS Airgapped Environment

Understanding the Key Components

Airgap Environment

An airgapped environment enforces strict outbound policies, preventing external network communication. This setup enhances security but presents challenges for cross-cloud data synchronization.

Proxy Server

A proxy server is a lightweight, high-performance intermediary facilitating outbound requests from workloads in restricted environments. It acts as a bridge, enabling controlled external communication.

ClickHouse

ClickHouse is an open-source, column-oriented OLAP (Online Analytical Processing) database known for its high-performance analytics capabilities.

This article explores how to seamlessly sync data from BigQuery, Google Cloud’s managed analytics database, to ClickHouse running in an AWS-hosted airgapped Kubernetes cluster using proxy-based networking.

Use Case

Deploying ClickHouse in airgapped environments presents challenges in syncing data across isolated cloud infrastructures such as GCP, Azure, or AWS.

In our setup, ClickHouse is deployed via Helm charts in an AWS Kubernetes cluster, with strict outbound restrictions. The goal is to sync data from a BigQuery table (GCP) to ClickHouse (AWS K8S), adhering to airgap constraints.

Challenges

- Restricted Outbound Network: The ClickHouse cluster cannot directly access Google Cloud services due to airgap policies.

- Data Transfer Between Isolated Clouds: There is no straightforward mechanism for syncing data from GCP to ClickHouse in AWS without external connectivity.

Solution

The solution leverages a corporate proxy server to facilitate communication. By injecting a custom proxy configuration into ClickHouse, we enable HTTP/HTTPS traffic routing through the proxy, allowing controlled outbound access.

Architecture Overview

- BigQuery to GCS Export: Data is first exported from BigQuery to a GCS bucket.

- ClickHouse GCS Integration: ClickHouse fetches the exported data from GCS using its gcs table function.

- Proxy Routing: ClickHouse’s outbound requests are routed through a corporate proxy server.

- Data Ingestion in ClickHouse: The retrieved data is processed and stored within ClickHouse for analytics.
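As a rough illustration of the first two steps, the sketch below builds the two SQL statements involved: a BigQuery `EXPORT DATA` statement that writes a table to GCS, and a ClickHouse `INSERT ... SELECT` that reads it back through the `gcs` table function. The helper names, table names, bucket, Parquet format, and HMAC placeholders are all assumptions made for illustration; the original setup may differ.

```python
def bigquery_export_sql(table: str, gcs_uri: str) -> str:
    """Build a BigQuery EXPORT DATA statement that writes `table` to GCS as Parquet.

    `gcs_uri` is a gs:// URI containing a single wildcard, e.g.
    gs://example-bucket/events/*.parquet (hypothetical bucket).
    """
    return (
        "EXPORT DATA OPTIONS(\n"
        f"  uri='{gcs_uri}',\n"
        "  format='PARQUET',\n"
        "  overwrite=true\n"
        f") AS SELECT * FROM `{table}`"
    )


def clickhouse_ingest_sql(
    target_table: str,
    https_url: str,
    hmac_key: str = "<HMAC_KEY>",
    hmac_secret: str = "<HMAC_SECRET>",
) -> str:
    """Build a ClickHouse statement that loads the exported files via gcs().

    The gcs table function takes an HTTPS URL of the form
    https://storage.googleapis.com/<bucket>/<path>, not a gs:// URI.
    """
    return (
        f"INSERT INTO {target_table}\n"
        f"SELECT * FROM gcs('{https_url}', '{hmac_key}', '{hmac_secret}', 'Parquet')"
    )


if __name__ == "__main__":
    print(bigquery_export_sql("my_project.my_dataset.events",
                              "gs://example-bucket/events/*.parquet"))
    print(clickhouse_ingest_sql(
        "analytics.events",
        "https://storage.googleapis.com/example-bucket/events/*.parquet"))
```

In the airgapped cluster, the HTTPS request issued by `gcs()` is the traffic that has to leave through the proxy.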

Implementation Steps

1. Proxy Configuration

- Created a proxy.xml file defining proxy details for outbound HTTP/HTTPS requests.

- Used a Kubernetes ConfigMap (clickhouse-proxy-config) to store this configuration.

- Mounted the ConfigMap dynamically into the ClickHouse pod.

2. Kubernetes Deployment

- Mounted proxy.xml in the ClickHouse pod at /etc/clickhouse-server/config.d/proxy.xml.

- Adjusted security contexts, allowing privilege escalation (for testing) and running the pod as root to simplify permissions.

3. Testing and Validation

- Deployed a non-stateful ClickHouse instance to iterate quickly.

- Verified that ClickHouse requests were routed through the proxy.

- Observed proxy logs confirming outbound requests were successfully relayed to GCP.

Left window shows query to BigQuery and right window shows proxy logs — the request forwarding through proxy server
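The proxy configuration from step 1 could look roughly like the output of the sketch below, which renders a `proxy.xml` using ClickHouse’s `<proxy>` configuration section and wraps it in a ConfigMap manifest. The proxy address `http://proxy.internal.example:3128` and the helper names are hypothetical; only the ConfigMap name and the file name come from the steps above.

```python
import json
import xml.etree.ElementTree as ET


def render_proxy_xml(proxy_uri: str) -> str:
    """Render a ClickHouse config fragment routing HTTP and HTTPS via one proxy."""
    root = ET.Element("clickhouse")
    proxy = ET.SubElement(root, "proxy")
    for scheme in ("http", "https"):
        # <proxy><http><uri>...</uri></http><https><uri>...</uri></https></proxy>
        ET.SubElement(ET.SubElement(proxy, scheme), "uri").text = proxy_uri
    ET.indent(root, space="    ")  # pretty-printing; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


def proxy_configmap(proxy_uri: str, name: str = "clickhouse-proxy-config") -> dict:
    """Build a ConfigMap manifest whose single key is the proxy.xml file."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": {"proxy.xml": render_proxy_xml(proxy_uri)},
    }


if __name__ == "__main__":
    # Hypothetical proxy address; kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(proxy_configmap("http://proxy.internal.example:3128"), indent=2))
```

Mounting the `proxy.xml` key at `/etc/clickhouse-server/config.d/proxy.xml` lets ClickHouse merge it into its server configuration at startup.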

Outcome

This approach successfully enabled secure communication between ClickHouse (AWS) and BigQuery (GCP) in an airgapped environment. The use of a ConfigMap-based proxy configuration made the setup:

- Scalable: Easily adaptable to different cloud vendors (GCP, Azure, AWS).

- Flexible: Decouples networking configurations from application logic.

- Secure: Ensures outbound traffic is strictly controlled via the proxy.

By leveraging ClickHouse’s extensible configuration system and Kubernetes, we overcame strict network isolation to enable cross-cloud data workflows in constrained environments. This architecture can be extended to other cloud-native workloads requiring external data synchronization in airgapped environments.

AI Tooling in Non-Greenfield Codebases

It’s 2025 and if you work as a software engineer, you probably have access to an AI coding assistant at work. In this blog, I’ll share with you my experience working on a project to change the API endpoints of an existing codebase while making heavy use of an AI code assistant.

There is plenty of research on how AI code assistants affect the day-to-day work of a software engineer, and the picture is clear as mud. Many people have also had their own experience of AI tooling causing massive headaches: ‘AI Slop’ that is difficult to understand and only tangentially related to the original problem fills up their codebase and makes it impossible to understand what the code is (or is supposed to be) doing.

I was part of the Split team that was acquired by Harness in Summer 2024. I had been maintaining an API wrapper for the Split APIs for a few years at this point. This allowed our users to take their existing Python codebases and easily automate management of Split feature flags, users, groups, segments and other administrative entities. We were getting about 12,000–13,000 downloads per month. That is not an enormous amount of traffic, but not bad for someone who’s not officially on a Software Engineering team.

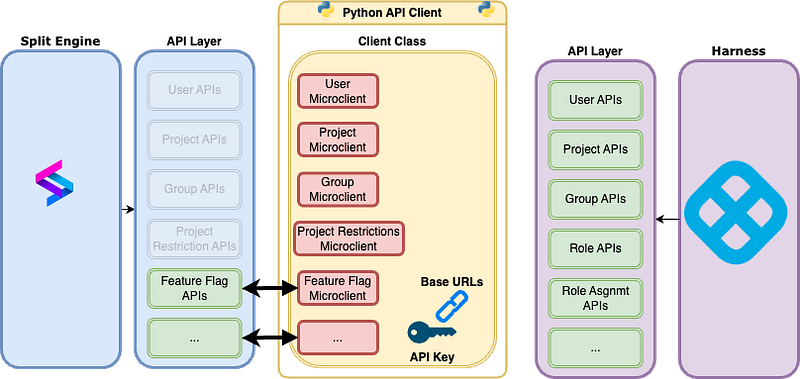

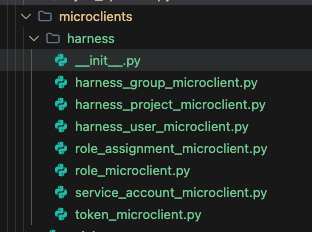

The Python API client is structured so that instantiating it constructs a client class that shares an API key and an optional base URL configuration. Each API is served by what is called a ‘microclient’, which handles the behavior of that endpoint and returns a resource of that type during create, read, and update commands.

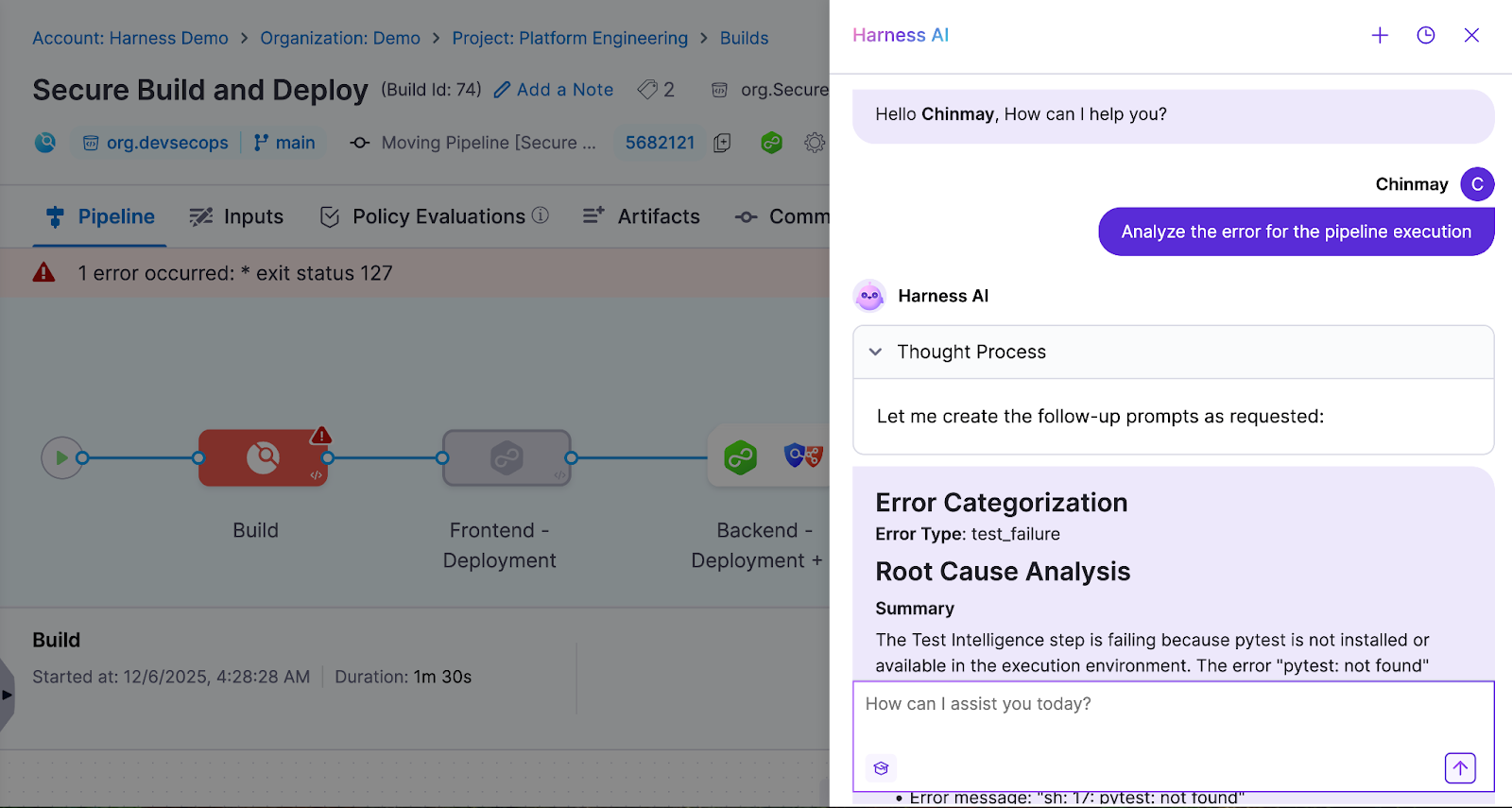

API Client Architecture

Example showing the call sequence of instantiating the API Client and making a list call

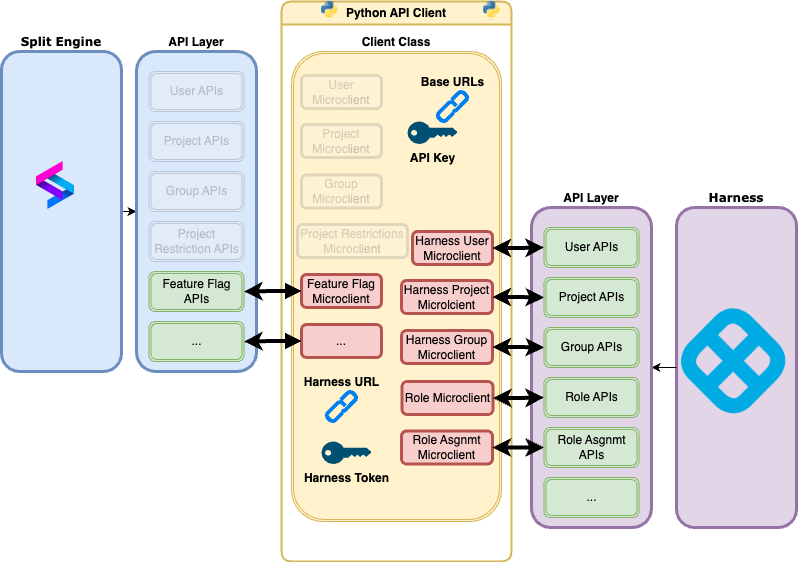

As part of the migration of Split into the Harness platform, Split will be deprecating some of its API endpoints. These endpoints, such as Users and Groups, will be maintained going forward under the banner of the Harness Platform. Split customers are going to be migrated to have their Split app accessed from within Harness, so Users, Groups, and Split Projects will be managed in Harness, meaning that Harness endpoints will have to be used.

So how do I mate the API client with the proper endpoints for customers after the Harness migration?

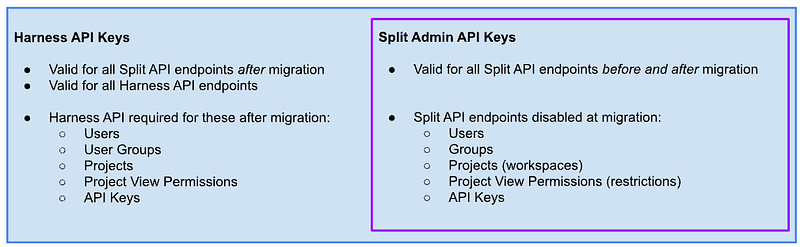

With respect to API keys, the Split API keys will continue to work for existing endpoints, and they will still work after migration to Harness. Harness API keys will work for everything and will be required for Harness endpoints post-migration.

Now the fun begins

I had some great help from the former Split (now Harness FME) PMM and Engineering teams who took on the task of actually feeding me the relevant APIs from the Harness API Docs. This gave me a good starting point to understand what I might need to do.

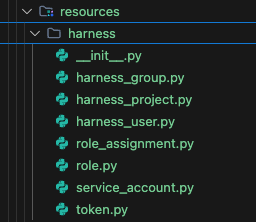

Essentially, to have the same control over Harness’s Role-Based Access Control (RBAC) and project information as we did in Split, I’d need to use the following Harness APIs:

- Users

- Groups

- Projects

- Invites (to invite users)

- Role Assignments

- Roles

- Resource Groups

- Tokens

- API Keys

- Service Accounts

Not all Split accounts will be migrating at once to the Harness platform — this will be over a period of a few months. This means that we will have to support both API access styles for at least some period of time. I also know that I still have my normal role at Harness supporting onboarding customers using our FME SDKs and don’t have a lot of free time to re-write an API client from scratch, so I got to thinking about what my options were.

Mode Select

I really wanted to make the API transition as seamless as possible for my API client users. So the first thing I figured was that I would need a way to determine if the API key being used was from a migrated account. Unfortunately, after discussing with some folks there simply wasn’t going to be time for building out an endpoint like this for what will be, at most, a period of a few months. As such my first design decision was how to determine which ‘mode’ the Client was going to use, the existing mode with access to the older Split API endpoints, or the ‘new’ mode with those endpoints deprecated and a collection of new Harness endpoints available.

I decided this was going to be done with a variable on instantiation. Since the API client’s constructor signature already included an object as its argument, this I thought would be pretty straightforward.

Eg:

Would then have an additional option for:
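The original snippets are not reproduced here. As a minimal sketch of the idea, assuming a constructor that takes a configuration dictionary, the mode switch could be a single boolean read at instantiation. The class name, the `apikey` and `base_url` keys, and the `microclient` helper are hypothetical stand-ins, not the library’s actual API.

```python
# Split endpoints that stop being available once an account is migrated
# (a partial, illustrative list).
DEPRECATED_IN_HARNESS_MODE = {"users", "groups", "restrictions"}


class ApiClient:
    """Toy stand-in for the API client; it only demonstrates mode selection."""

    def __init__(self, config: dict):
        self.apikey = config["apikey"]
        self.base_url = config.get("base_url")  # optional override
        # Default to the existing Split behaviour so current users are unaffected.
        self.harness_mode = bool(config.get("harness_mode", False))

    def microclient(self, name: str) -> str:
        """Return a placeholder for the microclient serving endpoint `name`."""
        if self.harness_mode and name in DEPRECATED_IN_HARNESS_MODE:
            raise AttributeError(
                f"'{name}' is deprecated in harness_mode; use the Harness endpoint instead"
            )
        mode = "harness" if self.harness_mode else "split"
        return f"{name} microclient ({mode} mode)"


if __name__ == "__main__":
    legacy = ApiClient({"apikey": "SPLIT_ADMIN_KEY"})
    migrated = ApiClient({"apikey": "HARNESS_TOKEN", "harness_mode": True})
    print(legacy.microclient("users"))
    print(migrated.microclient("segments"))
```

Defaulting the flag to `False` keeps existing callers working unchanged, while migrated accounts opt in explicitly.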

Now — I was thinking and questioning how I would implement this.

Recently, Harness Employees were given access to Windsurf IDE with Claude AI. I figured since I could use the help that I would sign on and that this would help me build out my code changes faster.

I had used Claude, ChatGPT, DeepSeek, and various other AI assistants through their websites for small scale problem solving (eg — fill in this function, help me with this error, write me a shell script that does XYZ) but never actually worked with something integrated into the IDE.

So I fired up Windsurf and put in a pretty ambitious prompt to see what it was capable of doing.

Split has been acquired by harness and now the harness apis will be used for some of these endpoints. I will need to implement a seperate ‘harness_mode’ boolean that is passed in at the api constructor. In harness mode there will be new endpoints available and the existing split endpoints for users, groups, restrictions, all endpoints except ‘get’ for workspaces, and all endpoints for apikeys when the type == ‘admin’ will be deprecated. I will still need to have the apikey endpoint available for type==’client_side’ and ‘server_side’ keys.

It then whirred to work and, quite frankly, I was really impressed with the results. However, it didn’t quite understand what I wanted. The Harness endpoints are completely different in structure and methods (and in base URL). The result was that the microclients got Harness methods and Harness placeholders in the URLs, but this wasn’t going to work. I should have told the AI that I really wanted different microclients and different resources for Harness. I reverted the changes and went back to the drawing board (but I’ll get back to this later).

OpenAPI

My second idea was to attempt to generate some API code from the Harness API docs themselves. Harness’s API docs have an OpenAPI specification available, and there are tools that can generate API clients from these specifications. However, it became clear to me that the tooling for generating clients from OpenAPI specifications isn’t easily filterable. Harness has nearly 300 API endpoints for the rich collection of modules and features that it has, and its nearly 10 MB OpenAPI spec was too big: it would actually crash the OpenAPI generator. I spent some time working on code to strip out and filter the OpenAPI spec JSON down to just the endpoints I needed.

Here, the AI tooling was also helpful. I asked:

how can I filter a openapi json by either tag or by endpoint resource path?

can this also remove components that aren’t part of the endpoints with tags

could you also have it remove unused tags

But the problem ended up being that the OpenAPI spec is actually more complex than I initially thought, including references, parameters and dependencies for objects. So it wasn’t going to be as simple as passing in the endpoints I needed and sending them on to the API generator.
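To make the difficulty concrete, here is a minimal sketch of tag-based filtering that also follows `$ref` pointers transitively, so that kept operations do not end up pointing at deleted components. It is not the script referred to in this post, and it assumes an OpenAPI 3 document already loaded as a Python dict with only local `#/components/...` references.

```python
import copy

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "options", "head", "trace"}


def _collect_refs(node, found: set) -> None:
    """Recursively gather local '#/components/...' references under `node`."""
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "$ref" and isinstance(value, str) and value.startswith("#/components/"):
                found.add(value)
            else:
                _collect_refs(value, found)
    elif isinstance(node, list):
        for item in node:
            _collect_refs(item, found)


def filter_spec_by_tags(spec: dict, keep_tags) -> dict:
    """Return a copy of `spec` with only operations carrying one of `keep_tags`."""
    keep_tags = set(keep_tags)
    out = {k: copy.deepcopy(v) for k, v in spec.items()
           if k not in ("paths", "components", "tags")}

    out["paths"] = {}
    for path, item in spec.get("paths", {}).items():
        kept = {m: copy.deepcopy(op) for m, op in item.items()
                if m in HTTP_METHODS and keep_tags & set(op.get("tags", []))}
        if kept:
            # Preserve path-level fields such as shared parameters.
            kept.update({k: copy.deepcopy(v) for k, v in item.items()
                         if k not in HTTP_METHODS})
            out["paths"][path] = kept

    # Walk references transitively: a kept schema may reference other schemas.
    all_components = spec.get("components", {})
    pending, seen, components = set(), set(), {}
    _collect_refs(out["paths"], pending)
    while pending:
        ref = pending.pop()
        if ref in seen:
            continue
        seen.add(ref)
        _, _, section, name = ref.split("/", 3)
        target = all_components.get(section, {}).get(name)
        if target is not None:
            components.setdefault(section, {})[name] = copy.deepcopy(target)
            _collect_refs(target, pending)

    # Security schemes are referenced by name rather than $ref, so keep them all.
    if "securitySchemes" in all_components:
        components["securitySchemes"] = copy.deepcopy(all_components["securitySchemes"])

    out["components"] = components
    out["tags"] = [t for t in spec.get("tags", []) if t.get("name") in keep_tags]
    return out
```

Even this version ignores details such as escaped characters in reference names and references to external files, which hints at why a quick filter tends to need several rounds of fixes.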

I kept attempting to run the filter script generated and then proceeded to run the generator. I did a few loops of attempting to run the script, getting an error, and sending it back to the AI assistant.

By the end I did seem to get a script that could do the filtering, but the spec filtered down to just what I needed was still too big for the OpenAPI generator. You can see that code here.

As a test, I started by generating code for just one endpoint (harness_user) and reviewing the generated Python code. One thing that was clear after reviewing the file was that it was structured wildly differently from the API client I already have. There were also dozens of warnings inside the generated code not to make any changes or updates to it. Moreover, I was not familiar with the codebase.

Either manually or attempting via an AI assistant, stitching these together was not going to be easy, so I stashed this idea as well.

As an aside, it is worth noting that an AI code assistant can’t help you when you don’t know how to specify exactly what you want and what your outcome is going to look like. I needed a better understanding of what I was trying to accomplish.

Further Design Review

One of the things I had in my mind was that I really wanted to make the transition as seamless as possible. However, once my idea of the automated mode select was dashed, I still thought I could, through heroic effort, automate the creation of the existing Split python classes via the Harness APIs.

I had a deep dive into this idea and really came back with the result that it would simply be too burdensome to implement and not really give the users what they need.

For example — to create an API Key in Split, we just had one API endpoint with a json body:

However, Harness has a very rich RBAC model and with multiple modules has a far more flexible model of Service Accounts, API Keys, and individual tokens. Harness’s model allows for easy key rotation and allows the API key to really be more of a container for the actual token string that is used for authentication in the APIs.

Shown more simply in the diagrams below:

Observe the difference in structure of API Key authentication and generation

Now the Python microclient for generating API keys for Split currently makes calls structured like so:

To replicate this would mean that I would have to have the client in ‘Harness Mode’ create a Service Account, API Key, and Token all at the same time, and automatically map the roles to a created service account, being seamless to the user.

This is a tall task, and being pragmatic, I don’t see that as a real sustainable solution for developers using my library as they get more familiar with the Harness platform. They’re going to want to use Harness objects natively.

This is especially true with the delete method of the current client,

The Harness method for deleting a token takes the token identifier, not the token itself, making this signature impossible to reproduce with Harness’s APIs. And even if I could delete a token, would I want to delete the token and keep the service account and API key? Would I need to replicate the role assignments and roles that Split has? Much of this is undefined.
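The mismatch can be sketched with a toy in-memory model of the hierarchy described above: a service account holds API keys, and each API key holds tokens addressed by identifier. This is an illustrative model only, not the Harness API. All class and function names are hypothetical, and the choice to hand back the secret once without storing it is an assumption of the sketch.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class ApiKey:
    identifier: str
    token_ids: set = field(default_factory=set)  # identifiers only, never secrets


@dataclass
class ServiceAccount:
    identifier: str
    api_keys: dict = field(default_factory=dict)  # API key identifier -> ApiKey


def create_token(account: ServiceAccount, api_key_id: str, token_id: str) -> str:
    """Create the API key container if needed, register a token, return its secret."""
    api_key = account.api_keys.setdefault(api_key_id, ApiKey(api_key_id))
    api_key.token_ids.add(token_id)
    return secrets.token_urlsafe(32)  # handed to the caller once, not stored


def delete_token(account: ServiceAccount, api_key_id: str, token_id: str) -> None:
    """Delete by identifier; the secret string alone cannot locate the token."""
    account.api_keys[api_key_id].token_ids.remove(token_id)


if __name__ == "__main__":
    account = ServiceAccount("ci_bot")
    secret = create_token(account, "deploy_key", "token_2025")
    delete_token(account, "deploy_key", "token_2025")
    # The API key and service account outlive the token they contained.
    print(account.api_keys["deploy_key"].token_ids)
```

A Split-style delete that receives only the secret string has nothing to look up in this model, and removing the token still leaves the question of what to do with the surrounding API key and service account.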

Wanting to keep things as straightforward and maintainable as possible, along with trying to move to understanding the world in Harness’s API Schema, I had a design decision in my head.