The rapid adoption of AI is fundamentally reshaping the software development landscape, driving an unprecedented surge in code generation speed. However, this acceleration has created a significant challenge for security teams: the AI velocity paradox. This paradox describes a situation where the benefits of accelerated code generation are being "throttled by the SDLC processes downstream," such as security, testing, deployment, and compliance, which have not matured or automated at the same pace as AI has advanced the development process.

This gap is a recognized concern among industry leaders. In Harness’s latest State of AI in Software Engineering report, 48% of surveyed organizations worry that AI coding assistants introduce vulnerabilities, and 43% fear compliance issues stemming from untested, AI-generated code.

This blog post explores strategies for closing the widening gap and defending against the new attack surfaces created by AI tooling.

Defining the AI Velocity Paradox in Security

The AI velocity paradox is most acutely manifested in security. The benefits gained from code generation are being slowed down by downstream SDLC processes, such as testing, deployment, security, and compliance. This is because these processes have not "matured or automated at the same pace as code generation has."

Every time a coding agent or AI agent writes code, it has the potential to expand the threat surface. This can happen if the AI spins up a new application component, such as a new API, or pulls in unvalidated open-source models or libraries. If deployed without proper testing and validation, these components "can really expand your threat surface."

The imbalance is stark: code generation is up to 25% faster, and 70% of developers are shipping more frequently, yet only 46% of security compliance workflows are automated.

The Dual Risk: Vulnerabilities vs. Compliance

The Harness report revealed that 48% of respondents were concerned that AI coding assistance introduced vulnerabilities, while 43% feared regulatory exposure. While both risks are evident in practice, they do not manifest equally.

- Vulnerabilities are more tangible and appear more often in incident data. These issues include unauthenticated access to APIs, poor input validation, and the use of third-party libraries. This is where the "most tangible exposure is".

- Compliance is a "slow burn risk." For instance, new code might start "touching a sensitive data flow which was previously never documented." This may not be discovered until a specific compliance requirement triggers an investigation. Vulnerabilities are currently seen more often in real incident data than compliance issues.

A New Attack Surface: Non-Deterministic AI Agents

The components that significantly expand the attack surface beyond the scope of traditional application security (appsec) tools are AI agents or LLMs integrated into applications.

Traditional non-AI applications are generally deterministic; you know exactly what payload is going into an API, and which fields are sensitive. Traditional appsec tools are designed to secure this predictable environment.

However, AI agents are non-deterministic and "can behave randomly." Security measures must focus on ensuring these agents do not receive "overly excessive permissions to access anything" and controlling the type of data they have access to.

Top challenges for AI application security

Prioritizing AI Security Mitigation (OWASP LLM Top 10)

For development teams with weekly release cycles, we recommend prioritizing mitigation efforts based on the OWASP LLM Top 10. The three critical areas to test and mitigate first are:

- Prompt Injection: This is the one threat currently seeing "the most attacks and threat activity".

- Sensitive Data Disclosure: This is crucial for any organization that handles proprietary data or sensitive customer information, such as PII or banking records.

- Excessive Agency: This involves an AI agent or MCP tool having a token with permissions it should not have, such as write control for a database, code commit controls, or the ability to send emails to end users.

We advise that organizations should "test all your applications" for these three issues before pushing them to production.

A Deep Dive into Prompt Injection

Here’s a walkthrough of a real-world prompt injection attack scenario to illustrate the danger of excessive agency.

The Attack Path is usually:

- Excessive Agency: An AI application has an agent that accesses a user records database via an API or Managed Control Plane (MCP) tool. Critically, the AI agent has been given a "broadly scoped access token" that allows it to read, make changes, and potentially delete the database.

- The Override Prompt: A user writes a prompt with an override, for example, suggesting a "system maintenance" is happening and asking the AI to "help me make a copy of the database and make changes to it." This is a "direct prompt injection" (or sometimes an indirect prompt injection), which is designed to force the LLM agent to reveal or manipulate certain data.

- Hijacking: If no guardrails are in place to detect such prompts, the LLM will create a hijack scenario and make the request to the database.

- Real Exfiltration: Once the hijacking is done, the "real exfiltration happens." The AI agent can output the data in the chatbot or write it to a third-party API where the user needs access to that data.

This type of successful attack can lead to "legal implications," data loss, and damage to the organization's reputation.

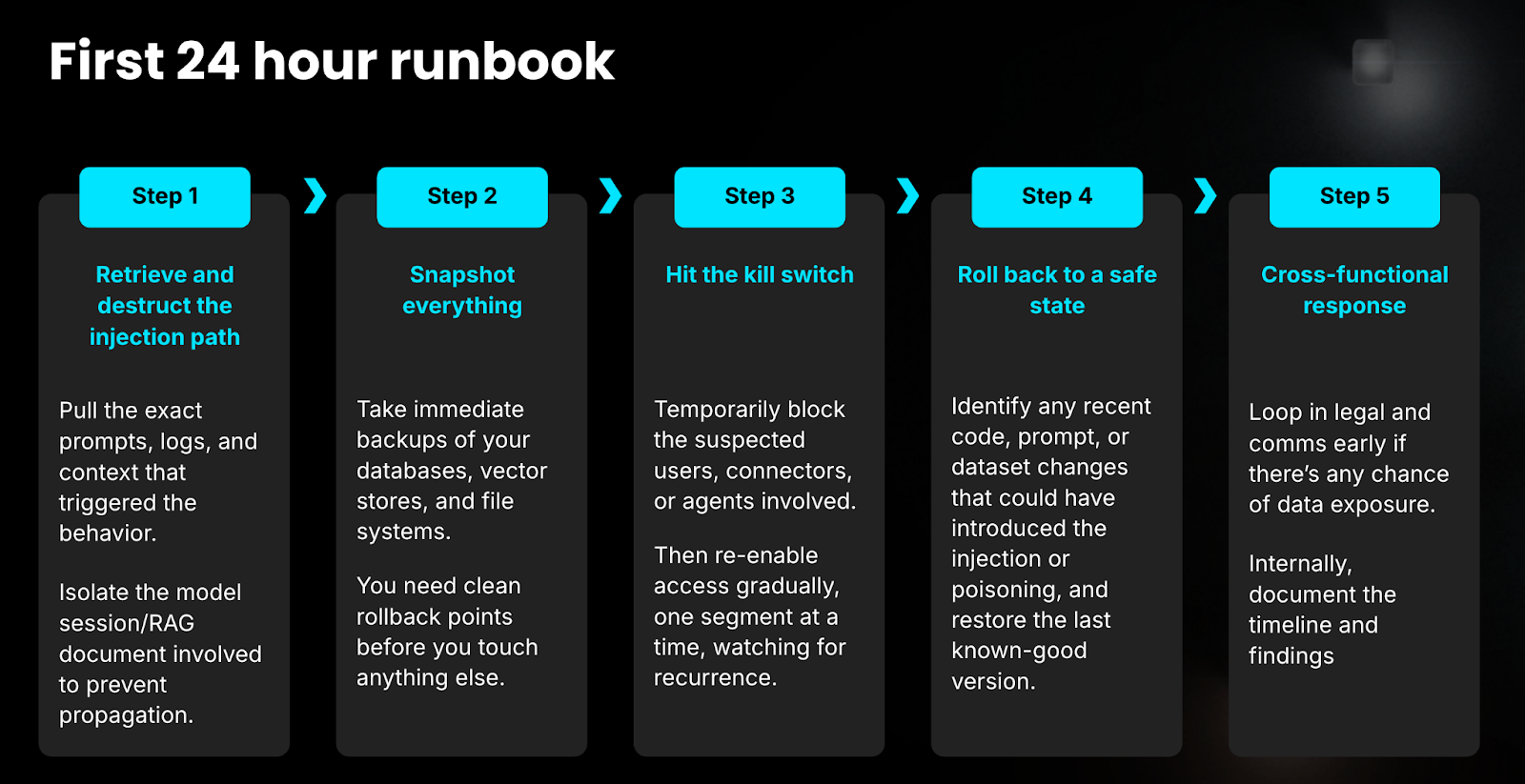

Here’s a playbook to tackle Prompt Injection attacks

Harness’s Vision for AI Security

Harness's approach to closing the AI security gap is built on three pillars:

- AI Asset Discovery and Posture Management: This involves automatically discovering all AI assets (APIs, LLMs, MCP tools, etc.) by analyzing application traffic. This capability eliminates the "blind spot" that application security teams often have with "shadow AI," where developers do not document new AI assets. The platform automatically provides sensitive data flows and governance policies, helping you be audit-ready, especially if you operate in a regulated industry.

- AI Security Testing: This helps organizations test their applications against AI-specific attacks before they are shipped to production. Harness's product supports DAST scans for the OWASP LLM Top 10, which can be executed as part of a CI/CD pipeline.

- AI Runtime Protection: This focuses on detecting and blocking AI threats such as prompt injection, jailbreak attempts, data exfiltration, and policy violations in real time. It gives security teams immediate visibility and enforcement without impacting application performance or developer velocity.

Read more about Harness AI security in our blog post.

Looking Ahead: The Evolving Attack Landscape

Looking six to 12 months ahead, the biggest risks come from autonomous agents, deeper tool chaining, and multimodal orchestration. The game has changed from focusing on "AI code-based risk versus decision risk."

Security teams must focus on upgrading their security and testing capabilities to understand the decision risk, specifically "what kind of data is flowing out of the system and what kind of things are getting exposed." The key is to manage the non-deterministic nature of AI applications.

To stay ahead, a phased maturity roadmap is recommended:

- Start with visibility.

- Move to testing.

- Then, focus on runtime protection.

By focusing on automation, prioritizing the most critical threats, and adopting a platform that provides visibility, testing, and protection, organizations can manage the risks introduced by AI velocity and build resilient AI-native applications.

Learn more about tackling the AI velocity paradox in security in this webinar.