For the last decade, the holy grail of DevOps has been Automation. We spent years writing Bash scripts to move files, Terraform to provision servers, and Ansible to configure them. And for a while, it felt like magic.

But any seasoned engineer knows the dirty secret of automation: it is brittle.

Automation is deterministic. It only does exactly what you tell it to do. It has no brain. It cannot reason. If an error occurs that isn't explicitly handled in your try/catch block, the script fails, the pipeline breaks, and a human gets paged at 3 AM to fix a problem that a machine should have understood.

We are now standing on the precipice of a new era. We are graduating from Generative AI, which writes the script for you, to Agentic AI, which runs the script, sees the error, analyzes the logs, debugs the root cause, and applies the fix.

As a Solution Architect, I see this not merely as a tool upgrade, but as a fundamental shift in our operational philosophy. We are transitioning from managing servers to managing Cognitive Architectures.

Beyond the Chatbot: The Anatomy of an Agent

To understand where we are going, we have to look beyond the "Chat with PDF" use cases. A true DevOps Agent isn't a browser window you type into; it is an autonomous control loop hardwired directly into your infrastructure.

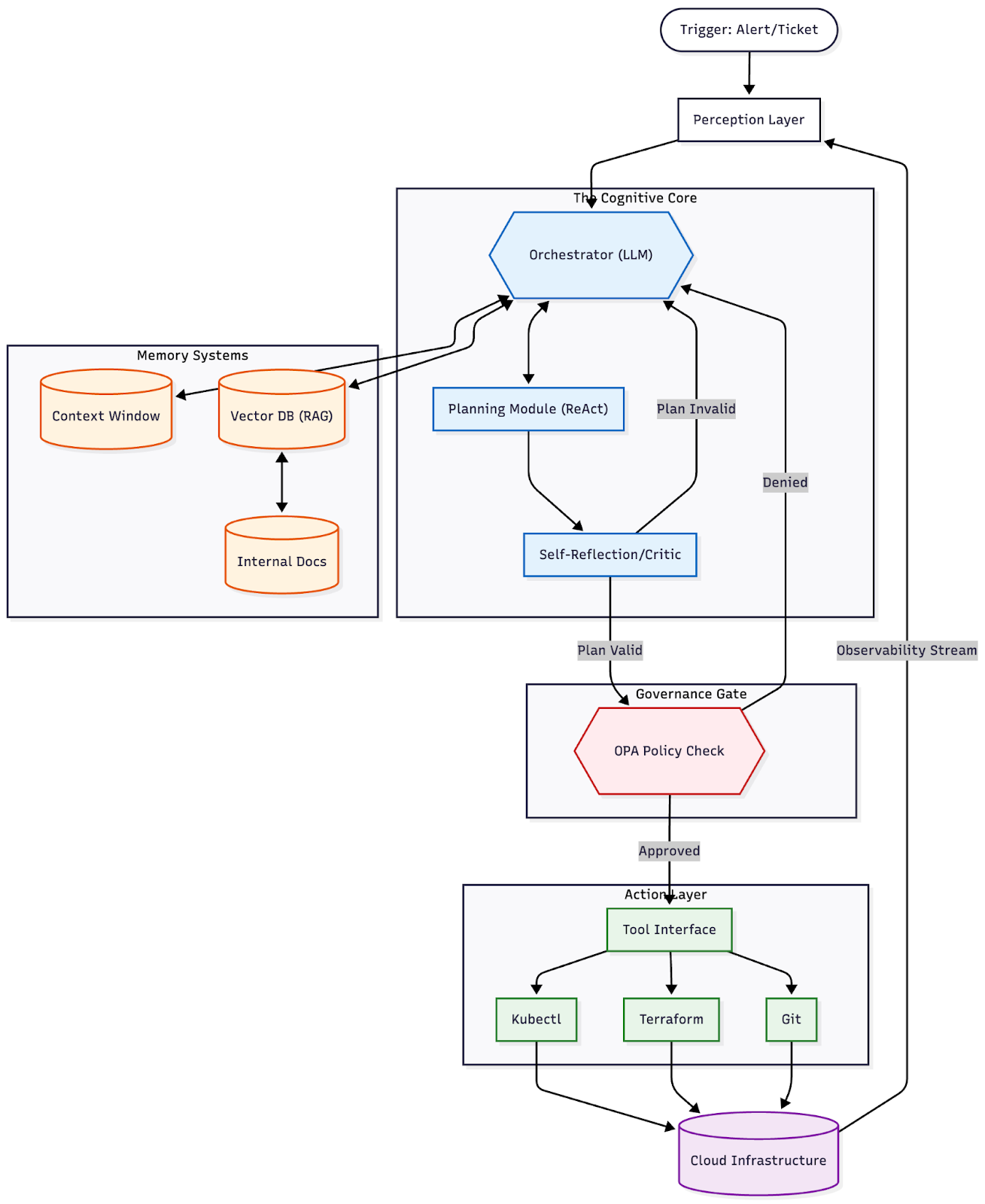

Think of it as building a synthetic brain for your operations. We define this system using the C-P-A Model (Context, Planning, Action). It transforms an LLM from a text generator into a decision engine.

- Perception (The Sensors): An agent cannot act on what it cannot see. Unlike a human reading a screen, an agent ingests high-cardinality data, logs, metrics, and traces, converting them into embeddings to detect anomalies that would slip past a regex filter.

- Memory (The Context): Scripts are amnesiacs; they run and forget. Agents have memory. Using RAG (Retrieval-Augmented Generation), they pull from a Vector Database containing your entire history of runbooks, architectural diagrams, and past incident reports. They "remember" how you fixed this last time.

- Reasoning (The Orchestrator): This is the core differentiator. Using patterns like ReAct (Reason + Act) or Chain-of-Thought, the agent breaks a complex alert—like "High Latency in US-East"—into a step-by-step investigation plan, hypothesizing root causes before touching a single server.

- Tool Use (The Actuators): Finally, the agent needs hands. Through a secure tool interface, it executes CLI commands (Kubectl, AWS CLI, Git) to effect change, verifying the output of every command just like a human engineer would.

Architectural Diagram: The Cognitive Loop

The Architect's Force Multiplier: From YAML Herder to System Designer

For us architects, Agentic AI solves our biggest pain point: The disconnect between design and reality.

Today, we draw beautiful diagrams in Miro or Visio. Then, developers write Terraform that mostly matches the diagram. Six months later, the infrastructure has drifted, the documentation is stale, and the architecture diagram is a lie.

Agentic AI acts as the "Force Multiplier" that keeps the map aligned with the territory.

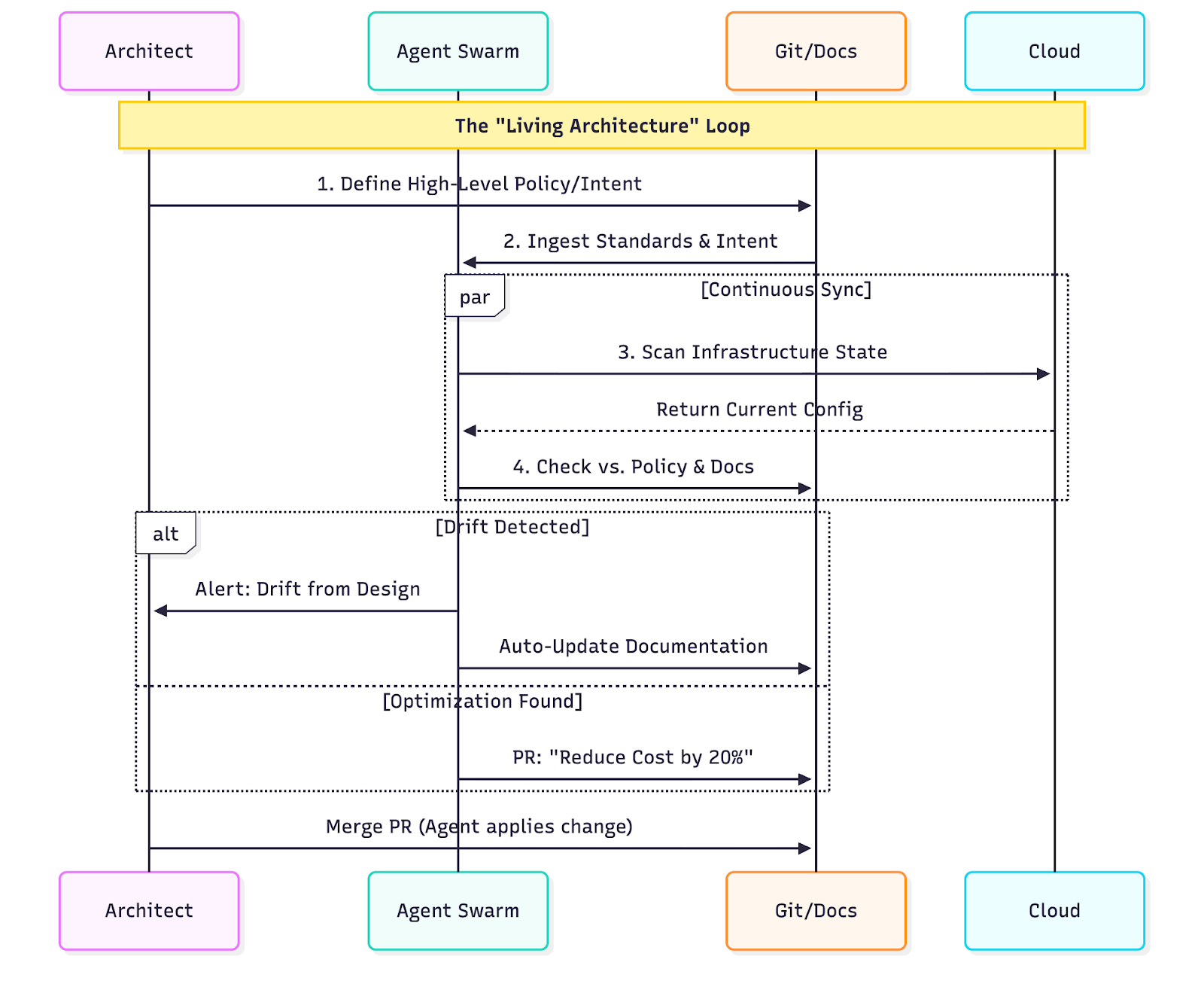

1. The "Living Architecture" Engine

Instead of manually updating diagrams, we can deploy Observer Agents. These agents continuously scan the cloud estate (via Read-Only APIs) and reverse-engineer the current state into architectural models.

- The Shift: If a developer manually spins up an RDS instance, the Agent detects the drift, updates the architectural record, and alerts the architect that the implementation has deviated from the standard.

2. Intent-Based Provisioning

We stop writing boilerplate. As an architect, I shouldn't care about the syntax of an AWS::EC2::Instance. I care about the properties of the system.

- The Workflow: I define the Intent: "I need a resilient, PCI-compliant message queue that can handle 10k TPS."

- The Agent: The agent (trained on your organization's “Golden Standards”) generates the Terraform, ensures it uses approved modules, and verifies compliance before a human ever reviews the PR.

3. Continuous Cost & Security Optimization

Humans are bad at optimization because it requires analyzing thousands of data points. Agents thrive here.

- Scenario: An "Optimizer Agent" notices a cluster is over-provisioned by 40%. It doesn't just send a report; it proposes a new Terraform plan to right-size the instance types, calculates the exact savings ($4,200/month), and opens a Pull Request for the architect to approve.

Visualizing the Architect's New Workflow

The Three Horizons of Adoption

We won't wake up tomorrow with a fully autonomous SRE team. This transition will happen in waves or "Horizons", each requiring a higher degree of trust and architectural maturity.

Horizon 1: The "Augmented Operator" (Today)

We are already living here. Agents exist as "Sidecars" to the engineer.

- The Workflow: You are the driver; the AI is the navigator. You ask, "Why is this pod crashing?" The Agent queries logs, correlates a

MemoryLimitExceedederror with a recent config change, and proposes a fix. - The Architecture: IDE extensions and CLI wrappers. The human creates the intent and approves the action.

Horizon 2: Agent Swarms & Task Autonomy (1-2 Years)

This is where things get interesting. We start giving agents specific scopes to handle repetitive tasks autonomously. We also see the rise of Agent Swarms—specialized agents collaborating.

- The Workflow: A "Security" agent identifies a CVE. It doesn't just alert you; it creates a ticket and passes it to a "Developer" agent. That agent creates a branch, bumps the version, and passes the PR to a "QA" agent to run tests. The human steps in only at the very end to click "Merge."

- The Shift: We move from Human-in-the-Loop to Human-on-the-Loop. You review the output, but you don't drive the process.

Horizon 3: The "Autonomous SRE" (3-5 Years)

The game-changer. Agents act as Tier-1 support, proactively managing system health.

- The Workflow: Production latency spikes at 2 AM. The "SRE Agent" detects the anomaly, identifies a noisy neighbor, drains the node, and cordons it. It verifies stability and posts a post-mortem to Slack. You are only paged if the agent fails to solve the problem.

- The Shift: Human-out-of-the-Loop for standard operations. You manage the policy and the goals, not the tasks.

The Architect's Challenge: Managing Probabilistic Ops

This shift introduces a terrifying concept for traditional Ops people: Probabilistic Infrastructure.

Scripts are safe because they are deterministic; run them twice, get the same result. Agents are probabilistic; they might choose a slightly different path to solve a problem based on the context. As Solution Architects, how do we sleep at night knowing an AI has root access?

We design guardrails.

- Constitutional AI (Policy-as-Code): Before any tool is executed, the agent's plan must pass through a deterministic policy engine (like Open Policy Agent). If an agent tries to execute

terraform destroyon a production database, the policy engine kills the command hard, regardless of what the LLM "thinks" is right. - Contextual Permissions: We must architect agents with distinct permission boundaries. A "Diagnosis Agent" should have extensive Read permissions but zero Write permissions. A "Remediation Agent" should have Write permissions scoped strictly to the namespace it is repairing.

- The Black Box Recorder: Every "thought" (reasoning step) and every "action" (CLI command) must be logged to a tamper-proof ledger. In a post-mortem, we need to be able to replay the agent's decision tree to understand why it made a specific choice.

Conclusion

The fear that AI will replace DevOps engineers is misplaced. It won't replace us; it will promote us.

In the future, you won't be paid to write the script that restarts the server. You will be paid to design the Cognitive Architecture that manages the fleet. You are becoming the Commander, orchestrating a team of silicon-based SREs that never sleep, never tire, and can react at the speed of light.

The future of DevOps isn't just code; it's Cognition.