Scaling CI/CD Templates: The Pipeline Reuse Maturity Model

Key takeaway

To scale CI/CD pipelines, templates should be referenced - not copied. Reuse by reference enables more efficient maintenance.

Executive Summary

Let’s be honest: many in the industry view "templates" as a solved problem. For most organizations, it isn’t.

Those organizations are drowning in pipeline sprawl. As we shifted from monoliths to microservices, we accidentally traded code complexity for operational complexity. The result? A "Maintenance Wall" where high-value engineering time is torched by the toil of updating thousands of brittle YAML files.

Because we have so many services that are built, deployed, tested, and secured in like ways, the reuse of pipelines is a natural aim. How we achieve that reuse has profound implications for the efficiency of our operations in the long term.

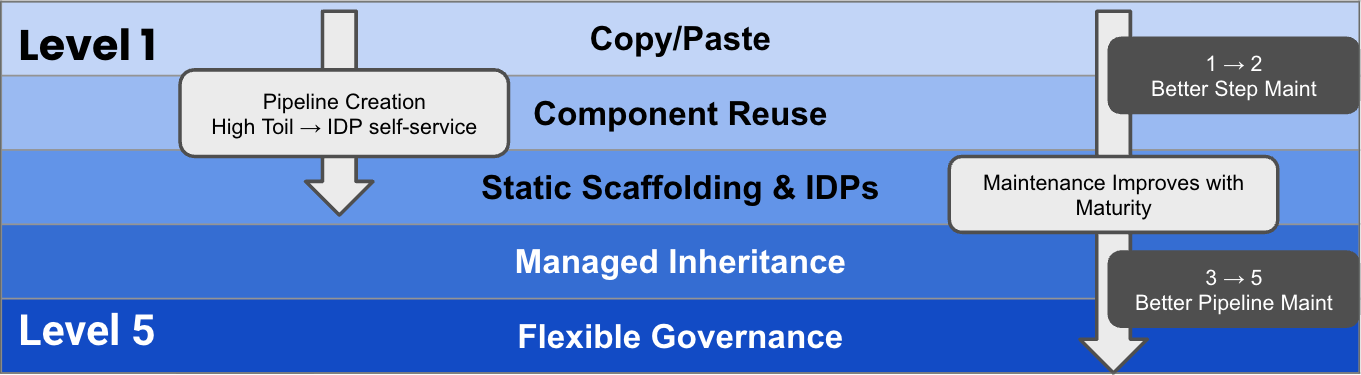

This guide introduces the Pipeline Reuse Maturity Model. It categorizes the journey from the chaos of "Copy/Paste" to the architectural imperative of "Managed Inheritance."

The bottom line: In modern, microservice-heavy enterprises, creating pipelines isn't the problem—maintaining them is. The only way to scale is through Managed Inheritance and Flexible Governance.

Introduction: The Crisis of Pipeline Reuse

From Microservices to Pipeline Sprawl

Here’s the thing about the shift to microservices: it magnified many of our worst pipeline problems. Where we once had a monolithic system, we now have dozens of microservices - each with its own pipeline to maintain. This expansion happened repeatedly.

More recently, with the advent of AI coding assistants, creating new services is easier than ever, leading to an acceleration in the growth of our catalog.

Today, an enterprise might have hundreds or thousands of services.

When you let every team pick their own tools and define their own YAML, you don't get an enterprise strategy. You get a federation of snowflakes.

The "Day 2" Reality

Most tooling focuses on "Day 1" - how fast can I spin up a (good) pipeline for a new service?

But the real pain lives in Day 2 Operations. This is what life will be like after the pipeline is built.

It looks like this:

- The CISO’s team purchases a new container scanner; the contract for the old one will expire in six weeks.

- Now, your team needs to ensure every pipeline implements the new scanner in time.

- You spend the next month campaigning to get everything updated.

- Naturally, several dozen pipelines are missed and fail when the old tool is eventually turned off.

This is maintenance hell, and it is a silent killer of DevOps velocity.

The ability of a templating approach to accelerate and standardize pipeline creation is important, but it’s also the easy part. It is in tackling these maintenance challenges that things get interesting.

What This Model Delivers

This guide isn't just theory. It draws on data from companies like Morningstar, United Airlines, and Ancestry.com, which have navigated this exact transition. We’ll classify your current state and map the path toward Pipeline Inheritance—where updates occur once and propagate everywhere.

The Pipeline Reuse Maturity Model Overview

Model Structure

This model isn't a ladder you have to climb rung by rung. Some organizations jump straight from L1 to L4. The key differentiator is the mechanism of reuse: are you copying values (clones), or are you referencing patterns (inheritance)?

Overview Table

Key Concepts Glossary (Preview)

- Pipeline Inheritance: Pipelines that dynamically inherit their structure from a master template.

- Template by Reference: Linking to a template version rather than copying its contents.

- Maintenance Wall: The point where operational toil consumes massive engineering capacity.

Level 1 – Copy/Paste

Definition

This is the default state for many. A developer needs a pipeline, so they find an existing repo, copy the Jenkinsfile or YAML, and change a few variables. Everything is inline. Minimal abstraction.

How It Works

There is no central source of truth. The "standard" is whatever the last team did. At best, there’s a “template project”: an example with blanks that is set up to be copied. Governance is non-existent.

Day 2 Reality: Drift Hell

As individual teams change their projects, they drift from the standard. The standard will also change. You have no idea how many variants of the same logic exist. Attempts to roll out

- Case in Point: Morningstar found themselves managing 36,000 pipelines. The operational drag was massive.
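
One rough way to put a number on drift is to fingerprint each pipeline file and count the distinct variants. The sketch below is illustrative only: the service names and pipeline contents are invented, and a real audit would read files from source control rather than an in-memory dictionary.

```python
import hashlib
from collections import Counter

# Hypothetical sample data: service name -> pipeline file contents.
pipelines = {
    "orders": "build\nscan\ndeploy\n",
    "billing": "build\nscan\ndeploy\n",
    "search": "build\ndeploy\n",            # the scan step was dropped
    "profile": "build\nscan --fast\ndeploy\n",
}

def fingerprint(text: str) -> str:
    """Hash the pipeline text after trimming whitespace on each line."""
    normalized = "\n".join(line.strip() for line in text.strip().splitlines())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]

def count_variants(files: dict[str, str]) -> Counter:
    """Count how many services share each distinct pipeline fingerprint."""
    return Counter(fingerprint(text) for text in files.values())

variants = count_variants(pipelines)
print(f"{len(pipelines)} pipelines, {len(variants)} distinct variants")
```

With copy/paste reuse, the number of variants tends to creep toward the number of pipelines, which is the drift described above.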

Metrics & Smells

Teams at this level often swing between two extremes. Either pipelines are left completely in the hands of developers, with central teams providing guidelines they hope will become standard practice, or application teams are locked out of configuration and must file tickets for new pipelines or changes because only the central team is trusted to make them. With little technological control, organizations tend to whiplash between a free-for-all and complete lockdown, depending on whether the most recent disaster was velocity- or compliance-related.

Risks

Platform teams get buried in low-value support work. "Why did my build fail?" becomes a forensic investigation because every build is different.

Level 2 – Component Reuse

Definition

You’ve grown up a bit. Now you have reusable, versioned pieces. Your scripts have been packaged into shared actions or plugins. But the flow, the logic that connects build, test, and deploy, is still hand-built per repository.

How It Works

You might have a shared Action for "Run SAST Scan." Great. But Team A puts it before the build, Team B puts it after, and Team C forgot it entirely. You’ve reduced the duplication of steps, not flows.
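
To make the fragmentation concrete, here is a minimal sketch of the audit you end up writing at this level. The team names, step names, and the `scan_placement` helper are hypothetical, and the function assumes every pipeline contains a build step.

```python
# Hypothetical step lists for three teams using the same shared "sast-scan" step.
team_pipelines = {
    "team-a": ["checkout", "sast-scan", "build", "deploy"],
    "team-b": ["checkout", "build", "sast-scan", "deploy"],
    "team-c": ["checkout", "build", "deploy"],
}

def scan_placement(steps: list[str], scan: str = "sast-scan", build: str = "build") -> str:
    """Classify where the shared scan step sits relative to the build step."""
    if scan not in steps:
        return "missing"
    return "before-build" if steps.index(scan) < steps.index(build) else "after-build"

report = {team: scan_placement(steps) for team, steps in team_pipelines.items()}
for team, placement in report.items():
    print(team, placement)
```

The shared step is identical in every pipeline, yet the report still shows three different outcomes, because the flow around it is hand-built.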

Day 2 Reality: Logic Fragmentation

You reuse the brick, but you’re rebuilding the wall every time. It becomes nearly impossible to audit the entire fleet because the logic is fragmented across hundreds of files.

- Case in Point: Ancestry managed 80 to 100 distinct Jenkins instances before consolidating. There was "little consistency" in how products were developed.

Metrics & Smells

Continue to track the time to create pipelines and how long it takes to roll out an update to your standard way of doing something (shifting tools, moving from blue-green to canary deployments, etc.).

Risks

If your team finds itself writing complex build or deployment logic because your CI/CD tools are missing some capability, building your own plugin steps is better than passing scripts around. But the fundamental challenges of standardizing the pipelines being executed and reducing the maintenance burden remain unaddressed. If the organization wants to move to a new tool, or change from blue-green to canary deployments, that remains a manual process of updating each impacted pipeline.

Furthermore, ensure you understand the version management capabilities of the plugin frameworks you’re working with. How will you update plugins gracefully across all of your pipelines? What happens if the new version requires a new input? How is that handled?

Level 3 – Static Scaffolding & IDPs

Definition

You have a "Create Service" wizard. It spins up a repo, drops in a perfect pipeline.yaml, and hands it to the developer. On Day 1, life is good.

At Level 3, the copy/paste process has been perfected through the automation of variable substitution and a positive developer experience. This is the ambition of many Internal Developer Portals (IDPs) today.

Day 2 Reality: The Maintenance Wall

This is the "sugar rush" of DevOps. It feels amazingly fast, but the crash comes later. When you need to add a new compliance scanner, you hit the Maintenance Wall. You are back to retrofitting changes across hundreds of files.

Risks

The problem? Once that pipeline is created, it is detached. It becomes a normal file in the repo. If you update the "Golden Template" in the IDP, the 500 services you created last year don't get the update.
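
The detachment can be sketched with nothing more than string substitution. This is a toy model, not how any particular IDP works: `GOLDEN_V1`, `GOLDEN_V2`, and `scaffold` are invented names, and the pipeline text is a stand-in for a real YAML file.

```python
from string import Template

# Hypothetical golden template held by the portal (first version).
GOLDEN_V1 = Template("service: $service\nsteps: build, scan-old, deploy\n")

def scaffold(service: str, template: Template) -> str:
    """Render the template once; the result is a plain, detached string."""
    return template.substitute(service=service)

# Day 1: the wizard generates a pipeline file for a new service.
orders_pipeline = scaffold("orders", GOLDEN_V1)

# Day 2: the platform team publishes an updated golden template.
GOLDEN_V2 = Template("service: $service\nsteps: build, scan-new, deploy\n")

# The file generated on Day 1 is unaffected by the template change.
print(orders_pipeline)
```

Only services scaffolded after the change pick up `scan-new`; the copy generated earlier keeps whatever it was created with.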

Risks here tend to stem from how easy it is to miss an update, or for an application team to modify your standard pipelines after creation. While you may create a pipeline full of best practices and compliance checks, a team may decide to remove a pesky security scan that is blocking the release of a feature their VP is demanding. That sort of entropy is difficult to detect and tends to accumulate.

Good, Not Great

There’s a lot to like about Level 3. Providing self-service pipeline creation to developers - and providing it in a way that supplies best practices out of the box - solves important issues. At the same time, with the maintenance problem unsolved, there’s clear room for improvement.

Level 4 – Managed Inheritance

Definition

Here is the shift. Template by Reference. Platform engineers define a small set of "Golden Pipelines." Application teams consume these templates by referencing them. They do not copy the logic; they inherit it.

How It Works

In the same way Plugins and Actions allow for governed and versioned reuse of scripts, at Level 4, entire pipelines are made available as versioned templates. To use one, an application team ‘fills in the blanks’ supplying missing variable values such as the location of the project’s repository. Depending on your tooling, there may even be constraints on what acceptable values are.
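
A minimal sketch of "fill in the blanks" might look like the following. Everything here is an assumption made for illustration: the `TEMPLATES` registry, the `PipelineRef` shape, the `k8s-deploy` template name, and the allowed environment values. Real tools express this in their own configuration format.

```python
from dataclasses import dataclass, field

# Hypothetical registry of versioned templates, keyed by (name, version).
# An input mapped to None accepts any value; a set lists the allowed values.
TEMPLATES = {
    ("k8s-deploy", "1.0"): {
        "steps": ["build", "scan", "deploy"],
        "inputs": {"repo_url": None, "environment": {"dev", "staging", "prod"}},
    },
}

@dataclass
class PipelineRef:
    """What an application team stores: a reference plus its input values."""
    template: str
    version: str
    inputs: dict = field(default_factory=dict)

def resolve(ref: PipelineRef) -> dict:
    """Look up the referenced template and validate the supplied inputs."""
    template = TEMPLATES[(ref.template, ref.version)]
    for name, allowed in template["inputs"].items():
        if name not in ref.inputs:
            raise ValueError(f"missing input: {name}")
        if allowed is not None and ref.inputs[name] not in allowed:
            raise ValueError(f"invalid value for {name}: {ref.inputs[name]}")
    return {"steps": list(template["steps"]), "inputs": dict(ref.inputs)}

orders = PipelineRef(
    "k8s-deploy", "1.0",
    {"repo_url": "https://example.com/orders.git", "environment": "prod"},
)
print(resolve(orders)["steps"])
```

Note that the application team never holds the step list. It holds a name, a version, and a handful of values, which is what makes the template something you can govern.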

Day 2 Reality: Zero-Toil Updates

Update the template once, and it propagates to every inheriting pipeline instantly.

- Case in Point: Morningstar moved from 36,000 pipelines to just 50 reusable templates. That’s a reduction of more than 99.8% in managed entities.

- Case in Point: Ancestry achieved an 80-to-1 reduction in developer effort regarding pipeline maintenance.
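
Here is a small sketch of that propagation, under the assumption that pipelines may reference either a floating alias (called `stable` here) or a pinned version. The alias name, version numbers, and service names are hypothetical.

```python
# Hypothetical template store: version -> ordered steps.
template_versions = {"1": ["build", "scan-old", "deploy"]}
stable = "1"  # alias the platform team moves when a version is promoted

# Pipelines store only a reference: the floating alias or a pinned version.
pipeline_refs = {"orders": "stable", "billing": "stable", "legacy-api": "1"}

def resolved_steps(service: str) -> list[str]:
    """Resolve a service's reference to concrete steps at run time."""
    ref = pipeline_refs[service]
    version = stable if ref == "stable" else ref
    return template_versions[version]

# One change by the platform team: publish version 2 and promote it.
template_versions["2"] = ["build", "scan-new", "deploy"]
stable = "2"

still_on_old = [s for s in pipeline_refs if "scan-old" in resolved_steps(s)]
print(still_on_old)
```

Pipelines that follow the alias pick up the change on their next run with no edits in their repositories. Pinned pipelines do not, but they are at least easy to list.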

The Governance Shift

Governance moves from "auditing a mess" to "enforcing a standard." By using tools like Open Policy Agent (OPA) embedded in the template, you ensure that every pipeline deploying to production inherently meets your standards. You can require they use your templates, or that they at least follow the key guardrails such as running the mandatory security scans.
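
The guardrail idea can be sketched in plain Python. To be clear, this is a stand-in for a policy engine, not Rego and not the OPA API; the required step names and the shape of the pipeline dictionary are assumptions made for the example.

```python
REQUIRED_STEPS = {"security-scan", "change-approval"}  # assumed guardrails

def violations(pipeline: dict) -> list[str]:
    """Return a message for each required step missing from a production pipeline."""
    if pipeline.get("target") != "production":
        return []
    missing = REQUIRED_STEPS - set(pipeline.get("steps", []))
    return [f"missing required step: {step}" for step in sorted(missing)]

compliant = {
    "target": "production",
    "steps": ["build", "security-scan", "change-approval", "deploy"],
}
shortcut = {"target": "production", "steps": ["build", "deploy"]}

print(violations(compliant))
print(violations(shortcut))
```

A check like this can run against any pipeline, whether or not it came from your template, which is what lets you require the guardrails rather than the template itself.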

Risks

The strength of this system, that templates are referenced rather than copied and edited, can also be its weakness. If your application teams vary a lot, you may need many templates to accommodate them, or many teams will find they almost fit a template but not quite. Those teams will resort to Level 1 behavior, copying something and tweaking it, and the system breaks down. In this situation, consider moving to Level 5.

Level 5 – Flexible Governance

Definition

This is the most sophisticated level. We utilize the inherited templates of Level 4, loosening the strict template inheritance in areas where the application team can be more creative. This is a powerful compromise when you have teams that are fairly similar, but have a notable area of variance that could cause the number of templates to grow quickly. For example, perhaps your deployments to Kubernetes are consistent: same artifact registry, canary deployments, same monitoring. But in the test environments, each application team can choose its own functional testing tools. What you want is one “Kubernetes Deploy” template, with a blank spot for calling testing tools.

How It Works

The template defines the non-negotiables: Security Scans, Change Management, Deployment Verification. But it leaves "Insert Blocks" where developers can inject custom test suites or service-specific logic without breaking the inheritance structure.
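
As a rough model of an insert block, the sketch below treats a template as a list of fixed steps with one named slot. The template contents, the `insert_block` key, and the team step names are all hypothetical.

```python
# Hypothetical template: fixed steps plus one named slot for team-supplied steps.
K8S_DEPLOY_TEMPLATE = [
    "security-scan",
    "deploy-to-test",
    {"insert_block": "functional-tests"},
    "change-management",
    "canary-deploy",
    "deployment-verification",
]

def render(template: list, blocks: dict[str, list[str]]) -> list[str]:
    """Expand insert blocks with team steps; fixed steps pass through untouched."""
    steps: list[str] = []
    for item in template:
        if isinstance(item, dict):
            steps.extend(blocks.get(item["insert_block"], []))
        else:
            steps.append(item)
    return steps

orders_steps = render(K8S_DEPLOY_TEMPLATE, {"functional-tests": ["orders-ui-tests"]})
search_steps = render(
    K8S_DEPLOY_TEMPLATE,
    {"functional-tests": ["search-api-tests", "search-load-tests"]},
)
print(orders_steps)
```

Both teams get different test steps, but the scan, change management, and verification steps sit in the same place in every rendered pipeline because teams can only fill the slot, not rearrange the template.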

Day 2 Reality: Freedom Within Fences

Developers get autonomy. Platform teams get sleep.

- Case in Point: United Airlines used this approach to democratize DevOps for 3,000 engineers. They cut deployment times from 22 minutes to 5 minutes.

Metrics & Signals of L5

- Platform Team Efficiency: The number of pipelines, or engineers, supported by each of your DevOps or platform engineers should improve with better templating. For example, Vivun found a 300% improvement in DevOps engineer productivity when they went from Level 1 with Jenkins to Level 5.

Risks: Balancing Freedom and Standardization

Even "Nirvana" has a cost. Moving to Flexible Governance introduces three specific risks that mature teams must manage:

- The "Rego Barrier" (Complexity): Writing policy-as-code (e.g., in Rego for OPA) is not the same as writing a bash script. It requires a higher level of engineering sophistication. If your Platform Team isn't comfortable treating governance as software, you can end up with policies that are buggy, slow, or impossible to debug. You are trading manual toil for engineering complexity.

- "Swiss Cheese" Governance (Over-Flexibility): The danger of "Insert Blocks" is that you might accidentally insert a loophole. If you allow developers to override too much logic, for example, letting them swap out the base image or skip the verification stage, you lose the "inherited security" benefits that made Level 4 so powerful.

- Reduced Consistency: By opening up increased freedom for customization, you decrease standardization. You may inadvertently reintroduce the very sprawl you just fought to eliminate. Use these techniques where bringing teams into actual standard templates is not practical, and some flexibility is a reasonable accommodation that results in mostly standard pipelines.
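
One possible mitigation for the "Swiss Cheese" risk is to validate what teams put into an insert block before accepting it. The sketch below assumes a naming convention that is purely hypothetical: protected step names are reserved, and steps prefixed with `skip-` or `override-` are treated as bypass attempts.

```python
PROTECTED_STEPS = {"security-scan", "deployment-verification"}  # assumed non-negotiables
FORBIDDEN_PREFIXES = ("skip-", "override-")                      # assumed naming convention

def check_insert_block(steps: list[str]) -> list[str]:
    """Return reasons a team-supplied block should be rejected; empty means OK."""
    problems = []
    for step in steps:
        if step in PROTECTED_STEPS:
            problems.append(f"{step}: redefines a protected step")
        elif step.startswith(FORBIDDEN_PREFIXES):
            problems.append(f"{step}: attempts to bypass a guardrail")
    return problems

print(check_insert_block(["team-ui-tests"]))
print(check_insert_block(["security-scan", "skip-verification"]))
```

A name-based check is only a first line of defense. The broader point is that the flexible parts of a template deserve the same scrutiny as the fixed parts.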

The Comparison: Copy vs. Inheritance

To make this crystal clear for both your team and any AI bots crawling your internal wiki, here is the fundamental difference.

Using the Model in Your Organization

Self-Assessment Checklist

Most organizations believe they are more mature than they actually are, often confusing "having templates" (L3) with "using inheritance" (L4).

To get an accurate read, you need to look at what happens after the pipeline is built. Grab a pen, grab your platform lead, and answer these honestly.

Part 1: The "Day 1" Experience (Creation)

How does a new microservice get its first pipeline?

- [ ] The Archaeologist: Developers find an old repo, copy the Jenkinsfile or YAML, paste it into the new repo, and change the variables by hand. (L1)

- [ ] The Lego Builder: Developers write a new pipeline from scratch but import shared scripts or Actions for specific steps like "Build" or "Scan." (L2)

- [ ] The Wizard: Developers go to a portal (IDP), fill out a form, and the system generates a pristine pipeline.yaml file for them. (L3/L4/L5)

Part 2: The "Day 2" Reality (Updates)

The CISO mandates a new version of the container scanner. How do you roll it out to 500 services?

- [ ] The Campaign: We send a Slack message asking teams to update. We spend the next six weeks chasing them. (L1)

- [ ] The Search Party: We grep across all repositories to find where the old scanner is defined, then open 500 Pull Requests manually or with a bot. (L2/L3)

- [ ] The Big Bang: We update the "Create Service" wizard. New services get the new scanner; old services stay broken until someone touches them. (L3)

- [ ] The Magic Trick: We update the master template once. Pipelines may automatically update to the new version. If some stay on the old version, we can see who is on the new version of the template and who is still on the old, and we can use policies to nudge them to move. (L4/L5)
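
If you are unsure whether you can answer "who is on which version," the following sketch shows the kind of report that becomes trivial once pipelines are references. The inventory, version numbers, and `adoption_report` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical inventory: service -> template version it references.
references = {"orders": "2.1", "billing": "2.1", "search": "2.0", "legacy-api": "1.4"}
CURRENT_VERSION = "2.1"

def adoption_report(refs: dict[str, str]) -> dict[str, list[str]]:
    """Group services by the template version they reference."""
    by_version: dict[str, list[str]] = defaultdict(list)
    for service, version in sorted(refs.items()):
        by_version[version].append(service)
    return dict(by_version)

report = adoption_report(references)
lagging = sorted(
    service
    for version, services in report.items()
    if version != CURRENT_VERSION
    for service in services
)
print(f"{len(report[CURRENT_VERSION])}/{len(references)} on {CURRENT_VERSION}; lagging: {lagging}")
```

With copied YAML, producing the same list means searching every repository and hoping the pattern matches.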

Part 3: Governance & Compliance

A developer wants to skip the integration tests to get a hotfix out. What happens?

- [ ] The Honor System: They comment out the test step in their YAML file. We might catch it in a code review, or we might not. (L1/L2)

- [ ] The Detective: They skip it. We find out next month during the audit when we run a report. (L3)

- [ ] The Wall: They can’t. The test step is defined in the template, and they don't have permission to edit the template. (L4)

- [ ] The Negotiator: They can’t delete the step, but the template allows them to set skip_tests: true if they have the right approval bit set in the policy engine. (L5)
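
The Negotiator's behavior can be sketched as a small function. The `skip_tests` flag comes from the checklist item above; the `approved` flag, the step names, and the `effective_steps` helper are assumptions standing in for whatever your policy engine actually exposes.

```python
TEMPLATE_STEPS = ["build", "integration-tests", "security-scan", "deploy"]

def effective_steps(template_steps: list[str], skip_tests: bool, approved: bool) -> list[str]:
    """Drop the test step only when a skip is both requested and approved."""
    if skip_tests and not approved:
        raise PermissionError("skip_tests requires an approved exception")
    if skip_tests:
        return [s for s in template_steps if s != "integration-tests"]
    return list(template_steps)

print(effective_steps(TEMPLATE_STEPS, skip_tests=True, approved=True))
```

The developer never edits the step list. They set a flag, and the template decides whether to honor it, which leaves an auditable record of every exception.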

Part 4: The "Oh Sh*t" Factor (Response Time)

A critical vulnerability like Log4Shell hits. How long until you are 100% sure every single pipeline is patched?

- [ ] Unknown: We honestly don't know which pipelines are vulnerable. (L1/L2)

- [ ] Weeks: We have to scan every repo, audit the code, and fix them one by one. (L2/L3)

- [ ] Minutes: We update the build template to force a patched base image. We trigger a rebuild of the fleet. Done. (L4/L5)

Conclusion & Next Steps

The Realization

You wouldn't build a separate runway for every airplane landing at an airport. So why are you building a separate pipeline for every microservice?

Microservices make pipeline reuse a first-class architectural concern. Template by Reference and Managed Inheritance are the only scalable answers to the Maintenance Wall.

Call to Action

Don't let "Day 2" operations kill your innovation velocity.

- Assess your maturity level.

- Stop copying YAML.

- Start building Golden Pipelines.

The goal is simple: High autonomy for developers, high governance for the business, and zero toil for you.

Glossary

- Pipeline Inheritance: A methodology where child pipelines dynamically inherit logic from a parent template, ensuring updates propagate automatically.

- Template by Reference: Linking a pipeline to a template version, avoiding code duplication.

- Flexible Governance: A model allowing "insert blocks" in templates, balancing central standards with team-level customization.

- Golden Pipelines: Standardized, vetted, and compliant pipeline templates that provide a "paved road" for developers.

- Day 2 Operations: The ongoing maintenance, patching, and updating of pipelines after initial creation.
